What Is Web Scraping: The Ultimate Beginner’s Guide

Learn the basics of web scraping with this comprehensive overview.

Web scraping is a very powerful tool for collecting data on a large scale and extracting valuable insights from it, whether for personal or business use. This guide will give you a comprehensive overview of what web scraping is, how it works, and what you can do with it. Let’s get started!

What Is Web Scraping – the Definition

Web scraping refers to the process of collecting data from the web. It’s usually performed using automated tools – web scraping software or custom-built scripts.

Web scraping goes by various names. It can also be called web harvesting, web data extraction, screen scraping, or data mining. There are some subtle differences between these terms, but they’re used more or less interchangeably.

Why Scrape Data from the Web?

You may wonder – what’s the point of scraping the web? Well, it creates a lot of value.

For one, you can use data scraping to greatly speed up tasks. Let’s say you want to collect reviews from multiple websites like Amazon and Google to learn about a product. With web scraping, it takes minutes; manually, you’d spend hours or even days.

Web scraping also helps to automate repetitive work. During Covid-19 lockdowns, it was often very hard to order food online because all the delivery slots were taken. Instead of refreshing the web page manually, you could build a web scraper to do it for you and notify you once a slot opens.

Web scraping also has powerful commercial uses. Some companies use it to research the market by scraping the product and pricing information of competitors. Others aggregate data from multiple sources – for example, flight companies – to present great deals. Still others scrape various public sources like YellowPages and Crunchbase to find business leads.

The most frequent uses of web scraping in business explained.

How Web Scraping Works

Web scraping involves multiple steps done in succession:

1. Identify your target web pages. For example, you may want to scrape all products in a category of an e-commerce store. You can do it by hand or build something called a web crawler to find relevant URLs.

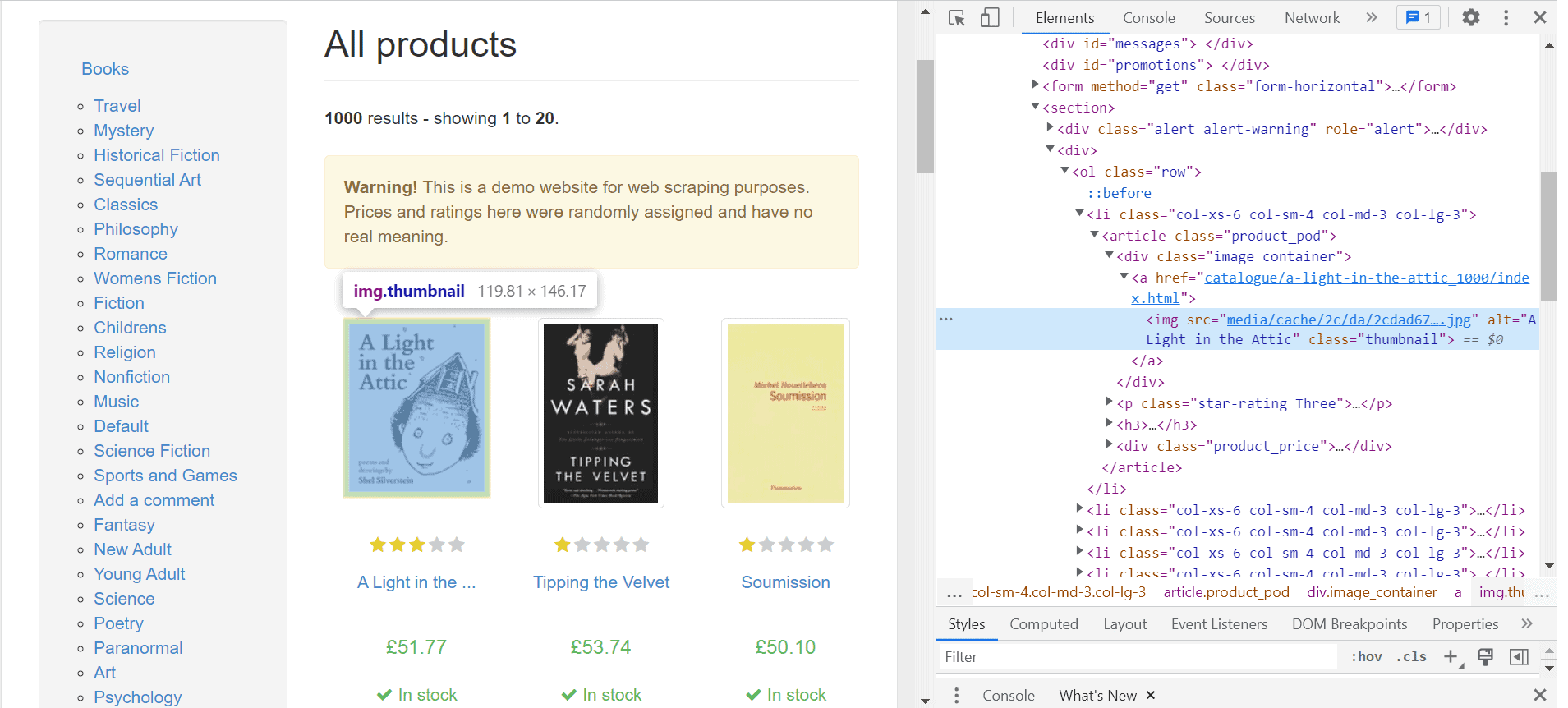

2. Download their HTML code. Every webpage is built using HTML; you can see how it looks by right-clicking the page in your web browser and selecting Inspect.

3. Extract the data points you want. HTML is messy and has unnecessary information, so you’ll need to clean it up. This process is called data parsing. The end result is structured data in a .json or .csv file, or another readable format.

4. Adjust your web scraper as needed. Websites tend to change often, and you might find more efficient ways to do things.
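
The steps above can be sketched in a few lines of Python. This is only a minimal illustration using the standard library: the HTML snippet, the `product`, `name`, and `price` class names, and the `ProductParser` helper are all made up for the example, and a real scraper would download the HTML with an HTTP request instead of reading it from a string.

```python
import json
from html.parser import HTMLParser

# Hypothetical HTML standing in for a downloaded category page (step 2).
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">31.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the text of <span class="name"> and <span class="price"> elements."""

    def __init__(self):
        super().__init__()
        self.products = []   # structured results
        self._field = None   # field currently being read, if any

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self.products.append({})  # start a new record
        elif tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        # Only keep text that sits inside one of the spans we care about.
        if self._field and self.products:
            self.products[-1][self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None


def parse_products(html):
    """Step 3: turn raw HTML into a list of dictionaries."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


products = parse_products(SAMPLE_HTML)
print(json.dumps(products, indent=2))  # structured output, ready to save as .json
```

If the website changes its markup (step 4), only the class names checked in `handle_starttag` would need adjusting in this sketch.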

There are many tools to facilitate the data scraping process or offload some of the work from you. Ready-made web scrapers let you avoid building your own; proxies can help you circumvent blocks; and if you want, you can get scraping services to do the whole job for you.

Is Web Scraping Legal?

Web scraping isn’t always welcome, and sometimes it isn’t ethical either. Scrapers often ignore the website’s guidelines (ToS and robots.txt), bring down its servers with too many requests, or even appropriate the data they scrape to launch a competing service. It’s no wonder many websites are keen on blocking any crawler or scraper in sight (except for, of course, search engines).

Still, web scraping as such is legal, with some limitations. Over the years, there have been a number of landmark cases. We’re no lawyers, but it has been established that scraping a website is okay as long as the information is publicly available and doesn’t involve copyrighted or personal information.

Since the question of web scraping isn’t always straightforward – each use case is considered individually – it’s wise to seek legal advice.

Web Scraping vs API

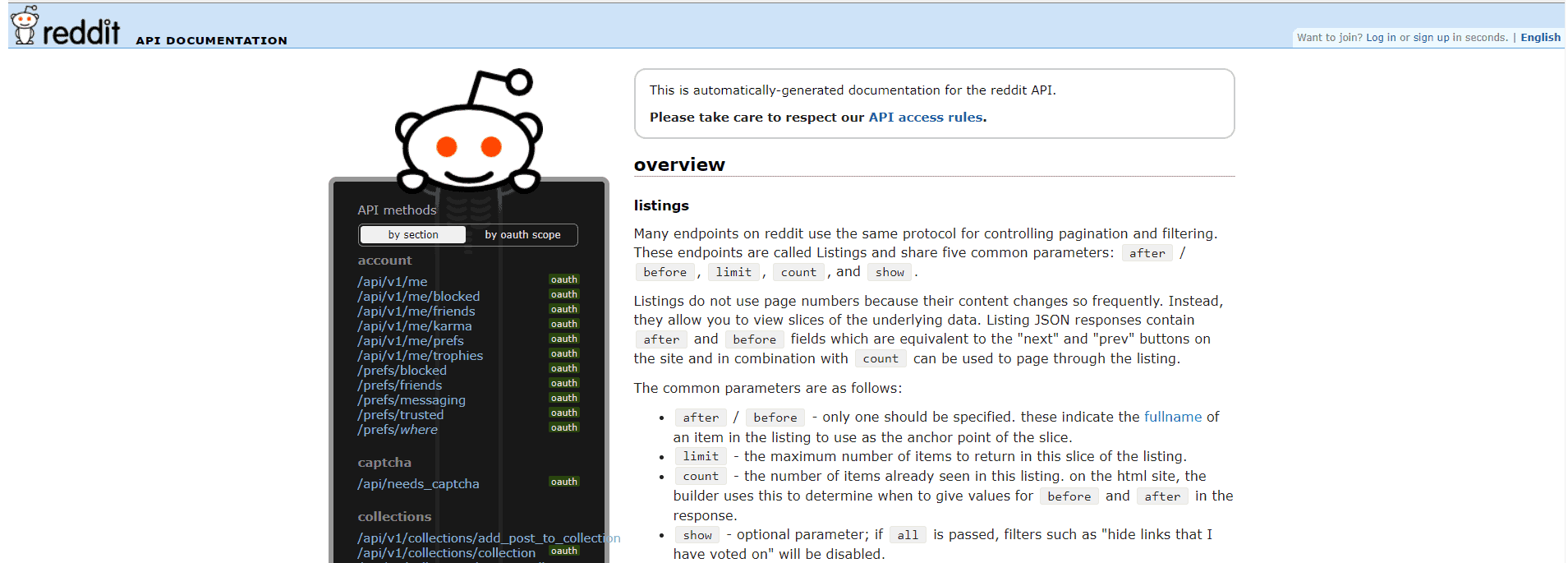

Web scraping is not the only method for getting data from websites. In fact, it’s not even the default one. The preferred approach is using an API.

An API, or application programming interface, provides a method to interact with a certain website or app programmatically. Websites like reddit.com have APIs that allow anyone to download their contents.

However, APIs have problems. First, not all websites offer them. Second, the data an API gives can often be stale. Third, you often have to deal with limits on what data you can collect and how often. And finally, for some reason APIs tend to change or break more often than even web scraping scripts.

So, the main difference between web scraping and an API is that the former gives better access to data: whatever you can see in your browser, you can get. However, web scraping often happens without websites knowing about it. And when they do find out, they’re not very happy about it.

Choosing the Best Web Scraping Tool for the Job

There’s no shortage of web scraping tools in the market. If you want, you can even scrape with Microsoft Excel. Should you, though? Probably not. Web scraping tools can be divided into three categories: 1) custom-built, 2) ready-made, and 3) web scraping APIs.

One way to go about scraping is to build a scraper yourself. There are relevant libraries and frameworks in various programming languages, but web scraping with Python and Node.js are the most popular approaches. Here’s why:

- Python is very easy to read, and you don’t need to compile code. It has many high-performing web scraping libraries and other tools that cater to any web scraping project you can think of. Python is used by both beginners and advanced users and has strong community support.

- Node.js is a runtime that lets you run JavaScript outside the browser. It’s asynchronous by default, so it can handle concurrent requests. That means it works best in situations when you need to scrape multiple pages. Node.js is simple to deploy and has great-performing tools for dynamic scraping.

If you’re already familiar with, let’s say, PHP, you can use the skills for web scraping as well.

If you lack programming skills or time, you can go with ready-made web scraping tools. No-code web scrapers have everything configured for you and are wrapped in a nice user interface. They let you scrape with little or no programming knowledge. You can also try pre-collected datasets – collections of records that are organized (often arranged in a table) and prepared for further analysis.

The middle ground between the first two categories is web scraping APIs. They have a steeper learning curve than visual scrapers but are more extensible. In essence, these APIs handle proxies and the web scraping logic, so that you can extract data by making a simple API call to the provider’s infrastructure.

For those looking for additional support, the growing popularity of ChatGPT has made it a helpful tool in web scraping. While not perfect, it can write simple code and explain the logic behind it. It’s great for beginners learning the ropes or experienced scrapers looking to refine their skills.

Web Scraping Challenges

Web scraping isn’t easy; some websites do their best to ensure you can’t catch a break. Here are some of the obstacles you might encounter:

Modern websites use request throttling to avoid overloading their servers and to prevent unnecessary connection interruptions. The website controls how often you can send requests within a specific time window. When you reach the limit, your web scraper won’t be able to perform any further actions. If you ignore the limit, the website might block your IP address.
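
A throttled request commonly comes back with the HTTP status 429 (Too Many Requests), sometimes with a `Retry-After` header telling you how long to wait. Here’s a rough sketch of how a scraper could decide on the waiting time. The `backoff_delay` function and its default values are assumptions made for this example, and it only understands `Retry-After` values given in seconds.

```python
def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0):
    """Return the number of seconds to wait before retrying a throttled request.

    attempt      0 for the first retry, 1 for the second, and so on
    retry_after  value of the Retry-After header, if the server sent one
    """
    if retry_after is not None:
        try:
            # Prefer the server's own instruction when it is a number of seconds.
            return max(0.0, float(retry_after))
        except ValueError:
            pass  # the header held an HTTP date; fall back to exponential backoff
    # Exponential backoff: 1 s, 2 s, 4 s, ... but never more than `cap` seconds.
    return min(base * 2 ** attempt, cap)
```

In a retry loop, you would pass the result to `time.sleep()` whenever the response status is 429, and give up after a fixed number of attempts.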

Another challenge that can greatly hinder your web scraping efforts is CAPTCHAs, tests designed to tell humans and bots apart. They can be triggered because you’re 1) making too many requests in a short time, 2) using low-quality proxies, or 3) not covering your web scraper’s fingerprint properly. Some CAPTCHAs are hard-coded into the HTML markup and appear at certain points like registration. Until you pass the test, your scraper is out of work.

The harshest way a website can punish you for scraping is by blocking your IP address. However, there’s a problem with IP bans: the website’s owner may end up banning a whole range of IPs (for example, the 256 addresses of a /24 subnet), so all the people who share the same subnet will lose access. That’s why websites are reluctant to use this method.
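
To see why a range ban hits so many users, here’s a tiny sketch with Python’s built-in `ipaddress` module. It assumes the banned range is a /24 subnet, which covers 256 addresses; the range itself is one reserved for documentation and only serves as a stand-in.

```python
import ipaddress

# 203.0.113.0/24 is reserved for documentation; it stands in for a banned range.
subnet = ipaddress.ip_network("203.0.113.0/24")

# Total number of addresses covered by the ban.
print(subnet.num_addresses)

# Any address inside the range is affected, whether or not it ever ran a scraper.
print(ipaddress.ip_address("203.0.113.42") in subnet)
```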

Learn how to deal with six common web scraping obstacles.

Web Scraping Best Practices

Here are some web scraping best practices to help your project succeed.

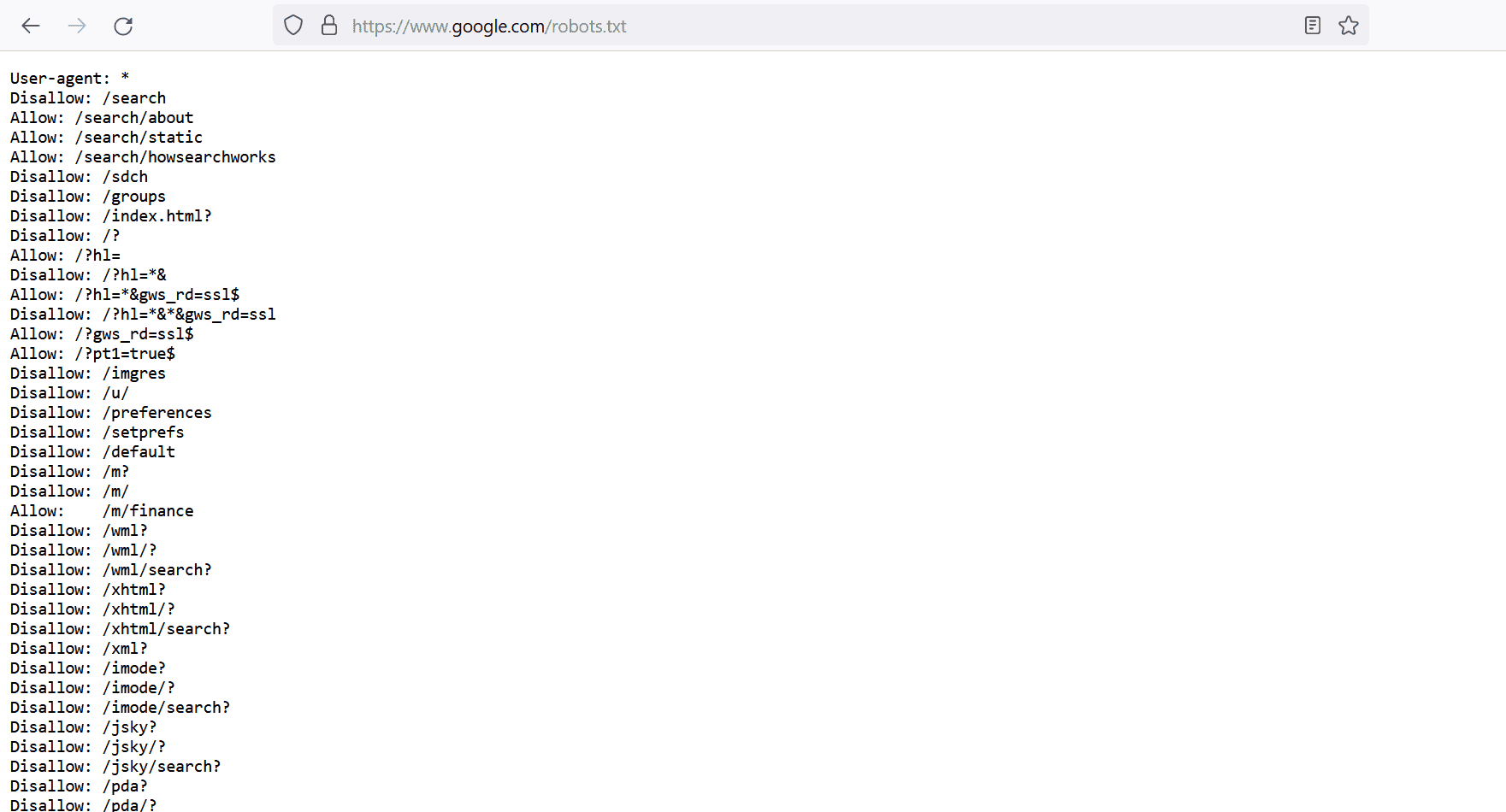

First and foremost, respect the website you’re scraping. You should read data privacy regulations and respect the website’s terms of service. Also, most websites have a robots.txt file – it gives instructions on which content a crawler can access and what it should avoid.
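
Checking robots.txt doesn’t have to be manual. Below is a minimal sketch using `urllib.robotparser` from Python’s standard library. The robots.txt content, the `my-scraper` user agent, and the `is_allowed` helper are made up for illustration; a real file lives at the root of the website you’re scraping. Note that `Crawl-delay` is a non-standard directive that only some sites use.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, parsed from a string so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="my-scraper"):
    """Return True if robots.txt permits this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://example.com/products/kettle"))
print(is_allowed("https://example.com/checkout/cart"))
print(parser.crawl_delay("my-scraper"))  # requested pause between requests, if any
```

For a live site, you could instead call `parser.set_url(...)` with the robots.txt address followed by `parser.read()` to download and parse the file.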

Websites can track your actions. If you send too many requests, your actions will be red-flagged. So, you should act naturally by keeping random intervals between connection requests and reducing the crawling rate. And if you don’t want to burden both the website and your web scraper, don’t collect data during peak hours.
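
Random intervals are easy to add. Here’s one possible sketch: the `polite_pause` name and the 2 to 6 second range are arbitrary choices for the example, not recommended values for any particular website.

```python
import random
import time


def polite_pause(min_seconds=2.0, max_seconds=6.0, sleep=time.sleep):
    """Sleep for a random interval and return the number of seconds waited."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)  # `sleep` can be swapped out, e.g. to skip real waiting in tests
    return delay
```

You would call `polite_pause()` between consecutive requests so that they don’t arrive at a fixed, machine-like rhythm.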

Another critical step is to take care of your digital identity. Websites use anti-scraping technologies like CAPTCHAs, IP blocks, and request throttling. To avoid these and other obstacles, rotate your proxies and your user-agent. The former hides your location, and the latter makes your scraper look like a regular browser. So, every time you connect, you’ll have a “new” identity.
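
Here’s a rough idea of what rotation can look like in Python. The proxy addresses and user-agent strings below are placeholders rather than working values, and `next_identity` is a hypothetical helper written for this example.

```python
import itertools
import random

# Placeholder values: replace them with proxies from your provider
# and user-agent strings of real browsers.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) ExampleBrowser/1.0",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0) ExampleBrowser/1.0",
]

_proxy_cycle = itertools.cycle(PROXIES)  # round-robin over the proxy list


def next_identity():
    """Return request settings with the next proxy and a randomly chosen user-agent."""
    proxy = next(_proxy_cycle)
    return {
        "proxies": {"http": proxy, "https": proxy},
        "headers": {"User-Agent": random.choice(USER_AGENTS)},
    }
```

The returned dictionary uses the keyword names that the Requests library accepts, so a call could look like `requests.get(url, timeout=10, **next_identity())`.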

We’ve prepared some tips and tricks that will come in handy when gathering data.

Getting Started with Your First Python Script

Let’s say you want to build your first Python web scraper. How do you go about it? Well, you can write a simple tool with just a few lines of code, but there are a few steps you should follow:

1. If you’re a newbie to web scraping, go with libraries like Requests and Beautiful Soup. Requests is an HTTP client that will fetch you raw HTML, while Beautiful Soup is an HTML parser that will structure the data you’ve downloaded.

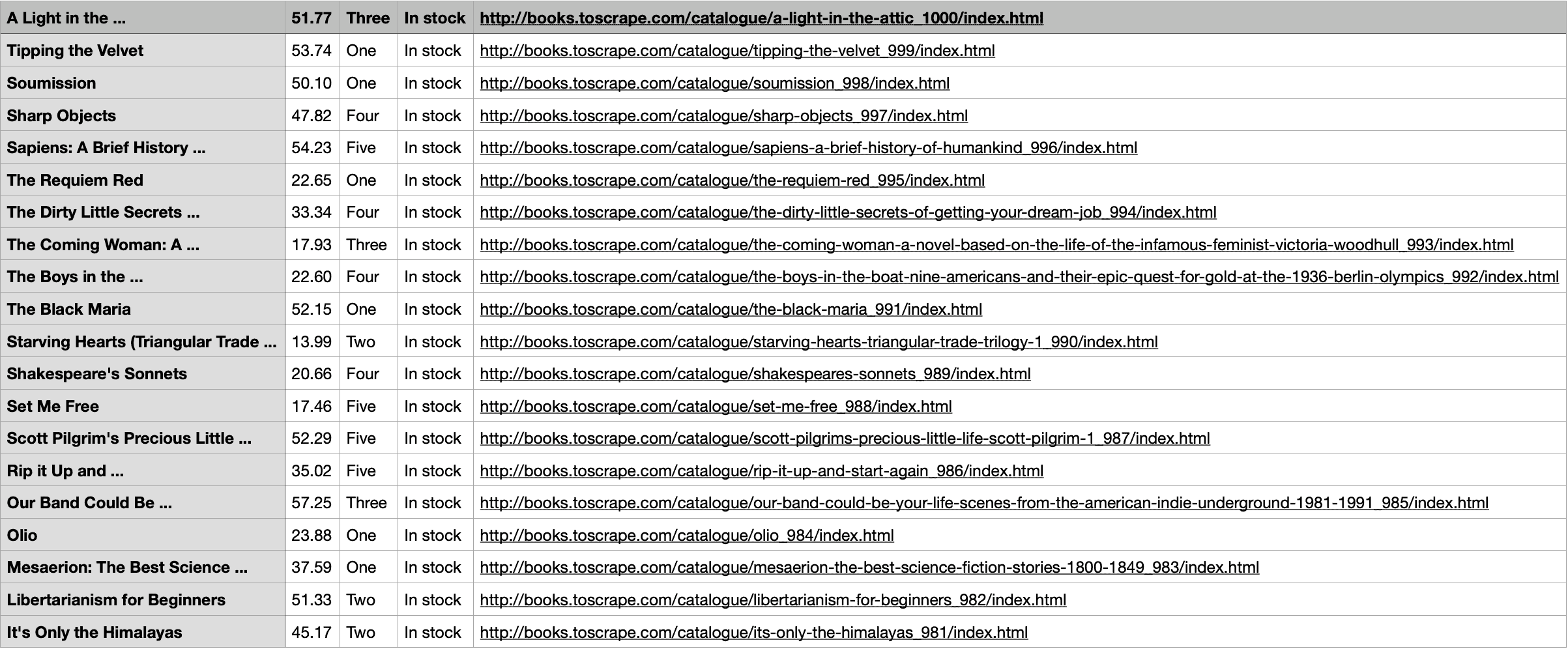

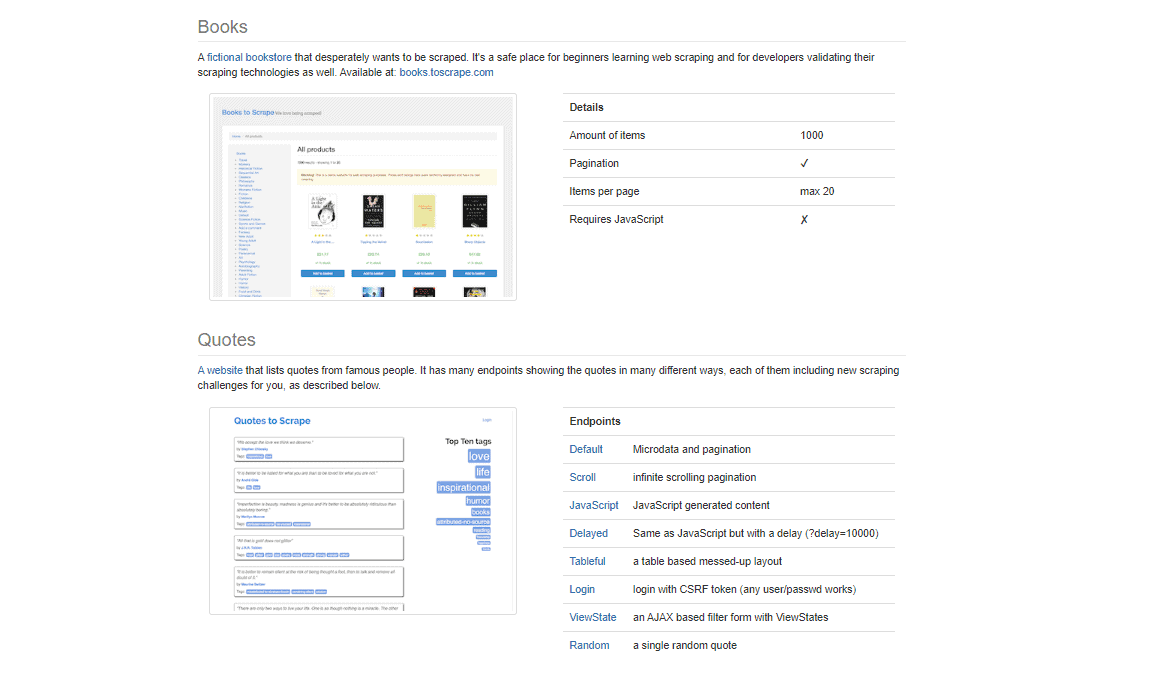

2. Then, decide on a target website and project parameters like URLs and data points you want to scrape. If you don’t have any particular website in mind, choose a dummy site to practice your scraping skills.

3. To build your web scraper, you’ll also need a code editor. You can choose any editor you like, such as Notepad++ or Visual Studio Code, or use the one preinstalled on your computer.

Once you have all the prerequisites, you can write your first Python script – send HTTP requests to the website, parse the HTML response, and save the data.
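
To give you a feel for it, here’s a minimal sketch of such a script. It assumes Requests and Beautiful Soup are installed (`pip install requests beautifulsoup4`). The `article.book`, `h3`, and `.price` selectors describe a made-up page layout, so you’d need to adapt them to the markup of the site you pick.

```python
import csv

import requests
from bs4 import BeautifulSoup


def fetch_html(url):
    """Send an HTTP GET request and return the page's HTML."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # stop early on 4xx/5xx responses
    return response.text


def parse_books(html):
    """Extract title and price from each (hypothetical) <article class="book">."""
    soup = BeautifulSoup(html, "html.parser")
    books = []
    for article in soup.select("article.book"):
        books.append({
            "title": article.select_one("h3").get_text(strip=True),
            "price": article.select_one(".price").get_text(strip=True),
        })
    return books


def save_csv(rows, path):
    """Write the parsed rows to a CSV file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=["title", "price"])
        writer.writeheader()
        writer.writerows(rows)
```

To run it end to end, you could call `save_csv(parse_books(fetch_html(url)), "books.csv")` with the URL of a practice site whose markup matches the selectors.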

An introductory guide to Python web scraping with a step-by-step tutorial.

How to Scrape JavaScript-Rendered Websites with Python

With Requests and Beautiful Soup you can learn basic skills by scraping static data. If you want to target dynamic websites and learn how to deal with things like infinite scrolling and lazy loading, go with Selenium. The tool controls a headless browser and is fully capable of dealing with dynamic pages.

A step-by-step guide to web scraping with Selenium.

You’re not limited to web scraping using Selenium and Python. There are other powerful headless browsers that can deal with JavaScript-rendered web pages. For example, if you want to try web scraping with Node.js, go with Playwright or Puppeteer. Both tools are much lighter on resources than Selenium and easier to set up.

How to Scrape Multiple Pages with Python

While scraping a single page is relatively straightforward, handling multiple pages concurrently can speed up the process and reduce waiting time.

AIOHTTP allows you to make asynchronous HTTP requests in Python, so you can scrape multiple pages concurrently. We’ve prepared a tutorial that walks you through using AIOHTTP to send non-blocking requests, so you can handle many pages at once without waiting for each request to finish.
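
The idea behind concurrent scraping can be sketched with `asyncio` from the standard library. To keep the example runnable without network access, it swaps the real AIOHTTP call for a hypothetical `fake_fetch` coroutine that simply sleeps; in an actual scraper, that coroutine would send the request with AIOHTTP instead. The URLs and the limit of five simultaneous requests are also just assumptions.

```python
import asyncio


async def fake_fetch(url):
    """Stand-in for a real HTTP request: waits briefly, then returns fake HTML."""
    await asyncio.sleep(0.1)  # simulates network latency without blocking other tasks
    return f"<html>{url}</html>"


async def scrape_all(urls, fetch=fake_fetch, max_concurrent=5):
    """Fetch all URLs concurrently, with at most `max_concurrent` requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def fetch_one(url):
        async with semaphore:
            return await fetch(url)

    # gather() runs the coroutines concurrently and returns results in input order.
    return await asyncio.gather(*(fetch_one(url) for url in urls))


urls = [f"https://example.com/page/{n}" for n in range(1, 6)]
pages = asyncio.run(scrape_all(urls))
print(len(pages), "pages fetched")
```

Because the waits overlap, all five simulated pages finish in roughly the time of a single request. The semaphore keeps the number of simultaneous requests bounded, which is easier on the target website.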

Scrape multiple pages in parallel.

Handling Cookies, Headers, and Proxies with Python

When scraping websites, it’s important to know how to handle cookies, headers, and proxies to mimic real user behavior and avoid IP blocks. Cookies help maintain sessions, headers let you present your scraper as a real browser, and proxies help you avoid IP detection by rotating IPs. Each of these elements can be managed using different tools in Python.

One tool you can use to manage all of this is cURL, a command-line tool. By integrating cURL with Python, you can make requests, send custom headers, manage cookies, and set up proxies.
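
One possible way to combine the two is to call the `curl` binary from Python with the `subprocess` module. Treat the following as a sketch under that assumption rather than the only approach: `build_curl_command` and `run_curl` are hypothetical helpers, and `curl` must be installed on your machine for `run_curl` to work.

```python
import subprocess


def build_curl_command(url, user_agent=None, cookie_file=None, proxy=None):
    """Build the argument list for a curl call with optional headers, cookies, and proxy."""
    command = ["curl", "--silent", "--show-error", "--location"]
    if user_agent:
        command += ["--header", f"User-Agent: {user_agent}"]
    if cookie_file:
        # --cookie reads cookies from the file; --cookie-jar writes new ones back to it.
        command += ["--cookie", cookie_file, "--cookie-jar", cookie_file]
    if proxy:
        command += ["--proxy", proxy]
    command.append(url)
    return command


def run_curl(command):
    """Run curl and return the response body as text (requires curl to be installed)."""
    result = subprocess.run(command, capture_output=True, text=True, check=True)
    return result.stdout
```

Passing the arguments as a list, rather than one long string, avoids shell quoting problems with URLs and header values.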

This tutorial will show you the basics of using cURL with Python for gathering data.

If you’re using Selenium, you can set up proxies on your own. You can configure a proxy server in your browser automation, so you can rotate IPs while simulating real user behavior across multiple pages.

Learn how to set up a proxy server with Selenium.

For those using the Python Requests library, setting up proxies is also straightforward and allows you to route your requests through different IPs.

Learn how to run proxies with Python Requests.

Getting Started with Node.js

Enough about Python; let’s look at Node.js. Say you want to build your first web scraper using Node.js. How do you go about it? It’s actually pretty simple to get started with just a few lines of code, but there are a few steps you should follow:

- If you’re new to web scraping, start with libraries like Cheerio and Axios. Axios will help you fetch HTML from websites, while Cheerio will allow you to parse and manipulate that HTML, similar to how jQuery works.

- Choose a target website and decide what data you want to scrape. For practice, select a simple website or use a demo site – we’ve prepared a whole list.

- You’ll need a code editor to write your script. You can use any editor you prefer: Visual Studio Code, Notepad++, or even the built-in editor on your machine.

Once you’ve set up everything, you can start writing your first Node.js script to send HTTP requests, parse the response, and extract the data you need.

Everything you need to know about web scraping with Node.js and JavaScript in one place.

Web Scraping Static Pages with Node.js

Interested in scraping data from static web pages? Cheerio and Axios are a great choice for scraping static sites.

This tutorial will guide you through using Cheerio to scrape static content. Cheerio helps you select and manipulate HTML elements quickly, making it perfect for websites that don’t require JavaScript rendering.

If you’re scraping static pages, Cheerio is your go-to tool. But if the website uses JavaScript for rendering, Puppeteer or Playwright will be necessary to handle dynamic content. This comparison will help you decide when to use each tool.

Web Scraping Dynamic Pages with Node.js

What if the website you want to scrape loads content dynamically using JavaScript? For this, you’ll need to use a headless browser that can interact with JavaScript. We’ve prepared two guides on how to do so.

First, let’s look at Puppeteer. It’s ideal for scraping dynamic pages, as it can render content that JavaScript loads after the page is initially served.

A step-by-step guide to web scraping using the Node.js library Puppeteer.

If you want to practice scraping JavaScript-based websites with more advanced features, Playwright is an excellent alternative to Puppeteer. This tutorial will show you how to set up Playwright to scrape data from dynamic websites while offering more flexibility across different browsers.
