Playwright Web Scraping: A Step-by-Step Tutorial with Node.js

Want to practise your skills with JavaScript-based websites? We’ll show you how.

Gathering data from websites that rely on dynamic elements or elaborate anti-bot systems isn’t an easy task. Before extracting the information, you need to render the entire page like a real user. A headless browser library, Playwright, is just right for the job.

In this step-by-step guide, you’ll learn why Playwright is such a popular Node.js library used for web scraping JavaScript-rendered websites. You’ll also be able to practice your skills with a real-life example.

What is Web Scraping with Playwright?

Web scraping with Playwright is the process of gathering data from JavaScript-rendered websites. The tool works by programmatically controlling a headless browser, that is, a browser without a graphical user interface such as a window or tab bar. Because nothing has to be drawn on the screen, a headless browser typically uses fewer resources than a regular one, which adds up when you scrape many pages.

Playwright is a relatively new library, released by Microsoft in 2020. It's used to automate actions in different browsers: scrolling, clicking, downloading, and the other actions you could perform with a mouse. Playwright gives you full control over the browser in both headless and headful modes. And most importantly, it's able to render JavaScript, which regular HTTP libraries can't do. This makes Playwright a powerful tool for scraping dynamic content from modern websites.

Why Use Playwright for Web Scraping?

Playwright is used in web scraping for several reasons:

- Cross-browser support. The library can automate Chromium, Firefox, and WebKit.

- Cross-language support. Playwright supports JavaScript, Python, Java, TypeScript, and .NET.

- Use it with any operating system. You can use Playwright with Windows, Linux, or macOS.

- Supports asynchronous and synchronous approaches. In Node.js, Playwright's API is asynchronous (promise-based), so you can scrape multiple pages in parallel. Or, to reduce complexity, you can await one request at a time. The Python version additionally offers a synchronous API.

- Good performer. The library uses a WebSocket connection that stays open while scraping. So you can send multiple requests in one go. This greatly improves the performance.

- Great for spoofing browser fingerprints. Community packages like playwright-extra add plugins that help prevent bot detection.

- Good documentation. Even though Playwright is a newbie in the web scraping world, it has extensive documentation with many examples.
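To illustrate the asynchronous point from the list above, here is a minimal sketch of scraping several pages in parallel. It isn't part of the tutorial script: scrape_titles_in_parallel is a hypothetical helper name, and the function assumes you pass it a browser context created with browser.newContext().

```javascript
// Hypothetical helper: open one tab per URL and read each page title concurrently.
// `context` is assumed to be a Playwright BrowserContext (from browser.newContext()).
async function scrape_titles_in_parallel(context, urls) {
  // Each async callback starts running as soon as map() calls it,
  // so all pages load at the same time.
  const tasks = urls.map(async (url) => {
    const page = await context.newPage()
    try {
      await page.goto(url)
      return { url, title: await page.title() }
    } finally {
      // Close the tab even if navigation fails, so tabs don't pile up.
      await page.close()
    }
  })
  // Promise.all waits for every task and keeps the results in the order of `urls`.
  return Promise.all(tasks)
}
```

If you'd rather make one request at a time, replace the map() and Promise.all() pair with a plain for...of loop that awaits each page in turn.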

Node.js and Playwright Web Scraping: A Step-by-Step Tutorial

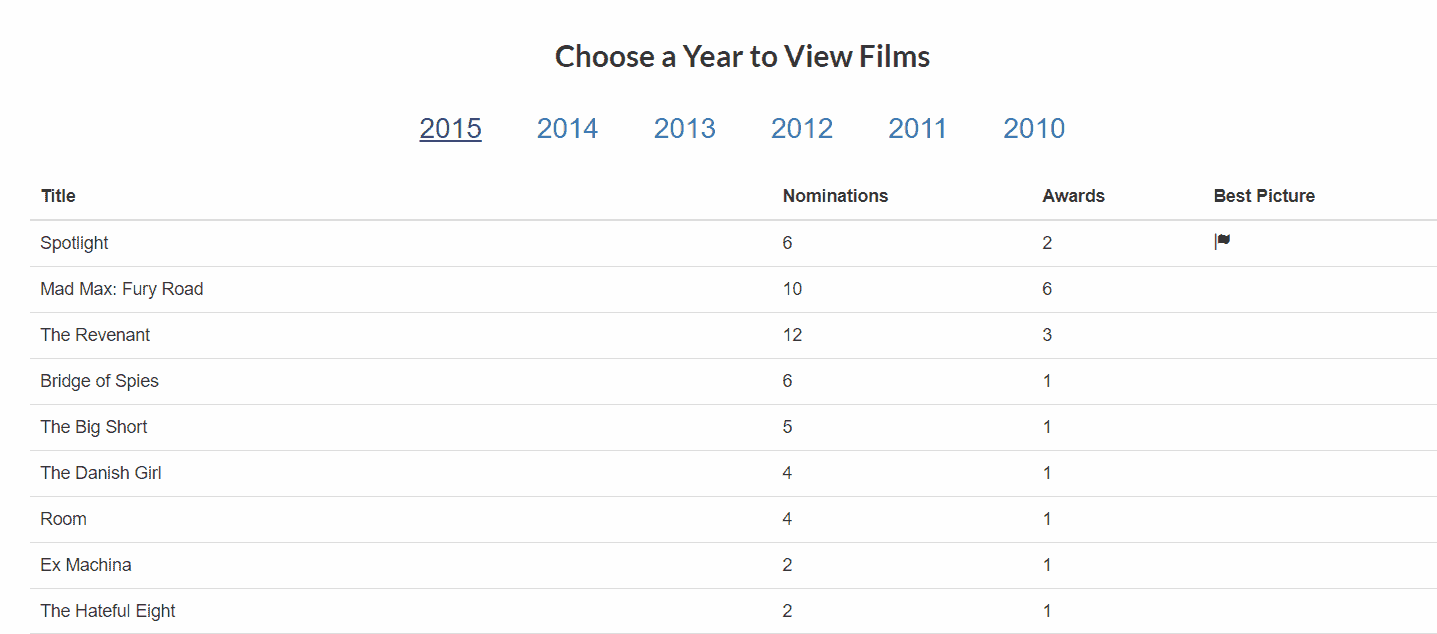

In this tutorial, we'll be scraping each film's year, title, nominations, and awards, as well as whether the film won the Best Picture award. You'll practice some web scraping skills like:

- scraping a single page;

- handling multiple pages;

- waiting for elements to load;

- loading dynamic content by clicking on buttons;

- scraping tables;

- handling errors;

- writing the output to .json format.

Prerequisites

- Have a recent version of Node.js installed on your system. Refer to the official website for the installer.

- Install Playwright. You can find the instructions on the official website.

Importing the Libraries

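Step 1. Import the necessary modules: the Playwright library and the built-in Node.js file system module (fs). You'll need fs later to write the output to a file on your computer. Because the script uses import statements, Node.js has to treat it as an ES module: either save the file with the .mjs extension or add "type": "module" to your package.json.

    import playwright from 'playwright'
    import fs from 'fs'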

Step 2. Then, enter the URL you want to scrape and create films_list, an array that will hold the output.

    const url = 'https://www.scrapethissite.com/pages/ajax-javascript/'
    const films_list = []

Preparing to Scrape

Step 1. In this web scraping example, we'll be using the Chromium browser. So that you can watch the browser working, we'll run it in headful mode by setting headless to false.

    async function prepare_browser() {
      const browser = await playwright.chromium.launch({
        headless: false,
      })
      return browser
    }
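Once the script works, you'll probably want to switch back to headless mode without editing the code. Here's a minimal sketch of one way to do that. The build_launch_options name and the HEADFUL environment variable are assumptions made for this example (Playwright doesn't read HEADFUL itself); headless and slowMo are regular Playwright launch options.

```javascript
// Hypothetical helper: pick headless or headful mode from an environment variable.
// HEADFUL is an assumed variable name chosen for this sketch.
function build_launch_options(env = process.env) {
  const headful = env.HEADFUL === '1'
  return {
    // Show a browser window only when HEADFUL=1 is set.
    headless: !headful,
    // Slow every Playwright operation down by 250 ms in headful mode
    // so the actions are easier to follow by eye.
    slowMo: headful ? 250 : 0,
  }
}
```

You could then call playwright.chromium.launch(build_launch_options()) inside prepare_browser() and start the script with HEADFUL=1 whenever you want to watch it run.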

Step 2. Now, let's write the main() function. It launches the browser, creates a browser context, opens a new page in that context, and passes the page to a function called get_page(), which begins the scraping. When get_page() finishes, the browser is closed.

    async function main() {
      const browser = await prepare_browser()
      const context = await browser.newContext()
      const page = await context.newPage()
      await get_page(page, url)
      await browser.close()
    }

    main()
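As written, main() leaves the browser open if get_page() throws. A minimal sketch of a more defensive variant is shown below. The run_with_browser name is hypothetical, and the sketch assumes launch is a function that resolves to a Playwright browser, such as prepare_browser.

```javascript
// Hypothetical wrapper: run a scraping task and always close the browser afterwards.
// `launch` is any function that resolves to a Playwright Browser (e.g. prepare_browser).
// `task` receives the new page and does the actual scraping.
async function run_with_browser(launch, task) {
  const browser = await launch()
  try {
    const context = await browser.newContext()
    const page = await context.newPage()
    return await task(page)
  } catch (err) {
    // Report the failure instead of crashing with an unhandled rejection.
    console.error('Scraping failed:', err.message)
    return null
  } finally {
    // Runs on success and on failure, so no browser process is left behind.
    await browser.close()
  }
}
```

With this in place, the body of main() could shrink to a single call: await run_with_browser(prepare_browser, (page) => get_page(page, url)).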

Scraping a Single and Multiple Pages

Gathering Data from a Single Page

Step 1. Now, let’s scrape one page:

- page.goto() tells the browser to go to the URL.

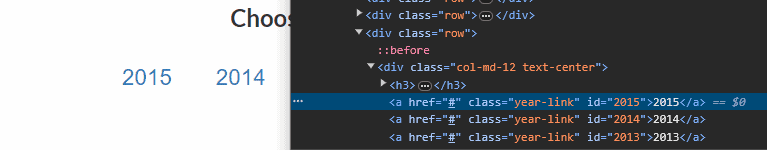

- To find and click the year buttons on the page, we need the CSS selector of these elements. To look it up, right-click anywhere on the page and select "Inspect". We'll store the selector in a year_btn_selector variable; clicking the buttons is what loads the content.

The function starts like this:

    async function get_page(page, url) {
      await page.goto(url)
      const year_btn_selector = '.year-link'

Step 2. Then, tell Playwright to wait until at least one button element appears on the screen. Let's set the timeout to 20 seconds (20,000 milliseconds). Once a single element has appeared, we can assume that the page has loaded and start scraping. If the wait times out, Playwright throws an error; you can implement additional reload or retry functionality to address the issue.

      await page.locator(year_btn_selector).first().waitFor({ timeout: 20_000 })
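The reload-and-retry idea mentioned above could look roughly like this. It's a minimal sketch rather than part of the tutorial script: wait_with_retry is a hypothetical helper, the default of three attempts is an arbitrary choice, and for simplicity it retries on any error, not only on timeouts.

```javascript
// Hypothetical helper: wait for a selector, reloading the page between failed attempts.
// Returns true once the element appears and false when every attempt has failed.
async function wait_with_retry(page, selector, attempts = 3, timeout = 20_000) {
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      await page.locator(selector).first().waitFor({ timeout })
      return true
    } catch (err) {
      console.log(`Attempt ${attempt} of ${attempts} failed: ${err.message}`)
      // Reload before trying again, but not after the final attempt.
      if (attempt < attempts) {
        await page.reload()
      }
    }
  }
  return false
}
```

Inside get_page(), you could call await wait_with_retry(page, year_btn_selector) and stop early when it returns false.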

Gathering Data from Multiple Pages

Step 3. Now, we can iterate through all the buttons and get their content:

- First, we'll collect every year button into a new variable, year_btns, using the same CSS selector (year_btn_selector) as before. Then, we'll iterate through the buttons to get their content.

To gather data from each table for every year (2010-2015), we'll create a function called scrape_table. It'll take the browser page and the year as parameters. In this context, year is the locator of the button that represents a specific year.

    let year_btns = await page.locator(year_btn_selector).all()
    for (let year of year_btns) {
      await scrape_table(page, year)
    }

The whole function:

    async function get_page(page, url) {
      await page.goto(url)
      const year_btn_selector = '.year-link'
      await page.locator(year_btn_selector).first().waitFor({ timeout: 20_000 })
      let year_btns = await page.locator(year_btn_selector).all()
      for (let year of year_btns) {
        await scrape_table(page, year)
      }
    }

Scraping the Table

Step 1. First, get the text of the button (for example, "2015") and save it in the year_text variable.

Then, tell Playwright to click on the year button and wait until the content appears. This time we're locating a table.

    async function scrape_table(page, year) {
      let year_text = await year.textContent()
      await year.click()
      const table_selector = 'table.table'
      await page.locator(table_selector).waitFor({ timeout: 20_000 })

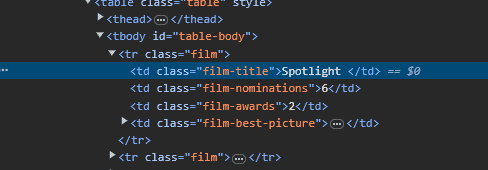

Step 2. When the table appears, we can scrape all the rows. The table row (<tr>) has the class of “film”, so we need to select it.

      let table_rows = await page.locator('.film').all()

Getting the Data

Step 1. Now, let's iterate through the table rows and get the movie information using the CSS selector for each column. The information is stored in a film_info object, together with the year of release.

    for (let row of table_rows) {
      let film_info = {
        'film-year': year_text,
        'film-title': await row.locator('.film-title').textContent(),
        'film-nominations': await row.locator('.film-nominations').textContent(),
        'film-awards': await row.locator('.film-awards').textContent(),
      }

Step 2. Then, check if the icon (<i>) element is present in that row. If it is, the film has won the Best Picture award. So, we need to add another key, film-best-picture, to the object and set it to true; otherwise, we set it to false.

    if (await row.locator('i').count() > 0) {
      film_info['film-best-picture'] = true
    } else {
      film_info['film-best-picture'] = false
    }

Step 3. After that, print the result to the console to check the output, and push the film_info object into the films_list array (the one we created at the very beginning). The final brace closes the for loop.

      console.log(film_info)
      films_list.push(film_info)
    }

The whole function:

    async function scrape_table(page, year) {
      let year_text = await year.textContent()
      await year.click()
      const table_selector = 'table.table'
      await page.locator(table_selector).waitFor({ timeout: 20_000 })
      let table_rows = await page.locator('.film').all()
      for (let row of table_rows) {
        let film_info = {
          'film-year': year_text,
          'film-title': await row.locator('.film-title').textContent(),
          'film-nominations': await row.locator('.film-nominations').textContent(),
          'film-awards': await row.locator('.film-awards').textContent(),
        }
        if (await row.locator('i').count() > 0) {
          film_info['film-best-picture'] = true
        } else {
          film_info['film-best-picture'] = false
        }
        console.log(film_info)
        films_list.push(film_info)
      }
    }

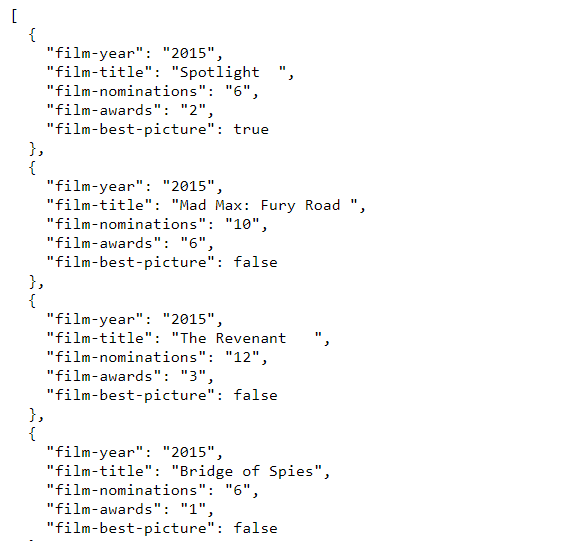
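textContent() returns the raw text of an element, which can include surrounding whitespace, and it resolves to null when the element has no text node at all. If you'd like cleaner values in the output, a minimal sketch like the one below may help. The helper names (clean_text, to_count, build_film_info) are hypothetical, and converting nominations and awards to numbers is an optional choice that the tutorial script doesn't make.

```javascript
// Hypothetical helpers for tidying cell text before it is stored.
function clean_text(value) {
  // Fall back to an empty string for null/undefined, then strip whitespace.
  return (value ?? '').trim()
}

function to_count(value) {
  // Convert " 12 " to 12; return null when no leading integer can be parsed.
  const parsed = Number.parseInt(clean_text(value), 10)
  return Number.isNaN(parsed) ? null : parsed
}

// `row` is assumed to be the Playwright locator of one table row (.film).
async function build_film_info(row, year_text) {
  return {
    'film-year': clean_text(year_text),
    'film-title': clean_text(await row.locator('.film-title').textContent()),
    'film-nominations': to_count(await row.locator('.film-nominations').textContent()),
    'film-awards': to_count(await row.locator('.film-awards').textContent()),
    // The comparison already yields true or false, so no if/else is needed.
    'film-best-picture': (await row.locator('i').count()) > 0,
  }
}
```

Inside the loop of scrape_table(), the object literal and the if/else could then be replaced with let film_info = await build_film_info(row, year_text).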

JSON Output

Now that the scraping part is done, we can write the list of objects (films_list) to a file in a structured .json format.

The write_output() function will handle the task of writing the scraped data to a .json file. Here’s how it works:

    function write_output() {
      fs.writeFile('output.json', JSON.stringify(films_list, null, 2), (err) => {
        if (err) {
          console.log(err)
        } else {
          console.log("Output written successfully")
        }
      })
    }

- JSON.stringify(films_list, null, 2): converts the films_list into a JSON-formatted string with an indentation of 2 spaces for better readability.

- fs.writeFile('output.json', … ): writes the JSON-formatted string to a file named output.json.

- (err) => { … }: a callback function that runs once the write has finished; err is set only if something went wrong.

- console.log(err): prints the error if one occurs.

- console.log("Output written successfully"): prints a confirmation if the file is written without errors.
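If you prefer async/await over callbacks, the same step can be sketched with the promise-based fs API that ships with Node.js. This is an alternative under stated assumptions, not the version used in the rest of this tutorial: write_output_async is a hypothetical name, and it takes the list and the file path as parameters instead of reading the global films_list.

```javascript
import { writeFile } from 'node:fs/promises'

// Hypothetical promise-based variant of write_output().
// Returns true when the file was written and false when writing failed.
async function write_output_async(films, path = 'output.json') {
  try {
    // Same serialization as before: pretty-printed JSON with 2-space indentation.
    await writeFile(path, JSON.stringify(films, null, 2))
    console.log('Output written successfully')
    return true
  } catch (err) {
    console.log(err)
    return false
  }
}
```

Because the function returns a promise, main() could await write_output_async(films_list) and know that the file has been written before the script moves on.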

Full main function:

    async function main() {
      const browser = await prepare_browser()
      const context = await browser.newContext()
      const page = await context.newPage()
      await get_page(page, url)
      await browser.close()
      write_output()
    }

Here’s the full code:

    import playwright from 'playwright'
    import fs from 'fs'

    const url = 'https://www.scrapethissite.com/pages/ajax-javascript/'
    // Collects one object per film across all years.
    const films_list = []

    // Launch Chromium in headful mode so you can watch it work.
    async function prepare_browser() {
      const browser = await playwright.chromium.launch({
        headless: false,
      })
      return browser
    }

    // Click one year button and scrape the table that loads.
    async function scrape_table(page, year) {
      let year_text = await year.textContent()
      await year.click()
      const table_selector = 'table.table'
      await page.locator(table_selector).waitFor({ timeout: 20_000 })
      let table_rows = await page.locator('.film').all()
      for (let row of table_rows) {
        let film_info = {
          'film-year': year_text,
          'film-title': await row.locator('.film-title').textContent(),
          'film-nominations': await row.locator('.film-nominations').textContent(),
          'film-awards': await row.locator('.film-awards').textContent(),
        }
        // An <i> icon in the row marks the Best Picture winner.
        if (await row.locator('i').count() > 0) {
          film_info['film-best-picture'] = true
        } else {
          film_info['film-best-picture'] = false
        }
        console.log(film_info)
        films_list.push(film_info)
      }
    }

    // Open the page, wait for the year buttons, and scrape every year.
    async function get_page(page, url) {
      await page.goto(url)
      const year_btn_selector = '.year-link'
      await page.locator(year_btn_selector).first().waitFor({ timeout: 20_000 })
      let year_btns = await page.locator(year_btn_selector).all()
      for (let year of year_btns) {
        await scrape_table(page, year)
      }
    }

    // Write the collected films to output.json.
    function write_output() {
      fs.writeFile('output.json', JSON.stringify(films_list, null, 2), (err) => {
        if (err) {
          console.log(err)
        } else {
          console.log("Output written successfully")
        }
      })
    }

    async function main() {
      const browser = await prepare_browser()
      const context = await browser.newContext()
      const page = await context.newPage()
      await get_page(page, url)
      await browser.close()
      write_output()
    }

    main()

Alternatives to Playwright

While Playwright is a powerful library for scraping dynamic elements, it has some tough competition.

Puppeteer is another great tool for JavaScript-rendered websites. It's backed by Google and has a much larger and more active community.

Selenium is a veteran in the industry: it supports more programming languages, has a larger community for support, and works with all major browsers.

If you want to try scraping static pages with Node.js, we'd recommend using axios and Cheerio. The first is one of the most popular Node.js HTTP clients and will fetch the page for you. The second is a powerful parser that extracts the data from the downloaded HTML.
