An Overview of Python Web Scraping Libraries

Get acquainted with the main Python web scraping libraries and find the best fit for your scraping project.

When it comes to web scraping, there’s a vast number of tools available for the job, and it can get confusing to find the right one for your project.

In this guide we’ll focus on Python web scraping libraries. You’ll find out which libraries excel in performance but work well only with static pages, and which can deal with dynamic content at the expense of speed.

Let’s look at the 5 most popular libraries in detail.

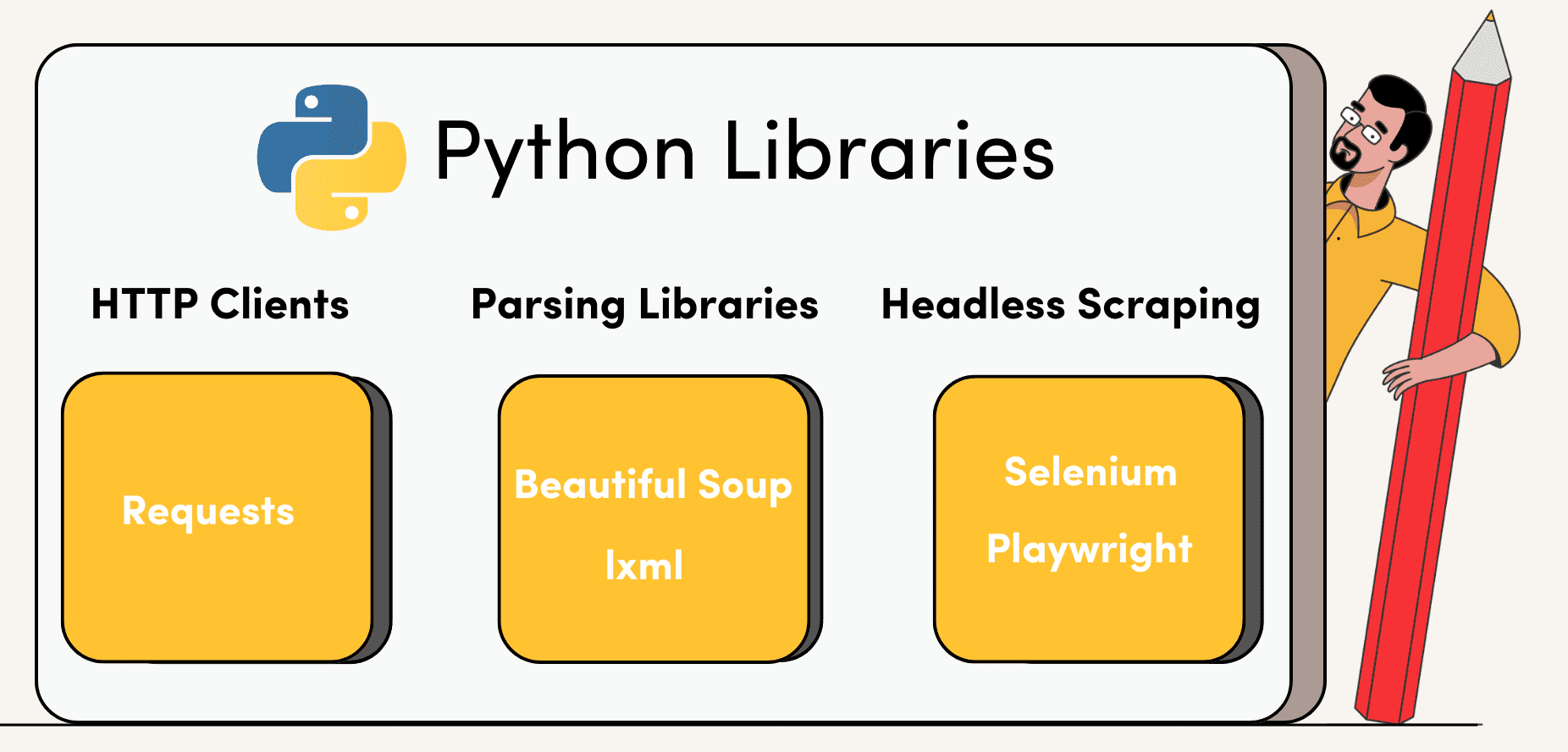

What Are Python Web Scraping Libraries?

Python web scraping libraries are tools written in the Python programming language that control one or more aspects of the web scraping process – crawling, downloading the page, or parsing.
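To make the three stages concrete, here’s a minimal sketch that uses only Python’s standard library rather than any of the libraries covered below. The function names (`download`, `parse`, `next_urls`), the sample HTML, and the example.com URLs are assumptions made up for illustration. The demonstration at the bottom runs on an inline page, so nothing is actually downloaded.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin
from urllib.request import urlopen


def download(url, timeout=10):
    """Downloading stage: fetch the raw HTML of one page."""
    with urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class LinkAndTitleParser(HTMLParser):
    """Parsing stage: collect the page title and every link target."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def parse(html):
    """Return (title, links) extracted from an HTML string."""
    parser = LinkAndTitleParser()
    parser.feed(html)
    return parser.title.strip(), parser.links


def next_urls(base_url, links):
    """Crawling stage: resolve relative links into absolute URLs to visit next."""
    return [urljoin(base_url, link) for link in links]


# Offline demonstration on an inline page (hypothetical content).
SAMPLE_HTML = """
<html><head><title>Books</title></head>
<body><a href="/page/2">Next</a> <a href="https://example.com/about">About</a></body></html>
"""
title, links = parse(SAMPLE_HTML)
queue = next_urls("https://example.com/page/1", links)
```

In a real scraper, you would call `download()` on each URL in `queue` and feed the result back into `parse()`. Dedicated libraries typically replace one or more of these hand-written stages.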

Web scraping libraries can be divided into two groups: 1) ones that require other tools to scrape, crawl or parse data and 2) standalone libraries. Although some libraries can function all alone, they’re often still used with others for a better scraping experience.

Each library has its own capabilities. Some tools are light on resources, so they’re fast but can’t deal with dynamic websites. Others are slow and need a lot of computing power but can handle content that is rendered with JavaScript. Which library is best for you depends on the website you’re trying to scrape. But remember, there’s no universal tool.

Since the Python programming language is preferred by many developers, you’ll find hundreds of guides on how to use a specific library. Check out Proxyway’s scraping knowledge base – you’ll find step-by-step tutorials that will help you develop your scraping skills.

The Best Python Web Scraping Libraries

1. Requests

2. Beautiful Soup

3. lxml

4. Selenium

Selenium is a library that allows you to control a browser programmatically, usually in headless mode. It was built for browser automation and web testing, but since so many websites now rely on JavaScript, Selenium is also used as a Python library for dynamic web scraping.

Aside from handling JavaScript-rich websites well, the tool is also very versatile. It provides multiple ways to interact with websites, such as taking screenshots, clicking buttons, or filling out forms. Selenium supports many programming languages, including Python, Ruby, JavaScript (Node.js), and Java. And it can control major browsers like Chrome, Firefox, Safari, or Internet Explorer.

Selenium is open source, which makes it easily accessible to any user. You can find extensive documentation and consult with other community members on sites like Stack Overflow.

The library controls a whole headless browser, so it requires more resources than other Python-based web scraping libraries. This makes Selenium significantly slower and more demanding compared to HTTP libraries. So, you should only use it when necessary.
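Here’s a rough sketch of what scraping a JavaScript-rendered page with Selenium might look like. It assumes Selenium 4 and a local Chrome installation. The helper names (`chrome_arguments`, `scrape_rendered_texts`), the window size, and the timeout are illustrative choices, not anything Selenium prescribes. Selenium is imported inside the function, so the flag helper can be checked without a browser.

```python
def chrome_arguments(headless=True, window_size=(1280, 800)):
    """Build Chrome command-line flags (kept separate so it needs no browser)."""
    args = [f"--window-size={window_size[0]},{window_size[1]}"]
    if headless:
        args.append("--headless=new")
    return args


def scrape_rendered_texts(url, css_selector, timeout=10):
    """Open `url` in Chrome, wait for the selector to appear, return element texts."""
    # Imported lazily so the helper above works even without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    for argument in chrome_arguments():
        options.add_argument(argument)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Block until at least one matching element exists in the DOM,
        # which gives JavaScript time to render the content.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, css_selector))
        )
        elements = driver.find_elements(By.CSS_SELECTOR, css_selector)
        return [element.text for element in elements]
    finally:
        driver.quit()  # always release the browser process
```

You could call it as `scrape_rendered_texts("https://example.com", "h1")`, where both the URL and the selector are placeholders. Starting and quitting a whole browser for a single page is where most of the overhead comes from.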

5. Playwright

Playwright is a Node.js library for controlling headless browsers with a single API. By and large, Playwright is used for web testing, but it has also been adopted by the scraping community. The reason lies in its ability to handle JavaScript-heavy websites.

One of the biggest advantages of the tool is that it can emulate three major browser groups: Chromium, WebKit, and Firefox. It’s also flexible in terms of programming languages: it supports JavaScript, TypeScript, Python, Java, and .NET (C#).

When it comes to JavaScript-rendered websites, Playwright can be considered a substitute for Selenium. Both libraries support page navigation, clicking, text input, downloading and uploading content, emulating mobile devices, and more. Although Playwright is a newer player in the field, it comes with more advanced capabilities than Selenium, including auto-waits, network control, and permissions like geolocation. It’s also faster. However, its community is smaller, so you may find less support than you would with Selenium.

Playwright can handle requests synchronously and asynchronously; it’s ideal for both small and large-scale scraping. Synchronous scrapers deal with a single request at a time, so this technique works well with smaller projects. And if you’re after multiple sites, you should stick to the asynchronous approach.
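The asynchronous approach could look roughly like the sketch below. It assumes the `playwright` Python package and its browsers are installed. The helper names (`run_limited`, `scrape_titles`) and the concurrency limit of 3 are assumptions chosen for illustration. Playwright is imported inside the function, so the concurrency helper can be tried with any async worker.

```python
import asyncio


async def run_limited(urls, worker, limit=3):
    """Run `worker(url)` for every URL, at most `limit` at a time, keeping order."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(url):
        async with semaphore:
            return await worker(url)

    return await asyncio.gather(*(guarded(url) for url in urls))


async def scrape_titles(urls, limit=3):
    """Fetch the <title> of several pages concurrently with one shared browser."""
    # Imported lazily so run_limited() is usable without Playwright installed.
    from playwright.async_api import async_playwright

    async with async_playwright() as p:
        browser = await p.chromium.launch(headless=True)

        async def title_of(url):
            page = await browser.new_page()
            try:
                await page.goto(url)
                return await page.title()
            finally:
                await page.close()

        try:
            return await run_limited(urls, title_of, limit)
        finally:
            await browser.close()
```

With a list of URLs in hand, `asyncio.run(scrape_titles(urls))` would return their titles in the same order. Capping concurrency keeps memory use in check, since every open page costs browser resources.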

The library can also parse data, since it runs a full browser. Unfortunately, this option isn’t ideal because the parser can easily break. If that happens, use Beautiful Soup, which is more robust and faster.
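One way to combine the two is to let Playwright render the page and then hand the resulting HTML to Beautiful Soup. The sketch below assumes the `playwright` and `beautifulsoup4` packages are installed. The function names (`extract_texts`, `render_and_parse`) are hypothetical, and the built-in `html.parser` backend is just one possible choice.

```python
def extract_texts(html, css_selector):
    """Parse an HTML string with Beautiful Soup and return matching texts."""
    from bs4 import BeautifulSoup  # lazy import: needs the beautifulsoup4 package

    soup = BeautifulSoup(html, "html.parser")
    return [node.get_text(strip=True) for node in soup.select(css_selector)]


def render_and_parse(url, css_selector):
    """Render the page with Playwright, then parse it with Beautiful Soup."""
    from playwright.sync_api import sync_playwright  # lazy import

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url)
            html = page.content()  # HTML of the page as currently rendered
        finally:
            browser.close()
    return extract_texts(html, css_selector)
```

Because `extract_texts()` only needs a string, you can try out your selectors on saved HTML without launching a browser at all.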

An Overview of the Web Scraping Libraries

| | Requests | Beautiful Soup | lxml | Selenium | Playwright |
| --- | --- | --- | --- | --- | --- |
| Used for | Sending HTTP requests | Parsing | Parsing | JavaScript rendering | JavaScript rendering |
| Web crawling | Yes | No | No | Yes | Yes |
| Data parsing | No | Yes | Yes | Yes | No |
| JavaScript rendering | No | No | No | Yes | Yes |
| Proxy integration | Yes | No | No | Yes | Yes |
| Performance | Fast | Average | Fast | Slow | Average |
| Best for | Small to medium-sized projects | Small to medium-sized projects | Continuous large-scale scraping projects | Small to medium-sized projects | Continuous large-scale scraping projects |

Tips and Tricks to Successful Web Scraping

First, maintain your web scraper. Custom-built software is high-maintenance and needs constant supervision. You’ll run into quite a few challenges when gathering data, and each of them can affect how your scraper works.

Also, scrape politely: smaller websites don’t usually monitor their traffic and can’t handle a heavy load. Don’t scrape during the busiest hours, either. There are time intervals when millions of users connect and burden the servers. For you, that means slow speeds and connection interruptions.
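A simple way to scrape politely is to pause between requests. Here’s a minimal sketch of that idea. The function name `fetch_politely` and the two-second default delay are assumptions for illustration, not a recommended value for any particular site. The `fetch` argument stands for whatever function downloads a single page in your scraper.

```python
import time


def fetch_politely(urls, fetch, delay_seconds=2.0, sleep=time.sleep):
    """Call `fetch(url)` for each URL, pausing between requests.

    `fetch` is any callable that downloads one page; `sleep` is injectable
    so the pacing can be checked without actually waiting.
    """
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # pause before every request except the first
        results.append(fetch(url))
    return results
```

Downloading one page at a time with a fixed pause is slower, but it keeps the load on a small website predictable.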

And don’t forget to practice your web scraping skills in a sandbox. There are a few websites designed for people to practice web scraping. They provide multiple endpoints with different challenges, like scraping JavaScript-generated content with lazy loading and delayed rendering. If you want to dive deeper, check out our list of web scraping best practices.