Web Scraping with Selenium: An Ultimate Guide for Beginners

A step-by-step guide to web scraping with Selenium.

Many modern websites, such as social media platforms, rely on JavaScript. Unfortunately, traditional scripts that only download a page's HTML fall short when it comes to extracting information from dynamic elements, because that content only appears after JavaScript has rendered the page. Selenium has gained popularity because it can render the page for you; it deals with asynchronous loading or infinite scrolling like a champ.

This article will explain why you should choose Selenium for web scraping and how to increase your chances of getting successful requests. You’ll also find a step-by-step tutorial on how to build a Selenium web scraper with Python.

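What is Python Web Scraping with Selenium?

Python web scraping with Selenium means writing a Python script that uses Selenium to control a real web browser. The browser loads and renders the page, including its JavaScript. Your script then locates the elements you need and extracts their data.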

Why Choose Selenium for Web Scraping?

Selenium is a popular choice for web scraping due to multiple reasons:

- Ability to render JavaScript. Selenium is primarily used for browser automation, which makes it ideal for scraping websites that rely on JavaScript. It fully renders the target page, so you can extract data that only appears after the page's scripts have run.

- Cross-browser support. The best part about Selenium is that it can automate real installations of major browsers like Chrome, Firefox, and Microsoft Edge.

- Supports multiple programming languages. It’s also flexible in terms of programming languages: you can work with Python, Java, JavaScript, Ruby, and C#.

- Emulates user behavior. With Selenium, you can mimic human behavior by interacting with web pages: clicking buttons, filling out forms, submitting data, scrolling, and navigating between pages.

- Handles CAPTCHAs. Some websites use CAPTCHAs to prevent bot-like activities. Selenium can't solve them on its own. Because it runs a real browser, though, it can display the challenge so you can solve it manually, or you can integrate a third-party solving service to automate the process.

- Helps avoid fingerprint detection. Third-party packages like selenium-stealth can mask some of the signals that give away an automated browser. Properly configured, they can help your scraper get past login pages or reCAPTCHA checks without being flagged.

- Large community. The library has an active community, which means there are many resources, tutorials, and plugins available to ease your experience.
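The scrolling mentioned above is also how you'd deal with infinite-scrolling pages. Below is a minimal sketch. It assumes a page that loads more content whenever you reach the bottom, and it keeps scrolling until the page height stops growing. The helper name scroll_until_stable and its parameters are hypothetical. The stop condition (height unchanged after a pause) is one reasonable heuristic, not the only one.

```python
import time
from typing import Callable


def scroll_until_stable(
    get_height: Callable[[], int],
    scroll_to_bottom: Callable[[], None],
    pause: float = 1.0,
    max_rounds: int = 20,
) -> int:
    """Scroll until the page height stops growing; return how many scrolls were made.

    get_height and scroll_to_bottom are passed in as callables, so the loop
    itself doesn't depend on Selenium and can be tested without a browser.
    """
    last_height = get_height()
    for rounds in range(1, max_rounds + 1):
        scroll_to_bottom()
        time.sleep(pause)  # give the page time to load the next batch of content
        new_height = get_height()
        if new_height == last_height:
            return rounds  # nothing new was loaded, so we've reached the end
        last_height = new_height
    return max_rounds  # safety cap for pages that never stop growing


if __name__ == "__main__":
    # Simulated page: it grows twice, then stops growing.
    heights = iter([1000, 2000, 3000, 3000])
    print(scroll_until_stable(lambda: next(heights), lambda: None, pause=0))  # prints 3
```

With a Selenium driver, you could pass lambda: driver.execute_script("return document.body.scrollHeight") as get_height and lambda: driver.execute_script("window.scrollTo(0, document.body.scrollHeight);") as scroll_to_bottom. Both calls use execute_script, which runs JavaScript in the page and returns its result.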

While Selenium is a powerful tool for web scraping, it may not be the most efficient option in all scenarios. For simple scraping tasks or static websites, libraries like Requests and Beautiful Soup might be a better option. You can also go with other headless browser libraries like Puppeteer that use fewer resources. However, when working with complex, dynamic websites, Selenium’s capabilities make it a reliable choice.
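On a static page, the HTML you download already contains the data, so no browser is needed. The sketch below uses Python's built-in html.parser instead of Beautiful Soup, so it runs without extra packages. The markup is a simplified, hypothetical sample modeled on the quotes used later in this tutorial. In a real scraper, you'd feed the parser the HTML returned by Requests, for example requests.get(url).text. On a JavaScript-rendered page like quotes.toscrape.com/js/, the downloaded HTML typically wouldn't contain these elements yet, which is where Selenium comes in.

```python
from html.parser import HTMLParser

# Simplified, hypothetical markup modeled on the quotes in this tutorial.
SAMPLE_HTML = """
<div class="quote">
  <span class="text">“Try not to become a man of success. Rather become a man of value.”</span>
  <span>by <small class="author">Albert Einstein</small></span>
  <div class="tags">Tags:
    <a class="tag" href="/tag/adulthood/">adulthood</a>
    <a class="tag" href="/tag/success/">success</a>
    <a class="tag" href="/tag/value/">value</a>
  </div>
</div>
"""


class QuoteParser(HTMLParser):
    """Collects quotes from static HTML using only the standard library."""

    def __init__(self):
        super().__init__()
        self.quotes = []    # one dict per element with class "quote"
        self._field = None  # which field the next text chunk belongs to

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "div" and "quote" in classes:
            self.quotes.append({"author": "", "text": "", "tags": []})
        elif not self.quotes:
            return  # ignore anything before the first quote
        elif "text" in classes:
            self._field = "text"
        elif "author" in classes:
            self._field = "author"
        elif tag == "a" and "tag" in classes:
            self._field = "tag"

    def handle_endtag(self, tag):
        self._field = None  # text outside the tracked elements is ignored

    def handle_data(self, data):
        if self._field is None or not data.strip():
            return
        current = self.quotes[-1]
        if self._field == "tag":
            current["tags"].append(data.strip())
        else:
            current[self._field] += data.strip()


if __name__ == "__main__":
    parser = QuoteParser()
    parser.feed(SAMPLE_HTML)
    print(parser.quotes)
```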

Getting Ready to Build a Selenium Web Scraper

Choose a Project Idea and Devise Your Project Parameters

The first step in building a Selenium web scraper is to devise your project parameters.

There are several programming languages to choose from when it comes to web scraping with Selenium. But deciding which one to use can be difficult, so we compared popular languages best suited for scraping tasks (*not all the languages on the list are supported by Selenium). If you still can’t decide, go with Python – it’s one of the easiest to use and fits most project requirements.

You don’t strictly need additional libraries to fetch or parse the data, because Selenium both loads pages and locates elements in them. For example, the By class from the selenium.webdriver.common.by module lets you find elements by class name, CSS selector, XPath, ID, and more. That said, Selenium also works perfectly fine alongside powerful parsers like Beautiful Soup.

Now, let’s move on to the project itself. You can either scrape real targets like Amazon or practice your skills on websites that are intentionally created to be scraped. This way, you can explore different techniques, languages, and tools in a safe environment. You can find several website recommendations in our list of web-scraping sandboxes.

When you get the hang of Selenium, you’ll probably want to put your skills to work. Let’s say you want to get the best flight deals; you can build a scraper to gather prices and download the results daily. If you don’t have any use cases in mind, you can check out a list of ideas for both beginners and advanced users in our guide.

Consider Web Scraping Guidelines

While web scraping can serve many purposes, there are certain guidelines to follow for ethical and legal practices.

First, it’s crucial to respect website terms of service and not scrape data behind a login. This can get you into legal trouble. You can find more advice in our article on web scraping best practices.

Furthermore, web scraping can become burdensome if you aren’t aware of the challenges websites will throw at you. From CAPTCHAs and IP address bans to structural changes, these roadblocks can disrupt your Selenium web scraper.

Use a Proxy Server

To scrape a website at any real scale, you’ll need more than one IP address, so consider setting up a proxy with Selenium. With rotating proxies, each connection request you send to the target website can come from a different IP address, so you appear as a new visitor each time.

You’ll most likely be tempted to use free proxy lists, but if you don’t want anyone to misuse your personal information, stick to paid providers. A tip: you should check whether the provider gives extensive technical documentation for proxy setup with Selenium.

There are several types of proxies to choose from, but we recommend residential addresses. They come from real people’s devices, most providers rotate them automatically, and many offer sticky sessions that keep the same IP address for longer connections.
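Your provider may give you a list of endpoints rather than a single rotating gateway. In that case, you can rotate them yourself, one proxy per browser session. Here is a minimal round-robin sketch. The endpoints are hypothetical placeholders from the 203.0.113.0/24 documentation range, and proxy_arguments is a name we made up. --proxy-server is a standard Chrome command-line switch. It doesn't accept a username and password, however. For authenticated proxies, you'd need IP allowlisting on the provider's side or a different setup.

```python
from itertools import cycle
from typing import Iterable, Iterator

# Hypothetical endpoints (203.0.113.0/24 is reserved for documentation);
# replace them with the addresses your provider gives you.
PROXIES = [
    "http://203.0.113.10:8000",
    "http://203.0.113.11:8000",
    "http://203.0.113.12:8000",
]


def proxy_arguments(proxies: Iterable[str]) -> Iterator[str]:
    """Yield Chrome --proxy-server arguments, cycling through the proxies forever."""
    for proxy in cycle(proxies):
        yield f"--proxy-server={proxy}"


if __name__ == "__main__":
    rotation = proxy_arguments(PROXIES)
    for _ in range(4):
        print(next(rotation))  # the fourth value wraps around to the first proxy
```

Before creating each new webdriver.Chrome, you would call chrome_options.add_argument(next(rotation)), so every browser session goes out through the next proxy in the list.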

How to Use Selenium for Web Scraping: A Step-by-Step Tutorial

In this tutorial, we’ll scrape two pages: http://quotes.toscrape.com/js/ and http://quotes.toscrape.com/js-delayed/. Both include JavaScript-generated content, and the second page delays rendering. Why would you need to learn how to deal with delayed content? Sometimes a page takes a while to load, or you need to wait until a specific element appears (or a condition is met) before you can extract the data.

Prerequisites

- Python 3. Make sure you have a recent version of Python 3 installed on your system. You can download it from the official website, python.org.

- Selenium. Install the Selenium package using pip. Open your command prompt or terminal and run the following command: pip install selenium.

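- Chrome and ChromeDriver. Selenium 4.6 and later includes Selenium Manager, which automatically downloads a ChromeDriver that matches your installed Chrome. On older Selenium versions, download the ChromeDriver that corresponds to your Chrome version yourself.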

Importing the Libraries

1) First, import webdriver from Selenium. It launches and controls the browser.

from selenium import webdriver

2) Then import the By class, which tells Selenium how to locate elements (by class name, CSS selector, XPath, and so on).

from selenium.webdriver.common.by import By

3) Next, import WebDriverWait and expected_conditions. You’ll use them to pause the scraper until the elements it needs are present.

from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions

4) Since we’ll be using the CSV module to save the results, you’ll need to import it, too.

import csv

Setting Up Global Variables and Finding Elements We Need

Step 1. Now, let’s set up global variables to store the values we’ll need:

- url – the page you’ll be scraping;

- timeout – the maximum number of seconds Selenium waits for an element to appear. If the element doesn’t show up in time, the wait raises a TimeoutException, which you can then handle;

- output – the list into which you’ll write the scraped quotes.

url = 'http://quotes.toscrape.com/js/'
# url = 'http://quotes.toscrape.com/js-delayed/'
timeout = 10  # seconds
output = []

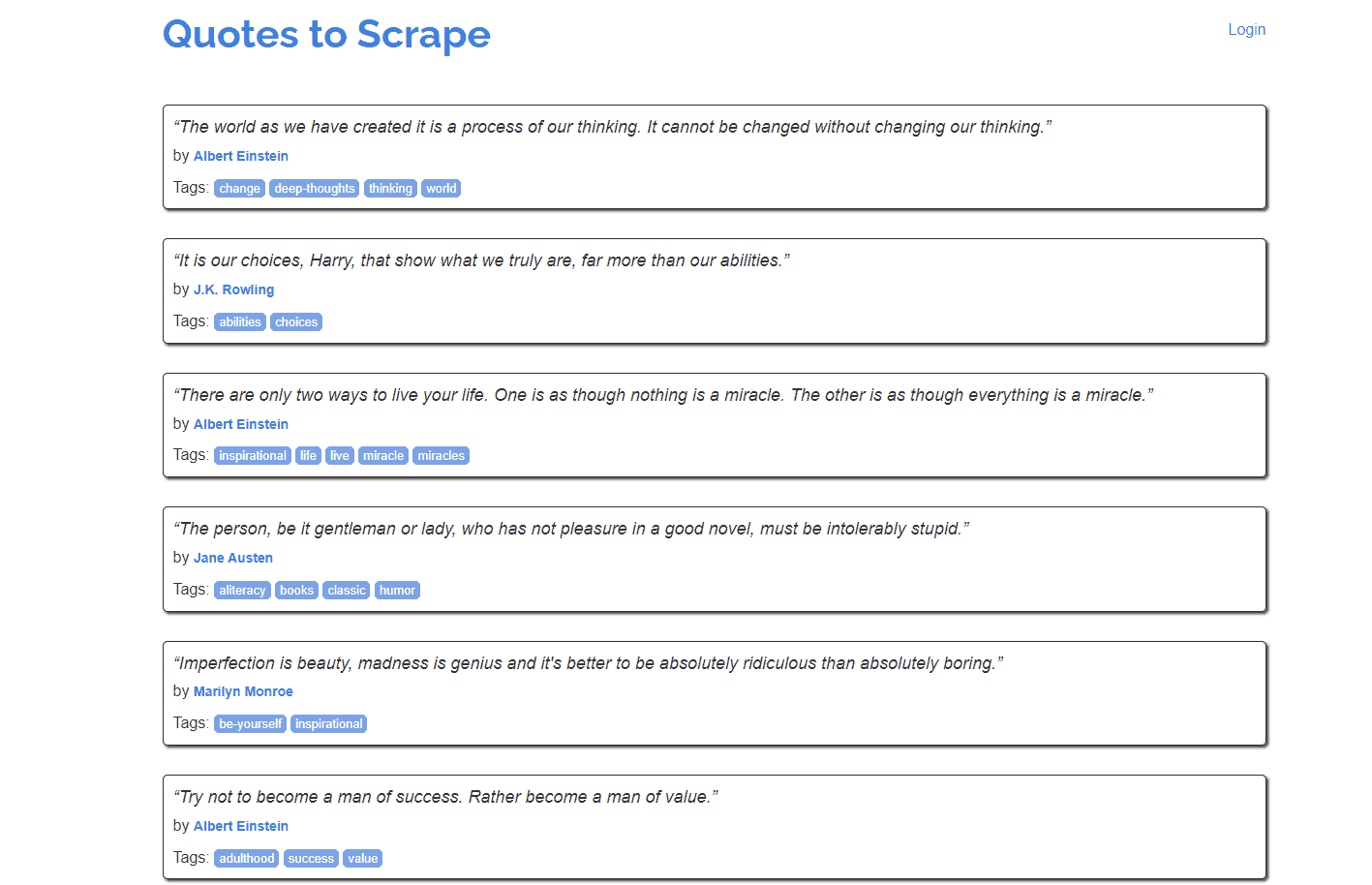

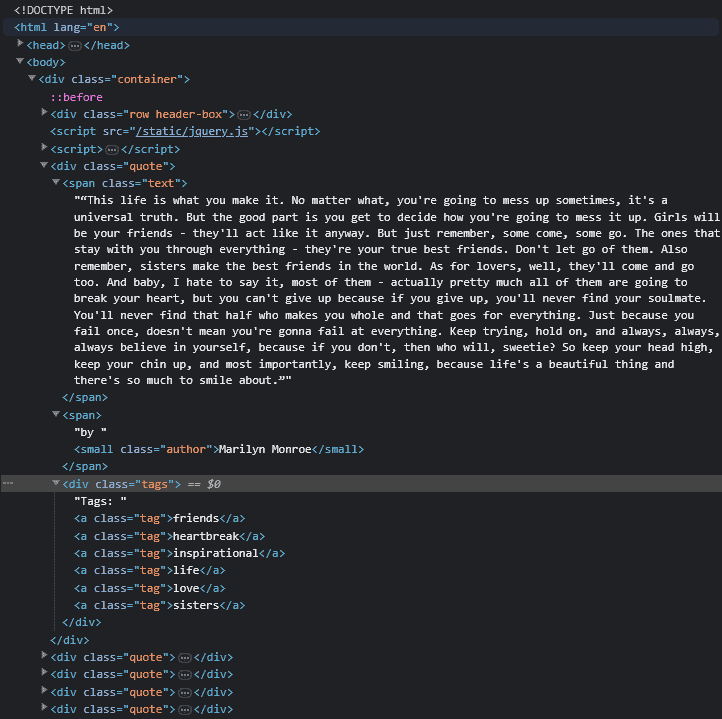

Step 2. Then, inspect the page source of quotes.toscrape.com/js by right-clicking anywhere on the page and choosing Inspect.

Step 3. Each quote sits in an element with the quote class. You’ll need to select all of those elements and, within each one, find the text of the elements with the text, author, and tag classes. You can use Selenium’s By class to find all of them.

quotes = driver.find_elements(By.CLASS_NAME, 'quote')

Step 4. For quote text and author, use the find_element() function to find the elements by their class name. Then extract the text and save it in a variable.

for quote in quotes:
    text = quote.find_element(By.CLASS_NAME, 'text').text
    print(f'Text: {text}')
    author = quote.find_element(By.CLASS_NAME, 'author').text
    print(f'Author: {author}')

Step 5. Since each quote can have multiple tags, use the find_elements() function to find all of them. Then, iterate through the tags and append each one’s text to the tags list.

    tags = []
    for tag in quote.find_elements(By.CLASS_NAME, 'tag'):
        tags.append(tag.text)
    print(tags)

Finally, you can put the variables into a dictionary which you then append to the output list you’ve created.

    output.append({
        'author': author,
        'text': text,
        'tags': tags,
    })
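You can also pull the loop body above into its own function. The sketch below assumes you want to keep parsing separate from browsing. parse_quote() only needs an object with find_element() and find_elements(), so you can check it with a hypothetical FakeElement stub instead of a live browser. It passes the string "class name", which is the value of Selenium's By.CLASS_NAME, so the same function should also work with real WebElement objects.

```python
from dataclasses import dataclass, field

CLASS_NAME = "class name"  # the string value behind Selenium's By.CLASS_NAME


def parse_quote(quote) -> dict:
    """Build the output dictionary for one quote element.

    `quote` can be a Selenium WebElement or anything else with the same
    find_element()/find_elements() methods, such as the stub below.
    """
    text = quote.find_element(CLASS_NAME, "text").text
    author = quote.find_element(CLASS_NAME, "author").text
    tags = [tag.text for tag in quote.find_elements(CLASS_NAME, "tag")]
    return {"author": author, "text": text, "tags": tags}


@dataclass
class FakeElement:
    """Hypothetical test stub that mimics the parts of WebElement used above."""

    text: str = ""
    children: dict = field(default_factory=dict)  # class name -> list of FakeElement

    def find_elements(self, by, value):
        return self.children.get(value, [])  # `by` is ignored; the stub only knows class names

    def find_element(self, by, value):
        matches = self.find_elements(by, value)
        if not matches:
            # Selenium would raise NoSuchElementException here
            raise LookupError(f"no child with class {value!r}")
        return matches[0]


if __name__ == "__main__":
    quote = FakeElement(children={
        "text": [FakeElement("“Try not to become a man of success. Rather become a man of value.”")],
        "author": [FakeElement("Albert Einstein")],
        "tag": [FakeElement("adulthood"), FakeElement("success"), FakeElement("value")],
    })
    print(parse_quote(quote))
```

In the scraper, the body of the for quote in quotes: loop would then shrink to output.append(parse_quote(quote)).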

Class names aren’t the only way to find elements. If your target website includes multiple URLs, you can locate them using CSS or XPath selectors. You can also find elements by their text, or locate them by their ID.
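Here is a small sketch of those three strategies. Selenium’s By constants are plain strings (By.XPATH is "xpath", By.CSS_SELECTOR is "css selector", By.ID is "id"), so these tuples can be unpacked into driver.find_element(*locator) or driver.find_elements(*locator). The helper names are hypothetical. Matching by text uses XPath, and the tricky part is quoting: XPath 1.0 has no escape characters, so text containing both ' and " has to be spliced together with concat().

```python
from typing import Tuple

XPATH = "xpath"                # By.XPATH
CSS_SELECTOR = "css selector"  # By.CSS_SELECTOR
ID = "id"                      # By.ID


def xpath_literal(value: str) -> str:
    """Quote a Python string as an XPath 1.0 string literal."""
    if "'" not in value:
        return f"'{value}'"
    if '"' not in value:
        return f'"{value}"'
    # Contains both quote types: split on ' and rejoin the pieces with concat()
    parts = value.split("'")
    return "concat(" + ", \"'\", ".join(f"'{part}'" for part in parts) + ")"


def by_text(text: str, exact: bool = False) -> Tuple[str, str]:
    """Locator for elements whose first text node contains (or equals) `text`."""
    literal = xpath_literal(text)
    if exact:
        return (XPATH, f"//*[normalize-space(text())={literal}]")
    return (XPATH, f"//*[contains(text(), {literal})]")


def by_id(element_id: str) -> Tuple[str, str]:
    """Locator for the element with the given id attribute."""
    return (ID, element_id)


ALL_LINKS = (CSS_SELECTOR, "a[href]")  # every <a> element that has an href


if __name__ == "__main__":
    print(by_text("Albert Einstein"))
    print(xpath_literal('It\'s "fine"'))
```

For example, driver.find_elements(*by_text("Albert Einstein")) would return the author elements for Einstein's quotes. [a.get_attribute("href") for a in driver.find_elements(*ALL_LINKS)] would collect every link URL on the page.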

Scraping Dynamic Web Pages with Python Selenium

Step 1. First, you’ll need to set up a browser using Selenium. In this example, we’ll be using Chrome.

def prepare_browser():
    # Initializing Chrome options
    chrome_options = webdriver.ChromeOptions()
    driver = webdriver.Chrome(options=chrome_options)
    return driver

NOTE: If you need to add selenium_stealth to mask your digital fingerprint or set up proxies to avoid rate limiting, here’s the place to do so. If you don’t know how to set up a proxy with Selenium using Python, you can refer to our guide which will explain everything in detail.
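One possible extension of prepare_browser() assumes you want the scraper to run without a visible window and, optionally, through a proxy. --headless=new, --window-size, and --proxy-server are standard Chrome command-line switches; the =new headless mode needs Chrome 109 or later. build_chrome_arguments() is a hypothetical helper that lets you test the option logic without launching a browser. selenium-stealth isn't shown because it's a separate package with its own API.

```python
from typing import List, Optional


def build_chrome_arguments(
    headless: bool = True,
    proxy: Optional[str] = None,
    window_size: str = "1920,1080",
) -> List[str]:
    """Collect Chrome command-line switches for a scraping session."""
    args = [f"--window-size={window_size}"]  # fixed viewport, so layouts are predictable
    if headless:
        args.append("--headless=new")  # run without a visible window (newer headless mode)
    if proxy:
        args.append(f"--proxy-server={proxy}")  # e.g. "http://host:port"
    return args


def prepare_browser(headless: bool = True, proxy: Optional[str] = None):
    """Start Chrome with the switches above (requires Selenium and Chrome)."""
    # Imported here so build_chrome_arguments() works even without Selenium installed
    from selenium import webdriver

    chrome_options = webdriver.ChromeOptions()
    for arg in build_chrome_arguments(headless=headless, proxy=proxy):
        chrome_options.add_argument(arg)
    return webdriver.Chrome(options=chrome_options)
```

Keeping the switches in a plain list makes it easy to add or remove them per run, for example by passing headless=False while you debug selectors.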

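Step 2. Next, create a main() function that launches the browser, runs the scraper, closes the browser, and prints the collected output. The if __name__ == '__main__': check runs main() only when you execute the script directly.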

def main():
    driver = prepare_browser()
    try:
        scrape(url, driver)
    finally:
        # Close the browser even if scraping raises an error
        driver.quit()
    print(output)

if __name__ == '__main__':
    main()

Step 3. Now, let’s start scraping. The driver.get(url) function tells the browser to navigate to that URL. Since the selectors are already prepared, just paste the code into a scrape() function.

def scrape(url, driver):
    driver.get(url)
    print(f"Opened: {driver.current_url}")
    quotes = driver.find_elements(By.CLASS_NAME, 'quote')
    for quote in quotes:
        text = quote.find_element(By.CLASS_NAME, 'text').text
        print(f'Text: {text}')
        author = quote.find_element(By.CLASS_NAME, 'author').text
        print(f'Author: {author}')
        tags = []
        for tag in quote.find_elements(By.CLASS_NAME, 'tag'):
            tags.append(tag.text)
        print(tags)
        output.append({
            'author': author,
            'text': text,
            'tags': tags,
        })

This will open the browser window, scrape one page, print the scraped data to the console, and print the whole output list once the script finishes running.

Example console output:

Opened: http://quotes.toscrape.com/js/
Text: “The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.”
Author: Albert Einstein
['change', 'deep-thoughts', 'thinking', 'world']
Text: “It is our choices, Harry, that show what we truly are, far more than our abilities.”
Author: J.K. Rowling
['abilities', 'choices']
Text: “There are only two ways to live your life. One is as though nothing is a miracle. The other is as though everything is a miracle.”
Author: Albert Einstein
['inspirational', 'life', 'live', 'miracle', 'miracles']
Text: “The person, be it gentleman or lady, who has not pleasure in a good novel, must be intolerably stupid.”
Author: Jane Austen
['aliteracy', 'books', 'classic', 'humor']
Text: “Imperfection is beauty, madness is genius and it's better to be absolutely ridiculous than absolutely boring.”
Author: Marilyn Monroe
['be-yourself', 'inspirational']
Text: “Try not to become a man of success. Rather become a man of value.”
Author: Albert Einstein
['adulthood', 'success', 'value']

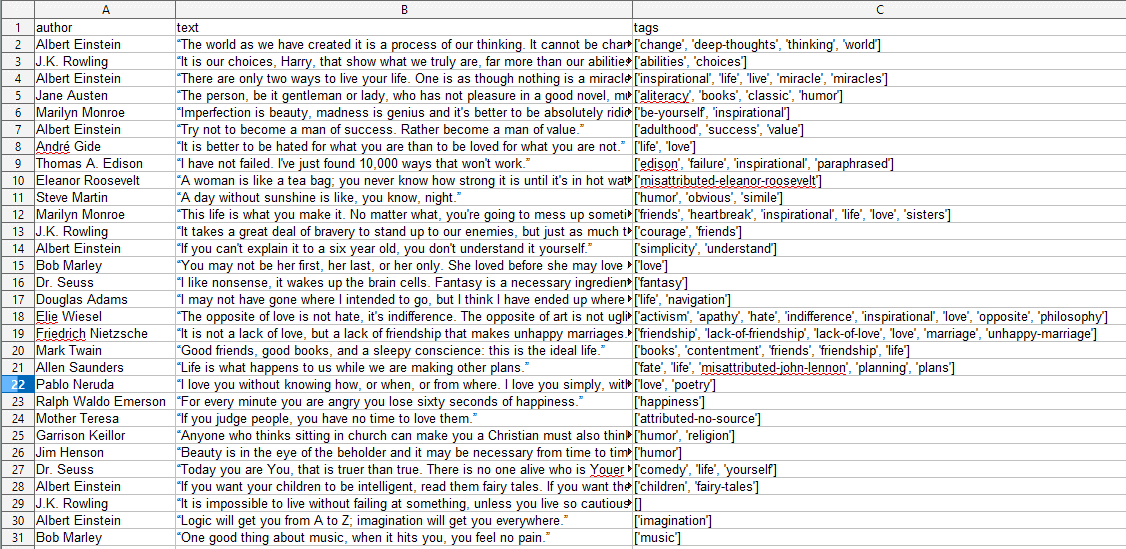

Example of the output in a list:

[{'author': 'Albert Einstein', 'text': '“The world as we have created it is a process of our thinking. It cannot be changed without changing our thinking.”', 'tags': ['change', 'deep-thoughts', 'thinking', 'world']}, {'author': 'J.K. Rowling', 'text': '“It is our choices, Harry, that show what we truly are, far more than our abilities.”', 'tags': ['abilities', 'choices']}, {'author': 'Albert Einstein', 'text': '“There are only two ways to live your life. One is as though nothing is a miracle. The other is as though everything is a miracle.”', 'tags': ['inspirational', 'life', 'live', 'miracle', 'miracles']}, {'author': 'Jane Austen', 'text': '“The person, be it gentleman or lady, who has not pleasure in a good novel, must be intolerably stupid.”', 'tags': ['aliteracy', 'books', 'classic', 'humor']}, {'author': 'Marilyn Monroe', 'text': "“Imperfection is beauty, madness is genius and it's better to be absolutely ridiculous than absolutely boring.”", 'tags': ['be-yourself', 'inspirational']}, {'author': 'Albert Einstein', 'text': '“Try not to become a man of success. Rather become a man of value.”', 'tags': ['adulthood', 'success', 'value']}]

Scraping Multiple Pages

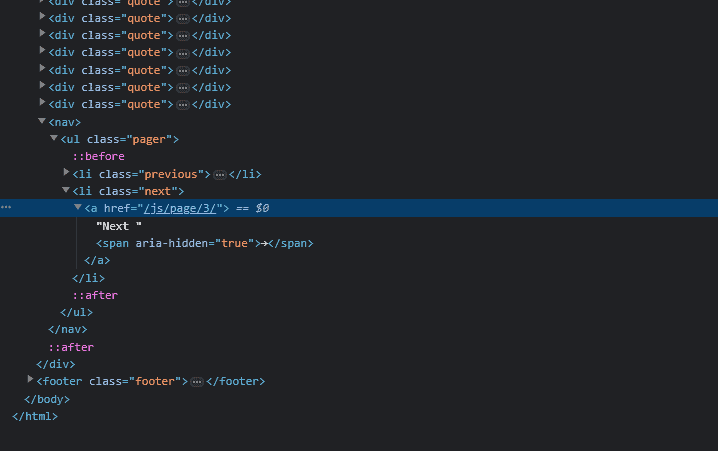

Step 1. First, find the link that takes you to the next page. Inspect the HTML: the link sits inside a list item with a class of ‘next’, so look for the <a> tag within that element.

In the second line, read the element’s href attribute to get the URL of the next page and assign it to the next_url variable.

Finally, call the scrape() function again, passing it the new URL together with the driver. Add these lines at the end of scrape(), after the loop over the quotes.

elem_next = driver.find_element(By.CLASS_NAME, 'next').find_element(By.TAG_NAME, 'a')
next_url = elem_next.get_attribute("href")
scrape(next_url, driver)

Step 2. Now the script can handle pagination and scrape every page of the site. However, it still doesn’t know when to stop. The last page has no element with a class of next, so find_element() raises a NoSuchElementException and the script crashes.

You can wrap the code in a try except block that catches this exception. This prevents the crash and, if needed, lets you do something else on the page after reaching the last one.

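# Add this import at the top of your script
from selenium.common.exceptions import NoSuchElementException

try:
    elem_next = driver.find_element(By.CLASS_NAME, 'next').find_element(By.TAG_NAME, 'a')
    next_url = elem_next.get_attribute("href")
    scrape(next_url, driver)
except NoSuchElementException:
    # No 'next' link means this is the last page
    print('Next button not found. Quitting.')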

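Step 3. Some pages render their content with a delay, such as http://quotes.toscrape.com/js-delayed/. To handle them, tell Selenium to wait until at least one element with the quote class is present before you start scraping. Place this right after driver.get(url):

WebDriverWait(driver, timeout).until(
    expected_conditions.presence_of_element_located((By.CLASS_NAME, 'quote'))
)

Step 4. You can also wrap the code above in a try except block. If no elements of the quote class appear on the page before the timeout, WebDriverWait raises a TimeoutException. You can catch it to log the problem or retry the same request.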
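Under the hood, WebDriverWait works by polling. It re-checks the condition (by default every 0.5 seconds) until the condition returns something truthy, and it raises TimeoutException once timeout seconds have passed. By default, it also ignores NoSuchElementException while polling. The standard-library sketch below illustrates that idea. It is a simplified model, not Selenium’s actual implementation, and wait_until and WaitTimeout are hypothetical names. LookupError stands in for NoSuchElementException.

```python
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


class WaitTimeout(Exception):
    """Stand-in for Selenium's TimeoutException."""


def wait_until(
    condition: Callable[[], T],
    timeout: float = 10.0,
    poll_frequency: float = 0.5,
    ignored: Tuple[Type[BaseException], ...] = (LookupError,),
) -> T:
    """Call condition() until it returns a truthy value, or raise WaitTimeout."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            result = condition()
            if result:
                return result  # like until(), hand back whatever the condition found
        except ignored:
            pass  # treat "not there yet" errors as a falsy result and keep polling
        if time.monotonic() >= deadline:
            raise WaitTimeout(f"condition not met within {timeout} seconds")
        time.sleep(poll_frequency)
```

This is also why until() is handy beyond presence checks: whatever the condition returns (for example, the located element) is passed back to your code.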

Now, your scrape function is done:

# Add this import at the top of your script
from selenium.common.exceptions import NoSuchElementException, TimeoutException

def scrape(url, driver):
    driver.get(url)
    print(f"Opened: {driver.current_url}")
    try:
        # Wait until at least one quote has been rendered
        WebDriverWait(driver, timeout).until(
            expected_conditions.presence_of_element_located((By.CLASS_NAME, 'quote'))
        )
        # Finding all elements with a class of 'quote' in the page
        quotes = driver.find_elements(By.CLASS_NAME, 'quote')
        for quote in quotes:
            text = quote.find_element(By.CLASS_NAME, 'text').text
            print(f'Text: {text}')
            author = quote.find_element(By.CLASS_NAME, 'author').text
            print(f'Author: {author}')
            tags = []
            for tag in quote.find_elements(By.CLASS_NAME, 'tag'):
                tags.append(tag.text)
            print(tags)
            output.append({
                'author': author,
                'text': text,
                'tags': tags,
            })
        try:
            elem_next = driver.find_element(By.CLASS_NAME, 'next').find_element(By.TAG_NAME, 'a')
            next_url = elem_next.get_attribute("href")
            scrape(next_url, driver)
        except NoSuchElementException:
            print('Next button not found. Quitting.')
    except TimeoutException:
        print('Timed out.')
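The finished scrape() calls itself once per page. That works for the handful of pages on this site. However, each page adds a level to the call stack (Python’s default recursion limit is 1000), and an exception on a later page unwinds through all the earlier calls. An alternative design is a loop. The sketch below assumes a hypothetical fetch_page(url) function that you’d build from the code above. It would call driver.get(url), wait for the quotes, and return the parsed records together with the next link’s href, or None when NoSuchElementException is raised. The visited set and the max_pages cap are extra safeguards we add here; they are not part of the original script.

```python
from typing import Callable, List, Optional, Tuple

# fetch_page(url) returns (records found on that page, URL of the next page or None)
FetchPage = Callable[[str], Tuple[List[dict], Optional[str]]]


def crawl(start_url: str, fetch_page: FetchPage, max_pages: int = 100) -> List[dict]:
    """Follow "next" links with a loop instead of recursion."""
    results: List[dict] = []
    visited = set()
    url: Optional[str] = start_url
    while url is not None and url not in visited and len(visited) < max_pages:
        visited.add(url)
        records, url = fetch_page(url)  # url becomes None on the last page
        results.extend(records)
    return results


if __name__ == "__main__":
    fake_site = {
        "page1": ([{"author": "A"}], "page2"),
        "page2": ([{"author": "B"}], None),
    }
    print(crawl("page1", fake_site.__getitem__))  # prints [{'author': 'A'}, {'author': 'B'}]
```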

Saving the Output to a CSV

Finally, you can write the output to a CSV file (using the csv library) by adding several lines to the main() function. We’ll need a new variable called output_filename for this.

field_names = ['author', 'text', 'tags']
output_filename = 'quotes.csv'
with open(output_filename, 'w', newline='', encoding='utf-8') as f_out:
    writer = csv.DictWriter(f_out, fieldnames=field_names)
    writer.writeheader()
    writer.writerows(output)

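The code above creates the file and writes the field_names list as the CSV header. It then writes one row for each dictionary in the output list. Note that each tags list is written as its Python representation, for example ['change', 'deep-thoughts'].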
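For more readable tag cells, one option is to join each tags list before writing. This assumes a semicolon separator suits your spreadsheet tool. write_quotes_csv() and tag_separator are hypothetical names; csv.DictWriter with writeheader() and writerow() is the same standard-library API used above.

```python
import csv
import io
from typing import Iterable, TextIO


def write_quotes_csv(rows: Iterable[dict], f_out: TextIO, tag_separator: str = "; ") -> None:
    """Write scraped quotes as CSV, joining each tags list into a single cell."""
    writer = csv.DictWriter(f_out, fieldnames=["author", "text", "tags"])
    writer.writeheader()
    for row in rows:
        # Build a new dict so the original rows in `output` are left untouched
        writer.writerow({**row, "tags": tag_separator.join(row["tags"])})


if __name__ == "__main__":
    buffer = io.StringIO()  # stands in for open('quotes.csv', 'w', newline='', encoding='utf-8')
    write_quotes_csv([{"author": "Albert Einstein",
                       "text": "“Try not to become a man of success. Rather become a man of value.”",
                       "tags": ["adulthood", "success", "value"]}], buffer)
    print(buffer.getvalue())
```

In main(), you would call write_quotes_csv(output, f_out) inside the same with open(...) block instead of creating the DictWriter there.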

Here’s the full script:

from selenium import webdriver
# Using By to simplify selection
from selenium.webdriver.common.by import By
# The latter two will be used to make sure that needed elements are present
# before we begin scraping
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions
# Catching specific exceptions, so unrelated errors aren't silently swallowed
from selenium.common.exceptions import NoSuchElementException, TimeoutException
# For writing output to a CSV file
import csv

url = 'http://quotes.toscrape.com/js/'
# url = 'http://quotes.toscrape.com/js-delayed/'
timeout = 20  # seconds
output = []


def prepare_browser() -> webdriver.Chrome:
    # Initializing Chrome options
    chrome_options = webdriver.ChromeOptions()
    driver = webdriver.Chrome(options=chrome_options)
    return driver


def scrape(url: str, driver: webdriver.Chrome) -> None:
    driver.get(url)
    print(f"Opened: {driver.current_url}")
    try:
        WebDriverWait(driver, timeout).until(
            expected_conditions.presence_of_element_located((By.CLASS_NAME, 'quote'))
        )
        # Finding all elements with a class of 'quote' in the page
        quotes = driver.find_elements(By.CLASS_NAME, 'quote')
        for quote in quotes:
            text = quote.find_element(By.CLASS_NAME, 'text').text
            print(f'Text: {text}')
            author = quote.find_element(By.CLASS_NAME, 'author').text
            print(f'Author: {author}')
            tags = []
            for tag in quote.find_elements(By.CLASS_NAME, 'tag'):
                tags.append(tag.text)
            print(tags)
            output.append({
                'author': author,
                'text': text,
                'tags': tags,
            })
        try:
            elem_next = driver.find_element(By.CLASS_NAME, 'next').find_element(By.TAG_NAME, 'a')
            next_url = elem_next.get_attribute("href")
            scrape(next_url, driver)
        except NoSuchElementException:
            print('Next button not found. Quitting.')
    except TimeoutException:
        print('Timed out.')


def main() -> None:
    driver = prepare_browser()
    try:
        scrape(url, driver)
    finally:
        # Close the browser even if scraping fails
        driver.quit()
    print(output)
    # CSV
    field_names = ['author', 'text', 'tags']
    output_filename = 'quotes.csv'
    with open(output_filename, 'w', newline='', encoding='utf-8') as f_out:
        writer = csv.DictWriter(f_out, fieldnames=field_names)
        writer.writeheader()
        writer.writerows(output)


if __name__ == '__main__':
    main()