Main Web Scraping Challenges and Ways to Overcome Them

Learn how to deal with six common web scraping obstacles.

Web scraping can become a cumbersome process if you aren’t aware of the roadblocks along the way. From reappearing CAPTCHAs to IP address blocks, these challenges can hinder your efforts to gather data. And predictably so, since some webmasters go above and beyond to stop scrapers from accessing their domains.

Then there’s the technical side – websites undergo constant structural changes or experience unstable load speed during peak hours, meaning you’ll have to adapt your scraper to every small change.

So, the main question is – how do you go about these obstacles? Continue reading this guide and learn about the frequent web scraping challenges you’re likely to encounter and ways to bypass them.

Why Don’t Some Websites Allow Web Scraping?

There are ethical and privacy concerns about extracting data. Even though scraping publicly available data is generally legal, website administrators shun scrapers because: 1) they often don’t respect the rules imposed by the site, 2) they put too much load on the domain by scraping data in bulk, 3) they collect information that concerns user privacy. And, of course, no one wants other businesses (or individuals) to gain a competitive advantage.

Can you get in trouble for web scraping? Short answer – yes. From a legal standpoint, you can scrape publicly available data without issues, but even then you’ll remain in a legal gray zone. Contact a lawyer in case you aren’t sure what you can (or can’t) extract.

So, Which Websites Can You Scrape?

It’s always a good idea to practice in a sandbox before scraping at full scale – we’ve prepared a list of websites that are friendly toward web scrapers.

Web Scraping Challenges You Need to Be Aware of

1. Rate Limiting

Rate limiting is a popular approach to combat scrapers. The way it works is simple: a website limits the number of actions a user can perform from a single IP address. The limits vary depending on the website and can be based on 1) the number of operations performed within a certain period of time or 2) the amount of data you use.

To overcome rate limiting, use a rotating proxy, which automatically assigns you IPs from a large pool of addresses. Rotating proxies are especially effective when you need to make many connection requests quickly. If you don’t know where to get one, here’s a list of the best rotating proxy services.
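If your provider gives you a list of proxy addresses rather than a single rotating endpoint, you can rotate them yourself. Here’s a minimal sketch that uses only Python’s standard library; the proxy URLs and the `next_proxy` and `fetch` helpers are made up for illustration, so swap in your own details.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; replace with the ones from your provider.
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]

# cycle() yields the proxies in order and starts over after the last one.
_proxy_pool = itertools.cycle(PROXIES)


def next_proxy():
    """Return the next proxy URL from the pool."""
    return next(_proxy_pool)


def fetch(url, timeout=10):
    """Fetch a URL, routing each call through a different proxy."""
    proxy = next_proxy()
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Every call to `fetch` leaves through the next address in the list, so no single IP carries all of your requests.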

2. CAPTCHA Prompts

CAPTCHAs are another, more sophisticated approach to restricting web scraping. You can trigger a CAPTCHA by 1) making too many requests in a short time, 2) not covering your web scraper’s fingerprint properly, or 3) using low-quality proxies. CAPTCHAs can also be hard-coded into the HTML markup. If this is the case, the test will appear on certain pages like registration forms or checkouts no matter what you do.

You can try to either avoid CAPTCHA challenges or solve them. Avoiding them involves improving your web scraper’s fingerprint, mimicking human behavior, and using quality residential proxies. To get past a CAPTCHA that has already appeared, you can rotate your IP address and reload the page, or use a CAPTCHA solving service. You can learn more about the topic in our guide on bypassing CAPTCHAs.
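The "rotate and reload" approach can be sketched in a few lines. The example below is a rough heuristic, not a complete detector: it looks for markup fragments that common CAPTCHA widgets (reCAPTCHA, hCaptcha, Cloudflare Turnstile) typically embed in a page. The `fetch_page` argument is a hypothetical function of your own that downloads the page through a fresh IP address on every call.

```python
# Markup fragments that common CAPTCHA widgets embed in a page.
CAPTCHA_MARKERS = ("g-recaptcha", "h-captcha", "cf-turnstile")


def looks_like_captcha(html):
    """Heuristic: True if the page source contains a known CAPTCHA marker."""
    lowered = html.lower()
    return any(marker in lowered for marker in CAPTCHA_MARKERS)


def fetch_past_captcha(url, fetch_page, max_attempts=3):
    """Reload the page until no CAPTCHA marker is found or attempts run out.

    `fetch_page` is a hypothetical callable that returns the page HTML and
    is expected to use a fresh IP address on every call.
    """
    for _ in range(max_attempts):
        html = fetch_page(url)
        if not looks_like_captcha(html):
            return html
    return None  # still challenged; consider a CAPTCHA solving service
```

If the function returns `None`, reloading didn’t help, and that’s the point where a solving service comes in.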

3. IP Blocks

The worst-case scenario is when your IP address gets blacklisted because of bot-like activities. It mostly happens on well-protected websites like social media platforms.

The main reasons for an IP block are repeatedly ignoring request limits and being firmly labeled as a bot by the website’s protection mechanisms. Websites can block one IP or an entire range of addresses (typically a block of 256 IPs, known as a /24 subnet). The latter usually happens when you use datacenter proxies from related subnets.

Another reason is that your IP address comes from a location that the website restricts. It can be due to bans imposed by your country, or the webmaster may not want visitors from your location to access the site’s content.

You can overcome IP blocks using the same method as with rate limiting – by changing your IP address.
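Since websites can ban whole blocks of 256 addresses at once, it helps to know how many of your proxies share a subnet. Here’s a small sketch using Python’s built-in `ipaddress` module; the `group_by_subnet` helper and the sample addresses (taken from ranges reserved for documentation) are illustrative only.

```python
import ipaddress
from collections import defaultdict


def group_by_subnet(ips, prefix=24):
    """Group IPv4 addresses by subnet; a /24 subnet holds 256 addresses."""
    groups = defaultdict(list)
    for ip in ips:
        # strict=False lets us pass a host address instead of the network address.
        network = ipaddress.ip_network(f"{ip}/{prefix}", strict=False)
        groups[str(network)].append(ip)
    return dict(groups)


# Sample addresses from ranges reserved for documentation.
proxy_ips = ["203.0.113.5", "203.0.113.77", "198.51.100.9"]
subnets = group_by_subnet(proxy_ips)
```

If most of your addresses land in the same group, a single subnet ban could take out the whole pool at once.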

4. Website Structural Changes

Websites aren’t exactly set in stone, especially if you scrape large e-commerce sites. They often change the HTML markup, which risks breaking your web scraping script.

For example, a website can remove or rename certain classes or element IDs. This will cause your parser to stop working, because it will no longer be able to find the elements it’s supposed to extract. Google Search constantly changes its class names to make scraping it harder.

Unless you’re willing to build a resilient parser that uses machine learning to adapt, the best way to go about structural changes is to simply maintain your web scraper. Don’t take the code you’ve written for granted and update it once things break.
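Maintenance gets easier if you notice breakage quickly. One simple option, sketched below, is to validate every scraped record and flag those with missing fields, which is often the first sign that a class or ID has changed. The field names and the `missing_fields` helper are hypothetical; adjust them to your own data.

```python
# Hypothetical fields that every scraped record is expected to contain.
REQUIRED_FIELDS = ("title", "price")


def missing_fields(record, required=REQUIRED_FIELDS):
    """Return the required fields that are absent or empty in a record."""
    missing = []
    for name in required:
        value = record.get(name)
        if value is None or str(value).strip() == "":
            missing.append(name)
    return missing


record = {"title": "Desk lamp", "price": None}
problems = missing_fields(record)
if problems:
    print(f"Parser may be broken, missing fields: {problems}")
```

A sudden spike in such warnings usually means the markup has changed and your selectors need an update.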

5. JavaScript-Heavy Websites

Facebook, Twitter, single-page applications, and similar interactive websites are rendered in the browser using JavaScript (JS). This brings useful functionality like endless scrolling and lazy loading. However, it also spells bad news for web scrapers, since content only appears once the JavaScript code runs.

Regular HTML extraction tools like Python’s Requests library don’t have the functionality to deal with dynamic pages. Several years ago, most Twitter scrapers broke because they couldn’t even pass the initial loading screen after an update. A headless browser, on the other hand, will let you fully render the target website and extract the data you need.
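As an illustration, here’s a minimal sketch of rendering a page with a headless browser. It assumes you use Playwright for Python (one of several headless browser tools) and have installed it with `pip install playwright` followed by `playwright install chromium`. The `render_page` helper name is our own.

```python
def render_page(url, timeout_ms=30_000):
    """Return the HTML of a page after its JavaScript has run."""
    # Imported inside the function so the module still loads
    # on machines where Playwright is not installed.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as playwright:
        browser = playwright.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            # "networkidle" waits until network activity has settled,
            # which gives scripts a chance to load the content.
            page.goto(url, wait_until="networkidle", timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()
```

The returned HTML can then go to the same parser you’d use for a static page.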

6. Slow Load Speed

When a website receives a large number of requests within a short timeframe, its load speed might slow down and become unstable. In some cases, your requests may simply time out. If you’re browsing manually, you can always refresh the page. A web scraper, however, will be interrupted unless it knows how to handle such a situation.

A way to overcome this roadblock is to add retry functionality to your code, which will automatically reload the page if it identifies that the website failed to serve the request. Just remember not to go overboard with this to avoid further overloading the server.
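A retry loop along these lines could look like the sketch below. It uses the standard library only, caps the number of attempts, and doubles the waiting time after each failure so the server gets some room to recover. The function names and default values are assumptions for illustration.

```python
import time
import urllib.request


def _default_fetch(url, timeout):
    """Download a page with the standard library."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def fetch_with_retries(url, fetch=_default_fetch, max_attempts=3,
                       base_delay=1.0, timeout=10, sleep=time.sleep):
    """Retry a failed request a limited number of times with growing pauses."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url, timeout)
        except OSError:
            # urllib's URLError and socket timeouts are subclasses of OSError.
            # This also retries HTTP errors such as 404, which you may
            # prefer to treat as final in a real scraper.
            if attempt == max_attempts:
                raise
            # Wait 1s, 2s, 4s, ... before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
```

Keeping `max_attempts` low is what stops the scraper from hammering a server that’s already struggling.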

Web Scraping Best Practices

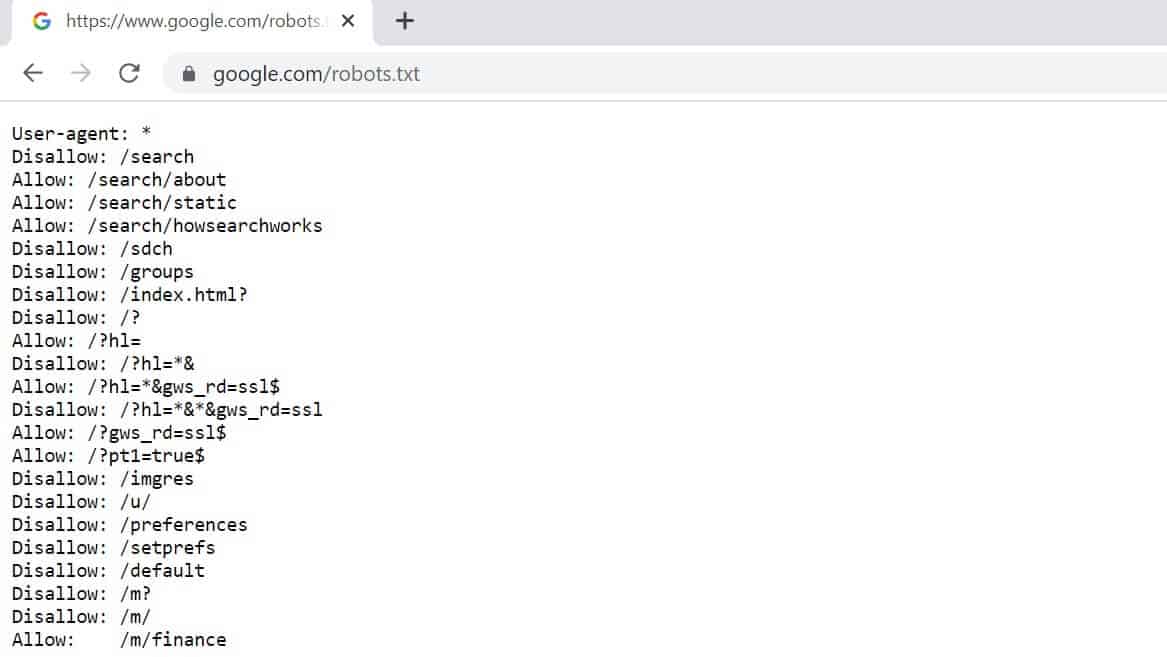

First and foremost, respect the website you’re scraping. You should read data privacy regulations and respect the website’s terms of service. Also, most websites have a robots.txt file, which tells you which parts of the target site automated clients are allowed to access.
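Python’s standard library can read robots.txt rules for you. The sketch below parses a made-up robots.txt and checks two URLs against it; the `is_allowed` helper, the user agent name, and the rules themselves are illustrative.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Check whether robots.txt rules permit this user agent to fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "my-scraper", "https://example.com/products/1"))
print(is_allowed(ROBOTS_TXT, "my-scraper", "https://example.com/private/data"))
```

For a live website, you’d call `set_url()` with the address of its robots.txt and then `read()` instead of passing the rules as text.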

Also, be smart about the number of requests you send. Keeping random intervals between your connection requests is a good practice, as it makes your traffic look more like a real person’s. You should also keep your distance during peak hours: millions of connection requests burden the servers and, predictably, slow down your scraper too.
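Random intervals take only a few lines to add. Here’s a minimal sketch; the `polite_pause` name and the one-to-four-second range are arbitrary choices, so tune them to the website you’re working with.

```python
import random
import time


def polite_pause(min_seconds=1.0, max_seconds=4.0, sleep=time.sleep):
    """Sleep for a random interval and return how long the pause was."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay
```

Call `polite_pause()` between requests in your scraping loop so that they don’t arrive at a fixed, machine-like rhythm.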