The Best Node.js Libraries for Web Scraping in 2026

Web scraping with Node.js isn’t that difficult with the right set of tools. We made it easy for you to find the best Node.js library for your project.

Node.js is the preferred option when it comes to web scraping dynamic websites. The runtime became popular because its headless browser libraries can fully render a website by driving a real browser. Node.js can also be used to gather data from static pages that don’t need JavaScript rendering. It has many great tools that are performant and relatively easy to use.

In this guide, you’ll learn why Node.js is one of the first choices for web scraping. You’ll also find the top-performing libraries along with their strengths and weaknesses. If you’re in a rush, jump straight to the end and see a comparison table.

Why Choose Node.js Libraries for Web Scraping?

Node.js has many great libraries for handling JavaScript-rendered websites that rely on elements like endless scrolling or infinite loading.

The runtime has several headless browser libraries that are highly scalable. You can handle multiple concurrent connections and requests in parallel because of Node.js’ non-blocking I/O model. Most libraries are suitable for dealing with large amounts of data or scraping multiple pages without losing performance.
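
To make the non-blocking model more concrete, here’s a minimal sketch of running several requests at once while capping how many are in flight. The helper name `mapWithConcurrency` and the `fakeFetch` stand-in (a timer instead of a real HTTP call) are assumptions made for illustration; in a real scraper you’d pass your own request function.

```javascript
// Run an async task over many items with at most `limit` tasks in flight.
// Node.js does not block while a request is pending, so several requests
// can overlap on a single thread.
async function mapWithConcurrency(items, limit, task) {
  const results = new Array(items.length);
  let next = 0; // index of the next item to pick up

  // Each worker keeps pulling the next unprocessed item until none are left.
  async function worker() {
    while (next < items.length) {
      const index = next++;
      results[index] = await task(items[index], index);
    }
  }

  const workerCount = Math.min(limit, items.length);
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

// Hypothetical stand-in for a real HTTP request: resolves after a short delay.
function fakeFetch(url) {
  return new Promise((resolve) => {
    setTimeout(() => resolve(`<html>${url}</html>`), 50);
  });
}

// Example: "download" five pages, three at a time.
const urls = [1, 2, 3, 4, 5].map((n) => `https://example.com/page/${n}`);
mapWithConcurrency(urls, 3, fakeFetch).then((pages) => {
  console.log(`Downloaded ${pages.length} pages`);
});
```

Results come back in the same order as the input, regardless of which request finishes first. The limit is worth keeping: sending everything at once is an easy way to get blocked by the target.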

Some Node.js libraries include cross-browser support, so you can run your scripts in a browser of your choice. You can also integrate proxies with the libraries and find different packages for spoofing browser fingerprints.

In addition, Node.js is easy to learn if you know how to handle JavaScript or CSS. Also, it requires writing less code compared to other programming languages like Ruby when scraping dynamic elements. You can access most tools via npm (Node Package Manager), simplifying installation.

Since Node.js is a popular choice for web scraping, you’ll find that most libraries or frameworks have large communities for support. Many of these communities actively contribute to library updates and troubleshooting, ensuring you have access to the latest features and fixes. So, even though it’s not as beginner-friendly as the Python programming language, you won’t lack answers.

However, I wouldn’t say that Node.js tools are best for scraping static websites because you’ll need to put in a bit more effort than with Python.

Popular Node.js Libraries for Web Scraping

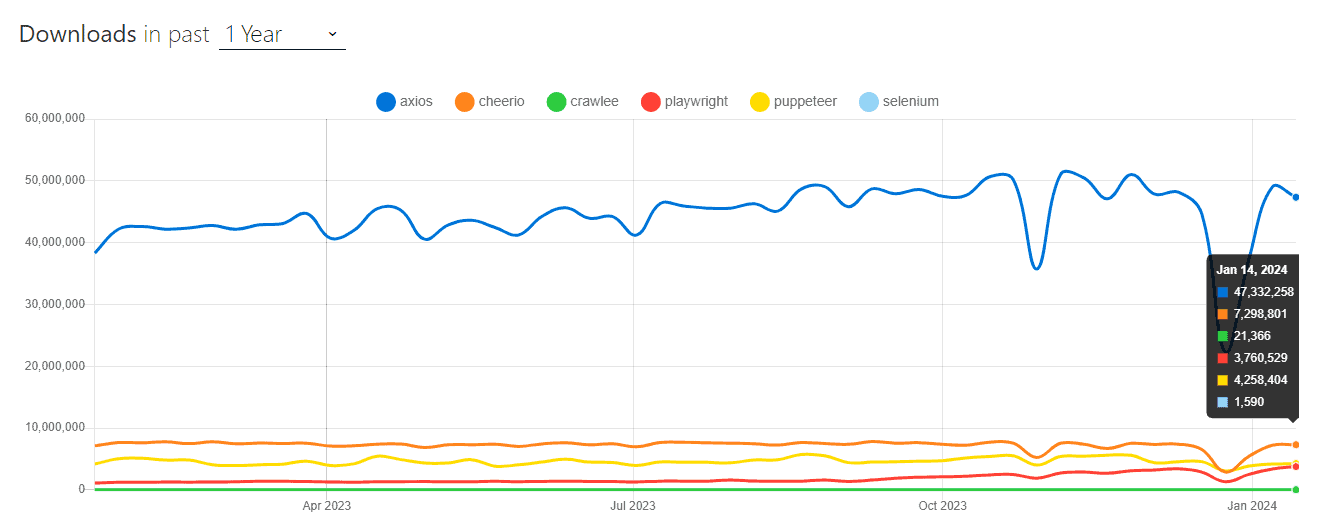

Here’s how the six libraries compare by user downloads in past year:

1. axios

axios is a very popular HTTP client for making requests with Node.js. It’s primarily used for sending asynchronous requests to REST endpoints, but you can also download the page’s HTML.

Usually, axios is used when you don’t need to automate your browser. It isn’t a complete scraping tool on its own: it only downloads data, so you need other libraries to process it. For example, the tool can be paired with Cheerio for a full web scraping experience – downloading and cleaning data.

axios has cross-browser capabilities – it supports Chrome, Firefox, Safari, Opera, and Internet Explorer. It also supports standard HTTP request methods like GET or POST. Additionally, the library automatically handles JSON data – it serializes request bodies to JSON and parses JSON responses, so you get a ready-to-use object back.
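
As a rough illustration of downloading a page’s HTML, here’s a small sketch. The function name `fetchHtml` and the User-Agent string are made up for this example. The client is passed in as a parameter, so the snippet works with axios itself or with any object that exposes the same `get(url, config)` call.

```javascript
// Download a page's HTML with an axios-style client.
// `client` must expose get(url, config) and resolve to an object with a
// `data` property, which is what axios.get() does.
async function fetchHtml(client, url) {
  const response = await client.get(url, {
    // Illustrative value only; pick a User-Agent that suits your project.
    headers: { 'User-Agent': 'Mozilla/5.0 (compatible; example-scraper/1.0)' },
    timeout: 10000, // give up after 10 seconds
  });
  return response.data; // for an HTML page, this is the markup as a string
}
```

With axios installed (`npm install axios`), a call could look like `fetchHtml(require('axios'), 'https://example.com')`. Note that axios rejects the promise on non-2xx status codes by default, so you’d typically wrap the call in `try`/`catch`.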

The library can be used in both browser-based and Node.js environments. It detects where it’s running and picks the matching request mechanism: XMLHttpRequest in the browser and the built-in http module in Node.js. This matters in web scraping because your code can run in different contexts. For example, if you’re using axios with the headless browser library Puppeteer, it can adjust its behavior and run inside the browser.

Even though axios has confusing documentation, the HTTP client is pretty easy to use.

2. Cheerio

Cheerio is a Node.js data parsing library for extracting data from HTML and XML documents you’ve downloaded with an HTTP client. It’s usually used together with axios and is built specifically for web scraping purposes – to be exact, data parsing.

If you’re familiar with jQuery, getting the hang of Cheerio won’t take much time; it implements core jQuery features, so the syntax is very similar. The library is built on top of parse5 for parsing HTML and htmlparser2 for XML documents. This makes Cheerio very flexible.
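
To show what the jQuery-like syntax looks like in practice, here’s a short sketch that pulls every link out of a page. The function name `extractLinks` is an assumption for this example; `$` is expected to be a loaded Cheerio document, that is, whatever `cheerio.load(html)` returns.

```javascript
// Collect the text and target of every link on a page.
// `$` is a loaded Cheerio document, i.e. the value returned by cheerio.load(html).
function extractLinks($) {
  return $('a[href]')
    .map((index, element) => ({
      text: $(element).text().trim(),
      href: $(element).attr('href'),
    }))
    .get(); // convert the Cheerio collection into a plain array
}
```

With Cheerio installed (`npm install cheerio`), you’d call it as `extractLinks(cheerio.load(html))`, where `html` is the string you downloaded with your HTTP client.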

Cheerio is a good tool for beginners and developers working with static pages. It uses fewer resources than headless browser libraries, so you’ll need less computing power.

The library has mediocre documentation, but since it’s easy to set up, you shouldn’t encounter many problems. If you do, an active community provides support through forums and GitHub discussions.

3. Selenium

When talking about rendering JavaScript, Selenium is probably the first name that comes to mind. The library is a veteran in the industry – it dates back to 2004 – though the tool’s primary purpose is browser automation.

Selenium can control a headless browser programmatically. For example, it can take screenshots, move the mouse, and more. The library imitates human behavior, so it can be used with well-protected targets like Amazon or eBay. What’s more, packages like selenium-stealth (built for Selenium’s Python bindings) help to avoid fingerprint detection. If you properly set it up, you can often avoid triggering reCAPTCHAs.
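
Here’s a small sketch of what programmatic control can look like with the selenium-webdriver package for Node.js. The function name `capturePage` is made up for this example, and the driver is passed in rather than created inside, so browser setup stays up to you.

```javascript
// Load a page and capture its title plus a screenshot.
// `driver` is a selenium-webdriver WebDriver, e.g. the value resolved by
// new Builder().forBrowser('chrome').build().
async function capturePage(driver, url) {
  await driver.get(url);
  const title = await driver.getTitle();
  // takeScreenshot() resolves to a base64-encoded PNG.
  const screenshotBase64 = await driver.takeScreenshot();
  return { title, screenshot: Buffer.from(screenshotBase64, 'base64') };
}
```

The returned `screenshot` is a Buffer, so you could save it with `fs.writeFileSync('page.png', result.screenshot)`. Remember to call `driver.quit()` when you’re done; otherwise, the browser process keeps running.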

The library can automate all major browsers like Chrome, Firefox, and Microsoft Edge. Besides running with Node.js, Selenium can be used with other programming languages such as Python, Java, and Ruby.

However, Selenium is very slow compared to the combination of axios and Cheerio or even Playwright. It controls the browser through a separate web driver, which takes up a lot of resources and reduces speed.

You won’t lack documentation or community support, but a word of warning: Selenium has a steep learning curve mainly because it wasn’t built for web scraping purposes.

4. Puppeteer

Puppeteer is a library for controlling a headless Chrome browser. It was developed in 2018 by Google. Today, the tool is maintained by the Chrome Browser Automation team.

The library is designed to automate browser interactions like filling out forms, moving the mouse, taking screenshots, and more. However, for now, it works well only with Chrome and Chromium; the team is experimenting with support for Firefox and Microsoft Edge.

You can integrate Puppeteer with proxies to hide your real location and IP address, as well as use plugins like puppeteer-extra-plugin-stealth to mask your browser fingerprint. Such additional integrations help you to look more like a real user.

Puppeteer uses Chromium’s built-in DevTools Protocol that allows you to control the browser directly. The tool is asynchronous by default – you can handle concurrent requests and tackle multiple pages in parallel. It’s also relatively light on resources.
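
The sketch below shows one way to tackle multiple pages in parallel with Puppeteer. The function name `scrapeTitles` is an assumption for this example, and the browser is passed in, so launching and closing it is left to the caller.

```javascript
// Open several pages in one browser and read their titles in parallel.
// `browser` is a Puppeteer Browser, i.e. the value resolved by puppeteer.launch().
async function scrapeTitles(browser, urls) {
  return Promise.all(
    urls.map(async (url) => {
      const page = await browser.newPage();
      try {
        // Wait until network activity has mostly settled so that
        // JavaScript-rendered content has a chance to appear.
        await page.goto(url, { waitUntil: 'networkidle2' });
        return { url, title: await page.title() };
      } finally {
        await page.close(); // free the tab even if navigation fails
      }
    })
  );
}
```

With Puppeteer installed, usage could look like `const browser = await puppeteer.launch();`, then `await scrapeTitles(browser, urls);`, and finally `await browser.close();`. For long URL lists, you’d want to cap the number of open tabs, since each one costs memory.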

The library is much easier to use than Selenium; there’s no separate web driver to set up, and you can write your scripts in any Integrated Development Environment (IDE). Why should you care? Well, this way, you’ll spend less time on setup and write less code.

Even though Puppeteer is a “young” tool, it has a growing community and well-drafted documentation.

5. Playwright

Playwright’s primary purpose is end-to-end web and app testing, but it’s a powerful tool in web scraping as well. The history of the tool is quite interesting. Members of the team behind another tool – Puppeteer – moved to Microsoft to create a library that works with all major rendering engines.

Unlike Puppeteer, which mainly works with Chromium, Playwright supports three major browser groups – Chromium, Firefox, and WebKit. It’s also flexible regarding programming languages: you can use it with Python, JavaScript, .NET, TypeScript, and Java.

You can also deal with browser fingerprinting in Playwright by using community packages like playwright-extra.

If you need to mimic different sessions or users, you can isolate them in separate browser contexts, each with its own cookies and storage. Playwright also auto-waits for elements to be ready before interacting with them.
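
In Playwright, session separation is done with browser contexts: each context keeps its own cookies and storage. Here’s a minimal sketch of visiting one URL as several independent users. The function name `visitAsSeparateUsers` is made up for this example, and the browser is passed in by the caller.

```javascript
// Visit the same URL as several independent "users".
// `browser` is a Playwright Browser, e.g. the value resolved by chromium.launch().
async function visitAsSeparateUsers(browser, url, userCount) {
  const cookieCounts = [];
  for (let i = 0; i < userCount; i++) {
    // Each context has its own cookies, cache, and local storage.
    const context = await browser.newContext();
    try {
      const page = await context.newPage();
      await page.goto(url);
      const cookies = await context.cookies();
      cookieCounts.push(cookies.length);
    } finally {
      await context.close(); // also closes the pages that belong to the context
    }
  }
  return cookieCounts;
}
```

Because nothing is shared between contexts, a cookie set for one “user” never shows up for another. Creating a context is also much cheaper than launching a new browser for every session.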

Playwright is asynchronous by default, but some of its language bindings, such as Python, also offer a synchronous API. So, you can easily manage multiple requests in parallel or deal with a single request at a time.

Compared to Selenium, Playwright is much lighter on resources, mainly due to its architecture. The library keeps a single WebSocket connection open for the whole session and sends all commands over it, instead of making a separate HTTP request for each command.

While Playwright’s community can’t compare to Selenium’s, it’s growing fast. You’ll also find extensive documentation that includes examples and guides.

6. Crawlee

Unlike some alternatives on this list, Crawlee is specifically designed for web scraping, crawling, and browser automation. It’s the newest library on this list, launched by Apify in 2022.

The framework is built on some of the above-listed libraries – Cheerio, Playwright, and Puppeteer. This gives Crawlee additional features, for example, Cheerio’s fast parsing. Additionally, it uses a single interface for HTTP requests and headless browsing.

Crawlee is written in TypeScript, but you don’t necessarily need to use it – you can still go with JavaScript. The tool allows you to run multiple headless browsers and crawl pages in parallel.

In terms of avoiding browser fingerprinting, Crawlee is very powerful. It can automatically generate headers, manage sessions, and mimic TLS fingerprints. Crawlee has integrated proxy support and automatic rotation based on success rate. Some other features are error handling, routing, retries, and HTTP/2 support.
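
To give a feel for what rotation based on success rate means, here’s a conceptual sketch in plain JavaScript. It isn’t Crawlee’s implementation or API: the `ProxyPool` class, its methods, and the proxy URLs are all hypothetical and only illustrate the idea of preferring proxies that have been working.

```javascript
// Conceptual sketch of success-rate-based proxy rotation.
// This is NOT Crawlee's code; the class and method names are hypothetical.
class ProxyPool {
  constructor(proxyUrls) {
    // Track how many requests each proxy handled and how many succeeded.
    this.stats = new Map(proxyUrls.map((url) => [url, { ok: 0, total: 0 }]));
  }

  // Smoothed success rate: an unused proxy starts at 0.5 instead of 0 or 1.
  score(url) {
    const { ok, total } = this.stats.get(url);
    return (ok + 1) / (total + 2);
  }

  // Return the proxy with the best score (the earliest one wins ties).
  pick() {
    let best = null;
    for (const url of this.stats.keys()) {
      if (best === null || this.score(url) > this.score(best)) best = url;
    }
    return best;
  }

  // Record the outcome of a request made through `url`.
  report(url, succeeded) {
    const entry = this.stats.get(url);
    entry.total += 1;
    if (succeeded) entry.ok += 1;
  }
}

const pool = new ProxyPool(['http://proxy-a.example:8000', 'http://proxy-b.example:8000']);
pool.report('http://proxy-a.example:8000', false); // proxy A got blocked
console.log(pool.pick()); // proxy B now has the better score
```

A production setup would usually add some randomness and let failed proxies recover over time. The sketch always picks the top scorer to keep the logic easy to follow.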

Like most headless browser tools, Crawlee lets you download data in HTML, PDF, JPG, or PNG, and it works with major browsers like Chrome and Firefox as well as WebKit.

Due to Crawlee’s novelty, it doesn’t have a very large community of users yet, but it’s growing steadily. Most problems are solved on GitHub, Discord, and Stack Overflow. You can also find extensive documentation.

Node.js Web Scraping Libraries: A Comparison

Now, let’s see how these tools compare in terms of features and performance.

| | axios | Cheerio | Selenium | Puppeteer | Playwright | Crawlee |
| --- | --- | --- | --- | --- | --- | --- |
| Purpose | HTTP client | Parser | Headless browser library | Headless browser library | Headless browser library | Framework for headless and request-based scraping |
| Browser support | Chrome, Firefox, Safari, Opera, and Internet Explorer | – | Chrome, Firefox, and Microsoft Edge | Chrome and Chromium. Limited support for Firefox and Microsoft Edge | Chromium, Firefox, and WebKit | Chrome, Firefox, and WebKit |
| JavaScript rendering | No | No | Yes | Yes | Yes | Yes |
| Difficulty setting up | Easy | Easy | Difficult | Medium | Medium | Medium |
| Learning curve | Easy | Easy | Difficult | Medium | Medium | Medium |
| Performance | Fast | Fast | Slow | Mediocre | Mediocre | Fast |
| GitHub stars (January 2024 data) | 103k | 27k | 29k | 86k | 58k | 11k |
| Community | Large | Mediocre | Largest | Large | Mediocre | Small |