The Best Python HTML Parsers

Scraped web data is of little use if people can’t read and analyze it. That’s where HTML parsers come in: they extract only the meaningful pieces from the raw downloaded data and clean them up for better readability.

Python is one of the easiest programming languages to learn, and it’s also great for web scraping thanks to its many libraries that expand its capabilities. For example, there are multiple HTML parser libraries available, so it can be tricky to choose the one best suited for your scraping project. In this article, you’ll find everything you need to know about Python HTML parsers: what they are, how they work, and which ones are the easiest to set up and use.

What is HTML Parsing?

HTML parsing refers to extracting only the relevant information from HTML code. Raw HTML data, which includes markup tags, errors, and other irrelevant information, is cleaned, structured, and converted into meaningful data points or content.

For example, let’s say you really like this article and want to extract the list of the best parsers for offline reading. While you could download the site as an HTML file, it would be tricky to read because of all the HTML tags. Instead, by using a web scraper to extract the list below and an HTML parser to process it, you would get only the relevant content in a clean format.

Why Parse HTML Data?

Parsing makes HTML data more readable by removing unnecessary or broken information. To illustrate what HTML parsing does, let’s compare raw HTML with parsed data.

Below is the code for a simple HTML website:

```html
<html>
<head>
  <title>My Website</title>
</head>
<body>
  <h1>Welcome to My Website</h1>
  <p>This is an example paragraph.</p>
  <a href="https://example.com">Click here</a>
  <ul>
    <li>Item 1</li>
    <li>Item 2</li>
    <li>Item 3</li>
  </ul>
</body>
</html>
```

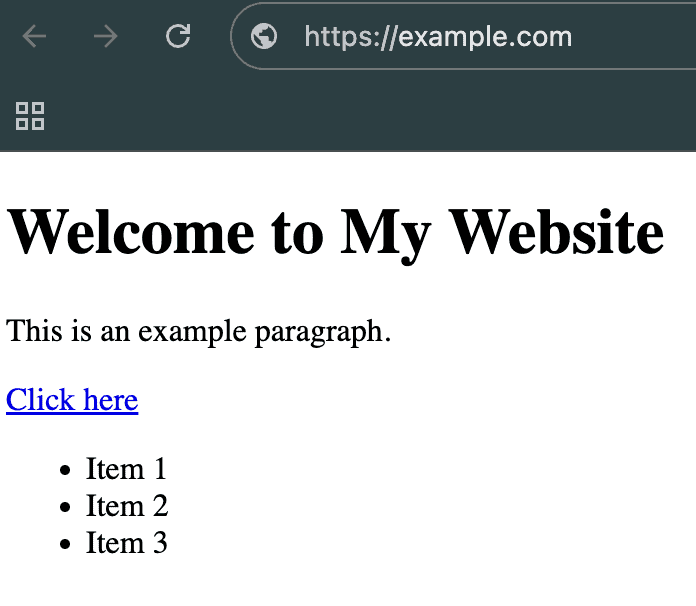

Your browser uses the code, and “translates” it into something that more visually appealing and functional for humans. Here’s how your browser would render this code visually.

As you can see, the code contains HTML elements such as <html> and <body>. While these elements are necessary for browsers to display the website correctly, they’re not particularly useful for humans. What we’re interested in is the website’s name, the link, and the data in bullet points.

By using a Python HTML parser like BeautifulSoup, we can remove irrelevant pieces of information and convert the raw HTML into structured, readable data like this:

```text
Title: My Website
H1 Heading: Welcome to My Website
Paragraph: This is an example paragraph.
Link: https://example.com
List Items: ['Item 1', 'Item 2', 'Item 3']
```

In this case, the parser removed the HTML elements and structured the most important data points. The result is shorter, the list items are neatly ordered, and the link URL is retained, though the ‘Click here’ text was dropped. Importantly, no relevant information was lost. This structured data is much easier for us to read and can be further manipulated or analyzed.
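To see what a parser does under the hood, the output above can be reproduced with no third-party library at all. Here’s a minimal sketch using Python’s built-in html.parser module (the same parser BeautifulSoup uses when you pass 'html.parser'). The PageSummaryParser class and the TEXT_FIELDS mapping are hypothetical names for illustration, and the parser only tracks the innermost open tag, so treat it as a teaching aid rather than something for real-world pages.

```python
from html.parser import HTMLParser

html_code = """
<html>
<head><title>My Website</title></head>
<body>
<h1>Welcome to My Website</h1>
<p>This is an example paragraph.</p>
<a href="https://example.com">Click here</a>
<ul><li>Item 1</li><li>Item 2</li><li>Item 3</li></ul>
</body>
</html>
"""

# Hypothetical mapping from the tags we care about to the labels we print
TEXT_FIELDS = {"title": "Title", "h1": "H1 Heading", "p": "Paragraph"}


class PageSummaryParser(HTMLParser):
    """Collects a few labelled fields; everything else in the markup is ignored."""

    def __init__(self):
        super().__init__()
        self.data = {"Title": None, "H1 Heading": None, "Paragraph": None,
                     "Link": None, "List Items": []}
        self._current_tag = None  # innermost open tag; nested inline tags are not tracked

    def handle_starttag(self, tag, attrs):
        self._current_tag = tag
        if tag == "a" and self.data["Link"] is None:
            # attrs is a list of (name, value) pairs: keep the URL, drop the link text
            self.data["Link"] = dict(attrs).get("href")

    def handle_endtag(self, tag):
        self._current_tag = None

    def handle_data(self, text):
        text = text.strip()
        if not text:
            return  # skip whitespace between tags
        if self._current_tag == "li":
            self.data["List Items"].append(text)
        elif self._current_tag in TEXT_FIELDS:
            key = TEXT_FIELDS[self._current_tag]
            if self.data[key] is None:
                self.data[key] = text


parser = PageSummaryParser()
parser.feed(html_code)
parser.close()
for key, value in parser.data.items():
    print(f"{key}: {value}")
```

Writing a parser like this by hand quickly gets tedious once pages contain nested or broken markup, which is exactly the work the libraries below take off your hands.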

Now, let’s take a look at the best HTML parsers to use with your Python scraper.

The Best Python HTML Parsers of 2026

1. BeautifulSoup

The most popular Python parsing library.

BeautifulSoup is one of the most popular Python libraries for parsing. It’s lightweight, versatile, and relatively easy to learn.

BeautifulSoup is a powerful HTML and XML parser that converts raw documents into parse trees (hierarchical Python objects that mirror the nested structure of the document’s tags), from which you can extract the information you need. You can also navigate, search, and modify these trees as you see fit. BeautifulSoup is also excellent at handling poorly formatted or broken HTML: together with the underlying parser it uses (Python’s built-in html.parser, lxml, or html5lib), it can interpret malformed markup and still build a usable tree.

Since BeautifulSoup is a library for parsing and manipulating HTML, it doesn’t work alone. To fetch static pages, you’ll need an HTTP client like requests. For dynamic, JavaScript-rendered content, you’ll have to use a headless browser like Selenium or Playwright to render the page before parsing it.
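Here’s a minimal sketch of that division of labor for a static page, assuming both requests and beautifulsoup4 are installed. The fetch_html and extract_links helpers are hypothetical names: requests downloads the page, and BeautifulSoup turns it into (link text, absolute URL) pairs.

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


def fetch_html(url, timeout=10):
    """Download a static page with requests; raises an error for 4xx/5xx responses."""
    response = requests.get(url, timeout=timeout)
    response.raise_for_status()
    return response.text


def extract_links(html_text, base_url=""):
    """Return (link text, absolute URL) pairs for every <a> tag that has an href."""
    soup = BeautifulSoup(html_text, "html.parser")
    return [
        # urljoin turns relative hrefs like "/docs" into full URLs
        (a.get_text(strip=True), urljoin(base_url, a["href"]))
        for a in soup.find_all("a", href=True)
    ]
```

To use it on a live page, pass the same URL to both helpers, for example extract_links(fetch_html(url), base_url=url). Keeping fetching and parsing in separate functions also means the parsing step can be tested on saved HTML without any network access.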

The library is very popular and well-maintained, so you’ll find an active community and extensive documentation to help you out.

To install BeautifulSoup, all you have to do is run pip install beautifulsoup4 in your terminal.

Let’s see how to use BeautifulSoup to parse our simple HTML website.

```python
from bs4 import BeautifulSoup

html_code = """
<html>
<head>
  <title>My Website</title>
</head>
<body>
  <h1>Welcome to My Website</h1>
  <p>This is an example paragraph.</p>
  <a href="https://example.com">Click here</a>
  <ul>
    <li>Item 1</li>
    <li>Item 2</li>
    <li>Item 3</li>
  </ul>
</body>
</html>
"""

# Build a parse tree using Python's built-in HTML parser
soup = BeautifulSoup(html_code, 'html.parser')

# Pull individual values out of the tree
title = soup.title.string
h1 = soup.h1.string
paragraph = soup.p.string
link_text = soup.a.string
link_href = soup.a['href']
list_items = [li.string for li in soup.find_all('li')]

print("Title:", title)
print("Heading (h1):", h1)
print("Paragraph:", paragraph)
print("Link Text:", link_text)
print("Link Href:", link_href)
print("List Items:", list_items)
```

Here’s what the final parsed results look like when you run the script:

```text
Title: My Website
Heading (h1): Welcome to My Website
Paragraph: This is an example paragraph.
Link Text: Click here
Link Href: https://example.com
List Items: ['Item 1', 'Item 2', 'Item 3']
```
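Beyond pulling out single values, BeautifulSoup lets you search, navigate, and modify the parse tree, and it copes with tags that are never closed. The sketch below uses a small, deliberately malformed snippet (a hypothetical example, not from a real site) to show find_all() and select_one() for searching, parent and find_next_sibling() for navigation, and assigning to .string plus decompose() for modification.

```python
from bs4 import BeautifulSoup

# Deliberately sloppy markup: the <p> and <b> tags are never closed
messy_html = """
<ul id="menu">
  <li>Home</li>
  <li class="active">Blog</li>
</ul>
<p>Read <b>more
"""

soup = BeautifulSoup(messy_html, "html.parser")

# Search: by tag name, or with a CSS selector via select_one()
items = [li.get_text() for li in soup.find_all("li")]
active = soup.select_one("li.active").get_text()

# Navigate: from the first <li> up to its parent and across to its next <li> sibling
parent_id = soup.li.parent["id"]
next_item = soup.li.find_next_sibling("li").get_text()

# Modify: replace a tag's text and remove an element from the tree
soup.select_one("li.active").string = "Articles"
soup.b.decompose()

print(items, active, parent_id, next_item)
print(soup.p)
```

Even though the snippet never closes <p> or <b>, the parser still builds a tree you can query and edit like any well-formed document.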

2. lxml

An efficient parsing library for HTML and XML documents.

The lxml library is one of the fastest options for parsing raw HTML and XML data, which makes it a good fit for large HTML documents.

lxml is a Python binding for the C libraries libxml2 and libxslt, which do the actual HTML and XML processing. It turns raw data into element objects you can navigate using XPath or CSS selectors (CSS selector support requires the separate cssselect package). However, since it’s a static parser, you’ll need a headless browser for dynamic content. And while lxml is very fast, it can be harder to learn if you’re not familiar with XPath queries.
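If XPath is new to you, a few patterns cover most scraping needs. Here’s a minimal sketch on a hypothetical product snippet (the markup and values are made up for illustration) showing attribute filters, a positional predicate, and a numeric comparison, all with lxml’s xpath() method.

```python
from lxml import html

snippet = """
<div class="catalog">
  <div class="product"><h2>Laptop</h2><span class="price">999</span></div>
  <div class="product"><h2>Mouse</h2><span class="price">25</span></div>
</div>
"""

tree = html.fromstring(snippet)

# Attribute filter: text of every <h2> inside a product block
names = tree.xpath('//div[@class="product"]/h2/text()')

# XPath returns strings, so convert prices to numbers in Python
prices = [int(p) for p in tree.xpath('//span[@class="price"]/text()')]

# Positional predicate: the second product on the page (XPath counts from 1)
second_name = tree.xpath('(//div[@class="product"])[2]/h2/text()')[0]

# Numeric comparison inside a predicate: products priced under 100
cheap = tree.xpath('//div[@class="product"][span[@class="price"] < 100]/h2/text()')

print(names, prices, second_name, cheap)
```

Pushing filters like the price comparison into the XPath expression keeps the Python side short, which is one reason lxml pairs well with large documents.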

Install lxml by running pip install lxml in your terminal, then add from lxml import html to your scraping project.

Here’s how lxml would parse a simple website:

```python
from lxml import html

html_code = """
<html>
<head>
  <title>My Website</title>
</head>
<body>
  <h1>Welcome to My Website</h1>
  <p>This is an example paragraph.</p>
  <a href="https://example.com">Click here</a>
  <ul>
    <li>Item 1</li>
    <li>Item 2</li>
    <li>Item 3</li>
  </ul>
</body>
</html>
"""

# Parse the string into an element tree
tree = html.fromstring(html_code)

# xpath() always returns a list, so [0] takes the first match
title = tree.xpath('//title/text()')[0]
h1 = tree.xpath('//h1/text()')[0]
paragraph = tree.xpath('//p/text()')[0]
link_text = tree.xpath('//a/text()')[0]
link_href = tree.xpath('//a/@href')[0]
list_items = tree.xpath('//ul/li/text()')

print("Title:", title)
print("Heading (h1):", h1)
print("Paragraph:", paragraph)
print("Link Text:", link_text)
print("Link Href:", link_href)
print("List Items:", list_items)
```

Here’s what the parsed results would look like:

```text
Title: My Website
Heading (h1): Welcome to My Website
Paragraph: This is an example paragraph.
Link Text: Click here
Link Href: https://example.com
List Items: ['Item 1', 'Item 2', 'Item 3']
```

3. PyQuery

Library for parsing HTML and XML documents with jQuery syntax.

PyQuery is another Python library for parsing and manipulating HTML and XML documents. Its syntax mirrors jQuery’s, so it’s a good choice if you’re already familiar with jQuery.

PyQuery is quite intuitive: CSS-style selectors make it easy to navigate the document and extract or modify HTML and XML content. It works similarly to BeautifulSoup and lxml (in fact, PyQuery is built on top of lxml): you load an HTML or XML document into a document tree and interact with it using jQuery-style commands, so the key difference is the syntax. PyQuery also has many helper functions, so you won’t have to write as much code yourself.

The library is efficient for static content, but it does not natively handle dynamic content – it needs headless browsers to render JavaScript-driven pages before parsing the content.

To install PyQuery, run pip install pyquery in your terminal, and add from pyquery import PyQuery as pq in your project to use it.

Here’s an example of how to use PyQuery to parse a simple HTML document:

```python
from pyquery import PyQuery as pq

html_code = """
<html>
<head>
  <title>My Website</title>
</head>
<body>
  <h1>Welcome to My Website</h1>
  <p>This is an example paragraph.</p>
  <a href="https://example.com">Click here</a>
  <ul>
    <li>Item 1</li>
    <li>Item 2</li>
    <li>Item 3</li>
  </ul>
</body>
</html>
"""

# Load the document; doc(...) then works like jQuery's $(...)
doc = pq(html_code)

title = doc("title").text()
h1 = doc("h1").text()
paragraph = doc("p").text()
link_text = doc("a").text()
link_href = doc("a").attr("href")
# items() yields each match wrapped as its own PyQuery object
list_items = [li.text() for li in doc("ul li").items()]

print("Title:", title)
print("Heading (h1):", h1)
print("Paragraph:", paragraph)
print("Link Text:", link_text)
print("Link Href:", link_href)
print("List Items:", list_items)
```

And here’s how PyQuery would print the results:

```text
Title: My Website
Heading (h1): Welcome to My Website
Paragraph: This is an example paragraph.
Link Text: Click here
Link Href: https://example.com
List Items: ['Item 1', 'Item 2', 'Item 3']
```

4. requests-html

Parsing library that supports static and dynamic content.

requests-html is a Python library for fetching and parsing HTML that supports both static and dynamic content. It combines the convenience of the requests library (an HTTP client for fetching web pages) with the JavaScript rendering abilities of a headless browser, so you need fewer separate libraries.

With requests-html, you can send HTTP requests to a web page and parse the response with one package, which is convenient for static pages. The library stands out because it can handle JavaScript-driven pages, too: calling render() on a response’s html object runs the page in a headless Chromium browser, which requests-html downloads automatically (via pyppeteer) the first time you call it. Additionally, it supports multiple selection strategies, including CSS selectors and XPath.

requests-html also supports asynchronous requests through AsyncHTMLSession, so you can work with several web pages at once. However, the async API and browser rendering add complexity, and rendering is significantly slower than traditional parsing because the browser has to execute each page’s JavaScript.

To install requests-html, run pip install requests-html in your terminal. Once installed, add from requests_html import HTMLSession to your scraping project.

Here’s how to use requests-html to parse a simple website:

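```python
from requests_html import HTML

# Parse the same sample page used in the other examples.
# To fetch a live page instead, you could use:
#   from requests_html import HTMLSession
#   session = HTMLSession()
#   response = session.get('https://example.com')
#   response.html.render()  # optional: runs JavaScript in headless Chromium
#   doc = response.html
html_code = """
<html>
<head>
  <title>My Website</title>
</head>
<body>
  <h1>Welcome to My Website</h1>
  <p>This is an example paragraph.</p>
  <a href="https://example.com">Click here</a>
  <ul>
    <li>Item 1</li>
    <li>Item 2</li>
    <li>Item 3</li>
  </ul>
</body>
</html>
"""
doc = HTML(html=html_code)

# first=True returns a single element instead of a list
title = doc.find('title', first=True).text
h1 = doc.find('h1', first=True).text
paragraph = doc.find('p', first=True).text
link_text = doc.find('a', first=True).text
link_href = doc.find('a', first=True).attrs['href']
list_items = [li.text for li in doc.find('ul li')]

print("Title:", title)
print("Heading (h1):", h1)
print("Paragraph:", paragraph)
print("Link Text:", link_text)
print("Link Href:", link_href)
print("List Items:", list_items)
```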

The parsed results will look like this:

```text
Title: My Website
Heading (h1): Welcome to My Website
Paragraph: This is an example paragraph.
Link Text: Click here
Link Href: https://example.com
List Items: ['Item 1', 'Item 2', 'Item 3']
```

The Differences Between Python HTML Parsers

The choice of HTML parser boils down to what your project needs: some projects require native JavaScript rendering, while others can do without it. Speed and ease of use matter too. Here’s how the libraries compare:

| Library | Speed | Ease of Use | Native Dynamic Content Handling | Ideal Use Case |
|---|---|---|---|---|
| BeautifulSoup | Fast | Very easy | No | Simple HTML parsing |
| lxml | Very fast | Moderate | No | Fast parsing |
| PyQuery | Fast | Easy | No | Scraping with CSS selectors |
| requests-html | Fast (static content); moderate (dynamic content) | Easy | Yes | Scraping and parsing dynamic web pages |

In short, use BeautifulSoup or lxml for static HTML content: they’re efficient and relatively easy to learn. If you need to handle dynamic content, use requests-html, which integrates a headless browser. If you’re planning to scrape with CSS selectors, PyQuery makes navigation and data manipulation easy.
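The speed ratings in the table are general tendencies; real timings depend on your hardware, your pages, and the parser backend. If speed matters for your project, a quick benchmark on representative HTML is more reliable than any ranking. Here’s a minimal sketch using Python’s timeit module, assuming beautifulsoup4 and lxml are installed. The synthetic page and the parse_with_bs4, parse_with_lxml, and benchmark helpers are hypothetical, and the printed numbers will vary from machine to machine.

```python
import timeit

from bs4 import BeautifulSoup
from lxml import html as lxml_html

# Synthetic page with many list items; swap in real HTML you actually scrape
sample_page = "<html><body><ul>" + "".join(
    f"<li class='item'>Item {i}</li>" for i in range(2000)
) + "</ul></body></html>"


def parse_with_bs4(page, parser="html.parser"):
    """Extract list item text with BeautifulSoup and the chosen backend parser."""
    soup = BeautifulSoup(page, parser)
    return [li.get_text() for li in soup.find_all("li")]


def parse_with_lxml(page):
    """Extract the same text with lxml and an XPath query."""
    tree = lxml_html.fromstring(page)
    return tree.xpath("//li/text()")


def benchmark(runs=5):
    """Return the best-of-`runs` time in seconds for each approach."""
    candidates = [
        ("BeautifulSoup (html.parser)", lambda: parse_with_bs4(sample_page)),
        ("BeautifulSoup (lxml backend)", lambda: parse_with_bs4(sample_page, "lxml")),
        ("lxml + XPath", lambda: parse_with_lxml(sample_page)),
    ]
    # Taking the minimum of several runs reduces noise from other processes
    return {name: min(timeit.repeat(func, number=1, repeat=runs))
            for name, func in candidates}


for name, seconds in benchmark().items():
    print(f"{name}: {seconds * 1000:.1f} ms")
```

Comparing BeautifulSoup with both backends is worth doing, since swapping 'html.parser' for 'lxml' is a one-word change that keeps the friendlier BeautifulSoup API.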