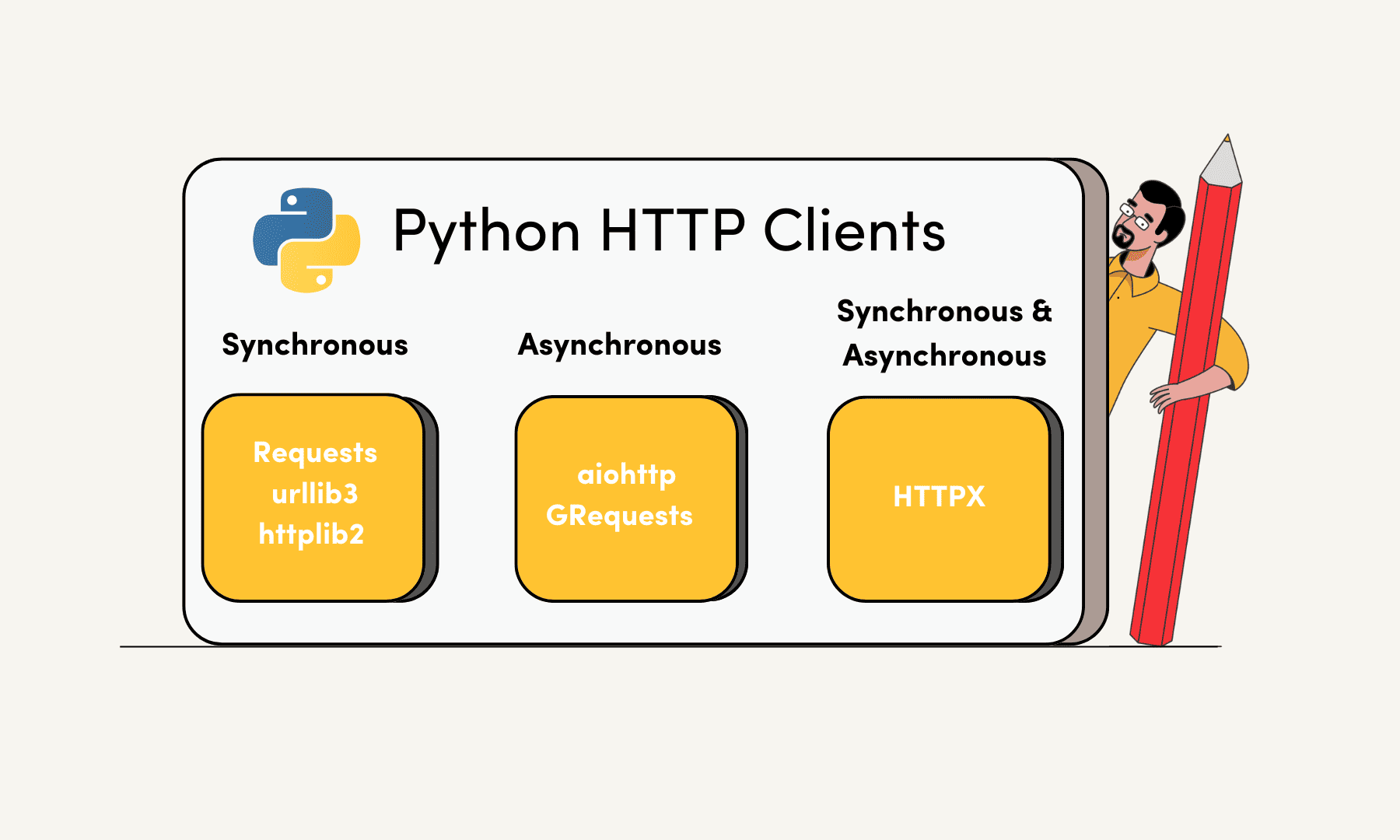

The Best Python HTTP Clients (Python Requests Alternatives)

Looking for Python Requests alternatives? We compiled a list of the best Python HTTP clients for you to try.

What Is a Python HTTP Client?

An HTTP client is a library for making HTTP requests like GET or POST to communicate with web servers and retrieve information from them. In essence, a Python HTTP client fetches you raw HTML from a web page.

However, raw HTML is messy and hard to read. That’s why an HTTP client is usually paired with a parsing library like Beautiful Soup or lxml. In addition, HTTP clients may not work with all targets. If you want to extract data from dynamic websites that use JavaScript to load their content, you’ll need a headless browser library like Selenium or Puppeteer.

For web scraping purposes, HTTP clients are often used together with a proxy server. Changing your IP address and location can be crucial for your project’s success. Why? Because websites today are well protected and apply various anti-bot techniques to stop you from getting the information.

Why Is Python Requests So Popular?

Requests is the go-to choice for both web scraping veterans and beginners. Compared to other HTTP clients, Requests is easy to use and requires writing less code to get the data.

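With Requests, you don’t need to add query strings to the target URL manually: you pass the parameters as a dictionary, and the library encodes them for you. It’s built on top of the urllib3 library (a third-party package, not part of Python’s standard library) and lets you make requests within a session, which reuses connections and keeps cookies between calls.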
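As a minimal sketch of the two points above, the snippet below prepares a request without sending it, so you can see how Requests encodes a parameter dictionary into the URL and how a session applies its default headers. The `/search` path, the parameter names, and the `my-scraper/0.1` User-Agent are illustrative assumptions.

```python
import requests

# Hypothetical endpoint and parameters, used only for illustration.
BASE_URL = "https://example.com/search"
PARAMS = {"q": "python", "page": 2}

# A session keeps default headers and cookies across requests
# and reuses connections to the same host.
session = requests.Session()
session.headers.update({"User-Agent": "my-scraper/0.1"})

# prepare_request() merges the session defaults and encodes the params
# into the query string without sending anything over the network.
prepared = session.prepare_request(
    requests.Request("GET", BASE_URL, params=PARAMS)
)

print(prepared.url)
print(prepared.headers["User-Agent"])
```

To actually send the request, you could call `session.send(prepared)` or simply `session.get(BASE_URL, params=PARAMS)`.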

If your target website has an official API, Requests allows you to connect to it directly and get access to specific information. A handy feature of Requests is its built-in JSON decoder, which lets you retrieve and decode JSON data with just a few lines of code.

Among other things, Requests automatically follows HTTP redirects and decodes the content based on the response headers, making it easier to work with compressed data. What’s more, the library verifies SSL certificates by default and lets you set connection timeouts.
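The features mentioned so far (JSON decoding, redirects, timeouts) could be combined roughly like this. It’s a sketch under assumptions: `fetch_json` is a hypothetical helper name, and the URL you pass is assumed to return JSON.

```python
import requests

def fetch_json(url, timeout=10.0, session=None):
    """Return the decoded JSON body of `url` (assumed to be a JSON endpoint)."""
    # Use the given session if there is one, else the module-level API.
    http = session if session is not None else requests
    # Redirects are followed by default; the timeout (in seconds) is opt-in.
    response = http.get(url, timeout=timeout)
    # Turn 4xx/5xx status codes into a requests.HTTPError exception.
    response.raise_for_status()
    # Decode the JSON body into Python objects (dict, list, and so on).
    return response.json()
```

A call might look like `data = fetch_json("https://example.com/api/items")`, where the path is made up for illustration.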

Requests is flexible when it comes to web scraping tasks like handling cookies, headers, and errors. However, it’s synchronous: each call blocks until the response arrives, so you can’t send multiple requests at once without adding threads or another library.

Here is an example of making a GET request using Requests:

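import requests

def main():
    url = 'https://example.com'
    # Send a GET request; the timeout keeps the call from hanging forever.
    response = requests.get(url, timeout=10)
    print(response.status_code)

if __name__ == '__main__':
    main()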

Why Look for Python Requests Alternatives?

Python’s Requests library has been a popular choice for years, but it comes with some limitations:

- Performance. Some alternative libraries are faster than Requests.

- Additional features. Other libraries may offer features not available with Requests: for example, HTTP/2 support or automatic caching.

- Async capabilities. Asynchronous web scraping allows you to gather data from multiple pages in parallel, and the Requests library doesn’t support this feature.

The Best Python Requests Alternatives for Web Scraping

1. urllib3 – Fast HTTP Client for Handling Multiple Requests

The urllib3 library is another synchronous Python HTTP client. The creators themselves state that it brings many features that are missing from the Python standard libraries. So, while not as user-friendly as Requests, urllib3 is still a popular choice.

First things first. urllib3 is designed to be thread-safe. It allows you to use techniques like multithreading – separating web scraping tasks into several threads. The library supports concurrent requests, so you can scrape multiple pages at the same time.
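One way to use that thread safety is to share a single `PoolManager` across a thread pool. The sketch below assumes placeholder URLs; `fetch_status` and `fetch_all` are hypothetical helper names, not part of urllib3.

```python
from concurrent.futures import ThreadPoolExecutor

import urllib3

def fetch_status(http, url):
    """Fetch one URL and return (url, HTTP status code)."""
    response = http.request("GET", url, timeout=10.0)
    return url, response.status

def fetch_all(http, urls, max_workers=4):
    """Fetch several URLs in parallel threads that share one client."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map() returns the results in the same order as `urls`.
        return list(pool.map(lambda url: fetch_status(http, url), urls))

def main():
    # maxsize sets how many connections per host the pool keeps for reuse.
    http = urllib3.PoolManager(maxsize=4)
    for url, status in fetch_all(http, ["https://example.com", "https://example.org"]):
        print(url, status)
```

Calling `main()` sends the requests; nothing is fetched until then.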

Another great benefit of the library is connection pooling. In simple words, instead of opening a new connection for every request, it reuses an existing connection to the same host from a pool. Performance-wise, this makes urllib3 fast and efficient, since skipping repeated connection setup saves time and computing resources. It also means you can send multiple requests over a single connection.

However, urllib3 has a downside compared to Requests: it doesn’t manage cookies for you, so you’ll have to pass them yourself as a Cookie header value.

What’s more, urllib3 supports SSL/TLS encryption, lets you specify connection timeouts, and can automatically retry failed requests and follow redirects.
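Here is a rough sketch of how retries, timeouts, and a manually supplied cookie might be configured. The retry counts, timeout values, and the `session_id=abc123` cookie are illustrative assumptions, and `fetch` is a hypothetical helper.

```python
import urllib3
from urllib3.util import Retry, Timeout

# Retry up to 3 times, backing off between attempts, including when
# the server answers with one of these status codes.
retries = Retry(total=3, backoff_factor=0.5, status_forcelist=[502, 503, 504])

# Separate limits (in seconds) for connecting and for reading the response.
timeout = Timeout(connect=5.0, read=10.0)

# urllib3 has no cookie jar, so the cookie is sent as a plain header.
http = urllib3.PoolManager(
    retries=retries,
    timeout=timeout,
    headers={"Cookie": "session_id=abc123"},  # made-up cookie value
)

def fetch(url):
    """Send a GET request using the pool-wide retry and timeout settings."""
    response = http.request("GET", url)
    return response.status, response.data
```

Creating the `PoolManager` doesn’t open any connection; that only happens when `fetch()` is called.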

The following code uses the urllib3 library to send a GET request:

import urllib3

http = urllib3.PoolManager()

url = 'https://www.example.com'

response = http.request('GET', url)

Visit the official website for further instructions.

2. aiohttp – Robust Asynchronous Web Scraping Library

aiohttp is a library designed for asynchronous web scraping. It works best in cases where you need high concurrency.

aiohttp is built on the asyncio library and supports asynchronous I/O operations for handling requests and responses. This means that it can manage multiple asynchronous requests without blocking the main program’s execution. So, your scraper can work on other tasks while waiting for a response.
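To illustrate the non-blocking behavior, the sketch below requests several pages concurrently with `asyncio.gather`. The helper names and the concurrency limit of 5 are assumptions for illustration.

```python
import asyncio

import aiohttp

async def fetch_text(session, url):
    """Fetch one page and return its body as text."""
    async with session.get(url) as response:
        response.raise_for_status()
        return await response.text()

async def fetch_many(session, urls, limit=5):
    """Fetch all URLs concurrently, with at most `limit` requests in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def bounded(url):
        async with semaphore:
            return await fetch_text(session, url)

    # gather() runs the coroutines concurrently and keeps the input order.
    return await asyncio.gather(*(bounded(url) for url in urls))

async def main(urls):
    timeout = aiohttp.ClientTimeout(total=30)
    async with aiohttp.ClientSession(timeout=timeout) as session:
        return await fetch_many(session, urls)
```

You would start it with something like `pages = asyncio.run(main(["https://example.com", "https://example.org"]))`.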

Like Requests, aiohttp supports standard HTTP methods. It can handle various request and response types.

What’s more, the library is not limited to being an HTTP client; it’s also used for developing web applications and APIs that can handle a high volume of asynchronous connections. While not a primary use case for web scraping, this is useful when you want to create a custom API or endpoint for your web scraper or to make HTTP requests in high-concurrency environments.

Additionally, aiohttp supports session management that allows you to maintain state between requests. For example, you can use it to manage cookies, store session data, or handle authentication. You can also add plugins and middleware, or modify request headers.
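A session with default headers and cookies could be set up along these lines. This is a sketch: the User-Agent string and the cookie are made up, and `make_session` and `fetch_twice` are hypothetical helpers.

```python
import asyncio

import aiohttp

def make_session():
    """Create a session; call this from inside a running coroutine."""
    return aiohttp.ClientSession(
        headers={"User-Agent": "my-scraper/0.1"},  # sent with every request
        cookies={"session_id": "abc123"},          # made-up starting cookie
        timeout=aiohttp.ClientTimeout(total=30),
    )

async def fetch_twice(url):
    """Make two requests that share cookies and pooled connections."""
    async with make_session() as session:
        async with session.get(url) as first:
            await first.read()
        # Cookies set by the first response are sent with the second request.
        async with session.get(url) as second:
            return second.status
```

Running `asyncio.run(fetch_twice("https://example.com"))` would perform both requests within one session.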

This is an example of making an asynchronous request using aiohttp:

import asyncio

import aiohttp

async def main():
    # The session pools connections; both context managers clean up on exit.
    async with aiohttp.ClientSession() as session:
        async with session.get("https://example.com") as response:
            print(await response.text())

asyncio.run(main())

Refer to the official website for further instructions.

3. HTTPX – Supports HTTP/2 & Asynchronous Requests

HTTPX is a feature-rich HTTP client used for all types of web scraping projects.

The library offers a synchronous API by default, but you can also use HTTPX for asynchronous web scraping. Actually, the latter is the preferred method when you need high concurrency: it performs better and allows you to use long-lived connections like WebSockets (with the help of add-on packages).

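Additionally, HTTPX comes with HTTP/2 support, which can reduce your block rate compared to HTTP/1.1. HTTP/2 multiplexes requests over a single TCP connection, so several resources can load concurrently. This can make it harder for websites to fingerprint your scraper. Note that HTTP/2 is optional: you need to install the extra dependencies (pip install httpx[http2]) and create the client with http2=True. None of the other libraries on this list have this feature.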

Another great benefit of the HTTPX library is its built-in support for streaming responses. This feature is useful if you want to download large amounts of data without loading the entire response into memory at once.

HTTPX includes a built-in JSON decoder. So, when you receive a response whose body is in JSON, you can call its json() method to turn it into Python objects.

Performance-wise, HTTPX is faster than Requests but slower than the aiohttp library. What’s more, it doesn’t follow redirects by default.

Let’s look at how to make a GET request using HTTPX:

import asyncio

import httpx

async def main():
    url = 'https://example.com'
    # AsyncClient pools connections and closes them when the block exits.
    async with httpx.AsyncClient() as client:
        response = await client.get(url)
        print(response.status_code)

asyncio.run(main())

Refer to the official website for more information.

4. GRequests – An Asynchronous Supplement for the Requests Library

GRequests is built on top of the Requests library but with the ability to deal with asynchronous requests. It’s a user-friendly library, usually used together with Requests.

GRequests relies on gevent, a coroutine-based networking library, to run requests concurrently, so you can send multiple HTTP requests at a time.

The library can be integrated into existing web scraping projects that use the Requests library. And the best part – you don’t have to rewrite your entire codebase.

If you’re already familiar with Requests, using GRequests is relatively straightforward. It has similar syntax and methods for making HTTP requests, and the library has much of the same functionality. However, it’s not a very popular or actively maintained library.

The following is an example of making a GET request using GRequests:

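import grequests

urls = ['https://example.com', 'https://example.org']

# Build the requests lazily; the name avoids shadowing the requests module.
pending = (grequests.get(url) for url in urls)

# Send them concurrently; a failed request comes back as None.
responses = grequests.map(pending)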

Check out GitHub for more information.

5. httplib2 – Great for Caching HTTP Responses

import httplib2

http = httplib2.Http()

url = 'https://example.com'

response, content = http.request(url, 'GET')

Visit the official website to find out more.

Comparison table

| | Requests | urllib3 | aiohttp | HTTPX | GRequests | httplib2 |
| --- | --- | --- | --- | --- | --- | --- |
| Learning curve | Easy | Easy | Moderate | Moderate | Moderate | Easy |
| Asynchronous | No | No | Yes | Yes | Yes | No |
| Sessions | Yes | No | Yes | Yes | Yes | No |
| HTTP/2 | No | No | No | Yes | No | No |
| Performance | Slower | Fast | Fast | Moderate | Moderate | Moderate |
| Community | Large | Large | Large | Large | Small | Moderate |