Python Asynchronous Requests: A Web Scraping Tutorial with AIOHTTP

This is a step-by-step tutorial on using Python’s aiohttp library for sending asynchronous requests and web scraping multiple pages in parallel.

If you’ve ever scraped tens or hundreds of pages, you know the hassle of waiting for one request to finish before you can send the next. What if I told you there are ways to send multiple requests at once without waiting for each response? That’s where asynchronous web scraping steps in to save the day… and your nerves.

In this tutorial, you’ll learn what asynchronous web scraping is and what benefits it brings, and you’ll follow a step-by-step example of scraping multiple web pages with one of the most popular Python libraries built for this task – aiohttp.

What Is Asynchronous Web Scraping?

Asynchronous web scraping is a technique that allows you to handle multiple requests concurrently. In simple words, you don’t have to wait for a response before sending a new request. To state the obvious, it saves a lot of time when you have many pages to collect. Keep in mind that asynchronous methods don’t make you harder to detect: sending many requests in a short burst can look more bot-like, so you may need to limit how many run at once.

Every time you send a request, you have to wait for the response. And the waiting time can get very lengthy when gathering large amounts of data. Let’s say you want to scrape ten web pages, and each page takes approximately 2-3 seconds to load. If you do that synchronously, running the code will take around 25 seconds each time. Asynchronous web scraping, on the other hand, lets the waiting periods overlap, so the whole task can finish in about 2-3 seconds, roughly the time of the slowest single request.
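To make the difference concrete, here is a minimal sketch that simulates the ten-page scenario. No real requests are sent: asyncio.sleep() stands in for network latency, and the fake_fetch helper and the shortened 0.2-second delay are assumptions made purely for illustration.

```python
import asyncio
import time


async def fake_fetch(page: int, delay: float) -> int:
    """Pretend to download a page; asyncio.sleep stands in for network latency."""
    await asyncio.sleep(delay)
    return page


async def fetch_sequentially(pages: int, delay: float) -> float:
    """Wait for each simulated request to finish before starting the next."""
    started = time.perf_counter()
    for page in range(pages):
        await fake_fetch(page, delay)
    return time.perf_counter() - started


async def fetch_concurrently(pages: int, delay: float) -> float:
    """Start every simulated request at once and wait for all of them."""
    started = time.perf_counter()
    await asyncio.gather(*(fake_fetch(page, delay) for page in range(pages)))
    return time.perf_counter() - started


if __name__ == "__main__":
    sequential = asyncio.run(fetch_sequentially(pages=10, delay=0.2))
    concurrent = asyncio.run(fetch_concurrently(pages=10, delay=0.2))
    print(f"sequential: {sequential:.2f}s, concurrent: {concurrent:.2f}s")
```

The sequential total grows with the number of pages, while the concurrent total stays close to the duration of a single simulated request.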

So, unlike synchronous scraping, where you have to finish one request before starting another, asynchronous web scraping works concurrently. This means you can send multiple requests at once. But be aware of the web scraping challenges associated with bot-like activities.

Why Use aiohttp for Asynchronous Web Scraping?

aiohttp is an asynchronous HTTP client and server library for Python, which makes it a good fit for asynchronous web scraping tasks. It’s built on the asyncio library and supports asynchronous I/O operations, so it can manage multiple requests without blocking the main program’s execution.

aiohttp can handle various request and response types. It supports session management to maintain the state between requests. You can use it to modify request headers, handle authentication or manage cookies. You can also add plugins and middleware.

The library is sometimes used to build web applications and APIs that manage asynchronous connections. This comes in handy when you need to set up a custom API or endpoint for your web scraper. Additionally, aiohttp offers built-in support for both client and server WebSockets.

Asynchronous Web Scraping With Python & aiohttp: a Step-by-Step Tutorial

In this step-by-step tutorial, we’ll scrape a dummy website – books.toscrape.com. We’ll show you how to build a script that goes through all the pages and scrapes book titles as well as URLs.

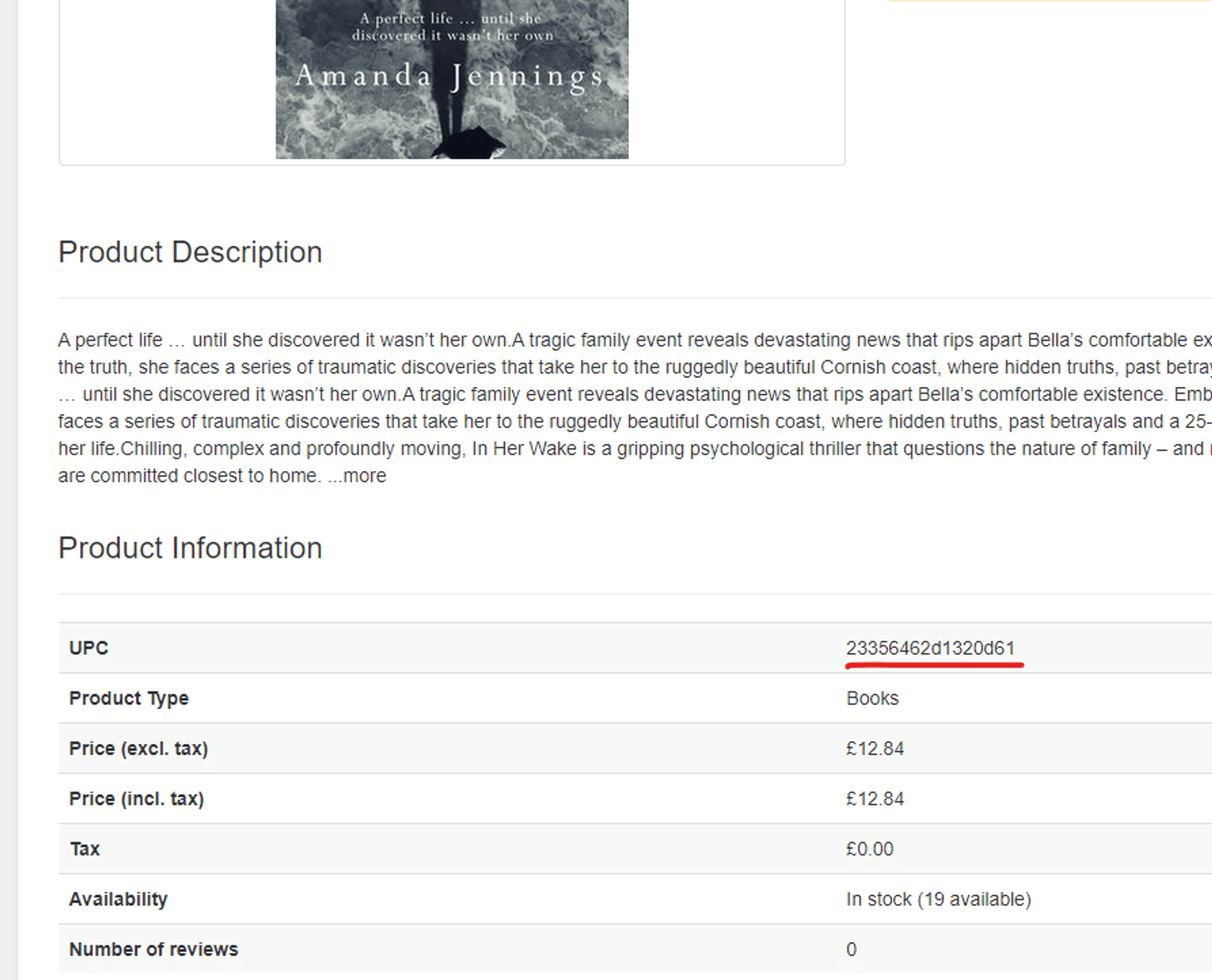

There are 1,000 books, so sending the requests one at a time with a synchronous library like Requests would be very slow compared to aiohttp. Besides the title and URL, this example also scrapes the UPC field from each book’s page.

Prerequisites

Before you begin, you’ll need the following:

- a recent version of Python 3 on your computer,

- aiohttp, installed by running pip install aiohttp in your operating system’s terminal,

- asyncio, which requires no installation because it’s part of Python’s standard library. Python code runs synchronously by default, so aiohttp relies on asyncio to run requests asynchronously,

- Beautiful Soup, installed by running pip install beautifulsoup4. This parsing library lets you extract data from the downloaded HTML in a structured way.

Setting Up Preliminaries

Step 1. First, import the two main modules.

import aiohttp

import asyncio

Step 2. Create the main() function. Then, tell asyncio to run it. Here, we also specify the start_url and create a list that will hold the results.

start_url = "https://books.toscrape.com/"
result_dict = []

async def main():
    pass  # placeholder; the body is added in the next step

if __name__ == "__main__":
    asyncio.run(main())

Step 3. Create a session with aiohttp, which will send the requests. Then, pass the session object along with start_url to the scrape_urls() function, which we’ll create next.

async def main():
    async with aiohttp.ClientSession() as session:
        await scrape_urls(session, start_url)

Crawling the Page

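Step 1. Create the scrape_urls() function. It sends a GET request to the given URL and, if the status code is 200, reads the response body as text.

async def scrape_urls(session, url):
    async with session.get(url) as resp:
        if resp.status == 200:
            resp_data = await resp.text()
        else:
            print(f"Request failed for {url} with: {resp.status}")
            # Retry functionality could be added here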

Step 2. Parse the page to collect URLs.

Import Python’s parsing library Beautiful Soup.

from bs4 import BeautifulSoup

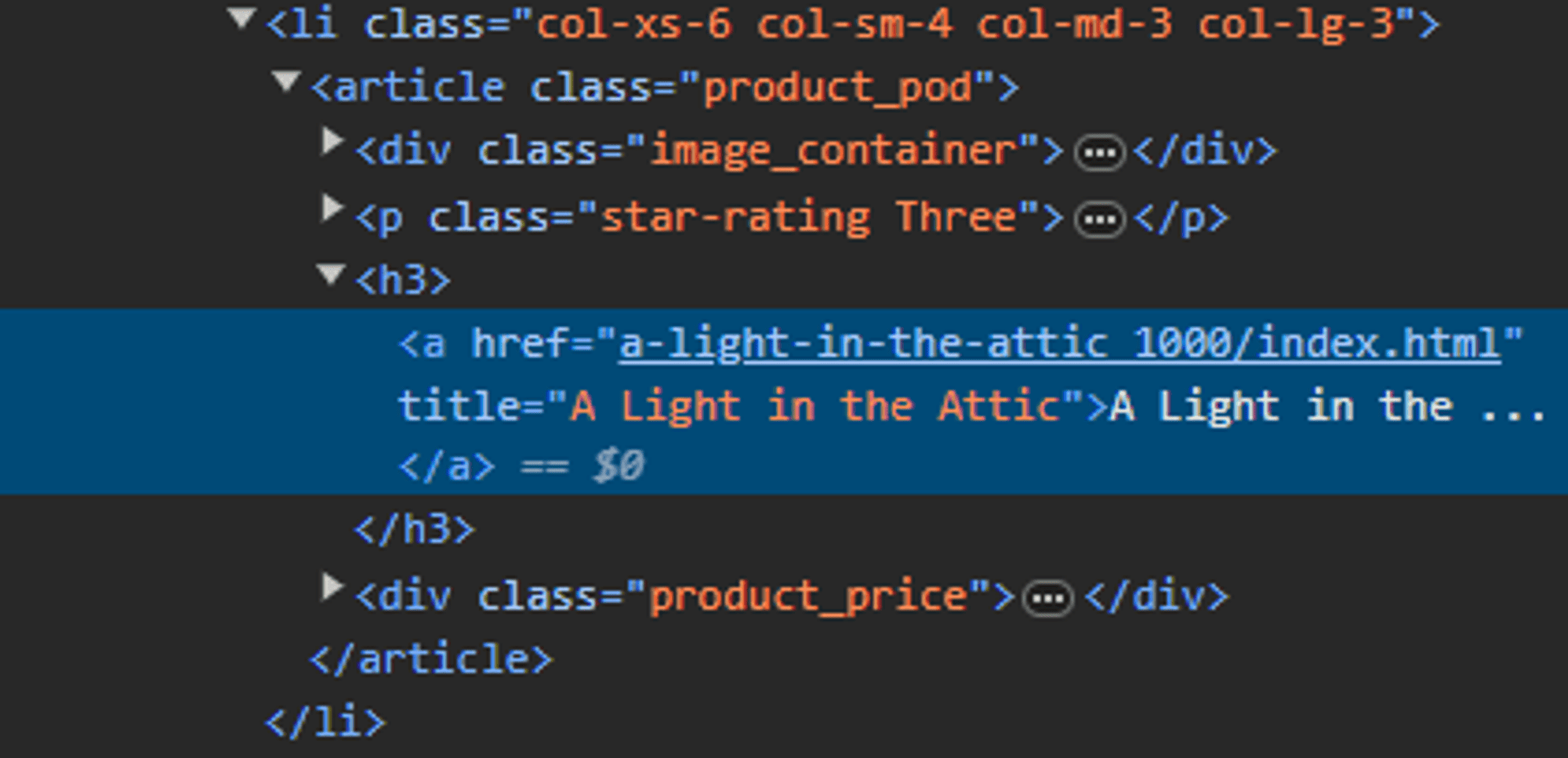

Then, add a new function to find all articles with the class “product_pod” on that page.

async def parse_page(resp_data):
    soup = BeautifulSoup(resp_data, "html.parser")
    books_on_page = soup.find_all("article", class_="product_pod")

After creating the soup object, you can locate all book elements on the page. To find each book, select the article tag with the class product_pod. As a result, you’ll retrieve a list of elements named books_on_page.

The title and the URL are located within the first <a> element child of an <h3> tag. So, you’ll need to add a loop that iterates through all the article elements.

Step 3. Then, use Beautiful Soup to locate the link element and the title.

async def parse_page(resp_data):
    soup = BeautifulSoup(resp_data, "html.parser")
    books_on_page = soup.find_all("article", class_="product_pod")
    for book in books_on_page:
        link_elem = book.find("h3").a
        book_title = link_elem.get("title")

Step 4. Now, you can get the link to the product page of each book. While extracting the href attribute is straightforward, the href is a relative link, so you still need to turn it into an absolute URL. The make_url() function will handle this task.

The function checks whether the href contains the string “catalogue/”. If it does, the href is joined to the start URL directly; otherwise, “catalogue/” is appended to the start URL first, and the href is joined to that.

from urllib.parse import urljoin

def make_url(href):
    if "catalogue/" in href:
        url = urljoin(start_url, href)
        return url
    url = urljoin(f"{start_url}catalogue/", href)
    return url
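The two branches are needed because relative links come in two shapes: some already include the catalogue/ prefix and some don’t. The short sketch below shows how urljoin() resolves each case. The sample href values are illustrative assumptions rather than output copied from the site. It also shows a possible alternative, resolving an href against the URL of the page it was found on, which is not what this tutorial’s make_url() does.

```python
from urllib.parse import urljoin

start_url = "https://books.toscrape.com/"

# Illustrative hrefs (assumed shapes): one with the catalogue/ prefix, one without.
href_with_prefix = "catalogue/page-2.html"
href_without_prefix = "page-3.html"

# Branch 1: the href already contains "catalogue/", so join it to the site root.
first = urljoin(start_url, href_with_prefix)

# Branch 2: the prefix is missing, so join the href to the catalogue/ folder.
second = urljoin(f"{start_url}catalogue/", href_without_prefix)

# Alternative (not used in this tutorial): resolve against the page the link was found on.
page_url = "https://books.toscrape.com/catalogue/page-2.html"
alternative = urljoin(page_url, href_without_prefix)

print(first)
print(second)
print(alternative)
```

With the alternative approach, the function would need the current page URL as an extra argument, but it would no longer depend on the word “catalogue/” appearing in the link.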

Step 5. We now extract the href attribute from the link element and format it using the make_url function.

async def parse_page(resp_data):
    soup = BeautifulSoup(resp_data, "html.parser")
    books_on_page = soup.find_all("article", class_="product_pod")
    for book in books_on_page:
        link_elem = book.find("h3").a
        book_title = link_elem.get("title")
        book_url = make_url(link_elem.get("href"))

Step 6. Append the results.

Now that you’ve got the URL, you can create a dictionary object containing the scraped data and append the dictionary to your results list. It will allow you to store all the information you’ve scraped.

        result_dict.append({
            'title': book_title,
            'url': book_url,
        })

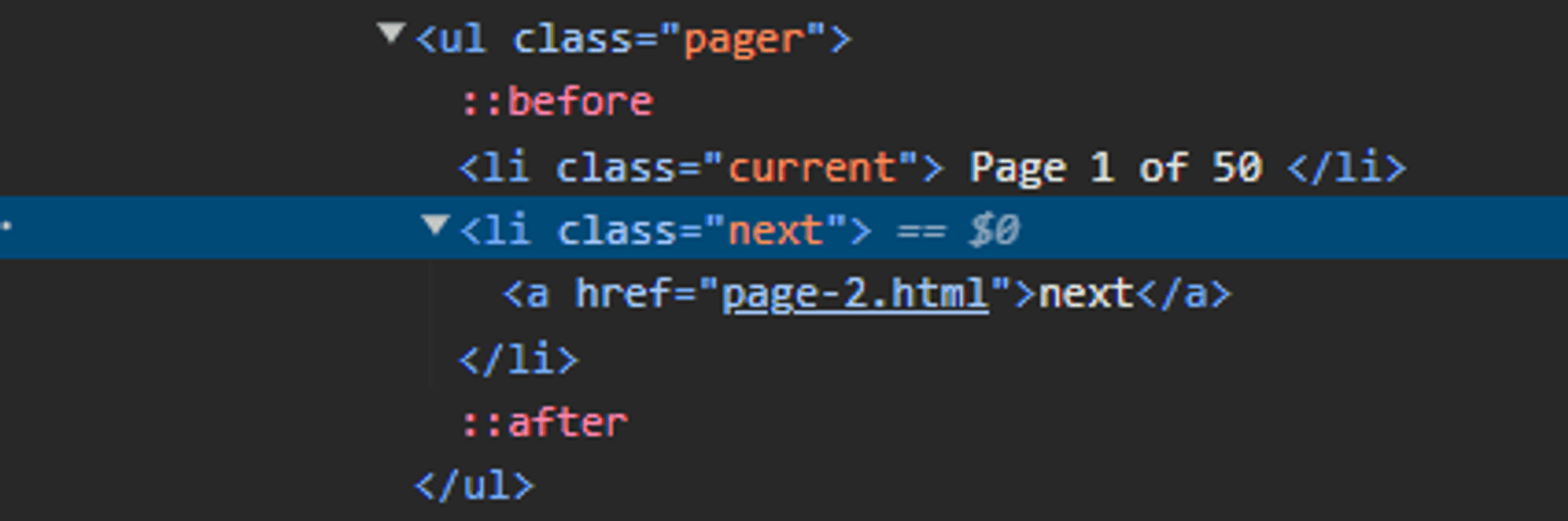

Step 7. Get the link to the next page.

To crawl all pages, the script needs to find the link to the next page. This link is inside the a tag, which is within the li tag with the class next.

You can send the href to the make_url() created earlier to get the whole URL.

However, the last page has no next page button, so soup.find() returns None there, and accessing .a on it raises an AttributeError that would crash the script. That’s why you need to wrap this code in a try-except block.

    try:
        next_page_link = soup.find("li", class_="next").a
        next_page_url = make_url(next_page_link.get("href"))
        return next_page_url
    except AttributeError:
        # soup.find() returned None: there is no next page
        print("No link to next page. Done")
        return None

This function will return the URL to the next page or “None” if you’ve reached the end.

The whole parse_page function:

async def parse_page(resp_data):
    soup = BeautifulSoup(resp_data, "html.parser")
    books_on_page = soup.find_all("article", class_="product_pod")
    for book in books_on_page:
        link_elem = book.find("h3").a
        book_title = link_elem.get("title")
        book_url = make_url(link_elem.get("href"))
        print(f"{book_title}: {book_url}")
        result_dict.append({
            'title': book_title,
            'url': book_url,
        })
    try:
        next_page_link = soup.find("li", class_="next").a
        next_page_url = make_url(next_page_link.get("href"))
        return next_page_url
    except AttributeError:
        # soup.find() returned None: there is no next page
        print("No link to next page. Done")
        return None

Step 8. Finish the scrape_urls() function.

Let’s call the parse_page() function. If it returns a URL, then you’ll need to call the scrape_urls() function again to scrape the next page. If parse_page() has returned “None”, then the crawling is finished.

async def scrape_urls(session, url):
    async with session.get(url) as resp:
        if resp.status == 200:
            resp_data = await resp.text()
            next_url = await parse_page(resp_data)
            if next_url is not None:
                await scrape_urls(session, next_url)
        else:
            print(f"Request failed for {url} with: {resp.status}")
            # Retry functionality could be added here
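The retry comment in the else branch is left open in this tutorial. One way to fill it in, sketched here under assumptions, is a small helper that re-runs a coroutine a few times with a growing pause between attempts. The names retry_async, attempts, and base_delay are hypothetical, and flaky_request only simulates a request that fails twice before succeeding; no network is involved.

```python
import asyncio


async def retry_async(make_attempt, attempts: int = 3, base_delay: float = 0.5):
    """Await make_attempt() until it succeeds or the attempts run out.

    make_attempt is a zero-argument function returning a fresh coroutine,
    because a coroutine object can only be awaited once.
    """
    for attempt in range(1, attempts + 1):
        try:
            return await make_attempt()
        except Exception as error:  # narrow this to specific errors in real code
            if attempt == attempts:
                raise  # out of attempts: let the caller see the last error
            delay = base_delay * 2 ** (attempt - 1)  # doubles after every failure
            print(f"Attempt {attempt} failed ({error}); retrying in {delay}s")
            await asyncio.sleep(delay)


calls = {"count": 0}  # counts how often the simulated request was made


async def flaky_request() -> str:
    """Simulated request: fails on the first two calls, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("simulated failure")
    return "page content"


if __name__ == "__main__":
    print(asyncio.run(retry_async(flaky_request, attempts=3, base_delay=0.01)))
```

Note that scrape_urls() as written prints a message on a non-200 status instead of raising an exception, so to use a helper like this you would have to raise an error in that branch (for example, by calling resp.raise_for_status()) and probably catch a narrower exception such as aiohttp.ClientError.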

Scraping the Book Information

Now that you’ve gathered all the book titles and URLs, you can scrape book information.

Step 1. Let’s iterate through the result_dict list and scrape every book. You can do that by adding a couple of lines to the main() function.

async def main():
    async with aiohttp.ClientSession() as session:
        await scrape_urls(session, start_url)
        for book in result_dict:
            await scrape_item(session, book)

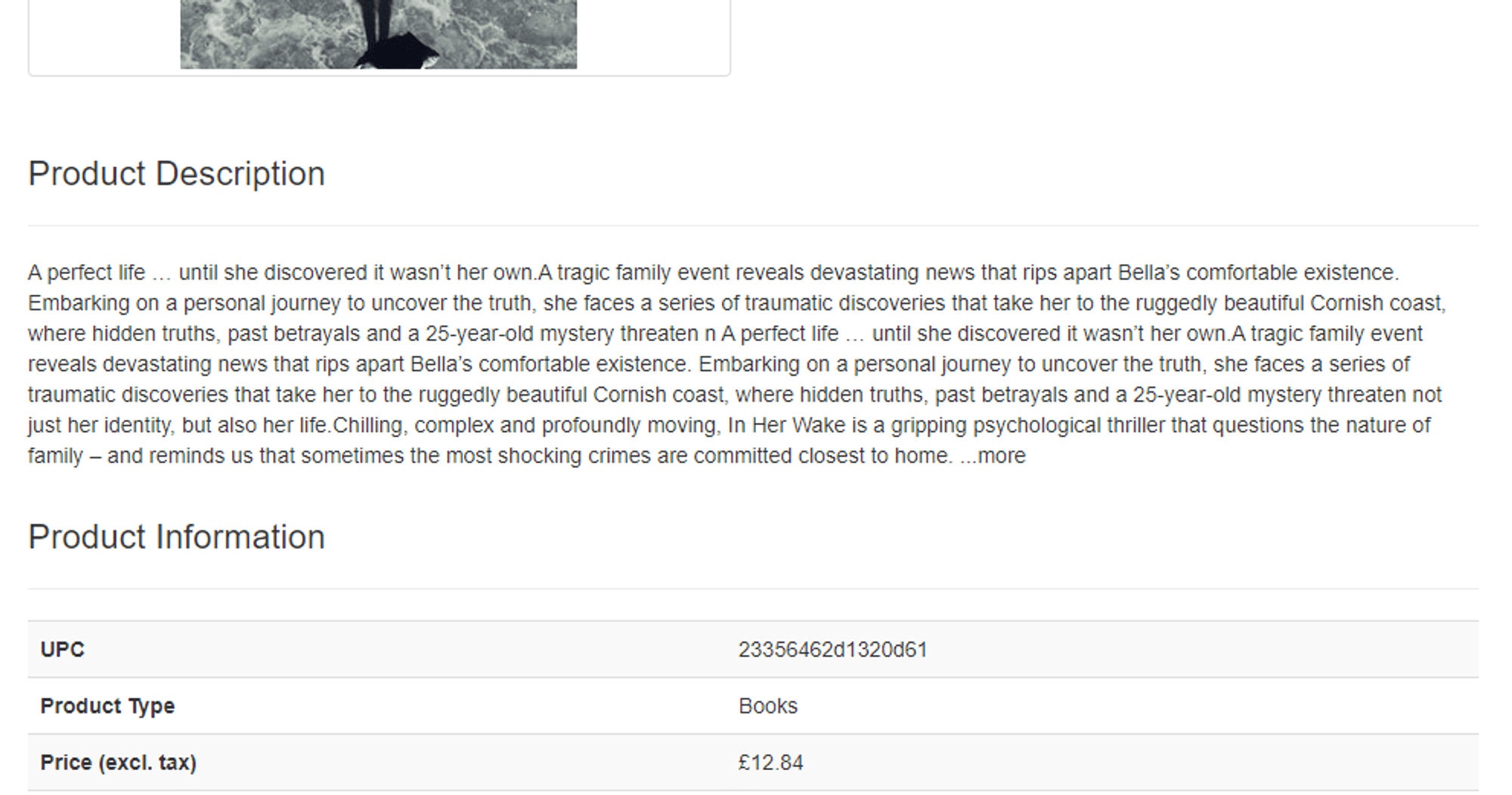

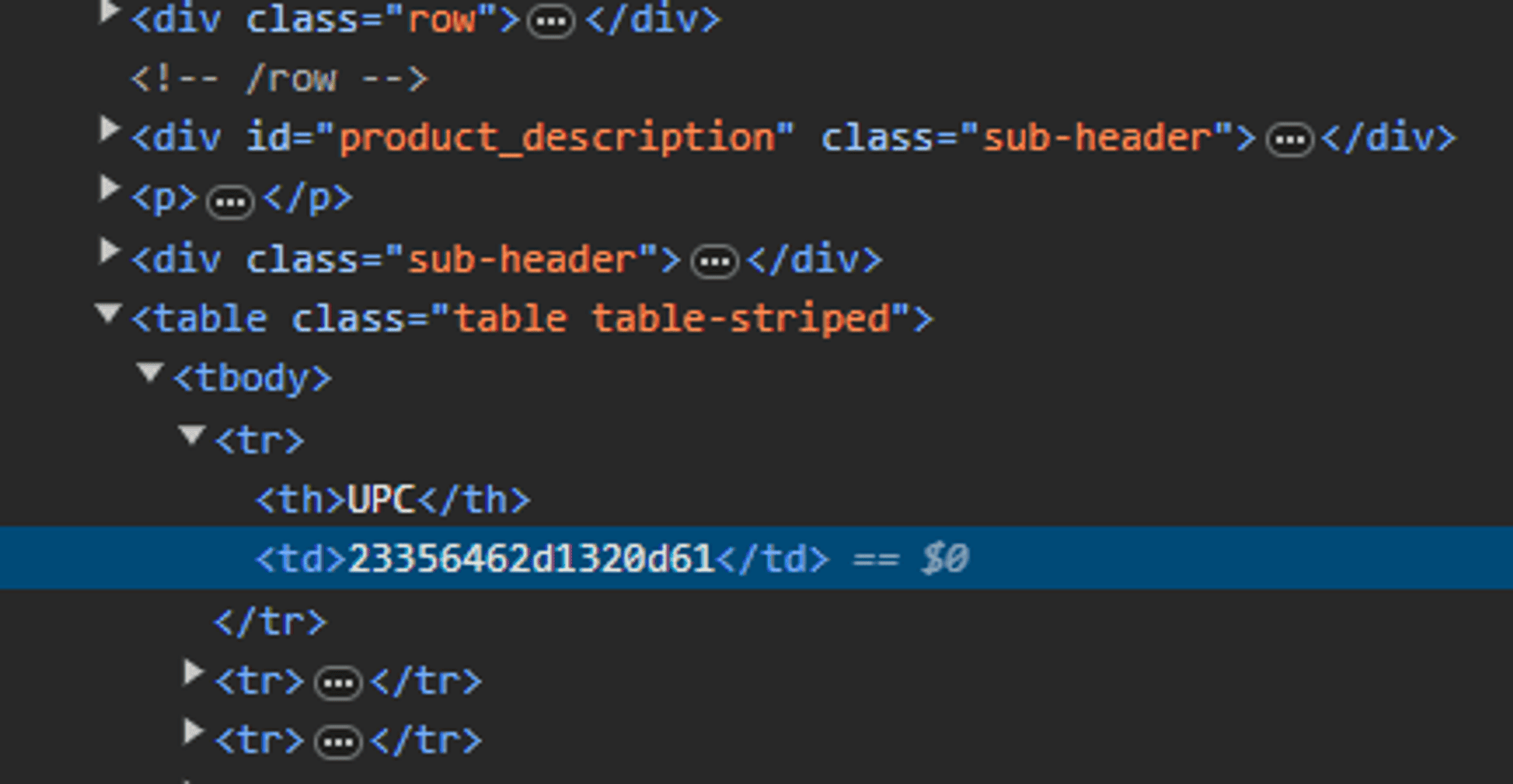

Step 2. The book information is stored in a table, so inspect the page to find where the UPC is. As you can see, the value is located in the first <td> element in the table body. After identifying the table element on the page, you can pick the first <td> and retrieve its text.

table_elem = soup.find("table", class_="table table-striped")

book_upc = table_elem.select("td:nth-of-type(1)")[0].get_text()
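Selecting the first <td> relies on the UPC being the first row of the table. A possible alternative, shown here as a sketch, is to look the value up by its row label instead of its position. The HTML below is a simplified stand-in for the product page table, not markup copied from the site, and get_table_value is a hypothetical helper.

```python
from bs4 import BeautifulSoup

# Simplified stand-in for the product information table (assumed structure).
sample_html = """
<table class="table table-striped">
  <tr><th>UPC</th><td>abc123</td></tr>
  <tr><th>Product Type</th><td>Books</td></tr>
</table>
"""


def get_table_value(soup, label):
    """Return the text of the <td> that follows the <th> whose text equals label."""
    header = soup.find("th", string=label)
    if header is None:
        return None  # no row with that label
    cell = header.find_next_sibling("td")
    return cell.get_text(strip=True) if cell else None


soup = BeautifulSoup(sample_html, "html.parser")
table_elem = soup.find("table", class_="table table-striped")

# Positional lookup, as used in this tutorial.
print(table_elem.select("td:nth-of-type(1)")[0].get_text())

# Lookup by row label.
print(get_table_value(soup, "UPC"))
```

The label-based lookup keeps working if the rows are reordered, and it returns None instead of raising an error when the row is missing.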

Step 3. To get this information, you first need to request each book’s page.

We’ll create the scrape_item() function now. It takes the aiohttp session and a dictionary with the book’s details (the title and the scraped URL).

The function uses the URL stored in the dictionary to fetch the book’s page from the website.

async def scrape_item(session, book):
    print(f"Scraping: {book['url']}")
    async with session.get(book['url']) as resp:
        if resp.status == 200:
            resp_data = await resp.text()

Step 4. Then, parse the HTML response with Beautiful Soup to extract specific text.

            soup = BeautifulSoup(resp_data, "html.parser")
            table_elem = soup.find("table", class_="table table-striped")

Step 5. Finally, add a new key – [‘upc’] – to the dictionary and assign the extracted upc value to it. It’ll store and keep track of the UPC, so that the scraper can identify each book.

            book_upc = table_elem.select("td:nth-of-type(1)")[0].get_text()
            book["upc"] = book_upc
        else:
            print(f"Request failed for {book['url']} with: {resp.status}")
            # Retry functionality could be added here

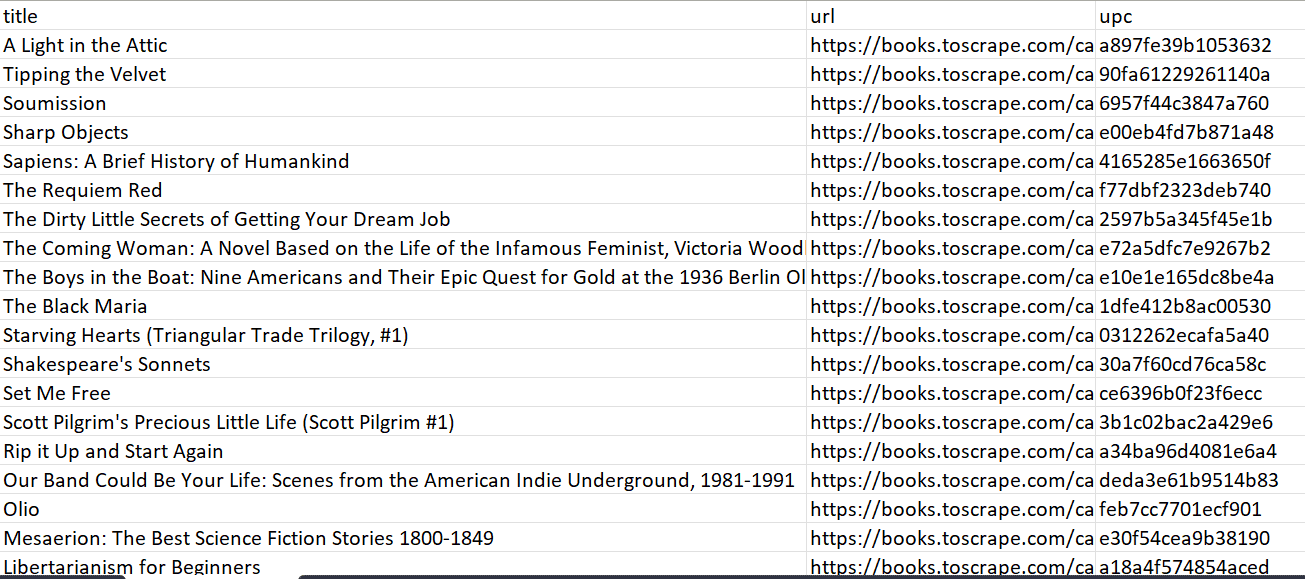

Writing Data to a CSV File

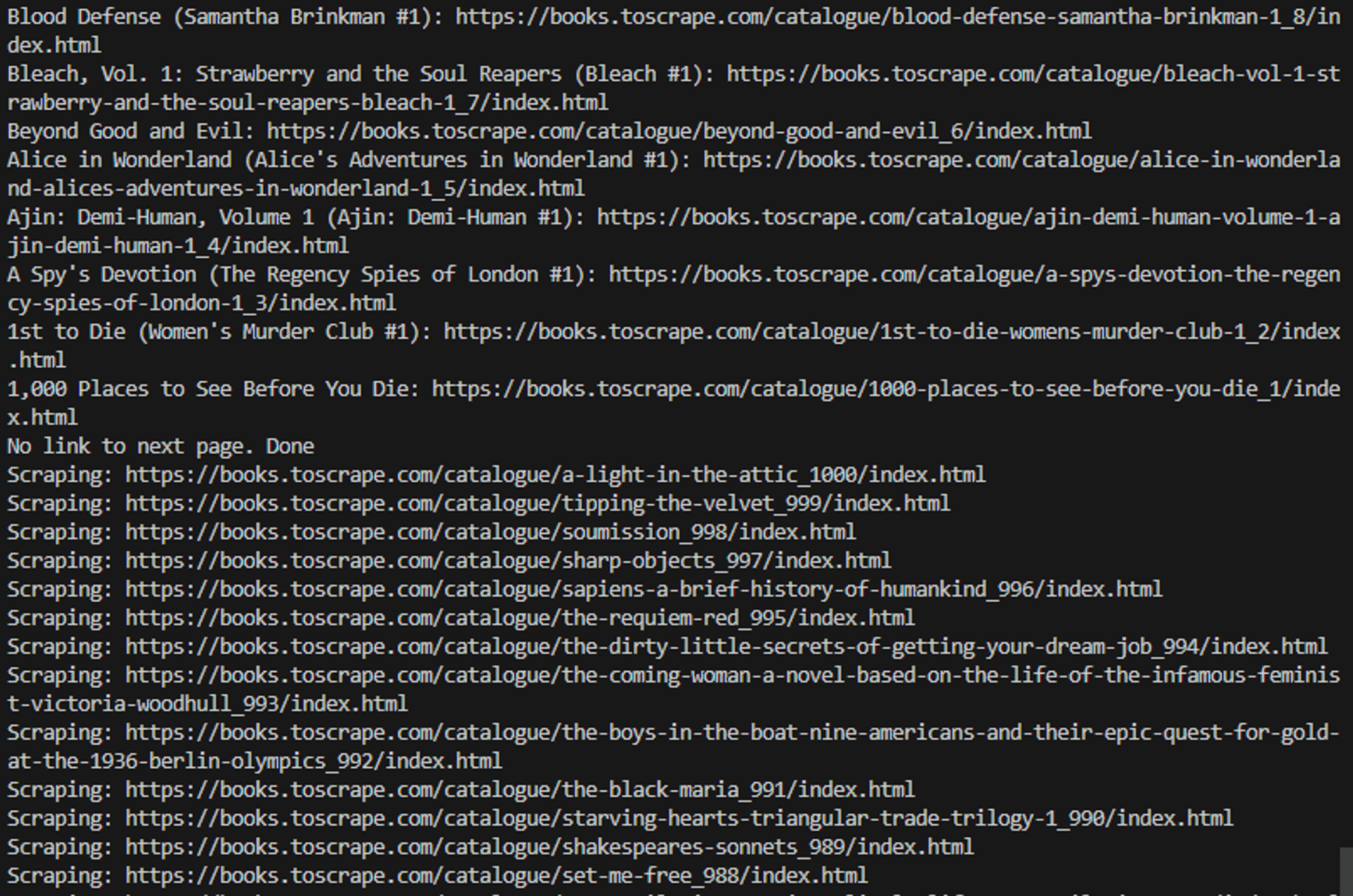

Step 1. First, let’s look at an example of the terminal output:

The scraper has reached the end of books.toscrape.com. It couldn’t find the next page link, so it moved on to scraping individual books.

You can print out result_dict in the terminal after you’re done, but considering that the list holds 1,000 dictionary objects, it’s more practical to create a function that writes the data to a CSV file.

async def main():
    async with aiohttp.ClientSession() as session:
        await scrape_urls(session, start_url)
        for book in result_dict:
            await scrape_item(session, book)
    print(result_dict)
    write_to_csv()

Step 2. Then, import the csv module.

import csv

Step 3. Write a function to create a file and write the result_dict into it in a CSV format.

def write_to_csv():
    # utf-8 keeps titles with non-ASCII characters from failing on write
    with open("books_result.csv", "w", newline='', encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=['title', 'url', 'upc'])
        writer.writeheader()
        writer.writerows(result_dict)
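One detail worth knowing: if a request in scrape_item() fails, that book’s dictionary has no upc key. csv.DictWriter fills missing keys with its restval value, which is an empty string by default. The sketch below demonstrates this with made-up rows and an in-memory buffer instead of a file; the sample data is an assumption for illustration only.

```python
import csv
import io

# Made-up rows: the second one has no "upc" key, as if its request had failed.
rows = [
    {"title": "Book A", "url": "https://example.com/a", "upc": "abc123"},
    {"title": "Book B", "url": "https://example.com/b"},
]

buffer = io.StringIO()  # in-memory stand-in for the CSV file
writer = csv.DictWriter(buffer, fieldnames=["title", "url", "upc"], restval="")
writer.writeheader()
writer.writerows(rows)

print(buffer.getvalue())
```

The second row ends with an empty upc column, so books whose pages failed to load are easy to spot in the resulting file.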

Here’s the full code:

import aiohttp
import asyncio
from bs4 import BeautifulSoup
from urllib.parse import urljoin
import csv

start_url = "https://books.toscrape.com/"
result_dict = []

def make_url(href):
    # Turn a relative href into an absolute URL
    if "catalogue/" in href:
        url = urljoin(start_url, href)
        return url
    url = urljoin(f"{start_url}catalogue/", href)
    return url

async def parse_page(resp_data):
    # Collect the title and URL of every book on a listing page
    soup = BeautifulSoup(resp_data, "html.parser")
    books_on_page = soup.find_all("article", class_="product_pod")
    for book in books_on_page:
        link_elem = book.find("h3").a
        book_title = link_elem.get("title")
        book_url = make_url(link_elem.get("href"))
        print(f"{book_title}: {book_url}")
        result_dict.append({
            'title': book_title,
            'url': book_url,
        })
    try:
        next_page_link = soup.find("li", class_="next").a
        next_page_url = make_url(next_page_link.get("href"))
        return next_page_url
    except AttributeError:
        # soup.find() returned None: there is no next page
        print("No link to next page. Done")
        return None

async def scrape_urls(session, url):
    # Crawl the listing pages one by one until there is no next page
    async with session.get(url) as resp:
        if resp.status == 200:
            resp_data = await resp.text()
            next_url = await parse_page(resp_data)
            if next_url is not None:
                await scrape_urls(session, next_url)
        else:
            print(f"Request failed for {url} with: {resp.status}")
            # Retry functionality could be added here

async def scrape_item(session, book):
    # Fetch a book page and add its UPC to the book dictionary
    print(f"Scraping: {book['url']}")
    async with session.get(book['url']) as resp:
        if resp.status == 200:
            resp_data = await resp.text()
            soup = BeautifulSoup(resp_data, "html.parser")
            table_elem = soup.find("table", class_="table table-striped")
            book_upc = table_elem.select("td:nth-of-type(1)")[0].get_text()
            book["upc"] = book_upc
        else:
            print(f"Request failed for {book['url']} with: {resp.status}")
            # Retry functionality could be added here

def write_to_csv():
    # utf-8 keeps titles with non-ASCII characters from failing on write
    with open("books_result.csv", "w", newline='', encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=['title', 'url', 'upc'])
        writer.writeheader()
        writer.writerows(result_dict)

async def main():
    async with aiohttp.ClientSession() as session:
        await scrape_urls(session, start_url)
        for book in result_dict:
            await scrape_item(session, book)
    print(result_dict)
    write_to_csv()

if __name__ == "__main__":
    asyncio.run(main())