How to Use cURL With Python for Web Scraping

This tutorial covers the basics of using cURL with Python to gather data.

cURL is a popular command-line tool for web scraping and testing APIs. Using cURL with Python comes with several benefits. For example, it can be much faster than traditional Python HTTP clients like Requests.

This tutorial will teach you about web scraping with cURL and its benefits. You’ll also find a step-by-step example of how to scrape data with cURL and Python by installing the PycURL module.

What Is cURL?

cURL, short for client URL, is an open-source command-line tool that’s run in the terminal for transferring data over networks. It’s widely available and has a simple text interface that works with almost every system.

By using cURL with Python, you can control how your scraper sends requests and set headers such as cookies or user agents. You can also route cURL through proxies, and the tool lets you test different API endpoints by entering a cURL command rather than writing API calls in code.
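As a minimal sketch of what setting headers, cookies, and a proxy can look like with the PycURL module used later in this tutorial: the helper names `format_headers` and `fetch` are hypothetical, and the cookie and proxy values in the comments are placeholders rather than working settings.

```python
from io import BytesIO


def format_headers(headers):
    """Turn a dict into the list of "Name: value" strings used for HTTPHEADER."""
    return [f"{name}: {value}" for name, value in headers.items()]


def fetch(url, user_agent=None, cookies=None, proxy=None, headers=None):
    """Download url and return the raw response body as bytes."""
    import pycurl  # imported here so format_headers works without PycURL installed

    buffer = BytesIO()
    curl = pycurl.Curl()
    curl.setopt(pycurl.URL, url)
    curl.setopt(pycurl.WRITEDATA, buffer)
    if user_agent:
        curl.setopt(pycurl.USERAGENT, user_agent)
    if cookies:
        curl.setopt(pycurl.COOKIE, cookies)  # placeholder format: "name1=value1; name2=value2"
    if proxy:
        curl.setopt(pycurl.PROXY, proxy)  # placeholder format: "http://127.0.0.1:8080"
    if headers:
        curl.setopt(pycurl.HTTPHEADER, format_headers(headers))
    try:
        curl.perform()
    finally:
        curl.close()  # always release the handle, even if the transfer fails
    return buffer.getvalue()


print(format_headers({"Accept-Language": "en-US", "Accept": "text/html"}))
```

Each option is only set when a value is passed, so calling `fetch(url)` with no extras behaves like the plain request shown later in the step-by-step guide.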

Benefits of Using cURL with Python when Web Scraping

cURL brings several advantages over the HTTP libraries typically used in Python for making an HTTP request.

cURL is very fast compared to traditional HTTP clients like Python’s Requests, especially if you need to send many requests over multiple connections.

Another great benefit is that cURL supports the most common internet protocols, including HTTP(S) (POST, GET, PUT), FTP(S), IMAP, MQTT, SMB, and POP3. It also works on most operating systems.

Compared to Requests, cURL has more features: for example, more authentication options, the ability to use several TLS backends, and others.

A word of warning: cURL has a steep learning curve, and even the creators themselves recommend it to advanced developers. But don’t let that scare you. Once you get familiar with cURL, it will get easier.

Use cURL with Python: a Step-by-Step Guide

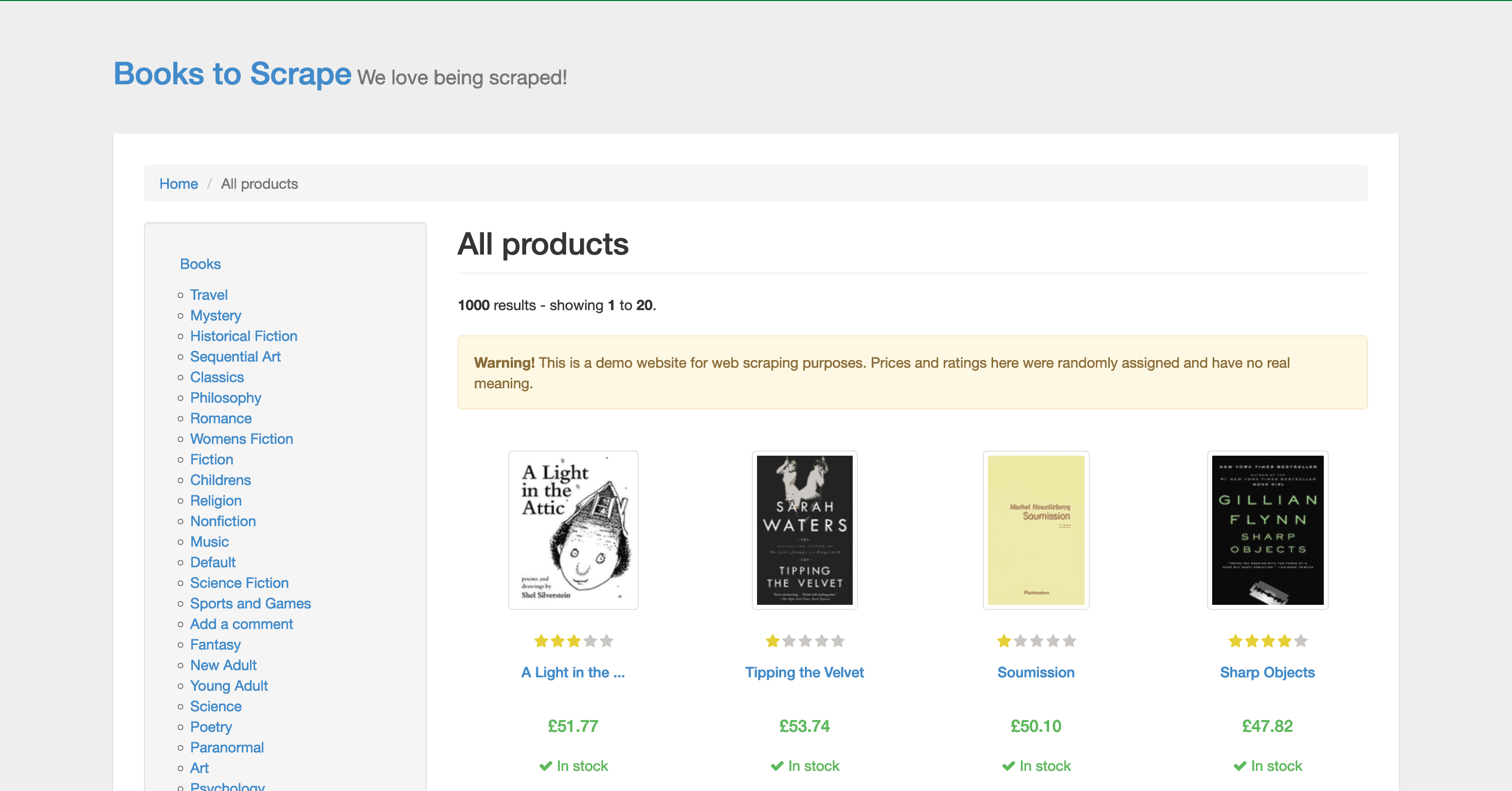

In this real-life example, we chose to scrape book information from books.toscrape.com. It’s a web scraping sandbox designed for such purposes. The website has 20 books per page and a total of 1,000 books. Imagine scraping everything by hand – it would take ages.
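The numbers above imply 50 listing pages (1,000 books at 20 per page). As a rough sketch, the page URLs could be generated as follows. The `catalogue/page-N.html` pattern is an assumption about how the site paginates and is not covered in this tutorial, and `listing_page_urls` is a hypothetical helper name.

```python
import math

BASE_URL = "http://books.toscrape.com/"
TOTAL_BOOKS = 1000
BOOKS_PER_PAGE = 20


def listing_page_urls(total=TOTAL_BOOKS, per_page=BOOKS_PER_PAGE):
    """Return one URL per listing page.

    Assumption: listing pages follow the pattern catalogue/page-N.html.
    """
    page_count = math.ceil(total / per_page)
    return [f"{BASE_URL}catalogue/page-{n}.html" for n in range(1, page_count + 1)]


urls = listing_page_urls()
print(len(urls))
print(urls[0])
```

The rest of this tutorial scrapes only the first page; a list like this would be the starting point for looping over all of them.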

This tutorial will show you how to collect the title, price, rating, and availability using cURL, specifically the PycURL module. In addition, we’ll show you how to parse the page with Beautiful Soup, a Python HTML parser, and write the information into a CSV file.

Prerequisites

- Python 3: install the latest version of Python on your computer. Refer to the official website.

- Beautiful Soup: add Beautiful Soup with pip in your operating system’s terminal: pip install beautifulsoup4.

- PycURL: add PycURL with pip install pycurl. If you want to use PycURL on Windows, you have to build it from source; refer to the official website.

- libcurl: if you’re a Linux or Mac user, install libcurl on the system for PycURL to work.

How to Do a cURL Request with Python

Step 1. The first thing we’ll need to do is import Beautiful Soup, PycURL, and BytesIO. The latter provides an in-memory buffer that will hold the downloaded response.

from bs4 import BeautifulSoup

import pycurl

from io import BytesIO

Step 2. Pass the URL you want to scrape. In this case, we’ll be using books.toscrape.com

url = "http://books.toscrape.com/"

Step 3. Create a pycurl instance. Then, set the request options.

buffer = BytesIO()

curl = pycurl.Curl()

curl.setopt(curl.URL, url)

curl.setopt(curl.WRITEDATA, buffer)

Step 4. Make the request.

curl.perform()

Close the connection, then read the response from the buffer:

curl.close()

response = buffer.getvalue()

print (response)
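The printed response is a bytes object, and the steps above don't check whether the request succeeded. A hedged sketch of one way to add a status check, a timeout, and decoding is shown below. The names `fetch_text` and `decode_body` are hypothetical, the 30-second timeout is an arbitrary choice, and UTF-8 is assumed as the page encoding.

```python
from io import BytesIO


def decode_body(body, encoding="utf-8"):
    """Decode response bytes to text, replacing bytes that are invalid in the encoding."""
    return body.decode(encoding, errors="replace")


def fetch_text(url, timeout=30):
    """Return (status_code, text) for url, or raise RuntimeError on a transfer error."""
    import pycurl  # imported lazily so decode_body can be used on its own

    buffer = BytesIO()
    curl = pycurl.Curl()
    curl.setopt(pycurl.URL, url)
    curl.setopt(pycurl.WRITEDATA, buffer)
    curl.setopt(pycurl.FOLLOWLOCATION, True)  # follow HTTP redirects
    curl.setopt(pycurl.TIMEOUT, timeout)      # give up after this many seconds
    try:
        curl.perform()
        # the status code has to be read before the handle is closed
        status = curl.getinfo(pycurl.RESPONSE_CODE)
    except pycurl.error as exc:
        raise RuntimeError(f"Request to {url} failed: {exc}") from exc
    finally:
        curl.close()
    return status, decode_body(buffer.getvalue())


print(decode_body(b"In stock"))
```

Beautiful Soup accepts bytes as well as text, so decoding is optional for the parsing steps that follow; it mainly makes the printed output easier to read.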

How to Parse the HTML You’ve Downloaded

Step 1. Now, let’s extract the data with Beautiful Soup. First, you’ll need to create a Beautiful Soup object for the page you scraped.

soup = BeautifulSoup(response , "html.parser")

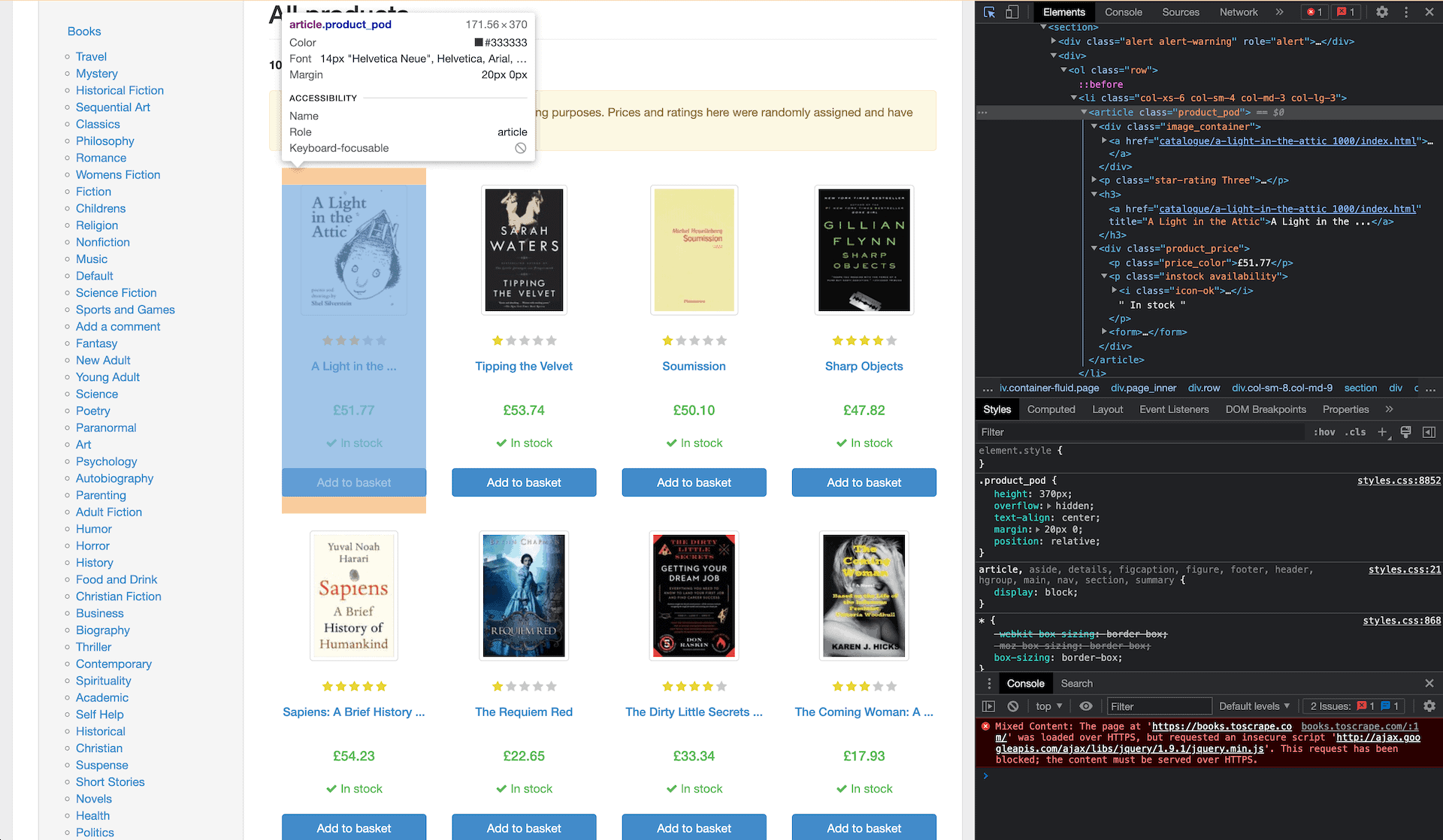

Step 2. Then, you need to find where the data points we’ll scrape are located (the book title, price, rating, and availability). Inspect the page by right-clicking on the page. The data is under a class called product_pod:

Step 3. Create an empty list. Each book will be stored in it as a dictionary.

books = []

1) The first page has 20 books. Create a loop over the product_pod elements. The lines in the following sub-steps belong inside this loop, so they must be indented under it:

for element in soup.find_all(class_='product_pod'):

2) Then, extract the book title. You’ll find it in the h3 element, under the a tag. Calling .string on h3 returns only the link text and leaves out unnecessary data like the URL.

    book_title = element.h3.string

If you want more details later, you can also build the URL for the book from the link’s href.

    book_url = url + element.h3.a['href']
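Plain concatenation works here because the link is relative to the page being scraped. As a small sketch, `urljoin` from the standard library resolves a relative link against whichever page it came from. The inner-page URL and the href values below are illustrative assumptions, not output captured from the site.

```python
from urllib.parse import urljoin

page_url = "http://books.toscrape.com/"
href = "catalogue/a-light-in-the-attic_1000/index.html"  # example relative link

# Same result as url + href when the page is the site root
print(urljoin(page_url, href))

# Hypothetical inner listing page, where simple concatenation would give a wrong URL
inner_page = "http://books.toscrape.com/catalogue/page-2.html"
inner_href = "a-light-in-the-attic_1000/index.html"
print(urljoin(inner_page, inner_href))
```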

3) Now, let’s extract the book price, which is under the class price_color, and strip the pound sign.

    book_price = element.find(class_='price_color').string.replace('£','')
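The price is still a string at this point. If you want to do arithmetic on it, one option is to convert it to a `Decimal`, sketched below. The `parse_price` helper is a hypothetical name and the sample prices are made up for illustration.

```python
from decimal import Decimal


def parse_price(text):
    """Convert a price string such as "£51.77" to a Decimal."""
    return Decimal(text.replace("£", "").strip())


# Sum two illustrative prices without floating-point rounding issues
total = parse_price("£51.77") + parse_price("£53.74")
print(total)
```

`Decimal` is used here instead of `float` so that sums of prices stay exact to the penny.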

4) The ratings are under the p tag. However, the rating is part of the class name itself, so it gets a bit tricky here. The class attribute holds two names, star-rating and the rating word (for example, One), and you only need the second. You can get around this by reading the class as a list and taking its second item.

    # the class has two names: star-rating and the rating word (e.g. 'One'), so we only take the second one
    book_rating = element.p['class'][1]
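The rating extracted this way is a word such as One or Three. If a number is more convenient, a lookup table is one simple way to convert it. This is a sketch: `RATING_WORDS` and `rating_to_int` are hypothetical names, and the mapping assumes the site uses the words One through Five.

```python
RATING_WORDS = {"One": 1, "Two": 2, "Three": 3, "Four": 4, "Five": 5}


def rating_to_int(class_list):
    """Return the numeric rating from a class list such as ["star-rating", "Three"].

    Returns None if no known rating word is present.
    """
    for name in class_list:
        if name in RATING_WORDS:
            return RATING_WORDS[name]
    return None


print(rating_to_int(["star-rating", "Three"]))
```

Searching the whole list, rather than indexing position 1, means the helper still works if the class names appear in a different order.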

5) The last data point is the stock availability, under the class instock. Instead of find, we’ll use a CSS selector, which is a more direct way to locate the element. We’ll get the text and strip away unnecessary characters like blank spaces.

    book_stock = element.select_one(".instock").get_text().strip()
    # same as:
    # book_stock = element.find(class_="instock").get_text().strip()
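To try the extraction steps above without making a request, you can run them against a small HTML fragment. The fragment below is a simplified, hand-written stand-in for one product_pod element, not a copy of the live page, so treat its exact structure as an assumption.

```python
from bs4 import BeautifulSoup

# Simplified, hand-written stand-in for a single product_pod element
SAMPLE_HTML = """
<article class="product_pod">
  <p class="star-rating Three"></p>
  <h3><a href="catalogue/a-light-in-the-attic_1000/index.html" title="A Light in the Attic">A Light in the ...</a></h3>
  <div class="product_price">
    <p class="price_color">£51.77</p>
    <p class="instock availability">
      In stock
    </p>
  </div>
</article>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
element = soup.find(class_="product_pod")

link_text = element.h3.string       # visible link text (shortened in this sample)
full_title = element.h3.a["title"]  # title attribute of the link
price = element.find(class_="price_color").string.replace("£", "")
rating = element.p["class"][1]      # second class name of the first p tag
stock = element.select_one(".instock").get_text().strip()

print(link_text, full_title, price, rating, stock, sep=" | ")
```

In this sample the link text is shortened while the title attribute holds the full name. If the live page does the same for long titles, reading `element.h3.a["title"]` would be a way to get the complete title.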

Step 4. Print the values to double-check them.

    print(book_title)
    print(book_url)
    print(book_price)
    print(book_rating)
    print(book_stock)

How to Export the Output to a CSV

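Step 1. Now, let’s append the book’s details to the books list. This still happens inside the loop, once per book.

    books.append({
        'title': book_title,
        'price': book_price,
        'rating': book_rating,
        'stock': book_stock,
        'url': book_url
    })

After the loop finishes, print the list to check it:

print(books)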

Step 2. Then, write the results to a CSV file. This example doesn’t write a header row.

with open("books_output.csv", "a") as f:

Step 3. Opening the file with “a” appends to it if it already exists. Alternatively, you can use “w” to overwrite the file if it already exists. Inside the with block, loop over the books and write one line per book:

    for book in books:
        f.write(f"{book['title']},{book['price']},{book['rating']},{book['stock']},{book['url']}\n")
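Writing rows with an f-string is fine for simple values, but a title that contains a comma would be split across columns. As an alternative sketch, Python's built-in `csv` module quotes such values automatically. The `write_books` helper is a hypothetical name, and the sample record is made up for illustration rather than taken from scraped output.

```python
import csv
import io

FIELDNAMES = ["title", "price", "rating", "stock", "url"]


def write_books(books, file_obj, header=True):
    """Write a list of book dicts as CSV rows to an open text file object."""
    writer = csv.DictWriter(file_obj, fieldnames=FIELDNAMES)
    if header:
        writer.writeheader()
    writer.writerows(books)


# Illustrative record, not real scraped output
sample = [{
    "title": "Example Title, With a Comma",
    "price": "10.00",
    "rating": "Three",
    "stock": "In stock",
    "url": "http://books.toscrape.com/catalogue/example_1/index.html",
}]

# An in-memory buffer stands in for a real file here
out = io.StringIO()
write_books(sample, out)
print(out.getvalue())
```

To write to disk instead, you could pass a file opened with `open("books_output.csv", "w", newline="", encoding="utf-8")`; the `newline=""` argument is what the `csv` module documentation recommends for files it writes.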

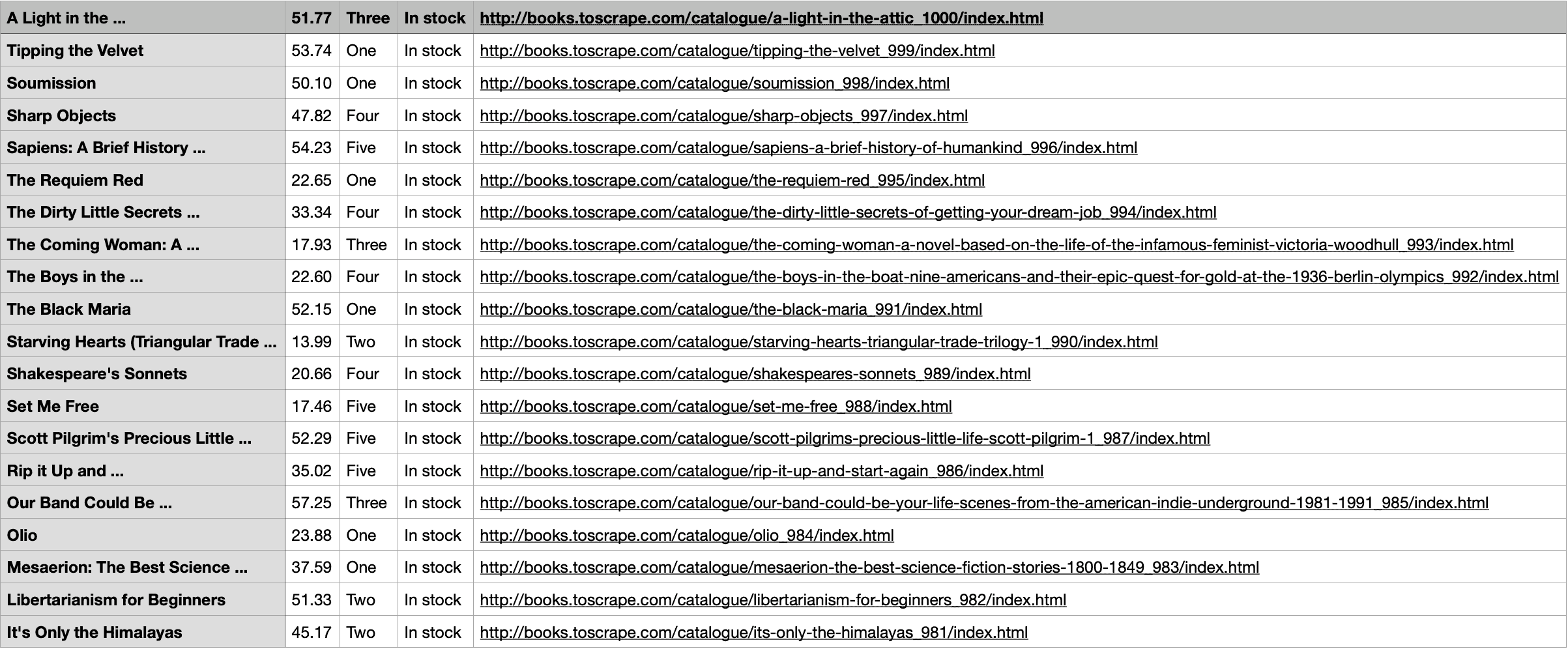

The output:

Here’s the complete script:

from bs4 import BeautifulSoup
import pycurl
from io import BytesIO

url = "http://books.toscrape.com/"

# download the page into an in-memory buffer
buffer = BytesIO()
curl = pycurl.Curl()
curl.setopt(curl.URL, url)
curl.setopt(curl.WRITEDATA, buffer)
curl.perform()
curl.close()

response = buffer.getvalue()
print(response)

soup = BeautifulSoup(response, "html.parser")

# books will be a list of dicts
books = []

for element in soup.find_all(class_='product_pod'):
    book_title = element.h3.string
    # getting the url for the book if we ever want to get more details
    book_url = url + element.h3.a['href']
    # getting the price and ridding ourselves of the pound sign
    book_price = element.find(class_='price_color').string.replace('£','')
    # getting the rating
    # the class has two names: star-rating and the rating word (e.g. 'One'), so we only take the second one
    book_rating = element.p['class'][1]
    # finding availability
    # can also use css selector instead of find
    book_stock = element.select_one(".instock").get_text().strip()
    # same as:
    # book_stock = element.find(class_="instock").get_text().strip()

    # printing out to double check
    print(book_title)
    print(book_url)
    print(book_price)
    print(book_rating)
    print(book_stock)

    # let's append it to the books list now
    books.append({
        'title': book_title,
        'price': book_price,
        'rating': book_rating,
        'stock': book_stock,
        'url': book_url
    })

print(books)

# write it to a csv file
with open("books_output.csv", "a") as f:
    # not writing a header row in this case
    # if using "a" then you can open a file if it exists and append to it
    # can also use "w", then it would overwrite a file if it exists
    for book in books:
        f.write(f"{book['title']},{book['price']},{book['rating']},{book['stock']},{book['url']}\n")