Web Scraping with Beautiful Soup: An Easy Tutorial for Beginners with Python

A step-by-step guide to web scraping with Beautiful Soup.

What is Web Scraping with Beautiful Soup?

Web scraping with Beautiful Soup is the process of extracting data from HTML you've already downloaded and structuring the results for further use. Beautiful Soup is a Python library that parses HTML and XML documents into a tree of objects you can search and navigate, so you can select the data you need and extract it in an easy-to-read format. A typical workflow looks like this: an HTTP client such as Requests fetches the target web page, and then you create a Beautiful Soup object that lets you navigate through it. From there, you can extract HTML tags, their attributes, and any content inside them, and save the results in formats like CSV or JSON. So, technically, what Beautiful Soup does in a web scraping project is web parsing.

Why Choose Beautiful Soup for Web Scraping?

There are several good reasons for choosing Beautiful Soup:

- Easy to use. Even if you're new to the Python programming language, Beautiful Soup isn't difficult to learn. With just a few lines of code, you can build a basic scraper and structure the target data into a readable format.

- Powerful parsing capabilities. Beautiful Soup works with several HTML parsers: Python's built-in html.parser, plus lxml and html5lib, which you install separately. You can pick whichever suits your task: lxml is the fastest, while html5lib is the most lenient and parses pages the same way a web browser does.

- Light on resources. Beautiful Soup only parses markup; it doesn't render pages or run JavaScript, so it needs far less computing power than a headless browser such as Selenium.

- Works well with broken pages. Some web pages are poorly written, or their HTML is broken. Beautiful Soup tolerates malformed markup, still gives you usable results, and detects the page's encoding automatically.

- Large community. Beautiful Soup is a widely used open-source library, so you won't lack support on Stack Overflow or Discord channels.
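To see the parser choice and encoding detection in action, here is a small sketch that feeds Beautiful Soup raw bytes (as an HTTP client would return them) containing a declared UTF-8 charset, an accented character, and a tag that is never closed. It uses html.parser because that one needs no extra installation; the markup is made up for illustration.

```python
from bs4 import BeautifulSoup

# Made-up raw bytes: a declared charset, a non-ASCII character (é as UTF-8),
# and a final <p> tag that is never closed.
raw = (b'<html><head><meta charset="utf-8"></head>'
       b'<body><p>Caf\xc3\xa9 menu</p><p>Unclosed paragraph')

# "html.parser" ships with Python. "lxml" or "html5lib" can be passed instead,
# but only after installing them (pip install lxml / pip install html5lib).
soup = BeautifulSoup(raw, "html.parser")

print(soup.original_encoding)  # the encoding Beautiful Soup used to decode the bytes
texts = [p.get_text() for p in soup.find_all("p")]
print(texts)
```

Passing bytes (such as response.content) rather than an already-decoded string is what lets Beautiful Soup do the encoding detection itself.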

Steps to Build a Beautiful Soup Web Scraper

Let's say you want to start web scraping using Beautiful Soup. These two steps will help you write your first script.

Step 1: Choose Your Web Scraping Tools for Downloading the Page

Beautiful Soup is a powerful parser, but on its own it won't fetch data or deal with dynamic content. That's why you'll need other Python web scraping libraries to build a fully working scraper.

First, you'll need an HTTP client to download web pages. There are multiple options available, like Requests, urllib3, or aiohttp. If you're not familiar with any of them, stick with Requests. It's easy to use, and you can build a simple web scraper with a few lines of code. The library is also very customizable: it supports custom headers and cookies, and it follows redirects automatically.

Second, if you need to scrape dynamic content, look into headless browser libraries like Selenium. Much of the web today uses JavaScript to load its content, and a simple HTTP client like Requests can't deal with client-side rendered websites like Twitter or Reddit.

And third, if you're serious about your project, get yourself rotating proxies to avoid IP address blocks. Proxies are intermediary servers that route your requests through a different IP address and location.

Step 2: Pick a Website and Review Web Scraping Guidelines

Now that you have the libraries ready, you need to pick a website to scrape. You can go two ways: 1) practice on dummy websites or 2) scrape real targets like Amazon or Google.

Several websites provide a safe environment for practicing web scraping skills. They're publicly available and can be scraped without infringing on copyright or privacy laws. You can choose from our list of recommended sandboxes to find the one that suits you best. If you don't have a particular project in mind, you can refer to our guide with Python project ideas for beginners and advanced users.

Scraping real targets, however, is challenging: webmasters use anti-bot measures such as IP address blocks and CAPTCHAs that can interrupt your scraper if you're not careful. And most importantly, always respect the website you're scraping: follow its robots.txt instructions, avoid sending too many requests, and don't scrape data behind a login (this can get you into serious legal trouble). You can find more tips in our list of web scraping best practices.

Beautiful Soup Web Scraping: A Step-by-Step Tutorial

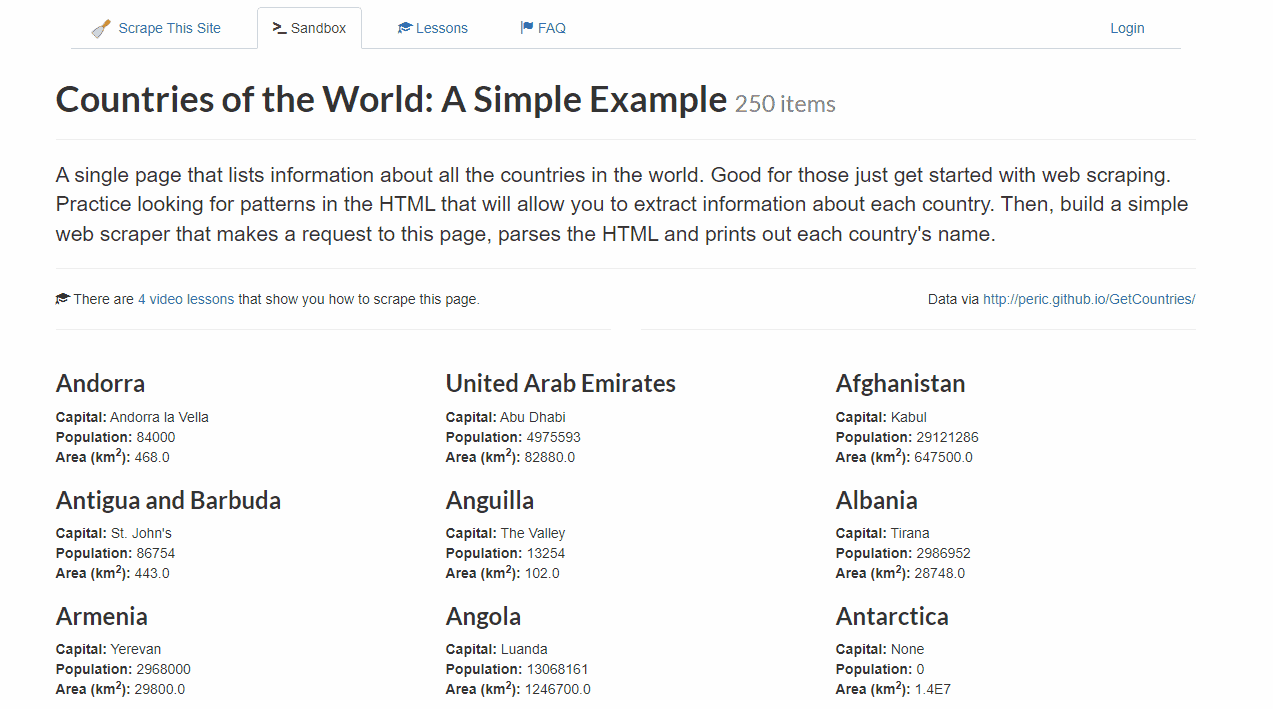

Imagine you're a traveler planning your next trip, and you want a list of all the countries from Scrapethissite.com. Gathering this information manually can be exhausting, so in this web scraping tutorial with Beautiful Soup, you'll build a scraper that does the work for you.
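Before scraping any target, it's worth checking its robots.txt file, as suggested in Step 2. Python's standard library includes urllib.robotparser for this. The sketch below parses a made-up set of rules offline, with example.com URLs and a hypothetical user-agent name; for a real site, you would call set_url() with its robots.txt address and then read() instead of parse().

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt rules, so the example runs without a network connection.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def check_robots(robots_lines, user_agent, urls):
    """Return the URLs robots.txt allows for user_agent, plus the crawl delay it requests."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    allowed = [url for url in urls if parser.can_fetch(user_agent, url)]
    return allowed, parser.crawl_delay(user_agent)  # delay is None if not specified


allowed, delay = check_robots(
    SAMPLE_ROBOTS_TXT.splitlines(),
    "my-scraper",  # hypothetical user-agent name
    ["https://example.com/pages/simple/", "https://example.com/private/data"],
)
print(allowed, delay)
```

If a crawl delay is set, waiting that many seconds between requests (for example with time.sleep) is a simple way to avoid sending too many requests.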

Prerequisites

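- Python 3. Many macOS and Linux systems come with Python 3 preinstalled; you can check by running python3 --version (or python --version on Windows) in your terminal. If it's missing, download the latest version from Python.org.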

- Requests. Install it by running pip install requests in your operating system's terminal.

- Beautiful Soup. Install it by running pip install beautifulsoup4.

- Code editor. You can pick any code editor that you're comfortable working with: Visual Studio Code, Notepad++, or your operating system's text editor.


Importing the Libraries

Import the Beautiful Soup and Requests libraries.

```python
from bs4 import BeautifulSoup
import requests
```

Additionally, import Python's built-in csv module to export data into a CSV file.

```python
import csv
```

Getting the HTML Content

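Now, download Scrapethissite's page listing all the countries of the world.

```python
url = "http://www.scrapethissite.com/pages/simple/"

response = requests.get(url, timeout=10)
response.raise_for_status()  # stop with an error if the server returned a 4xx/5xx status
```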
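The request above uses Requests' defaults. As mentioned in Step 1, Requests also supports custom headers and cookies. Here is a minimal sketch using a requests.Session, which sends its headers and cookies with every request it makes. The User-Agent string, the cookie, and the commented-out proxy are hypothetical placeholders, and the last lines only prepare the request so you can inspect it without touching the network.

```python
import requests

URL = "http://www.scrapethissite.com/pages/simple/"

session = requests.Session()
# Headers and cookies set on the session are sent with every request it makes.
# Both values below are hypothetical placeholders.
session.headers.update({"User-Agent": "country-scraper/0.1 (you@example.com)"})
session.cookies.set("preferred_lang", "en")
# session.proxies.update({"http": "http://user:pass@proxy.example:8080"})  # hypothetical proxy


def fetch(url):
    """Download a page (GET follows redirects by default) and fail loudly on HTTP errors."""
    response = session.get(url, timeout=10)
    response.raise_for_status()
    return response


# Inspect what would be sent, without making a network call.
prepared = session.prepare_request(requests.Request("GET", URL))
print(prepared.headers["User-Agent"])
print(prepared.headers.get("Cookie"))
```

Calling fetch(URL) would then download the page with these settings applied; reusing one session also lets Requests keep the connection open across multiple requests to the same site.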

Parsing the HTML with Beautiful Soup

Let's move on to structuring the data by creating a Beautiful Soup object for the page you've downloaded. It lets you access all the information you want to get.

```python
soup = BeautifulSoup(response.content, 'html.parser')
```

Now that you have the parsed HTML content, you can start looking for the data points you want to extract. In this case, let's scrape each country's name, capital, population, and area.

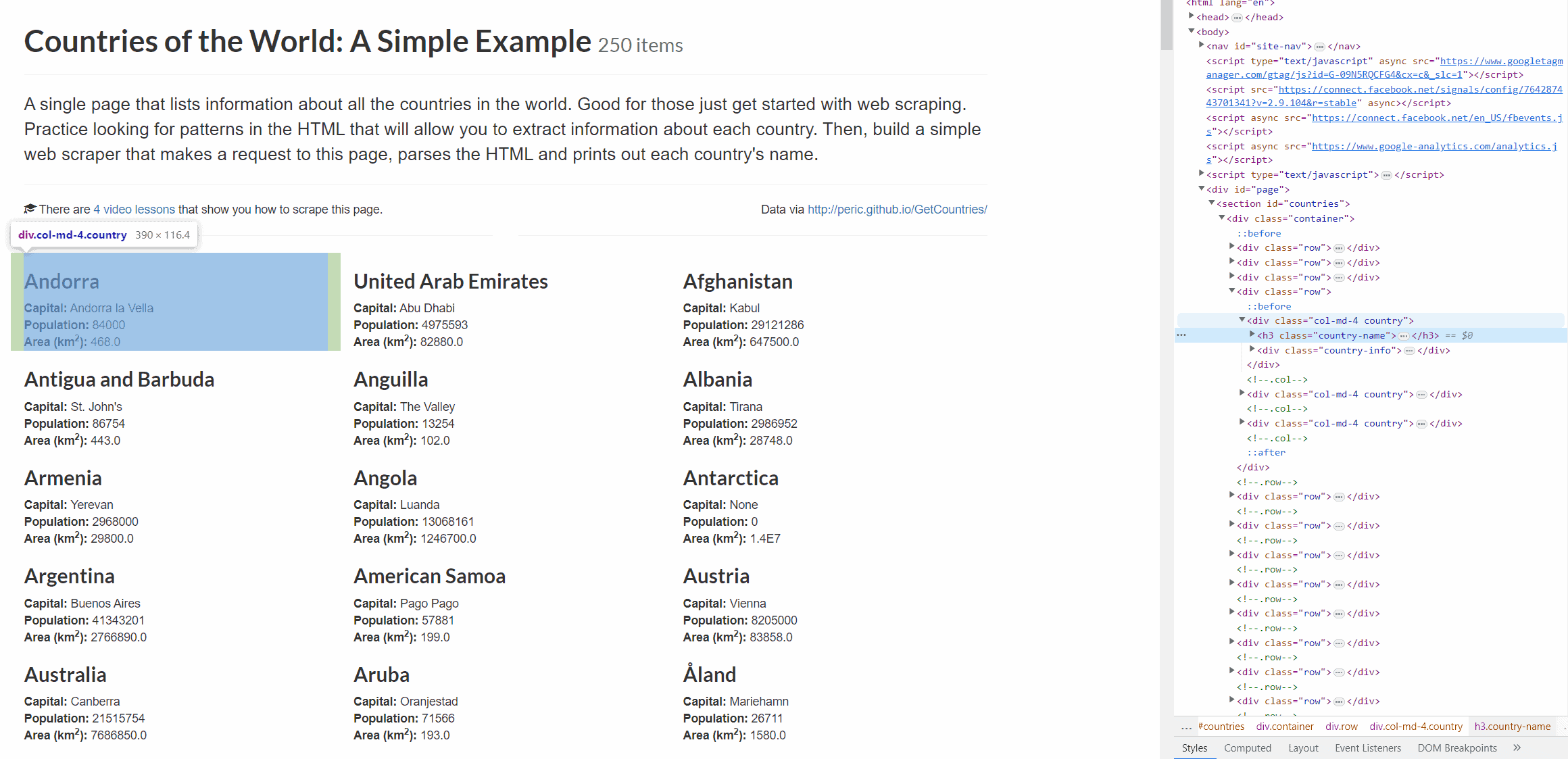

To find the elements, right-click anywhere on the page and choose Inspect, or press Ctrl+Shift+I (Cmd+Option+I on macOS). As you can see, each country's data sits in a div element with the class country.

```python
divs = soup.find_all("div", class_="country")
```
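If you prefer CSS selectors, Beautiful Soup's select() method returns the same elements as find_all() here. This sketch runs both on a small hypothetical fragment shaped like the countries page, with placeholder names; select() relies on the soupsieve package, which is installed together with beautifulsoup4.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment shaped like the countries page, with placeholder values.
SAMPLE_HTML = """
<div class="country">
  <h3 class="country-name">Countryland</h3>
  <span class="country-capital">Capital City</span>
</div>
<div class="country">
  <h3 class="country-name">Otherland</h3>
  <span class="country-capital">Other City</span>
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# find_all() filters by tag name and class; select() does the same with a CSS selector.
divs_find_all = soup.find_all("div", class_="country")
divs_select = soup.select("div.country")

# A CSS selector can also reach nested elements in one step.
names = [h3.get_text(strip=True) for h3 in soup.select("div.country > h3.country-name")]
print(len(divs_find_all), len(divs_select), names)
```

Note that class_="country" matches the class token country exactly, so elements with only the class country-name are not picked up.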

If your target page includes images, you can locate the img tags (for example, by class) and read their src attributes.
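A minimal sketch of locating images by class and collecting their src attributes. The markup, class names, and paths are hypothetical; on a real page, inspect the img tags to find the right class.

```python
from bs4 import BeautifulSoup

# Hypothetical markup; real pages use their own class names and paths.
SAMPLE_HTML = """
<div class="gallery">
  <img class="flag" src="/static/flags/a.png" alt="Flag A">
  <img class="flag" src="/static/flags/b.png" alt="Flag B">
  <img class="logo" src="/static/logo.png" alt="Site logo">
</div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# find_all() narrows the images down by class; .get() reads an attribute
# and returns None instead of raising an error if the attribute is missing.
flag_images = soup.find_all("img", class_="flag")
image_sources = [img.get("src") for img in flag_images]
print(image_sources)
```

The src values are often relative paths, so you would resolve them against the page URL before downloading the image files.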

Similarly, you can extract a list of URLs from an HTML document by reading the href attribute of its a tags.
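A minimal sketch that collects every link on a page and turns relative href values into absolute URLs with urllib.parse.urljoin. The markup and the base URL are hypothetical; in practice, the base URL is the address of the page you downloaded (response.url in Requests).

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = "https://example.com/pages/"  # hypothetical address of the downloaded page

SAMPLE_HTML = """
<nav>
  <a href="/pages/simple/">Countries</a>
  <a href="forms/">Hockey teams</a>
  <a name="top">Not a link</a>
  <a href="https://www.python.org/">External</a>
</nav>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# href=True keeps only <a> tags that actually have an href attribute;
# urljoin() resolves relative paths and leaves absolute URLs unchanged.
links = [urljoin(BASE_URL, a["href"]) for a in soup.find_all("a", href=True)]
print(links)
```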

Step 2. To write a CSV file later, you'll need somewhere to store the scraped information. Create an empty list that will hold one dictionary per country.

```python
countries_dict = []  # a list of dictionaries, one per country
```

Step 3. Now, loop through each div with the class country that you've found and extract the country name, capital, population, and area from it.

```python
for div in divs:
    ...  # the extraction code from the steps below goes here, inside the loop
```

Note that some values may be empty or missing, so check whether each element was found and assign an empty string to the corresponding variable if it wasn't.

One way to get this information is the Beautiful Soup find() method, which selects each element by its tag and class.

1) Extract the country name from the h3 tag with the class country-name.

```python
country_elem = div.find("h3", class_="country-name")
if country_elem is not None:
    country = country_elem.text.strip()
else:
    country = ""
```

2) Now, get the capital name from the span tag with the class country-capital.

```python
capital_elem = div.find("span", class_="country-capital")
if capital_elem is not None:
    capital = capital_elem.text.strip()
else:
    capital = ""
```

3) The population is in the span tag with the class country-population.

```python
population_elem = div.find("span", class_="country-population")
if population_elem is not None:
    population = population_elem.text.strip()
else:
    population = ""
```

4) Then, do the same to get the area from the span tag with the class country-area.

```python
area_elem = div.find("span", class_="country-area")
if area_elem is not None:
    area = area_elem.text.strip()
else:
    area = ""
```
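The four blocks above repeat the same find, check, and strip pattern. One way to shorten the loop is a small helper function; text_or_empty is a hypothetical name, and the sample markup uses placeholder values. It keeps the .text.strip() behavior, so the results match the step-by-step version.

```python
from bs4 import BeautifulSoup


def text_or_empty(parent, tag, css_class):
    """Return the stripped text of the first matching element inside parent, or "" if none is found."""
    elem = parent.find(tag, class_=css_class)
    return elem.text.strip() if elem is not None else ""


# Placeholder markup: this country block has no capital or area span.
sample = BeautifulSoup(
    '<div class="country"><h3 class="country-name"> Countryland </h3>'
    '<span class="country-population">1000</span></div>',
    "html.parser",
)
div = sample.find("div", class_="country")

country = text_or_empty(div, "h3", "country-name")
capital = text_or_empty(div, "span", "country-capital")  # missing, so ""
population = text_or_empty(div, "span", "country-population")
area = text_or_empty(div, "span", "country-area")  # missing, so ""
print(country, capital, population, area)
```

Inside the tutorial's loop, the four calls would replace the four if/else blocks one-for-one.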

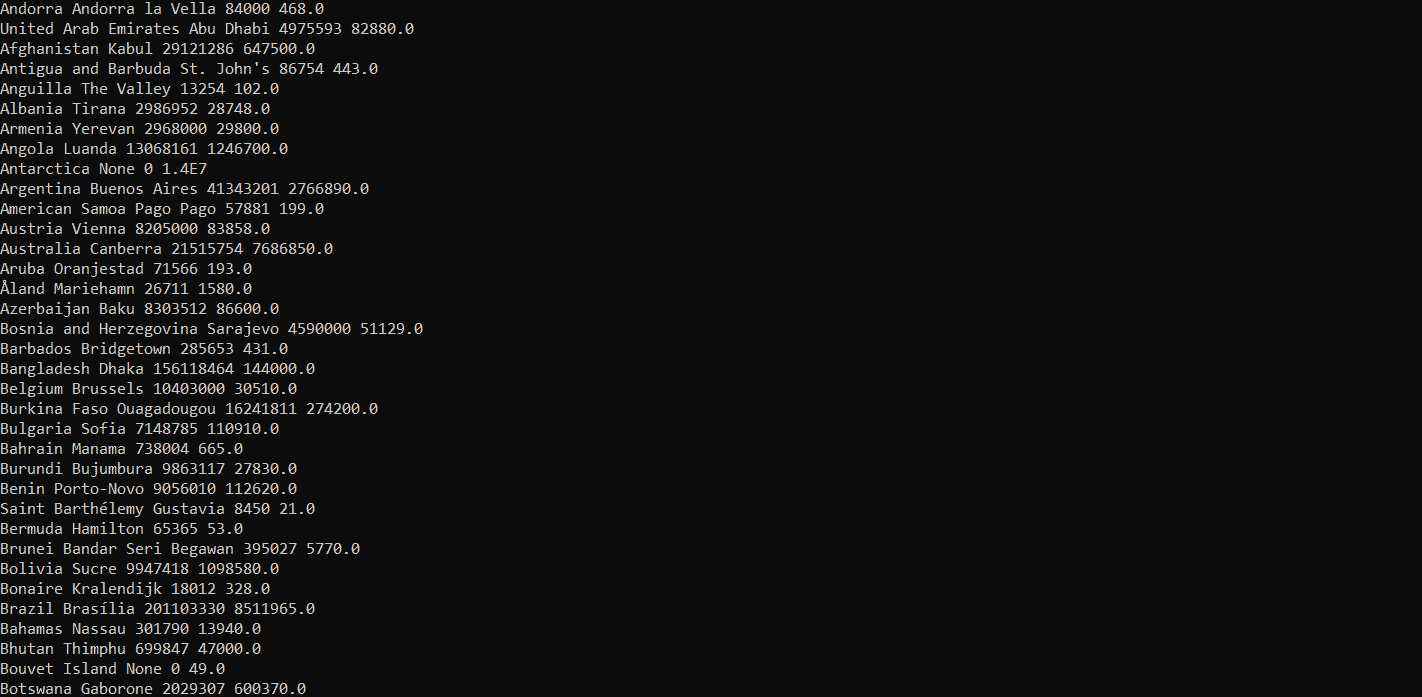

Step 4. Finally, print the results (still inside the loop) to check that your code works.

```python
print(country, capital, population, area)
```

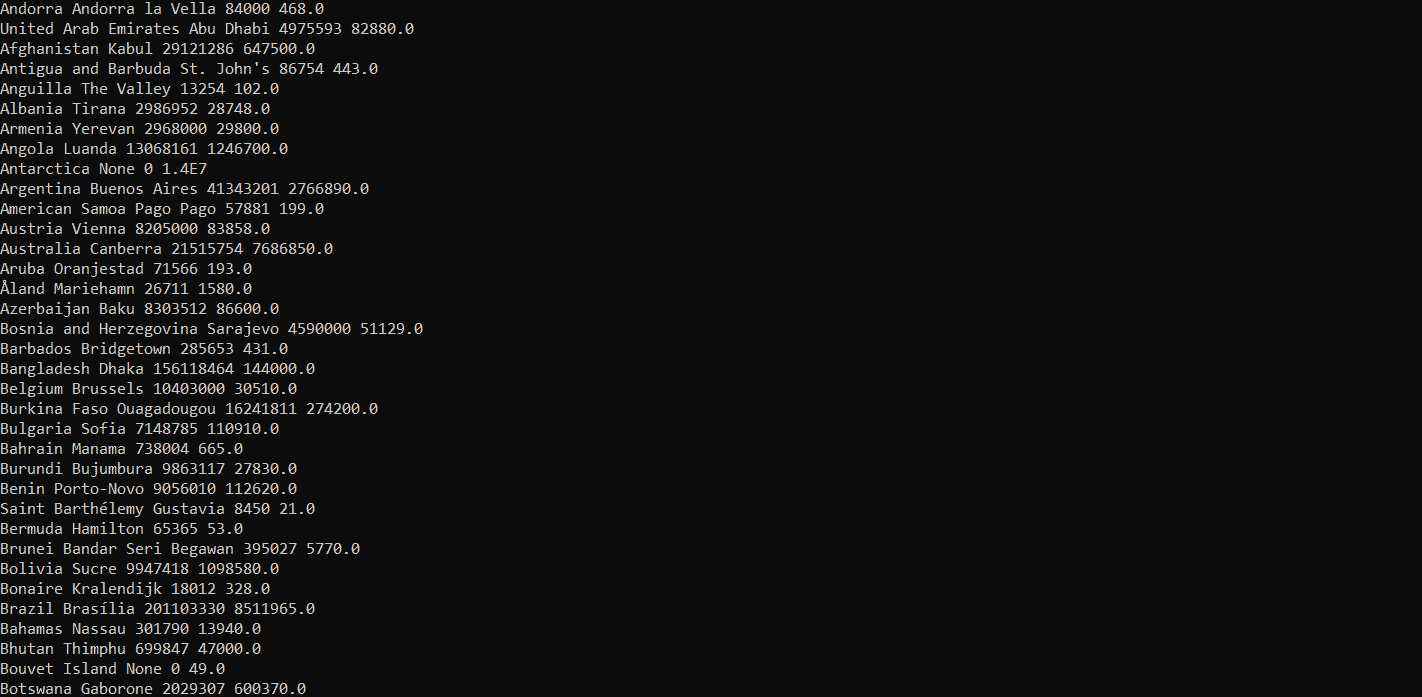

Here’s the output:

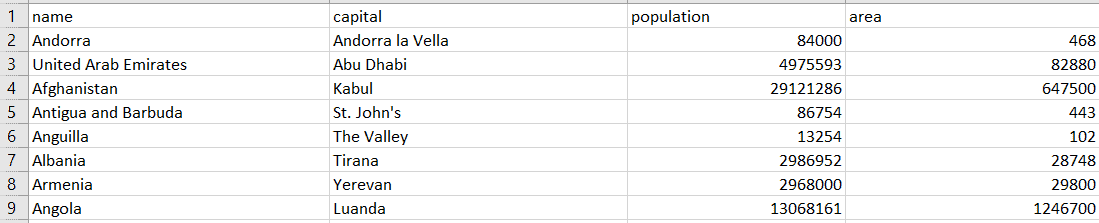

Writing Data to a CSV

Even though you've scraped all the necessary information, it isn't yet in a form that's convenient for further analysis. Let's export everything to a CSV file, which you can open in Excel or any other spreadsheet program.

Step 1. Inside the loop, add each country's information to the list as a dictionary.

```python
countries_dict.append({
    "name": country,
    "capital": capital,
    "population": population,
    "area": area,
})
```

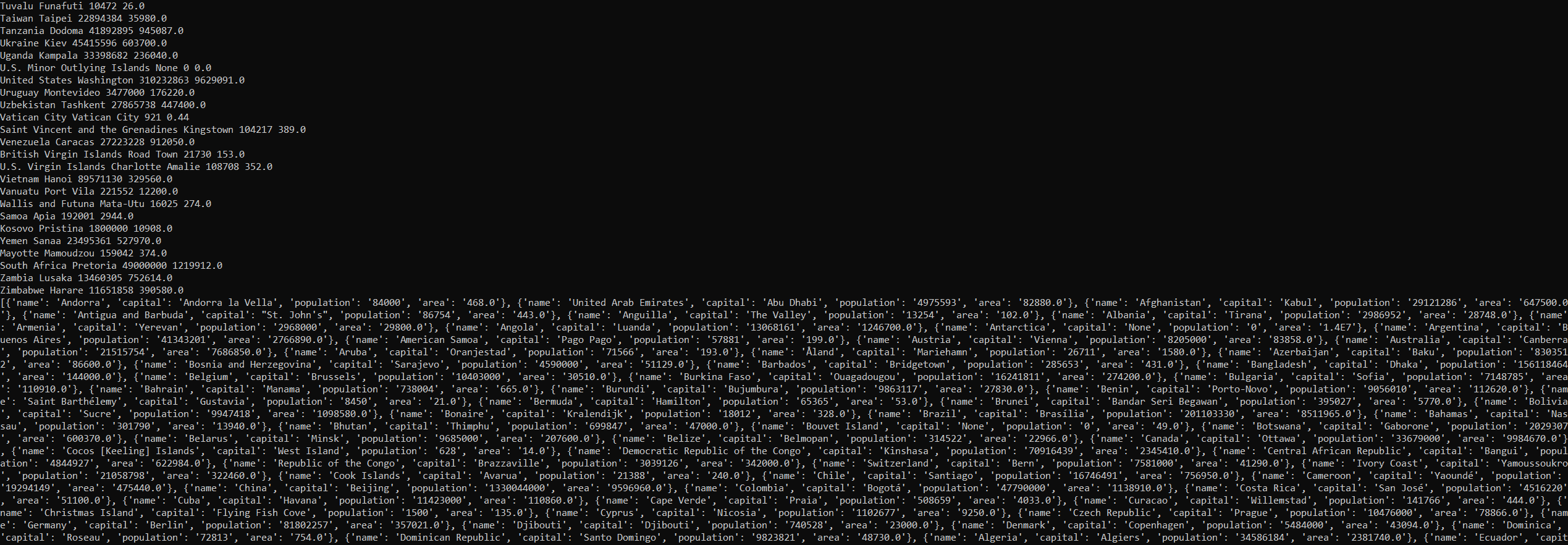

Step 2. After the loop, print the list to check its contents.

```python
print(countries_dict)
```

Step 3. List the dictionary field names in the order you want them to appear as columns in the CSV file.

```python
field_names = ["name", "capital", "population", "area"]
```

Step 4. Choose a name for your output file.

```python
output_filename = "country_info.csv"
```

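Step 5. Open the file for writing with UTF-8 encoding, then write to it using the csv module's DictWriter.

```python
# newline='' stops the csv module from writing blank lines between rows on Windows
with open(output_filename, 'w', newline='', encoding="utf-8") as f_output:
    writer = csv.DictWriter(f_output, fieldnames=field_names)
    writer.writeheader()  # first row: the column names
    writer.writerows(countries_dict)  # one row per country
```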
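To check the export without leaving Python, you can read the rows back with csv.DictReader. This sketch writes to an in-memory io.StringIO buffer instead of country_info.csv so it runs on its own, and uses placeholder rows in place of the scraped countries_dict.

```python
import csv
import io

field_names = ["name", "capital", "population", "area"]
# Placeholder rows standing in for countries_dict; the second has missing values.
rows = [
    {"name": "Countryland", "capital": "Capital City", "population": "1000", "area": "10.0"},
    {"name": "Otherland", "capital": "", "population": "", "area": ""},
]

buffer = io.StringIO(newline="")  # stands in for the opened CSV file
writer = csv.DictWriter(buffer, fieldnames=field_names)
writer.writeheader()
writer.writerows(rows)

buffer.seek(0)  # rewind before reading back
read_back = list(csv.DictReader(buffer))
print(read_back == rows)
```

Keep in mind that DictReader returns every value as a string, so convert the population and area columns with int() or float() before doing arithmetic on them.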

And that’s it. You have nicely structured results in a CSV file.

Here’s the full script:

```python
from bs4 import BeautifulSoup
import requests
import csv

url = "http://www.scrapethissite.com/pages/simple/"

response = requests.get(url, timeout=10)
response.raise_for_status()  # stop early on 4xx/5xx responses

soup = BeautifulSoup(response.content, 'html.parser')

# find all country blocks instead of all rows
divs = soup.find_all("div", class_="country")

# we need somewhere to store the info for the CSV:
# a list that will hold one dictionary per country
countries_dict = []

# looping through the country blocks
for div in divs:
    country_elem = div.find("h3", class_="country-name")
    if country_elem is not None:
        country = country_elem.text.strip()
    else:
        country = ""

    capital_elem = div.find("span", class_="country-capital")
    if capital_elem is not None:
        capital = capital_elem.text.strip()
    else:
        capital = ""

    population_elem = div.find("span", class_="country-population")
    if population_elem is not None:
        population = population_elem.text.strip()
    else:
        population = ""

    area_elem = div.find("span", class_="country-area")
    if area_elem is not None:
        area = area_elem.text.strip()
    else:
        area = ""

    # printing the results
    print(country, capital, population, area)

    # adding the scraped country info to the list as a dictionary
    countries_dict.append({
        "name": country,
        "capital": capital,
        "population": population,
        "area": area,
    })

# printing out the list of dictionaries
print(countries_dict)

# field names of your dictionaries, in the order you want them to appear in the CSV
field_names = ["name", "capital", "population", "area"]

# filename for your output CSV
output_filename = "country_info.csv"

# opening the file to write and setting the encoding, then writing to it using the csv module
with open(output_filename, 'w', newline='', encoding="utf-8") as f_output:
    writer = csv.DictWriter(f_output, fieldnames=field_names)
    writer.writeheader()
    writer.writerows(countries_dict)
```
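The introduction mentioned JSON as another output format. A minimal sketch using Python's built-in json module, assuming you want the same list of dictionaries in a file called country_info.json (a hypothetical filename); the placeholder row stands in for the scraped countries_dict.

```python
import json

# Placeholder row standing in for countries_dict from the script above.
countries = [
    {"name": "Countryland", "capital": "Capital City", "population": "1000", "area": "10.0"},
]

output_filename = "country_info.json"
with open(output_filename, "w", encoding="utf-8") as f_output:
    # ensure_ascii=False writes non-ASCII names as-is instead of \u escapes;
    # indent=2 makes the file easier to read
    json.dump(countries, f_output, ensure_ascii=False, indent=2)
```

Unlike CSV, JSON keeps each country as a nested object, which is handy if you later add fields that hold lists or sub-objects.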

Advanced Web Scraping with Beautiful Soup

If you want to try other sandboxes, you can go to Toscrape and try extracting book titles, prices, stock data, authors, and other information. You can refer to our step-by-step tutorial, Web Scraping with Python: All You Need to Get Started.

Now that you know how to scrape a single web page, you should also practice your other web scraping skills. You won't be scraping sandboxes for long, and websites like Amazon or eBay spread large amounts of data across many pages.

Scrapethissite provides an extensive list of NHL team statistics going back to 1990, so it's time to deal with pagination: scraping multiple pages.
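A minimal pagination sketch. It assumes the hockey page is split into numbered pages reachable through a page_num query parameter, and that each team is a tr row with the class team containing td cells with the classes name and year; check both in your browser's Inspect panel before relying on them. The download function is passed in, so the paging logic can be tested without a network connection, and the max_pages cap stops the loop even if a page past the end isn't empty. scrape_pages and parse_team_rows are hypothetical helper names.

```python
import time

from bs4 import BeautifulSoup

# ASSUMPTION: pages are numbered through a page_num query parameter.
PAGE_URL = "https://www.scrapethissite.com/pages/forms/?page_num={}"


def parse_team_rows(html):
    """Return (team name, year) pairs from one page of the teams table."""
    soup = BeautifulSoup(html, "html.parser")
    rows = []
    # ASSUMPTION: each team is a <tr class="team"> with <td class="name"> and <td class="year">.
    for tr in soup.find_all("tr", class_="team"):
        name = tr.find("td", class_="name")
        year = tr.find("td", class_="year")
        rows.append((
            name.text.strip() if name is not None else "",
            year.text.strip() if year is not None else "",
        ))
    return rows


def scrape_pages(fetch_html, max_pages=30, delay=1.0):
    """Visit page 1, 2, 3, ... until a page has no team rows or max_pages is reached.

    fetch_html is any function that takes a URL and returns that page's HTML.
    """
    all_rows = []
    for page in range(1, max_pages + 1):
        rows = parse_team_rows(fetch_html(PAGE_URL.format(page)))
        if not rows:
            break  # an empty page means we've gone past the last one
        all_rows.extend(rows)
        time.sleep(delay)  # pause between requests to avoid overloading the server
    return all_rows
```

With Requests, a call could look like scrape_pages(lambda url: requests.get(url, timeout=10).text).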

But that's not the only challenge this page hides: all the data is nested in a table. Scraping tables is really useful, especially if you're into stocks or other numbers, and Beautiful Soup handles them well.
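A generic sketch for turning an HTML table into a list of dictionaries, assuming the first row holds the column headers (th or td cells) and every following row holds data cells. table_to_dicts is a hypothetical helper, and the sample table uses placeholder values, not the hockey page's real data.

```python
from bs4 import BeautifulSoup


def table_to_dicts(html):
    """Convert the first <table> in html into a list of {header: cell text} dicts."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table")
    if table is None:
        return []

    rows = table.find_all("tr")
    if not rows:
        return []

    # ASSUMPTION: the first row holds the column names.
    headers = [cell.get_text(strip=True) for cell in rows[0].find_all(["th", "td"])]

    records = []
    for tr in rows[1:]:
        cells = [td.get_text(strip=True) for td in tr.find_all("td")]
        if cells:  # skip rows without data cells
            records.append(dict(zip(headers, cells)))
    return records


# Placeholder table for illustration.
SAMPLE_TABLE = """
<table>
  <tr><th>Team Name</th><th>Year</th><th>Wins</th></tr>
  <tr><td> Team A </td><td>1990</td><td>40</td></tr>
  <tr><td>Team B</td><td>1990</td><td>35</td></tr>
</table>
"""

print(table_to_dicts(SAMPLE_TABLE))
```

Because each row becomes a dictionary, the result can go straight into the csv.DictWriter code from the tutorial. One design caveat: zip() silently drops extra cells when a row is longer than the header row, so check the column counts on messy tables.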

Modern websites include many complex elements, but Beautiful Soup can handle most of them. If you want to master this parser, you can find the full list of guides in our knowledge base.