How to find all ‘href’ attributes using Beautiful Soup

Important: we will use a real-life example in this tutorial, so you will need the requests and Beautiful Soup libraries installed (for example, with pip install requests beautifulsoup4).

Step 1. Let’s start by importing the BeautifulSoup class from the bs4 package.

from bs4 import BeautifulSoup

Step 2. Then, import the requests library.

import requests

Step 3. Get the source code of your target page. We will be using our homepage in this example.

r = requests.get("https://proxyway.com/")

A generic version might look like this:

r = requests.get("Your URL")

Step 4. Parse the HTML code into a BeautifulSoup object named soup.

soup = BeautifulSoup(r.content, "html.parser")

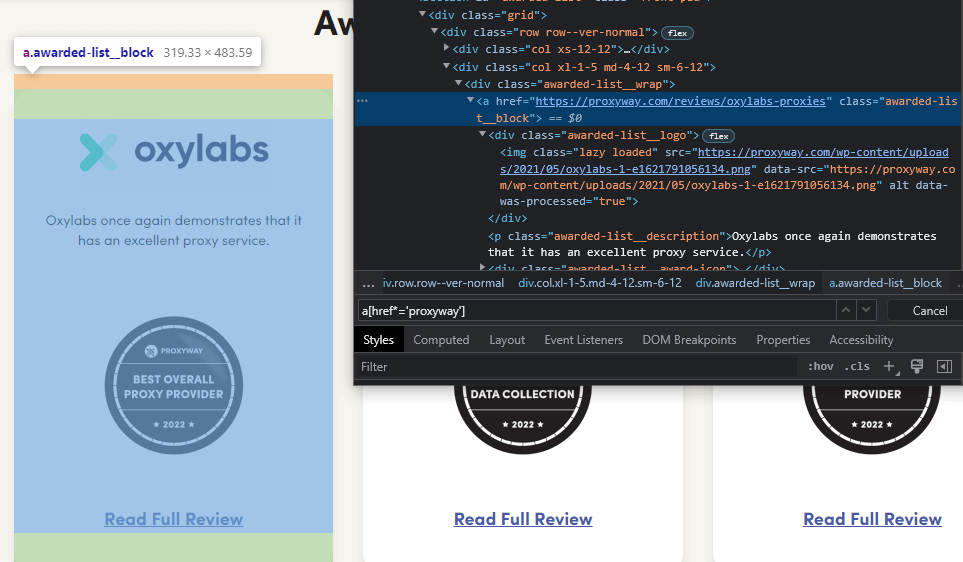
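Steps 3 and 4 can also be wrapped into one small helper. The sketch below is only an illustration: the function name fetch_soup and the 10-second timeout are assumptions, not part of the original script. It adds a timeout so the request cannot hang indefinitely, and calls raise_for_status() so that an error response (such as a 404) raises an exception instead of being parsed as if it were the target page.

```python
import requests
from bs4 import BeautifulSoup


def fetch_soup(url, timeout=10):
    """Download url and return its HTML parsed into a BeautifulSoup object.

    fetch_soup and the default timeout are hypothetical choices made for
    this example; adjust them to your own needs.
    """
    r = requests.get(url, timeout=timeout)
    # Raise requests.HTTPError for 4xx/5xx responses instead of parsing them.
    r.raise_for_status()
    return BeautifulSoup(r.content, "html.parser")
```

Calling fetch_soup("https://proxyway.com/") would then return the same kind of soup object that the following steps work with.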

Step 5. Then, find all the a tags that have an href attribute. Passing href=True matches only the tags where that attribute is present:

link_elements = soup.find_all("a", href=True)

NOTE: You can also filter by class. The parameter is named class_ because class is a reserved word in Python.

link_elements = soup.find_all("a", class_="some_class", href=True)
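To see what these two filters actually match, here is a small offline sketch. The HTML string and the class name nav are made up for illustration; only find_all, href=True and class_ come from the steps above.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup, used only for this illustration.
html = (
    '<a class="nav" href="/home">Home</a>'
    '<a class="nav" href="/blog">Blog</a>'
    '<a name="top">Anchor without href</a>'
    '<a href="https://example.com/">External</a>'
)
soup = BeautifulSoup(html, "html.parser")

# href=True keeps only the a tags that carry an href attribute.
all_links = soup.find_all("a", href=True)
# Adding class_ narrows the match to a tags with that class.
nav_links = soup.find_all("a", class_="nav", href=True)

all_hrefs = [a["href"] for a in all_links]
nav_hrefs = [a["href"] for a in nav_links]
print(all_hrefs)  # ['/home', '/blog', 'https://example.com/']
print(nav_hrefs)  # ['/home', '/blog']
```

The a tag that has only a name attribute is skipped in both cases, which is why indexing with a["href"] is safe here.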

Step 6. Create an empty dictionary to keep track of all the links you’ve found.

dict_of_links = {}

NOTE: Each link will be stored under the text found in its a tag, so the text is the key and the href is the value.

Step 7. Iterate through all the link_elements that you’ve scraped and, if a string exists for a particular element, put it into the dictionary.

for element in link_elements:
    if element.string:
        dict_of_links[element.string] = element['href']
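Two properties of this loop are worth knowing. First, element.string is None when an a tag contains more than one child (for example, an image followed by text), so such links are skipped. Second, links that share the same text overwrite each other, because dictionary keys are unique. The sketch below shows both effects on made-up markup and one possible alternative that uses get_text(strip=True) and collects a list of URLs per text; the names links_by_string and hrefs_by_text are hypothetical.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup, used only for this illustration.
html = (
    '<a href="/pricing">Pricing</a>'
    '<a href="/contact"><img src="icon.png"> Contact</a>'
    '<a href="/pricing-eu">Pricing</a>'
)
soup = BeautifulSoup(html, "html.parser")

links_by_string = {}  # same approach as the loop above
hrefs_by_text = {}    # alternative: every href seen for a given text

for element in soup.find_all("a", href=True):
    # .string is None for the "Contact" link: it has two children (img + text).
    if element.string:
        links_by_string[str(element.string)] = element["href"]

    # get_text() joins all nested text, so the "Contact" link is kept.
    text = element.get_text(strip=True)
    if text:
        hrefs_by_text.setdefault(text, []).append(element["href"])

print(links_by_string)  # {'Pricing': '/pricing-eu'}
print(hrefs_by_text)    # {'Pricing': ['/pricing', '/pricing-eu'], 'Contact': ['/contact']}
```

Whether you need the second form depends on the page: if link texts are unique and plain, the simpler dictionary from the tutorial is enough.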

Step 8. Let’s check if our code works by printing the dictionary.

print(dict_of_links)

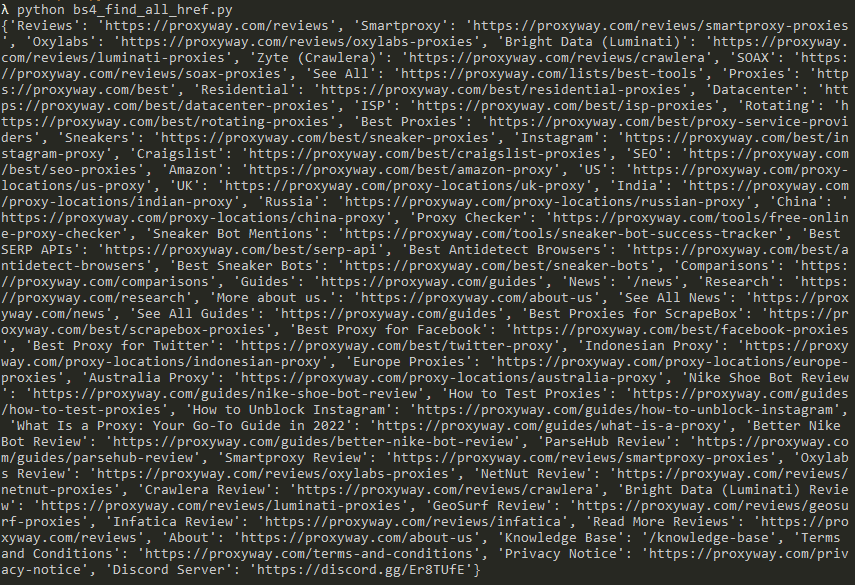

Results:

Congratulations, you’ve extracted all the URLs. Here’s the full script:

from bs4 import BeautifulSoup
import requests

r = requests.get("https://proxyway.com")
soup = BeautifulSoup(r.content, "html.parser")

link_elements = soup.find_all("a", href=True)

dict_of_links = {}
for element in link_elements:
    if element.string:
        dict_of_links[element.string] = element['href']

print(dict_of_links)
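Depending on the page, some href values may be relative (such as /guides) or just a fragment (such as #top) rather than full URLs. If you need absolute URLs, one option is urljoin from Python’s standard library. The sketch below is an optional follow-up, not part of the script above: the dictionary contents are made-up sample values, and absolute_links is a hypothetical name.

```python
from urllib.parse import urljoin

# The page the links were scraped from.
base_url = "https://proxyway.com/"

# Hypothetical scraped values, standing in for the real dict_of_links.
dict_of_links = {
    "Guides": "/guides",
    "Top": "#top",
    "External": "https://example.com/page",
}

# urljoin resolves relative hrefs against base_url and
# leaves hrefs that are already absolute unchanged.
absolute_links = {
    text: urljoin(base_url, href) for text, href in dict_of_links.items()
}

print(absolute_links)
```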