How to scrape multiple pages using Beautiful Soup

Important: this tutorial uses a real-life example, so you’ll need the requests and Beautiful Soup libraries installed.

Step 1. Start by importing the BeautifulSoup class from the bs4 package.

from bs4 import BeautifulSoup

Step 2. Then, import the requests library.

import requests

Step 3. Get the source code of your target landing page. We will be using our Guides page in this example.

r = requests.get("https://proxyway.com/guides/")

For any other site, the same call looks like this, with your own URL in place of the placeholder:

r = requests.get("Your URL")

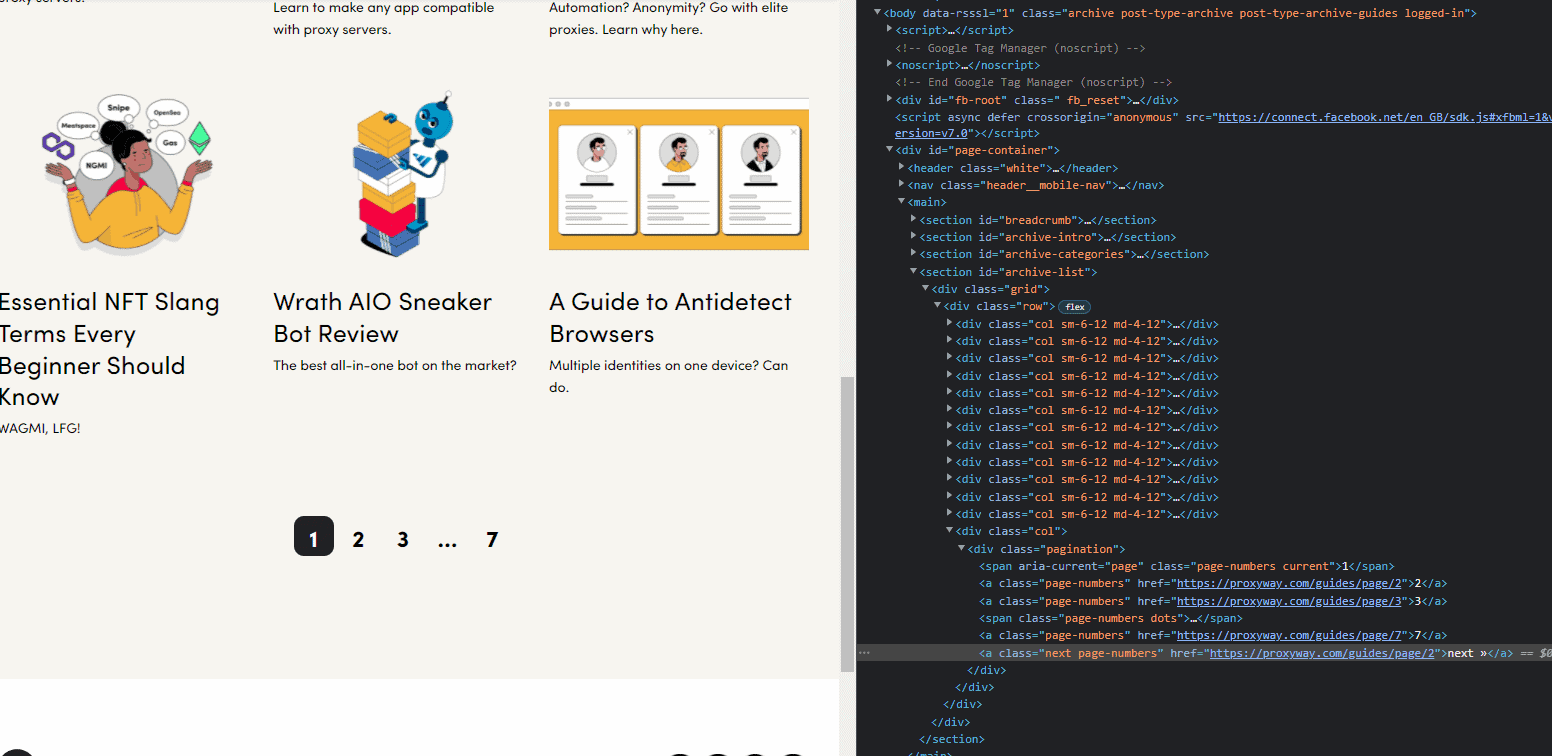
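A request can fail or hang, so it can help to check the response before parsing it. The sketch below is one possible way to do that. The helper name fetch_html, the 10-second timeout, and the optional session argument are assumptions made for illustration and are not part of the original script.

```python
import requests


def fetch_html(url, session=None, timeout=10):
    """Return the raw HTML of `url`, raising an error if the request fails.

    `fetch_html` is a hypothetical helper; the timeout value is an assumption.
    """
    # Use the given session if there is one, otherwise the requests module itself.
    client = session if session is not None else requests
    response = client.get(url, timeout=timeout)
    # raise_for_status() raises requests.HTTPError for 4xx and 5xx responses.
    response.raise_for_status()
    return response.content
```

You could then pass the result of fetch_html("https://proxyway.com/guides/") to BeautifulSoup in place of r.content.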

Step 4. Find the link to the next page by locating the a tag with the class next. Then read the href attribute from this element and send a new request to that URL.
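To make this step concrete, here is a minimal sketch that runs on a made-up HTML snippet rather than the live page. The function name find_next_url and the sample markup are assumptions. It also uses urljoin from the standard library, which is not in the original script, to cover the case where the href is a relative path.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup


def find_next_url(html, current_url):
    """Return the absolute URL of the next page, or None if there is none."""
    soup = BeautifulSoup(html, "html.parser")
    next_link = soup.find("a", class_="next")
    if next_link is None:
        return None
    href = next_link.get("href")
    if not href:
        return None
    # urljoin leaves absolute URLs unchanged and resolves relative ones
    # against the page they were found on.
    return urljoin(current_url, href)


# Made-up markup for illustration only.
sample_html = (
    '<nav><a class="prev" href="/guides/">Prev</a>'
    '<a class="next page-numbers" href="/guides/page/2/">Next</a></nav>'
)
print(find_next_url(sample_html, "https://proxyway.com/guides/"))
# prints: https://proxyway.com/guides/page/2/
```

If the page has no a tag with the class next, the function returns None, which is the signal to stop.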

Step 5. You can put the entire code into a single function:

def scrape_page(url):
    print("URL: " + url)
    r = requests.get(url)
    soup = BeautifulSoup(r.content, "html.parser")
    get_data(soup)
    next_page_link = soup.find("a", class_="next")
    if next_page_link is not None:
        href = next_page_link.get("href")
        scrape_page(href)
    else:
        print("Done")

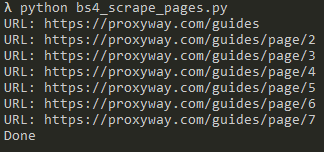

This is the output of the script. It prints the URL of each page the script visits.
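Because scrape_page calls itself once per page, a site with a very long chain of pages could hit Python’s recursion limit (1000 frames by default). A loop avoids that. The following is only a sketch of that alternative: the names scrape_all, default_fetch, and max_pages, the 10-second timeout, and the page cap of 50 are all assumptions, and fetch can be any callable that returns the HTML for a URL.

```python
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup


def default_fetch(url):
    """Download a page with requests (the 10-second timeout is an assumption)."""
    return requests.get(url, timeout=10).content


def scrape_all(start_url, fetch=default_fetch, max_pages=50):
    """Follow "next" links in a loop and return the list of visited URLs."""
    visited = []
    url = start_url
    # Stop when there is no next link, a URL repeats, or the page cap is reached.
    while url is not None and url not in visited and len(visited) < max_pages:
        visited.append(url)
        soup = BeautifulSoup(fetch(url), "html.parser")
        # Page-specific scraping (the get_data step) would go here.
        next_link = soup.find("a", class_="next")
        href = next_link.get("href") if next_link is not None else None
        # Resolve relative links against the current page.
        url = urljoin(url, href) if href else None
    return visited
```

Calling scrape_all("https://proxyway.com/guides/") would visit the same pages as the recursive version, with the visited list doubling as a guard against pagination that links back to itself.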

Let’s look at the code step-by-step:

1. Performing a request to get the page.

r = requests.get(url)

2. Parsing the page and turning it into a BeautifulSoup object.

soup = BeautifulSoup(r.content, "html.parser")

3. Passing the soup object into a different function where you could scrape page data before moving on to the next page.

get_data(soup)

4. Finding the next link element.

next_page_link = soup.find("a", class_="next")

5. If such an element exists, then there is another page you can scrape; if not, you’re done.

if next_page_link is not None:

6. Getting the href attribute from the link element. This is the URL of the next page you’re scraping.

href = next_page_link.get("href")

7. Calling the same function again and passing it the new URL to scrape the next page.

scrape_page(href)
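The get_data call in point 3 is where the page-specific extraction happens. As an illustration only, the sketch below collects the text of every h2 heading. Which tags actually hold the content you want is an assumption here, so inspect the markup of your target page and adjust the selector.

```python
from bs4 import BeautifulSoup


def get_data(soup):
    """Return the text of every <h2> heading on the page.

    Choosing <h2> is an assumption for illustration; adjust the selector
    to the markup of the page you are scraping.
    """
    return [heading.get_text(strip=True) for heading in soup.find_all("h2")]


# Made-up markup for illustration only.
sample = BeautifulSoup(
    "<h2> First guide </h2><p>intro</p><h2>Second guide</h2>", "html.parser"
)
print(get_data(sample))
# prints: ['First guide', 'Second guide']
```

Note that scrape_page ignores the return value of get_data, so to keep the results you would need to print or store them inside get_data, or change scrape_page to collect what it returns.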

Results:

Congratulations, you’ve scraped multiple pages using Beautiful Soup. Here’s the full script:

from bs4 import BeautifulSoup
import requests

start_url = "https://proxyway.com/guides"


def scrape_page(url):
    print("URL: " + url)
    r = requests.get(url)
    soup = BeautifulSoup(r.content, "html.parser")
    get_data(soup)
    next_page_link = soup.find("a", class_="next")
    if next_page_link is not None:
        href = next_page_link.get("href")
        scrape_page(href)
    else:
        print("Done")


def get_data(content):
    # we could do some scraping of web content here
    pass


def main():
    scrape_page(start_url)


if __name__ == "__main__":
    main()