Web Scraping with Python’s lxml: A Tutorial for Beginners

A step-by-step guide to web scraping with Python’s lxml.

Python has many great libraries used in web scraping, and lxml is one of them. In this guide, you’ll learn why you should choose Python’s lxml library for web scraping, how to prepare for your project, and how to build a robust lxml scraper with a real-life example. You’ll also find some general tips to help you get more successful requests.

What is Web Scraping with lxml in Python?

Web scraping with Python’s lxml is the process of getting and structuring data from the HTML or XML code you’ve downloaded. In simple words, the tool selects the necessary data points and extracts them in a readable format.

Python’s lxml isn’t a complete scraping tool on its own, so you need other libraries to build a scraper. To make a request and download a page’s HTML code, you’ll need an HTTP client like Requests. Once the HTML is downloaded, lxml parses it into a tree of elements and attributes that you can query to structure the data.

So, in essence, web scraping with lxml in Python means parsing the data after an HTTP client has fetched it.
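Here’s a minimal sketch of the parsing half on its own. The HTML is a hard-coded string standing in for a page that an HTTP client has already downloaded; lxml turns it into a tree and pulls data out with XPath.

```python
from lxml import etree

# A hard-coded string standing in for HTML that an HTTP client already fetched
html = """
<html>
  <body>
    <h1>Example page</h1>
    <a href="/first">First link</a>
    <a href="/second">Second link</a>
  </body>
</html>
"""

# Parse the markup into an element tree
tree = etree.HTML(html)

# Query the tree with XPath: the heading text and every link's href
heading = tree.xpath("//h1/text()")[0]
links = tree.xpath("//a/@href")

print(heading)  # Example page
print(links)    # ['/first', '/second']
```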

Why Choose Python’s lxml for Web Scraping?

There are several reasons for choosing lxml:

- Extensible. lxml wraps two C libraries, libxml2 and libxslt, and combines their speed and XML feature completeness with the simplicity of a native Python API. You can also extend it, for example with custom XPath extension functions written in Python.

- Validates XML structure. The library supports three schema languages (DTD, Relax NG, and XML Schema) for validating a document’s structure. It also fully implements XPath 1.0, a query language for selecting elements in XML and HTML documents, which makes it especially useful for web scraping.

- Traverses documents. lxml can navigate through XML and HTML structures, moving between parents, children, siblings, and other elements. You can do this with its element API or with XPath, which parsers like Beautiful Soup don’t support.

- Light on resources. lxml is fast and relatively memory-efficient, which makes it suitable for parsing large amounts of data.
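Here’s a small sketch of that navigation on a made-up list: the same siblings are reached first through lxml’s element methods (getparent(), getnext(), getprevious(), iterchildren()) and then through XPath axes.

```python
from lxml import etree

html = """
<ul>
  <li id="a">Alpha</li>
  <li id="b">Beta</li>
  <li id="c">Gamma</li>
</ul>
"""
tree = etree.HTML(html)

# Start from the middle item
beta = tree.xpath("//li[@id='b']")[0]

# Element API: parent, next sibling, previous sibling
parent_tag = beta.getparent().tag    # 'ul'
next_text = beta.getnext().text      # 'Gamma'
prev_text = beta.getprevious().text  # 'Alpha'

# All <li> children of the parent, in document order
child_ids = [li.get("id") for li in beta.getparent().iterchildren("li")]

# The same navigation expressed with XPath axes
following = beta.xpath("following-sibling::li/text()")  # ['Gamma']
preceding = beta.xpath("preceding-sibling::li/text()")  # ['Alpha']
```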

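But lxml isn’t suitable for every parsing task. Its HTML parser recovers from many common mistakes, but if your target page has badly broken HTML, lxml may build a tree that doesn’t match what you see in the browser. For such cases, lxml includes a fallback to Beautiful Soup through its lxml.html.soupparser module.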
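The sketch below shows both behaviors on a made-up snippet with unclosed tags. lxml’s own HTML parser repairs it, and a hypothetical helper, parse_with_soup_fallback(), hands the markup to Beautiful Soup through lxml.html.soupparser when the beautifulsoup4 package is installed (it returns None otherwise).

```python
from lxml import etree

# Broken markup: the <li> and <p> tags are never closed
broken_html = "<ul><li>One<li>Two</ul><p>Unclosed paragraph"

# lxml's HTML parser (libxml2) recovers from many such errors on its own
tree = etree.HTML(broken_html)
items = tree.xpath("//li/text()")
paragraph = tree.xpath("//p/text()")


def parse_with_soup_fallback(markup):
    """Parse with Beautiful Soup via lxml.html.soupparser, if bs4 is installed."""
    try:
        from lxml.html import soupparser  # needs the beautifulsoup4 package
    except ImportError:
        return None
    # Returns an ordinary lxml element, so xpath() works on the result
    return soupparser.fromstring(markup)
```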

Steps to Build an lxml Parser in Python

Step 1: Choose Appropriate Tools

To build a fully functioning scraper with lxml, you’ll need other Python dependencies.

First, choose an HTTP client that will fetch the data. You can use Python libraries like Requests, HTTPX, or aiohttp. The one you choose depends on your preferences and the web scraping project you have in mind. If you’re not familiar with any of them, go with Requests: it’s the oldest of the three and very customizable.

If you decide to take your skills to the next level by scraping dynamic websites, such as some e-commerce or social media websites, an HTTP client and a parser won’t be enough. You need a tool that can render JavaScript. In this case, use a headless browser library like Selenium, which loads the content that JavaScript and asynchronous (AJAX) requests add after the initial HTML arrives.
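As a sketch of how the two fit together, a headless browser renders the page and lxml parses the result as usual. This assumes Selenium 4 and a local Chrome installation; fetch_rendered_html() and parse_html() are hypothetical helper names. Keep in mind that page_source returns the DOM at the moment you read it, so content that loads later may still need an explicit wait (for example, Selenium’s WebDriverWait).

```python
from lxml import etree


def fetch_rendered_html(url):
    """Load a page in headless Chrome and return the HTML after JavaScript ran.

    Assumes Selenium 4 and a local Chrome install; Selenium is imported inside
    the function so the rest of this file works without it.
    """
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument("--headless=new")  # run Chrome without opening a window
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return driver.page_source  # the DOM serialized after rendering
    finally:
        driver.quit()  # always close the browser, even on errors


def parse_html(html):
    """Hand the rendered HTML to lxml, exactly as with a Requests response."""
    return etree.HTML(html)
```

Usage would look like tree = parse_html(fetch_rendered_html("https://example.com")), after which the same XPath queries apply.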

Step 2: Identify Your Target Web Page

Now that you have your tools ready, identify your target web page. For example, you may want to scrape Google Search results to monitor your own and your competitors’ Google rankings, or gather product information from an e-commerce store such as Amazon.

There are many projects for you to try – we’ve even prepared a separate guide on some web scraping ideas. Otherwise, you can practice your skills on dummy websites – they provide all the necessary elements to get the hang of the web scraping process.

Step 3: Review Web Scraping Guidelines

If you aren’t prepared for common web scraping challenges, the process can turn into a real can of worms. From CAPTCHA prompts to IP address bans, these roadblocks can hinder your project’s success. So, if you’re serious about your project, I’d recommend getting a rotating proxy server that routes your requests through different IP addresses and locations as needed.
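Here’s a minimal sketch of rotating through a proxy pool with Requests. The proxy URLs, credentials, and the helper names next_proxies() and fetch_via_proxy() are hypothetical placeholders. Many providers instead give you a single gateway address that rotates IPs for you, in which case you’d pass that one URL in proxies.

```python
from itertools import cycle

import requests

# Hypothetical proxy endpoints: replace them with the ones your provider gives you
PROXY_POOL = cycle([
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
])


def next_proxies():
    """Return a Requests-style proxies mapping that uses the next proxy in the pool."""
    proxy = next(PROXY_POOL)
    return {"http": proxy, "https": proxy}


def fetch_via_proxy(url, headers=None, timeout=10):
    """Send one GET request through the next proxy in the rotation."""
    return requests.get(url, headers=headers, proxies=next_proxies(), timeout=timeout)
```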

No matter the target (except web scraping sandboxes), you should know that most websites publish a robots.txt file to manage bot traffic. It sets rules on which pages bots may and may not crawl and, sometimes, how often they may do so. Responsible web scraping means following these and other guidelines, so take time to familiarize yourself with web scraping best practices.
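Python’s standard library can check robots.txt rules for you. This sketch feeds urllib.robotparser a sample robots.txt (made up for illustration) instead of downloading one, then asks whether a user agent may fetch two URLs and how long it should wait between requests. Note that Crawl-delay is a widely used but non-standard directive, so many sites don’t set it.

```python
from urllib.robotparser import RobotFileParser

# Sample rules; for a real site, call set_url(".../robots.txt") and read() instead
robots_txt = """
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

user_agent = "my-scraper"  # hypothetical name for your bot
allowed_page = parser.can_fetch(user_agent, "https://example.com/chart/")
blocked_page = parser.can_fetch(user_agent, "https://example.com/private/data")
delay = parser.crawl_delay(user_agent)  # seconds between requests, or None if unset
```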

Additionally, requests made from a browser include headers with information about your device and browser. Why should you care? A website may refuse to serve your scraper if the user-agent string (an important header) is missing or malformed. HTTP clients send their own default user-agent (Requests, for example, identifies itself as python-requests), so you need to change it when scraping real targets. But don’t worry: I’ll show you how later on.
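To see the difference, the sketch below builds (but doesn’t send) one request with Requests’ default headers and one with a browser user-agent. It uses Session.prepare_request, so no network access is needed; the Chrome 116 string is the one used later in this tutorial.

```python
import requests

url = "https://www.imdb.com/chart/moviemeter/"

# Requests identifies itself as python-requests/<version> unless told otherwise
session = requests.Session()
default_request = session.prepare_request(requests.Request("GET", url))
default_ua = default_request.headers["User-Agent"]

# Override the User-Agent for every request made through this session
session.headers.update({
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36"
    )
})
custom_request = session.prepare_request(requests.Request("GET", url))
custom_ua = custom_request.headers["User-Agent"]

print(default_ua)  # python-requests/<version>
print(custom_ua)
```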

Web Scraping with Python’s lxml: A Step-By-Step Tutorial

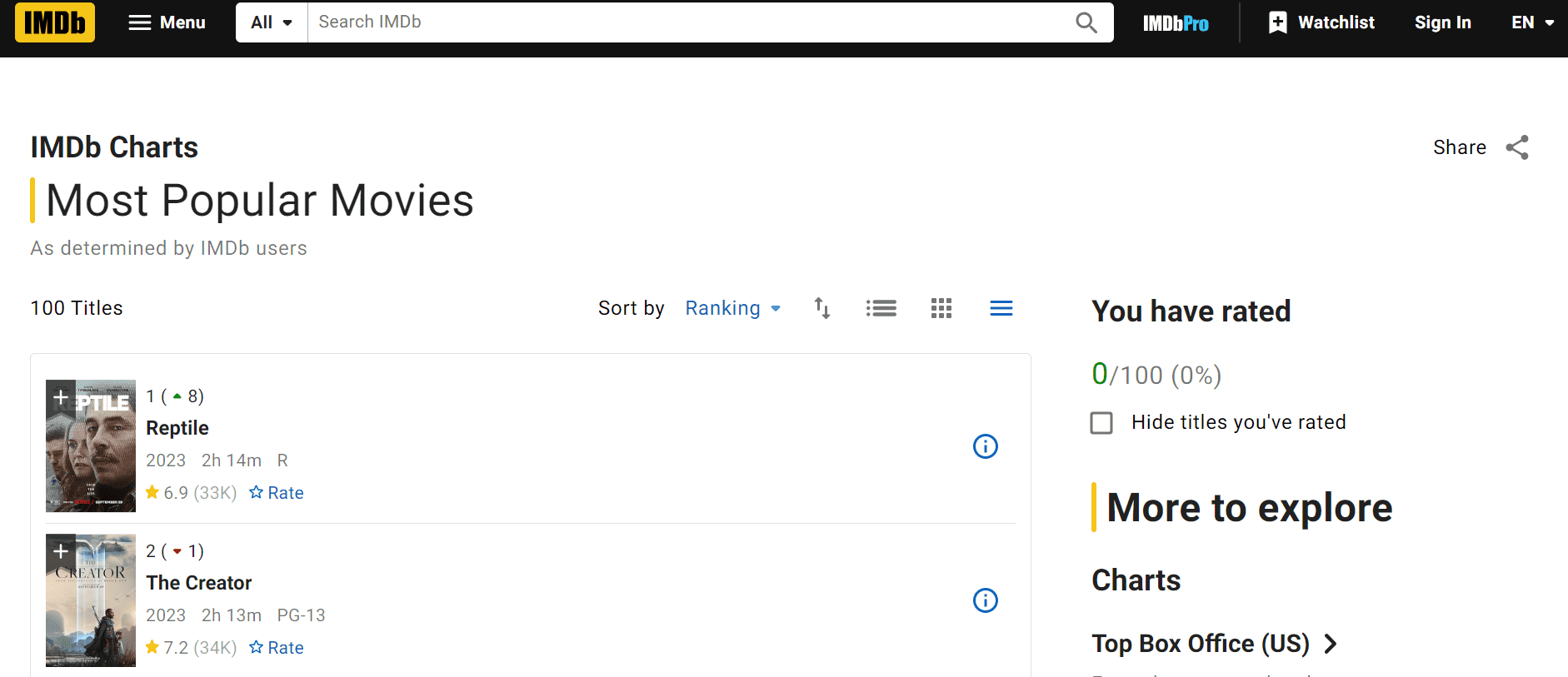

In this step-by-step tutorial, we’re going to scrape IMDb’s Most Popular Movies chart, along with the details listed for each movie. Since lxml only parses HTML, we’ll also use Requests to download the page.

The platform provides more fun lists to scrape – but remember to check the robots.txt and respect other web scraping guidelines!

Prerequisites

- Python 3. Check if the latest version is installed on your device. Otherwise, download it from Python.org.

- Requests. Install it by running pip install requests in your operating system’s terminal.

- lxml. Install it by running pip install lxml.

- Code editor. You can choose any code editor, for example, Notepad++, Visual Studio Code, or your operating system’s text editor.

Importing the Libraries

Step 1. First, let’s import the necessary libraries.

```python
import requests
from lxml import etree
```

Step 2. Then, define the URL you’re going to scrape.

```python
url = "https://www.imdb.com/chart/moviemeter/"
```

Adding the Fingerprint (Headers)

Next, define request headers that mimic Chrome 116 on Windows, so your request looks like it comes from a regular browser.

```python
headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    # "br" (Brotli) is left out: Requests can only decode it if the brotli package is installed
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.google.com/",
    "Sec-Ch-Ua": "\"Chromium\";v=\"116\", \"Not)A;Brand\";v=\"24\", \"Google Chrome\";v=\"116\"",
    "Sec-Ch-Ua-Mobile": "?0",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "Sec-Fetch-Dest": "document",
    "Sec-Fetch-Mode": "navigate",
    "Sec-Fetch-Site": "cross-site",
    "Sec-Fetch-User": "?1",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36",
}
```

Getting the HTML Content

Step 1. Define the main web scraping function.

```python
def request():
```

Step 2. Then, make a request to IMDb and send your fingerprint along with it.

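```python
# timeout stops the script from hanging forever if the server never answers
response = requests.get(url, headers=headers, timeout=10)
```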

Step 3. Check the status code. Look for 200 – it shows a successful request.

```python
print(response.status_code)
```

Step 4. After receiving the response, parse the HTML content with lxml’s etree.HTML() parser.

```python
tree = etree.HTML(response.text)
```
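Printing the status code works for a quick check, but a scraper usually shouldn’t go on to parse an error page. Here’s a sketch of a hypothetical fetch_tree() helper that raises on 4xx/5xx responses via raise_for_status() and passes the raw bytes to lxml, letting it pick up the encoding the page declares. The optional session parameter lets you reuse a requests.Session, or substitute a stub when testing.

```python
import requests
from lxml import etree


def fetch_tree(url, headers, session=None, timeout=10):
    """Download a page and parse it, failing loudly on HTTP errors.

    A sketch: `session` may be a requests.Session or any object with a
    compatible .get() method; plain requests.get is used when it is omitted.
    """
    getter = session if session is not None else requests
    response = getter.get(url, headers=headers, timeout=timeout)
    # Raise requests.HTTPError for 4xx/5xx instead of parsing an error page
    response.raise_for_status()
    # Bytes input lets lxml read the encoding declared in the page itself
    return etree.HTML(response.content)
```

Inside request(), tree = fetch_tree(url, headers) could replace the request, status check, and parsing steps.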

Extracting the Movie Data

Step 1. Create a new list to hold movie information that you’ll scrape.

```python
movies_list = []
```

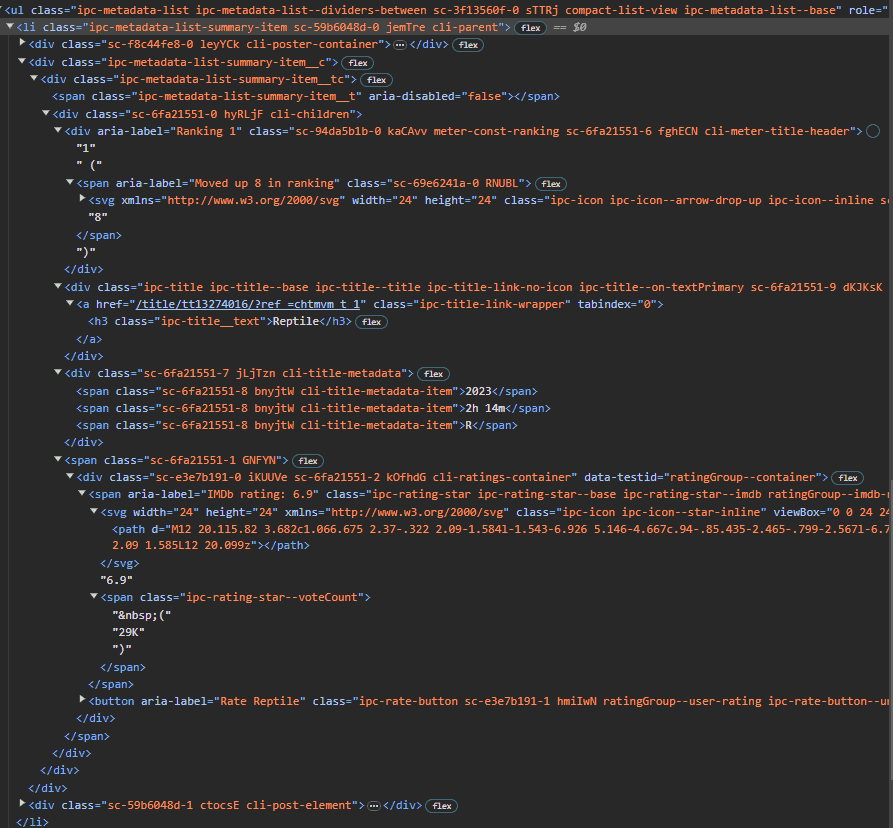

Step 2. To find the elements you want to scrape, right-click a movie in the chart and select Inspect. Find the <ul> element that holds the chart, then select all of the list items (<li>) within it.

```python
list_elem = tree.xpath(".//ul[contains(@class,'ipc-metadata-list')]/li")
```

Let’s have a closer look at the code:

- .// – searches all descendants of the current element, at any depth.

- ul[contains(@class,'ipc-metadata-list')] – an unordered list (<ul>) whose class attribute contains ipc-metadata-list, possibly among other classes.

- /li – the list items (<li>) that are direct children of that <ul>. Each one holds a single movie.

- list_elem – a Python list of all the matched <li> elements.
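Note that contains(@class, ...) is a substring test, not a class test, so it can match more than you expect, while an exact comparison such as @class='ipc-title__text' only works when the element has that single class. The sketch uses simplified, made-up markup with class names borrowed from this tutorial to compare contains() with the common whole-token idiom.

```python
from lxml import etree

html = """
<div>
  <span class="ipc-rating-star ipc-rating-star--imdb">7.5</span>
  <span class="ipc-rating-star--voteCount">1.2K</span>
</div>
"""
tree = etree.HTML(html)

# Substring match: 'ipc-rating-star' is also a prefix of 'ipc-rating-star--voteCount'
substring_hits = tree.xpath("//span[contains(@class, 'ipc-rating-star')]/text()")

# Whole-token match: pad the normalized class list with spaces, then look for ' name '
token_hits = tree.xpath(
    "//span[contains(concat(' ', normalize-space(@class), ' '), ' ipc-rating-star ')]/text()"
)

print(substring_hits)  # ['7.5', '1.2K']
print(token_hits)      # ['7.5']
```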

Step 3. Now, you can iterate through all of the list items.

```python
for list_item in list_elem:
```

Step 4. Create a dictionary (named json in this tutorial) to store each movie’s information.

```python
json = {}
```

Step 5. Now, let’s get each movie’s ranking and title.

1) First, get the movie’s ranking. Look for a <div> element whose class contains meter-const-ranking, select it with XPath, and assign the result to a variable named movie_rank_elem.

```python
movie_rank_elem = list_item.xpath(".//div[contains(@class, 'meter-const-ranking')]")
```

2) movie_rank_elem is a list of lxml elements, so take the first one with [0]. Because that item is an element, you can call its xpath() method to search within it. Get the text inside the element and, since xpath() returns a list (here with a single item), take the first item and assign it to the json dictionary.

```python
json['rank_num'] = movie_rank_elem[0].xpath(".//text()")[0]
```

3) Let’s move on to extracting the title. This time, select an <h3> tag whose class is exactly ipc-title__text and assign its text directly to the title key in your dictionary.

```python
json['title'] = list_item.xpath(".//h3[@class='ipc-title__text']/text()")[0]
```
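Every selector in this step ends with [0], which raises IndexError, and stops the whole loop, if a movie lacks that element. One way to guard against that is a small helper like the hypothetical first_text() below, which returns a default value instead. The one-element HTML snippet is made up for illustration.

```python
from lxml import etree


def first_text(element, xpath, default=None):
    """Return the first result of an XPath query, or `default` if it matches nothing.

    Hypothetical helper: a safer alternative to indexing [0] directly.
    """
    results = element.xpath(xpath)
    return results[0] if results else default


item = etree.HTML("<div><h3 class='ipc-title__text'>Some Movie</h3></div>")

title = first_text(item, ".//h3[@class='ipc-title__text']/text()")
rating = first_text(item, ".//span[contains(@class, 'ipc-rating-star')]/text()")  # no match
```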

Step 6. Now, get the release date, length, and content rating. The process is slightly different because these data points lack specific classes to select them directly. Instead, you need to select all elements with the same tag and class, which gives you a list of one to three items.

```python
movie_metadata_elems = list_item.xpath(".//span[contains(@class, 'cli-title-metadata-item')]")
```

Note: Because movie_metadata_elems is a list of lxml elements, you can access each one by its index.

1) The release date is the first item (index 0), the length is the second (index 1), and the content rating is the third (index 2).

NOTE: Not every movie lists its length or content rating, so check how many items the list contains before accessing them. After accessing each element by its index, use XPath to get its text value and assign it to the dictionary.

```python
json['release_date'] = movie_metadata_elems[0].xpath(".//text()")[0]

if len(movie_metadata_elems) > 1:
    json['length'] = movie_metadata_elems[1].xpath(".//text()")[0]
else:
    json['length'] = None

if len(movie_metadata_elems) > 2:
    json['content_rating'] = movie_metadata_elems[2].xpath(".//text()")[0]
else:
    json['content_rating'] = None  # no content rating listed
```

2) To get each movie’s rating and vote count, use a similar approach: select each element and get the text within it.

NOTE: Not every movie has one or both of these elements. To handle this, add an if statement that checks that the returned list isn’t empty before adding the result to your dictionary.

```python
movie_rating_elem = list_item.xpath(".//span[contains(@class, 'ipc-rating-star')]/text()")
if movie_rating_elem:
    json['rating'] = movie_rating_elem[0]

movie_vote_count_elem = list_item.xpath(".//span[contains(@class, 'ipc-rating-star--voteCount')]/text()")
if movie_vote_count_elem:
    json['vote_count'] = movie_vote_count_elem[1]
```
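Why does the vote count use index [1]? The tutorial doesn’t say, so treat this as an assumption to confirm in your browser’s inspector: if the count is wrapped in empty HTML comments (a pattern server-rendered React pages often produce), text() returns several separate text nodes and the number is the second one. The sketch reproduces that hypothetical markup and shows a sturdier option: joining all the text and stripping the punctuation.

```python
from lxml import etree

# Hypothetical markup: the number sits between two empty HTML comments
html = '<span class="ipc-rating-star--voteCount">&#160;(<!-- -->1.2K<!-- -->)</span>'
span = etree.HTML(html).xpath("//span")[0]

# The comments split the span's text into three separate text nodes
pieces = span.xpath("./text()")
vote_count_by_index = pieces[1]  # what indexing [1] picks out

# Sturdier: join all text nodes, then trim whitespace (including the
# non-breaking space) and the surrounding parentheses
vote_count_joined = "".join(span.xpath(".//text()")).strip().strip("()")
```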

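3) Finally, build the movie’s full URL from the link (href) in the title’s <a> tag. The href is relative to the site root, so prefix it with IMDb’s domain.

```python
json['url'] = "https://www.imdb.com" + list_item.xpath(".//div[contains(@class, 'ipc-title')]/a/@href")[0]
```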
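Prefixing a domain by hand is easy to get wrong: with a root-relative href such as /title/..., a base that ends in a slash produces a double slash. The standard library’s urllib.parse.urljoin resolves links the way a browser does. The title ID below is a made-up placeholder.

```python
from urllib.parse import urljoin

base = "https://www.imdb.com/chart/moviemeter/"

# Root-relative hrefs (starting with "/") resolve against the site root
absolute = urljoin(base, "/title/tt0000001/?ref_=chtmvm_t_1")

# Naive concatenation keeps both slashes
naive = "https://www.imdb.com/" + "/title/tt0000001/"
```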

Step 7. Then, add the json dictionary to the movies_list list and let the script move on to parsing a list item for the next movie.

```python
movies_list.append(json)
```

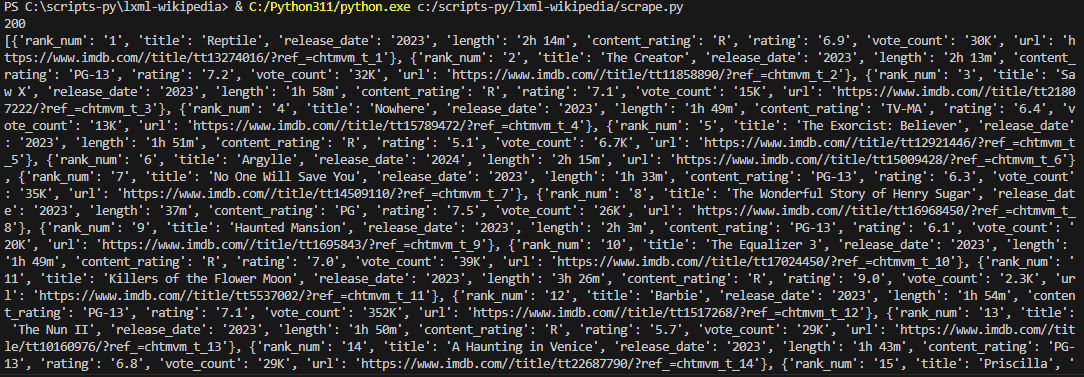

Step 8. Finally, print out the output.

```python
    # Still inside request(), after the loop
    print(movies_list)


if __name__ == "__main__":
    request()
```

Here’s the full code:

```python
import requests
from lxml import etree

url = "https://www.imdb.com/chart/moviemeter/"

headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    # "br" (Brotli) is left out: Requests can only decode it if the brotli package is installed
    "Accept-Encoding": "gzip, deflate",
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://www.google.com/",
    "Sec-Ch-Ua": "\"Chromium\";v=\"116\", \"Not)A;Brand\";v=\"24\", \"Google Chrome\";v=\"116\"",
    "Sec-Ch-Ua-Mobile": "?0",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "Sec-Fetch-Dest": "document",
    "Sec-Fetch-Mode": "navigate",
    "Sec-Fetch-Site": "cross-site",
    "Sec-Fetch-User": "?1",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36",
}


def request():
    # Download the chart page with browser-like headers
    response = requests.get(url, headers=headers, timeout=10)
    print(response.status_code)

    # Parse the HTML into an lxml element tree
    tree = etree.HTML(response.text)

    movies_list = []

    # Each <li> in the chart list holds one movie
    list_elem = tree.xpath(".//ul[contains(@class,'ipc-metadata-list')]/li")

    for list_item in list_elem:
        json = {}

        movie_rank_elem = list_item.xpath(".//div[contains(@class, 'meter-const-ranking')]")
        json['rank_num'] = movie_rank_elem[0].xpath(".//text()")[0]

        json['title'] = list_item.xpath(".//h3[@class='ipc-title__text']/text()")[0]

        # Release date, length, and content rating share one class; later items may be missing
        movie_metadata_elems = list_item.xpath(".//span[contains(@class, 'cli-title-metadata-item')]")
        json['release_date'] = movie_metadata_elems[0].xpath(".//text()")[0]

        if len(movie_metadata_elems) > 1:
            json['length'] = movie_metadata_elems[1].xpath(".//text()")[0]
        else:
            json['length'] = None

        if len(movie_metadata_elems) > 2:
            json['content_rating'] = movie_metadata_elems[2].xpath(".//text()")[0]
        else:
            json['content_rating'] = None

        # Rating and vote count are optional, so store them only when found
        movie_rating_elem = list_item.xpath(".//span[contains(@class, 'ipc-rating-star')]/text()")
        if movie_rating_elem:
            json['rating'] = movie_rating_elem[0]

        movie_vote_count_elem = list_item.xpath(".//span[contains(@class, 'ipc-rating-star--voteCount')]/text()")
        if movie_vote_count_elem:
            json['vote_count'] = movie_vote_count_elem[1]

        # The href is relative to the site root, so prefix it with the domain
        json['url'] = "https://www.imdb.com" + list_item.xpath(".//div[contains(@class, 'ipc-title')]/a/@href")[0]

        movies_list.append(json)

    print(movies_list)


if __name__ == "__main__":
    request()
```
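Printing is fine for a first run, but you’ll likely want to keep the results. Here’s a minimal sketch of a hypothetical save_movies() helper that writes the list of dictionaries to a JSON file. It assumes you change request() to end with return movies_list. Call it outside request(), for example as save_movies(request()) in the __main__ block, because inside request() the local variable named json hides the json module.

```python
import json


def save_movies(movies, path="movies.json"):
    """Write the scraped list of movie dictionaries to a UTF-8 JSON file."""
    with open(path, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps non-English titles readable in the file
        json.dump(movies, f, ensure_ascii=False, indent=2)
```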