The Main Web Scraping Techniques: A Practical Guide

Explore various web scraping techniques to improve your scraper.

Web scraping isn’t easy since each website’s structure requires different methods to gather data successfully. Knowing which web scraping technique to use can help you avoid making unnecessary requests, find data nested in JavaScript elements, and extract only the specific elements you wish to scrape.

Read on to learn the main data-gathering techniques and how each one can improve your web scraper.

Choosing The Right Tools for Your Project

Programming-minded users often build their own scraper with a crawling framework like Scrapy, a browser automation tool like Selenium, or a parsing library like BeautifulSoup. You’ll find relevant libraries in many programming languages, but Python and Node.js generally have the most mature ecosystems.

Alternatively, you can offload some work by using a web scraping API. It’s a less complicated approach that lets you send requests to the API and simply store the output. Providers like Oxylabs, Decodo (formerly Smartproxy), or Bright Data offer commercial APIs to users.

If you’ll be running your own scraper at any significant scale, consider routing its traffic through a proxy server to hide your IP address. This reduces the chance of running into IP blocks, CAPTCHAs, and other roadblocks. If you’re going after major e-commerce shops or other well-protected websites, stick to residential proxies. Otherwise, datacenter proxies from cloud service providers will usually suffice.
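As an illustration, here is a minimal sketch of routing a scraper’s traffic through a proxy with Python’s standard-library `urllib.request`, rather than any provider’s SDK. The proxy address and credentials are hypothetical placeholders; substitute the ones your provider issues.

```python
import urllib.request

# Hypothetical proxy endpoint: replace the host, port, and credentials
# with the values from your proxy provider.
PROXY_URL = "http://user:password@proxy.example.com:8080"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends both HTTP and HTTPS requests through proxy_url.

    urllib reads the user:password part of the URL and adds a
    Proxy-Authorization header; HTTPS requests are tunneled with CONNECT.
    """
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    # Passing our own ProxyHandler replaces the default one, which would
    # otherwise pick up proxies from environment variables.
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)
```

To send a request through the proxy, call `opener.open("https://example.com", timeout=10)`; the target site then sees the proxy’s IP address instead of yours. If you use Scrapy or Requests instead, configure the proxy through their own settings.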

Popular Web Scraping Techniques

1. Manual Web Scraping

The most basic technique of data gathering is manual scraping. It involves copying content by hand and pasting it into your dataset. Even though it’s the most straightforward way to collect information, it’s repetitive and time-consuming.

Websites focus their anti-scraping measures on large-scale automated scripts, so the one advantage of copying information by hand is that you won’t run into the restrictions the target website imposes on bots. If you need large amounts of data, however, consider automated scraping.

2. HTML Parsing

3. JSON for Linking Data

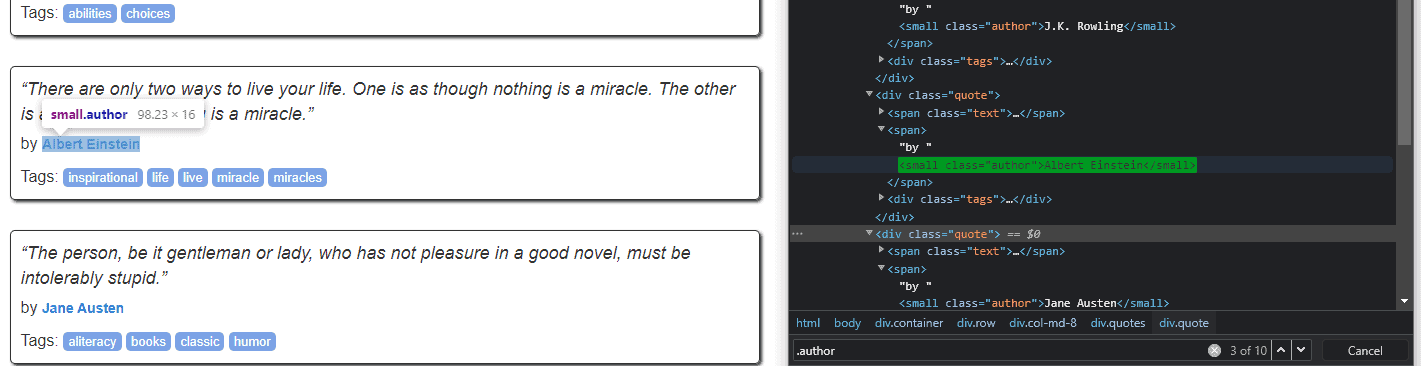

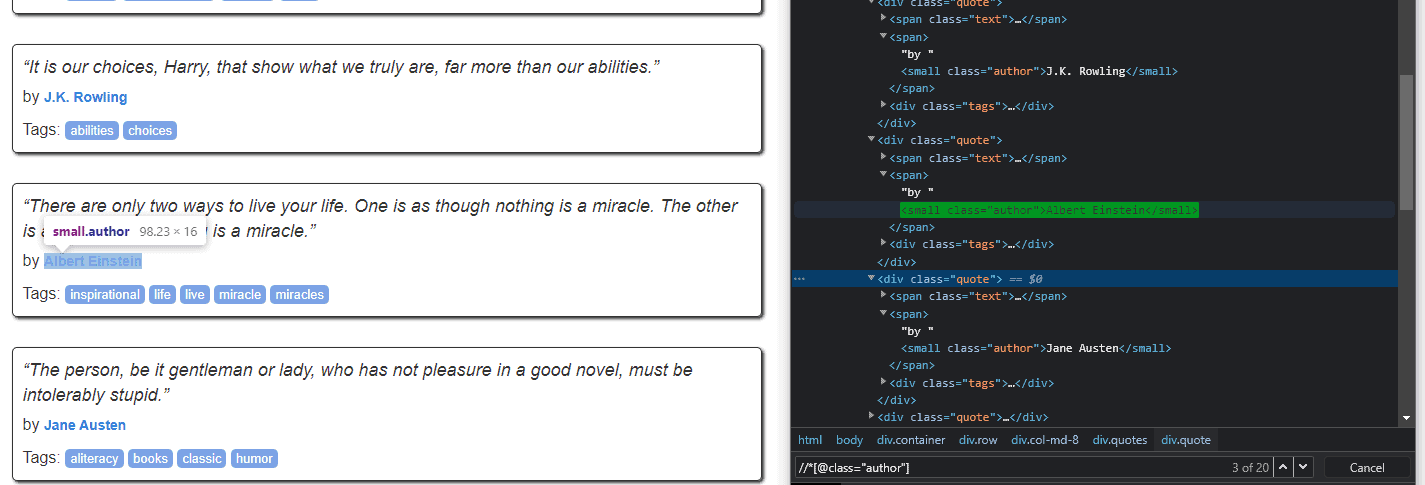

Web pages consist of HTML tags that tell a browser how to display the content they contain. Search engines parse this HTML to identify a page’s logical sections, but their understanding is limited: without additional structured-data markup, Google, Bing, Yahoo, and other search engines may not interpret or display your content correctly.

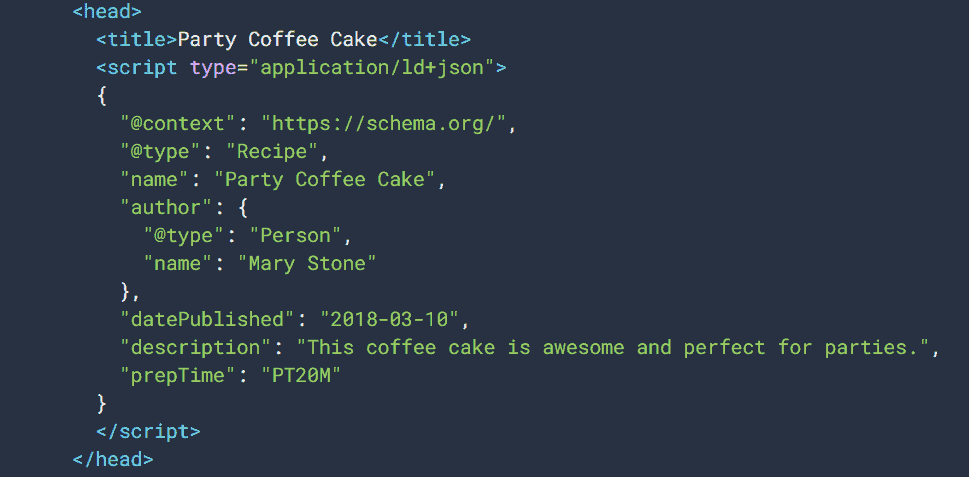

JavaScript Object Notation for Linked Data (JSON-LD) is a format that annotates the elements embedded in a page and structures that data for search engines. Websites use it so that search engines can return more accurate results and represent their pages better in SERPs. You’ll find JSON-LD in a <script type="application/ld+json"> element, most often in the page’s <head> section. Because the data is already structured, your script can parse it directly instead of picking values out of the surrounding HTML.
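A minimal sketch of that extraction, using Python’s built-in `html.parser` and `json` modules instead of BeautifulSoup so it runs without extra dependencies. The sample product markup is made up for illustration; real pages may contain several JSON-LD blocks with different `@type` values.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the parsed contents of every <script type="application/ld+json"> element."""

    def __init__(self):
        super().__init__()
        self._in_json_ld = False
        self._buffer = []
        self.items = []

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_json_ld = True
            self._buffer = []

    def handle_data(self, data):
        # Script contents arrive as raw text, so the JSON is passed through intact.
        if self._in_json_ld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_json_ld:
            self._in_json_ld = False
            raw = "".join(self._buffer).strip()
            if raw:
                try:
                    self.items.append(json.loads(raw))
                except json.JSONDecodeError:
                    pass  # skip a malformed block instead of failing the whole page


def extract_json_ld(html: str) -> list:
    """Return a list with one parsed object per JSON-LD block in the page."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    return parser.items


# Made-up sample page for illustration.
SAMPLE_PAGE = """<html><head>
<script type="application/ld+json">
{"@context": "https://schema.org", "@type": "Product", "name": "Example Widget",
 "offers": {"@type": "Offer", "price": "19.99", "priceCurrency": "USD"}}
</script>
</head><body><p>Example Widget</p></body></html>"""

if __name__ == "__main__":
    product = extract_json_ld(SAMPLE_PAGE)[0]
    print(product["name"], product["offers"]["price"])  # prints: Example Widget 19.99
```

Parsing the JSON-LD block this way tends to be more robust than targeting CSS classes, which sites change more often than their structured data.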

4. XHR Requests

Other Useful Methods to Improve Your Script

Cache HTTP Requests

When scraping multiple pages, you’ll need a scraper with crawling logic that can work through thousands of URLs. If your crawler revisits pages it has already fetched, or needs to return to the same pages for more data, cache its HTTP requests. Caching stores each response, for example in a database, so you can reuse it for subsequent requests instead of downloading it again.

This speeds up your scraper, because repeated requests are answered from local storage rather than over the network. It also reduces the load on the target server, which no longer has to receive, route, and process the same request again, and you avoid re-downloading the same resource each time.
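A minimal sketch of such a cache, assuming SQLite as the database and a URL-keyed table named `responses` (both are illustrative choices, not a requirement). The `fetch` parameter is a hypothetical hook so you can plug in your own downloader, such as a proxied opener.

```python
import sqlite3
import urllib.request
from typing import Callable


def fetch_from_network(url: str) -> bytes:
    """Download a page body with the standard library."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read()


class CachedFetcher:
    """Store each response body in SQLite, keyed by URL, and reuse it on repeat requests."""

    def __init__(self, db_path: str = "http_cache.sqlite3",
                 fetch: Callable[[str], bytes] = fetch_from_network):
        self._conn = sqlite3.connect(db_path)
        self._conn.execute(
            "CREATE TABLE IF NOT EXISTS responses (url TEXT PRIMARY KEY, body BLOB)"
        )
        self._fetch = fetch

    def get(self, url: str) -> bytes:
        row = self._conn.execute(
            "SELECT body FROM responses WHERE url = ?", (url,)
        ).fetchone()
        if row is not None:
            return row[0]  # cache hit: no request reaches the target server
        body = self._fetch(url)  # cache miss: download once
        with self._conn:  # commits the insert when the block exits
            self._conn.execute(
                "INSERT INTO responses (url, body) VALUES (?, ?)", (url, body)
            )
        return body

    def close(self) -> None:
        self._conn.close()
```

This sketch never expires entries, which suits one-off crawls; for recurring jobs you would add a timestamp column and refetch stale pages. Scrapy users can instead enable its built-in HTTP cache with the `HTTPCACHE_ENABLED` setting.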

Canonical URLs

Some websites serve the same content under several URLs. For example, a site may offer desktop and mobile versions of a page whose URLs differ slightly, so your scraping bot treats them as separate pages and collects the same data twice. A canonical URL, declared with an HTML tag, marks the main version among duplicates or near-duplicates.

Canonical tags (<link rel="canonical" href="...">) tell developers and crawlers which of several URLs with the same or similar content is the main one, so you can use them to avoid scraping duplicates. Web scraping frameworks like Scrapy filter out repeated requests to the same URL by default, but that check compares URLs rather than content, so pages published under different URLs still need a canonical check. You can find canonical tags within a web page’s <head> section.
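A minimal sketch of canonical-based deduplication with Python’s built-in `html.parser`. The helper names `canonical_url` and `deduplicate` are hypothetical, and the sketch assumes you already hold each page’s HTML in a dictionary keyed by the URL it was fetched from.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Record the href of the first <link rel="canonical"> element."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        # rel may hold several space-separated tokens, e.g. rel="canonical nofollow".
        rel_tokens = (attributes.get("rel") or "").lower().split()
        href = attributes.get("href")
        if "canonical" in rel_tokens and href:
            self.canonical = href


def canonical_url(html: str, page_url: str) -> str:
    """Return the page's canonical URL, falling back to the URL it was fetched from."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if finder.canonical is None:
        return page_url
    return urljoin(page_url, finder.canonical)  # resolves relative hrefs


def deduplicate(pages: dict) -> dict:
    """Keep one page per canonical URL; pages maps fetched URL -> HTML."""
    unique = {}
    for url, html in pages.items():
        unique.setdefault(canonical_url(html, url), html)
    return unique
```

Keying results by canonical URL means the desktop and mobile copies of a page collapse into a single entry, while pages without a canonical tag are kept under their own URL.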