How to find all URLs using Selenium

A step-by-step guide on how to find all URLs using Selenium.

Important: we’ll use a real-life example in this tutorial, so you’ll need the Selenium library and a browser driver installed.

from selenium.webdriver.common.by import By
link_elements = driver.find_elements(By.TAG_NAME, "a")
for link in link_elements:
    print(link.get_attribute("href"))

NOTE: In the code above, we’re getting all elements with the <a> tag and iterating through them to print their href attribute.

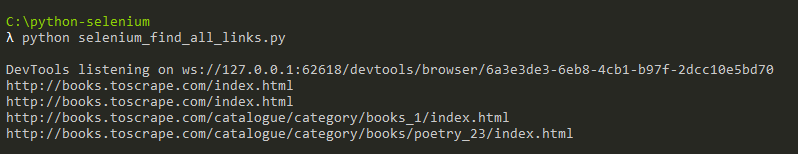

This is the output of the script. It shows the links you’ve just scraped.

Full code so far:

from selenium import webdriver
from selenium.webdriver.common.by import By
# Start a Chrome session and open the example page
driver = webdriver.Chrome()
driver.get("http://books.toscrape.com/catalogue/a-light-in-the-attic_1000/index.html")
# Collect every <a> element and print its href attribute
link_elements = driver.find_elements(By.TAG_NAME, "a")
for link in link_elements:
    print(link.get_attribute("href"))
# Close the browser
driver.quit()
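The loop above prints whatever get_attribute("href") returns. On real pages that can include empty values (for example, anchors without an href) or non-web schemes such as mailto:. Below is a minimal sketch of one way to tidy the results. The helper name clean_hrefs and the sample list are hypothetical, and the sample stands in for the values you would collect from link_elements.

```python
from urllib.parse import urlsplit


def clean_hrefs(raw_hrefs):
    """Keep only http(s) URLs; drop None, empty strings and other schemes."""
    cleaned = []
    for href in raw_hrefs:
        if not href:
            # Skips None and "" (e.g. anchors without an href)
            continue
        if urlsplit(href).scheme in ("http", "https"):
            cleaned.append(href)
    return cleaned


# Hypothetical stand-in for:
# [link.get_attribute("href") for link in link_elements]
sample = [
    "http://books.toscrape.com/index.html",
    None,
    "mailto:someone@example.com",
    "",
    "https://example.com/page",
]

for url in clean_hrefs(sample):
    print(url)
```

In the script, you would build the list from link_elements before calling driver.quit(), since the elements can't be read once the browser session is closed.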

Find Links Using CSS or XPath Selectors

Step 1. We’ll use the Proxyway home page as the example and collect the URLs of the reviews linked from it.

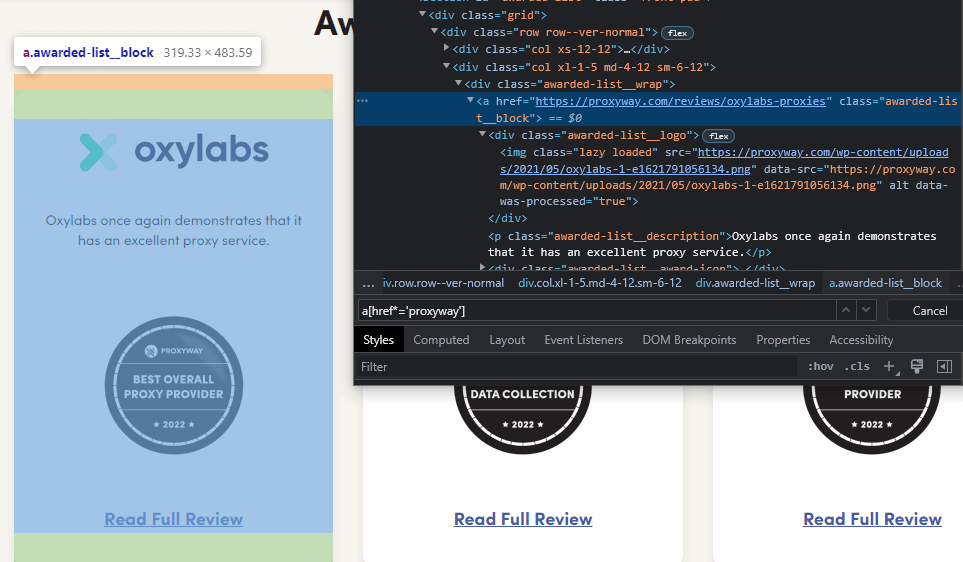

Inspect the page source. All of these links have one thing in common: the string reviews/ appears in their href attributes. That’s what you need to select.

Step 2. You can write the CSS and XPath selectors in this way:

elements_by_css = driver.find_elements(By.CSS_SELECTOR, "a[href*='reviews/']")
elements_by_xpath = driver.find_elements(By.XPATH, "//a[contains(@href,'reviews/')]")

NOTE: Both selectors look for <a> tags in the document whose href attribute contains the substring reviews/.
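To make the "contains" rule concrete without opening a browser, here is a rough sketch that applies the same substring test to a small HTML snippet using only Python's standard library. It is an illustration of the matching logic, not a replacement for the Selenium selectors. The class name ReviewLinkParser and the sample markup are made up for this example.

```python
from html.parser import HTMLParser


class ReviewLinkParser(HTMLParser):
    """Collect href values of <a> tags that contain a given substring."""

    def __init__(self, needle):
        super().__init__()
        self.needle = needle
        self.matches = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        # Same idea as a[href*='reviews/'] and contains(@href,'reviews/')
        if href and self.needle in href:
            self.matches.append(href)


# Hypothetical markup, not taken from the real page
sample_html = """
<a href="/reviews/provider-a/">Provider A</a>
<a href="/blog/some-post/">Blog</a>
<a href="https://example.com/reviews/provider-b/">Provider B</a>
<a>No href</a>
"""

parser = ReviewLinkParser("reviews/")
parser.feed(sample_html)
for href in parser.matches:
    print(href)
```

The match can occur anywhere in the attribute value, so both the relative and the absolute link are picked up, while the blog link and the anchor without an href are skipped.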

Step 3. You can add a couple more lines of code to print everything out. The whole script looks like this:

from selenium import webdriver
from selenium.webdriver.common.by import By
# Start a Chrome session and open the page
driver = webdriver.Chrome()
driver.get("https://proxyway.com")
# Select the same links with a CSS selector and an XPath expression
elements_by_css = driver.find_elements(By.CSS_SELECTOR, "a[href*='reviews/']")
elements_by_xpath = driver.find_elements(By.XPATH, "//a[contains(@href,'reviews/')]")
print("By CSS:")
for link in elements_by_css:
    print(link.get_attribute("href"))
print("By XPath:")
for link in elements_by_xpath:
    print(link.get_attribute("href"))
# Close the browser
driver.quit()

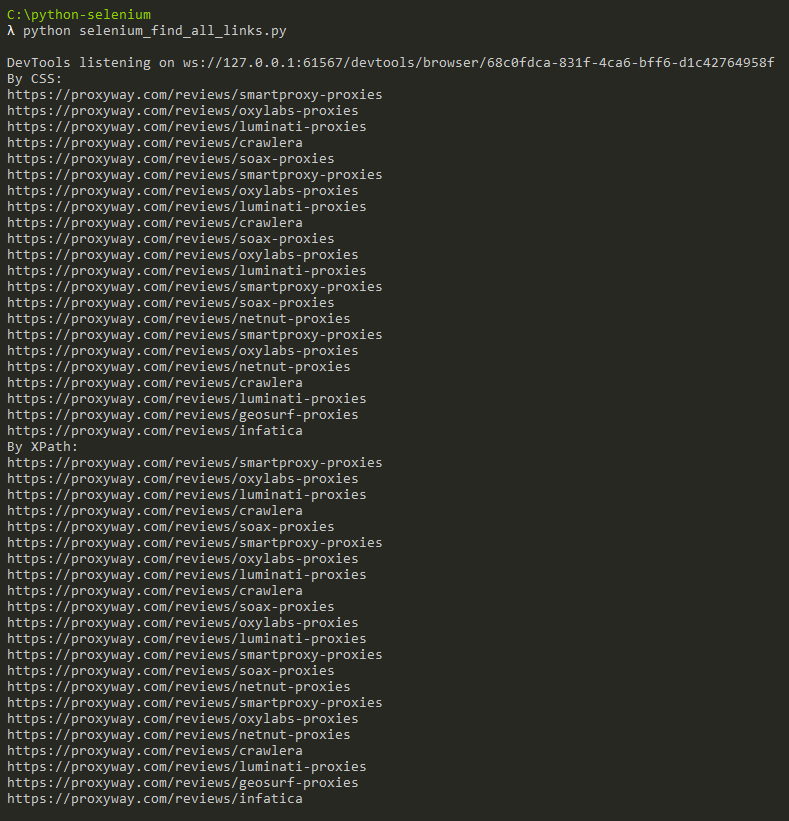

This is the output of the script. It shows the links you’ve just scraped.

NOTE: You can see that both selectors return the same links. Any duplicates can be filtered out by storing the links in a new list that keeps only unique URLs.
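One possible way to do that filtering is sketched below. It relies on the fact that Python dictionaries preserve insertion order, so the first occurrence of each link is kept in its original position. The function name unique_links and the sample URLs are hypothetical; in the script, the input would be the href values collected from the elements.

```python
def unique_links(hrefs):
    """Return hrefs without duplicates, keeping first-seen order."""
    # dict.fromkeys keeps one key per distinct value, in insertion order;
    # the generator also skips None and empty strings.
    return list(dict.fromkeys(h for h in hrefs if h))


# Hypothetical stand-in for:
# [link.get_attribute("href") for link in elements_by_css]
scraped = [
    "https://example.com/reviews/a/",
    "https://example.com/reviews/b/",
    "https://example.com/reviews/a/",
    None,
    "https://example.com/reviews/c/",
    "https://example.com/reviews/b/",
]

for url in unique_links(scraped):
    print(url)
```

A plain set would also remove duplicates, but it doesn't guarantee the original order, which is why this sketch uses dict.fromkeys instead.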