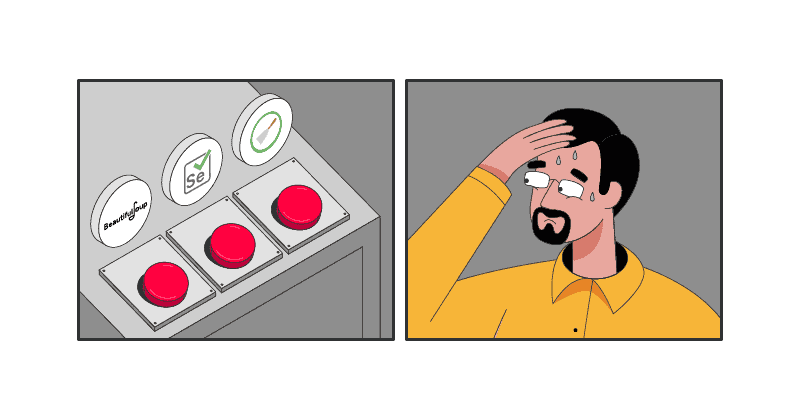

Scrapy vs Beautiful Soup vs Selenium – Which One to Use?

We compare three popular web scraping tools.

Scrapy, Beautiful Soup, and Selenium are three popular web scraping tools. If you’re new to scraping, or simply haven’t tried all of them yet, you may find it hard to figure out which one you need. This guide briefly covers each tool’s capabilities and the situations where it works best. If you’re in a hurry, you can jump straight to the comparison table below.

Scrapy – a Full Package for Large-Scale Scraping

Scrapy is a web crawling and scraping framework. Unlike some other tools, it doesn’t need additional libraries to work (unless you’re dealing with JavaScript): it contains everything you need to crawl pages, download and parse them, and store the data you’ve scraped.

Scrapy is Python-based and open source. These two qualities have made it a popular choice for web scraping, so you’ll find plenty of information about using it, both in its comprehensive documentation and from other scrapers on sites like Stack Overflow.

Scrapy was designed to be highly extensible. Aside from its rich built-in features, it supports middleware and extensions, both of which add custom functionality to your Scrapy crawlers (called spiders). The framework lets you add proxies, control crawl depth, and handle cookies and sessions. There’s even an interactive console, the Scrapy shell, for testing your CSS or XPath expressions live. In short, Scrapy is powerful.

One of Scrapy’s best features is that it handles requests asynchronously: instead of waiting for each response before sending the next request, it keeps many requests in flight at once. This lets you extract data from many pages concurrently, which makes the framework very fast and well suited for large-scale scraping.
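
Concurrency is configured through ordinary settings, usually in a project’s `settings.py`. The sketch below uses real Scrapy setting names, but the values are illustrative assumptions rather than recommendations; sensible numbers depend on the target site and on how politely you need to crawl. `DEPTH_LIMIT` is included because it is the setting that caps crawl depth.

```python
# settings.py (sketch): setting names are Scrapy's, values are illustrative.

# Upper bound on requests Scrapy keeps in flight at the same time, across all sites.
CONCURRENT_REQUESTS = 32

# Upper bound on simultaneous requests to any single domain.
CONCURRENT_REQUESTS_PER_DOMAIN = 8

# Seconds to wait between consecutive downloads from the same site.
DOWNLOAD_DELAY = 0.25

# Let the AutoThrottle extension adapt the delay to observed server latency.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_TARGET_CONCURRENCY = 4.0

# Do not follow links more than 3 hops away from the start URLs.
DEPTH_LIMIT = 3
```

Because requests are scheduled asynchronously, raising `CONCURRENT_REQUESTS` increases throughput without you writing any threading code, while the per-domain limit and the delay keep any single server from being overloaded.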

However, all this functionality and extensibility means that Scrapy isn’t the easiest tool to use. Despite the generous docs, you’ll have to invest a good deal of time to get the hang of things.

It also doesn’t render JavaScript out of the box, so you’ll need to pair it with a headless browser or rendering service such as Splash, Puppeteer, or Selenium for that.

Overall, Scrapy is the tool to look at if you have a big web scraping project, or if you plan to scrape at scale in the future.

Beautiful Soup – a Simple Parser for Beginners and Small Jobs

As Beautiful Soup’s developers put it, they’re here to help you extract data from that awfully written page. They do so with a Python library for parsing data.

In essence, that’s what Beautiful Soup is: a library that parses an HTML or XML page into a navigable structure, lets you select the data you need, and helps you extract it in a usable format. Unlike Scrapy, it can’t crawl pages or make HTTP requests (such as GET requests), so you’ll have to use another library, like Requests, to fetch the pages.
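
Here is a minimal sketch of that division of labor: Requests downloads the page and Beautiful Soup parses it. The function names are hypothetical, and `html.parser` is used because it ships with Python; parsing lives in its own function so it can be tried on any HTML string.

```python
import requests
from bs4 import BeautifulSoup


def parse_links(html):
    """Beautiful Soup's part: turn raw HTML into structured data."""
    soup = BeautifulSoup(html, "html.parser")
    # select() takes a CSS selector; "a[href]" skips anchors without a link target.
    return [
        {"text": a.get_text(strip=True), "href": a["href"]}
        for a in soup.select("a[href]")
    ]


def scrape_links(url):
    """Requests' part: make the HTTP GET request Beautiful Soup can't make itself."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()  # fail loudly on 4xx/5xx responses
    return parse_links(response.text)


sample = '<p><a href="/docs"> Docs </a> <a name="top">no link</a></p>'
print(parse_links(sample))  # [{'text': 'Docs', 'href': '/docs'}]
```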

Strictly speaking, Beautiful Soup isn’t a parser itself but a layer on top of one. It can work with Python’s built-in html.parser, as well as lxml and html5lib if you install them, so you can experiment with different parsing approaches. For example, lxml is the fastest, while html5lib is very slow but extremely lenient, parsing pages the same way a web browser does.
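
Switching parsers is a matter of changing one argument to the `BeautifulSoup` constructor. The sketch below feeds the same broken snippet to each of the three parsers; because `lxml` and `html5lib` are separate installs, it reports any that are missing instead of failing. Each parser may repair the markup differently (some add missing `<html>` and `<body>` tags, for instance), which is why the choice can matter. The helper name is hypothetical.

```python
from bs4 import BeautifulSoup, FeatureNotFound

# Deliberately malformed: unclosed <p> and <b> tags.
BROKEN_HTML = "<p>Unclosed paragraph<p>Another <b>bold text"


def parse_with_each_parser(markup):
    """Map each parser name to the markup it produces, or None if not installed."""
    results = {}
    for parser in ("html.parser", "lxml", "html5lib"):
        try:
            results[parser] = str(BeautifulSoup(markup, parser))
        except FeatureNotFound:
            # Raised when the requested parser isn't available, e.g. before `pip install lxml`.
            results[parser] = None
    return results


for name, output in parse_with_each_parser(BROKEN_HTML).items():
    print(f"{name:<12} {output if output is not None else '(not installed)'}")
```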

Beautiful Soup’s biggest benefit is its simplicity: you can write a basic scraper in minutes and with only a few lines of code. It’s also forgiving of messy, badly formed HTML. These qualities have made Beautiful Soup very popular among web scrapers, so you can benefit from both good documentation and a lively online community of developers.

You can run Beautiful Soup scrapers in parallel, for example by fetching and parsing pages in multiple threads. But setting this up takes extra work, and the result still can’t match Scrapy’s speed.
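
One common way to parallelize is a thread pool from Python’s standard library, since most of a scraper’s time is spent waiting on the network. This is a sketch of one approach, not the only one (multiprocessing or an async HTTP client are alternatives); the function names are hypothetical, and the fetch function is a parameter so the parsing can be exercised without network access.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, Optional

import requests
from bs4 import BeautifulSoup


def fetch_html(url: str) -> str:
    """Download one page; each worker thread calls this independently."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response.text


def parse_title(html: str) -> Optional[str]:
    """Extract the <title> text, or None if the page has no title."""
    soup = BeautifulSoup(html, "html.parser")
    return soup.title.get_text(strip=True) if soup.title else None


def scrape_titles(
    urls: Iterable[str],
    fetch: Callable[[str], str] = fetch_html,
    max_workers: int = 8,
) -> List[Optional[str]]:
    """Fetch and parse pages in parallel; results keep the order of `urls`."""
    def job(url: str) -> Optional[str]:
        return parse_title(fetch(url))

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(job, urls))
```

Calling `scrape_titles(["https://example.com/a", "https://example.com/b"])` would download both pages concurrently. Keep `max_workers` modest, since every thread is another open connection to the target site.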

Overall, Beautiful Soup is a great choice for one-off or smaller web scraping jobs, where you don’t need to consistently extract data on a large scale.

Selenium – a Web Driver for JavaScript-Dependent Pages

Selenium is a browser automation framework: its WebDriver API lets you control a web browser programmatically, including in headless mode (without a visible window). Its primary purpose is automated web testing, but Selenium has also found a role in web scraping. The reason is simple: it can deal with JavaScript.

Over the years, more and more websites have introduced functionality that depends on JavaScript to work. Examples include content that loads asynchronously after the initial page, or those bottomless pages you can scroll endlessly. Scrapers that only download the raw HTML can’t see content that JavaScript adds afterwards, so you need to load and render the full page first. Being able to do so has been Selenium’s claim to fame.

Aside from being an early solution to the JavaScript problem, Selenium is also very versatile. It supports multiple programming languages, including Python, Java, Ruby, and JavaScript (Node.js). It can control all the major browsers: Chrome, Firefox, and even Internet Explorer. And as a long-established tool, it has a large community with a long history of reported issues and solutions.

Selenium lets you not only load a website but also interact with it: emulate user actions, fill in forms, click buttons, and more. In other words, it gives you the full functionality of a real browser.

However, precisely because it drives a whole browser, Selenium isn’t light on resources. It doesn’t help that the only way to run it in parallel is to launch a separate browser instance for each thread or process. The efficiency simply isn’t there. Nowadays, there are arguably better options for headless web scraping, such as Puppeteer or Playwright.

Still, Selenium is a good choice if you need to scrape a small to moderate number of pages that depend on JavaScript. For larger jobs, you’d better have a lot of computing power, or your scraping will become very slow.

Comparing the Three Options

Here’s a brief table that displays the main features of Scrapy, Beautiful Soup, and Selenium side by side:

| Feature | Scrapy | Beautiful Soup | Selenium |
| --- | --- | --- | --- |
| Web crawling | Yes | No | Yes |
| Data parsing | Yes | Yes | Yes |
| Data storage | Yes | No | No |
| Asynchronous | Yes | No | No |
| JavaScript rendering | With external libraries | No | Yes |
| Selectors | CSS, XPath | CSS | CSS, XPath |
| Proxies | Yes | With external libraries | Yes |
| Performance | Fast | Average | Slow |
| Extensibility | High | Limited | Limited |
| Learning curve | Steep | Easy | Steep |
| Best for | Continuous large-scale scraping projects | Small to average scraping projects | Small to average scraping projects that require JavaScript |

Frequently Asked Questions About Scrapy vs Beautiful Soup vs Selenium

Scrapy is much faster than Selenium: it doesn’t need to render the full page, and it handles requests asynchronously.

Although Selenium was designed for automated testing rather than web scraping, it works well for scraping websites that rely on JavaScript.

Beautiful Soup is the best option for beginners who want to try out web scraping. Pair it with an HTTP library like Requests, and you’ll be able to write a simple web scraping script in no time.