Scrapy vs Selenium: Which is Better for Web Scraping in 2026?

Two web scraping tools, different capabilities, and one choice to make: which one better fits your project?

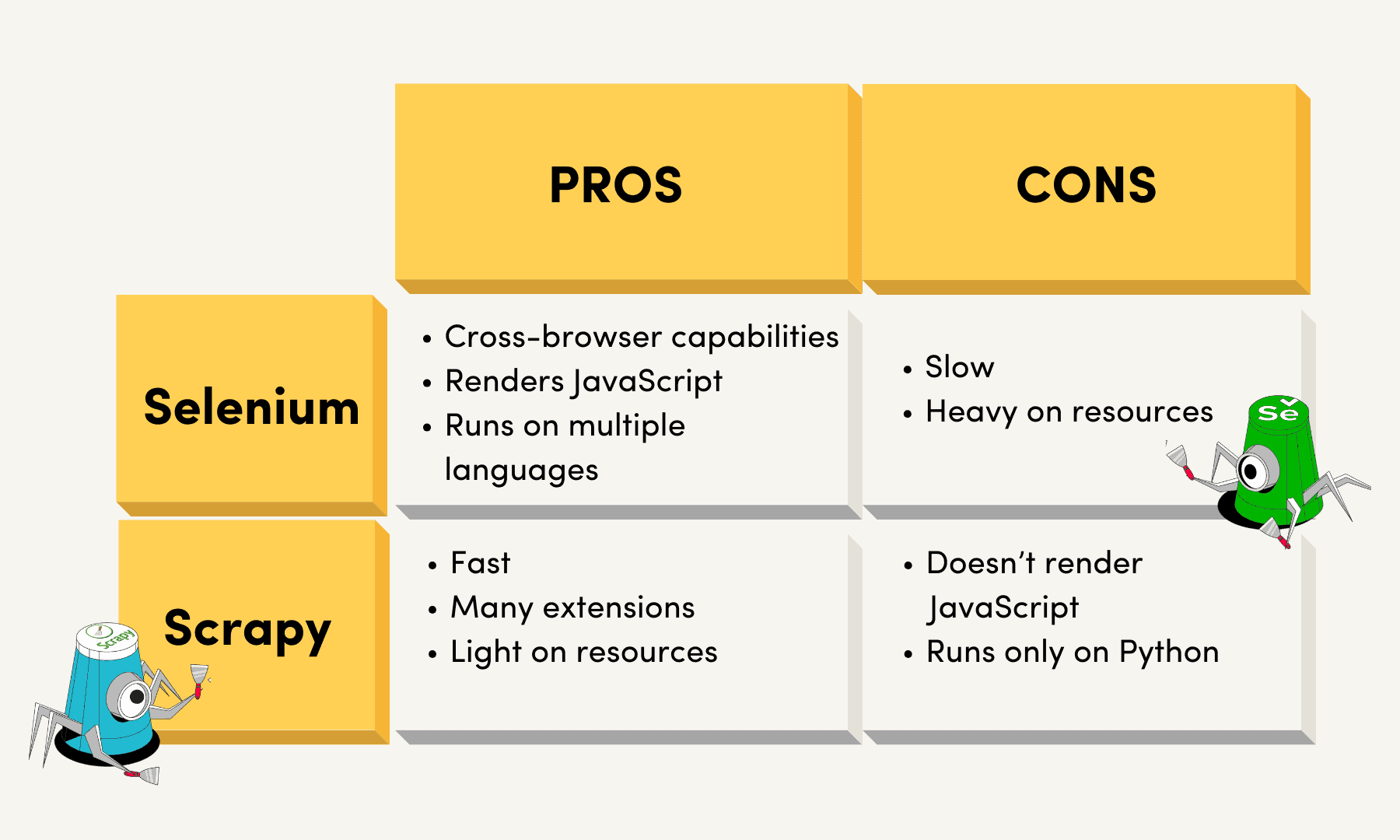

Scrapy and Selenium are two popular but very different tools used in web scraping. The right choice depends on your project’s requirements: do you need to deal with JavaScript-driven elements like infinite scrolling, or do you want to crawl many pages quickly? If you’re unsure whether Scrapy or Selenium suits your project, read on. In this guide, you’ll learn what each tool does, how they perform, and which features they offer.

Scrapy vs Selenium Frameworks for Web Scraping

What is Selenium?

Selenium is a framework best known for testing and automating web browsers, but it can also be used for web scraping. It isn’t a browser itself: it drives real browsers, which can run in headless mode, that is, without rendering visible interface elements like tabs or the URL bar.

Selenium works by launching a web browser, such as Chrome or Firefox, and controlling it programmatically. Because a real browser executes the page’s JavaScript, Selenium handles dynamic elements like infinite scrolling or lazy loading, and it lets you interact with websites in a way that mimics human browsing.

Additionally, Selenium covers the whole interaction on its own: besides the library, you only need a browser and its driver, and recent Selenium 4 releases can fetch a matching driver for you through Selenium Manager. It lets you navigate between pages, click buttons, and fill out forms. This makes Selenium a good choice for scraping dynamic websites that rely on JavaScript to render content.

What is Scrapy?

Scrapy, on the other hand, is a framework specifically designed for web crawling and scraping. It is written in Python and built around the spider concept – classes you define that Scrapy uses to navigate through websites, extract data, and store it.

One of Scrapy’s main strengths is its ability to handle large-scale web scraping projects. It sends requests concurrently, so it can crawl and scrape many pages, or entire websites, efficiently.

Like Selenium, Scrapy is a complete toolset: it can fetch pages, parse them, and structure the extracted data. The main difference is that Scrapy alone can’t handle JavaScript-rendered content. For such pages, Scrapy is usually paired with a headless browser, for example through the scrapy-playwright plugin.

Selenium vs Scrapy for Web Scraping: A Detailed Overview

Prerequisites and Installation

Scrapy. Installation is straightforward. Since Scrapy is a Python library, you’ll need a recent, supported version of Python on your device. To install Scrapy, open a terminal or command prompt and run the following command with pip:

```shell
pip install Scrapy
```


Features

Selenium. To access content on a website, Selenium controls a real browser through a browser driver. Because that browser executes the page’s JavaScript, Selenium can extract content rendered by JavaScript, which Scrapy can’t do on its own. This makes Selenium the go-to choice for scraping dynamic websites.

Another useful feature of Selenium is that it can mimic a real person’s behavior. This matters if you don’t want to look like a bot and keep running into CAPTCHAs. Selenium can also take screenshots, click buttons, and handle pop-ups.

Another big advantage is that Selenium can automate all major browsers, including Chrome, Firefox, Microsoft Edge, and Safari. It’s also flexible in terms of programming languages: Selenium maintains official bindings for Java, Python, C#, Ruby, and JavaScript (Node.js), and community-maintained client bindings let you use it with languages such as PHP, Go, Perl, Haskell, Dart, and R.

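With the third-party selenium-stealth package, you can set a custom user agent and mask browser properties that websites use for fingerprinting, such as the language, platform, and WebGL vendor. Cookies, in turn, can be set with Selenium’s own `add_cookie()` method. This helps reduce digital fingerprinting issues when web scraping.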

Scrapy. Scrapy supports middlewares and extensions that make the framework highly extensible. It uses spiders, classes that specify how a website is crawled and parsed, so you can build custom crawling logic for each of your target websites.

The framework lets you control the crawling speed with the AutoThrottle extension. AutoThrottle adjusts the download delay dynamically based on the load of both the Scrapy server and the target website, which it estimates from response latency. Slow responses increase the delay between requests, so you don’t overload the target website.
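In a project’s `settings.py`, AutoThrottle is switched on with a few settings (names as in the Scrapy docs; the values are examples). The function below is a simplified model of the adjustment rule the docs describe, not Scrapy’s actual code: the target delay is the response latency divided by `AUTOTHROTTLE_TARGET_CONCURRENCY`, the next delay is the average of the previous and target delays, error responses can’t shorten the delay, and the result stays within the configured bounds.

```python
# settings.py (example values)
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5.0          # initial download delay, in seconds
AUTOTHROTTLE_MAX_DELAY = 60.0           # upper bound when latency is high
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0   # average parallel requests per remote site
DOWNLOAD_DELAY = 0.0                    # lower bound for the delay


def next_delay(prev_delay: float, latency: float, status: int = 200) -> float:
    """Simplified model of AutoThrottle's delay update (not Scrapy's source)."""
    target = latency / AUTOTHROTTLE_TARGET_CONCURRENCY
    new = (prev_delay + target) / 2.0
    new = max(DOWNLOAD_DELAY, min(new, AUTOTHROTTLE_MAX_DELAY))
    # Non-200 responses are not allowed to make the crawl faster.
    if status != 200 and new < prev_delay:
        return prev_delay
    return new
```

For example, starting at 5 seconds with a 1-second response, the model moves the delay to 3 seconds; the same latency on a 503 response leaves it at 5 seconds.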

Scrapy has a built-in feature, feed exports, that lets you export data in several formats, such as JSON, JSON Lines, XML, and CSV.

And these are just a few of Scrapy’s advantages. You can also fine-tune your scraper further, from controlling cookies and sessions to checking live, in the interactive Scrapy shell, whether your CSS or XPath expressions select the right elements.

Request Handling

Selenium. Selenium’s API is synchronous: each command blocks until the browser finishes it, so a single browser session handles one page at a time. You can scrape several pages at once by running multiple browser instances in parallel (for example, with threads, processes, or Selenium Grid), but each instance loads a full browser, which consumes a lot of memory and CPU and slows your scraper down.

Scrapy. Scrapy is asynchronous by default: it’s built on top of Twisted, an event-driven networking library, so it can handle many events at the same time instead of waiting on each one. This is useful when you want to make concurrent requests, for example, to scrape many pages at once. Scrapy can also prioritize requests and automatically retry failed ones.

Performance

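Selenium. Even though Selenium is a powerful web scraping tool, it’s relatively slow. Selenium talks to the browser through a driver, such as ChromeDriver or GeckoDriver, using the WebDriver protocol: each command is serialized to JSON and sent over HTTP to the driver, which executes it in the browser and sends a JSON response back. On top of that, the browser downloads and renders the entire page, including scripts, stylesheets, and images. Simply put, every action takes extra trips between your code, the driver, and the browser.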
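To illustrate that overhead, this standard-library sketch builds (but doesn’t send) the HTTP requests behind three simple steps, following the W3C WebDriver endpoints for navigating, finding an element, and reading the page title. The local driver address and the session id are assumptions; Selenium’s client bindings generate these requests for you, one HTTP round trip per command.

```python
import json
import urllib.request

# Assumption: ChromeDriver running locally on its default port, 9515.
DRIVER_URL = "http://localhost:9515"


def webdriver_command(session_id, method, endpoint, payload=None):
    """Build (without sending) the HTTP request for one W3C WebDriver command."""
    data = None if payload is None else json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        f"{DRIVER_URL}/session/{session_id}/{endpoint}",
        data=data,
        method=method,
        headers={"Content-Type": "application/json; charset=utf-8"},
    )


# Hypothetical session id; a real one comes from an earlier POST /session.
session = "abc123"
steps = [
    webdriver_command(session, "POST", "url", {"url": "https://example.com"}),  # navigate
    webdriver_command(session, "POST", "element", {"using": "css selector", "value": "h1"}),  # find
    webdriver_command(session, "GET", "title"),  # read the title
]
for request in steps:
    print(request.get_method(), request.full_url)
```

By contrast, a plain HTTP client such as Scrapy’s downloader would fetch the same HTML with a single GET request to the site and then parse the heading and title locally.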

Scrapy. One of Scrapy’s biggest strengths is its speed. Because the framework is asynchronous by default, it runs requests concurrently, so it doesn’t wait for one request to finish before sending the next. And unlike Selenium, Scrapy doesn’t load a full browser: it fetches only the pages you request, without rendering them.

Scrapy vs Selenium: A Comparison Table

| | Selenium | Scrapy |
|---|---|---|
| Year | 2004 | 2008 |
| Primary purpose | Web testing and automation | Web scraping |
| Prerequisites | Browser and WebDriver | Python |
| Programming languages | Java, Python, C#, Ruby, JavaScript (Node.js); others via community bindings | Python |
| Difficulty setting up | Difficult | Moderate |
| Learning curve | Difficult | Moderate |
| Asynchronous | No | Yes |
| JavaScript rendering | Yes | No (needs a headless browser) |
| Extensibility | Lower | High |
| Performance | Slow | Fast |
| Proxy integration | Yes | Yes |
| Community | Large | Large |
| Best for | Small to mid-sized projects | Small to large projects |

Alternatives to Scrapy and Selenium

Web scraping can be done with other libraries as well. For example, if you’re looking for a headless browser library, you can try web scraping with Playwright. It’s lighter on resources, easier to use, and offers functionality similar to Selenium’s. To find out more, check our guide comparing Playwright and Selenium.


Puppeteer is another great tool for scraping dynamic websites. It’s a Node.js library for controlling Chrome or Chromium, headless by default. Like Selenium, it can automate most browser interactions, such as moving the mouse or filling out forms.
