How to Scrape Google Flights With Python: A Step-by-Step Tutorial

Instead of having multiple browser tabs open to check every destination, you can scrape Google Flights with a Python-based scraper, and get structured flight data in minutes.

Planning trips online has become significantly more convenient, but there are still roadblocks – booking flights can still be time-consuming due to the sheer amount of data. While platforms like Google Flights offer a neat way to check all necessary information and compare it across different airlines, manually looking through each date and destination can be daunting. By automating this process with a Google Flights scraper, gathering large volumes of data and comparing it becomes less of a hassle.

Whether you’re a person looking for a bargain on flight tickets, a business analyst, or a scraping enthusiast searching for a new challenge, this guide will help you build a scraper that collects Google Flights data from scratch.

Why Scrape Google Flights?

Google Flights offers a vast amount of valuable data – from flight times and prices to the environmental impact of the flight. By scraping flight pages you can extract prices, schedules, and availability, as well as plan trips and stay updated when changes are made.

Platforms like Google Flights offer flight information based on your requirements (departure and arrival location, dates, number of passengers), but it’s not always easy to compare it – you need to expand the results to see all relevant information, such as layovers. And having several expanded results can be hardly readable. Scraping real-time data can help you find the best deals, and plan itineraries better. Or, if you’re a business owner, it can help gather market intelligence and analyze customer behavior.

What Google Flight Data You Can Scrape?

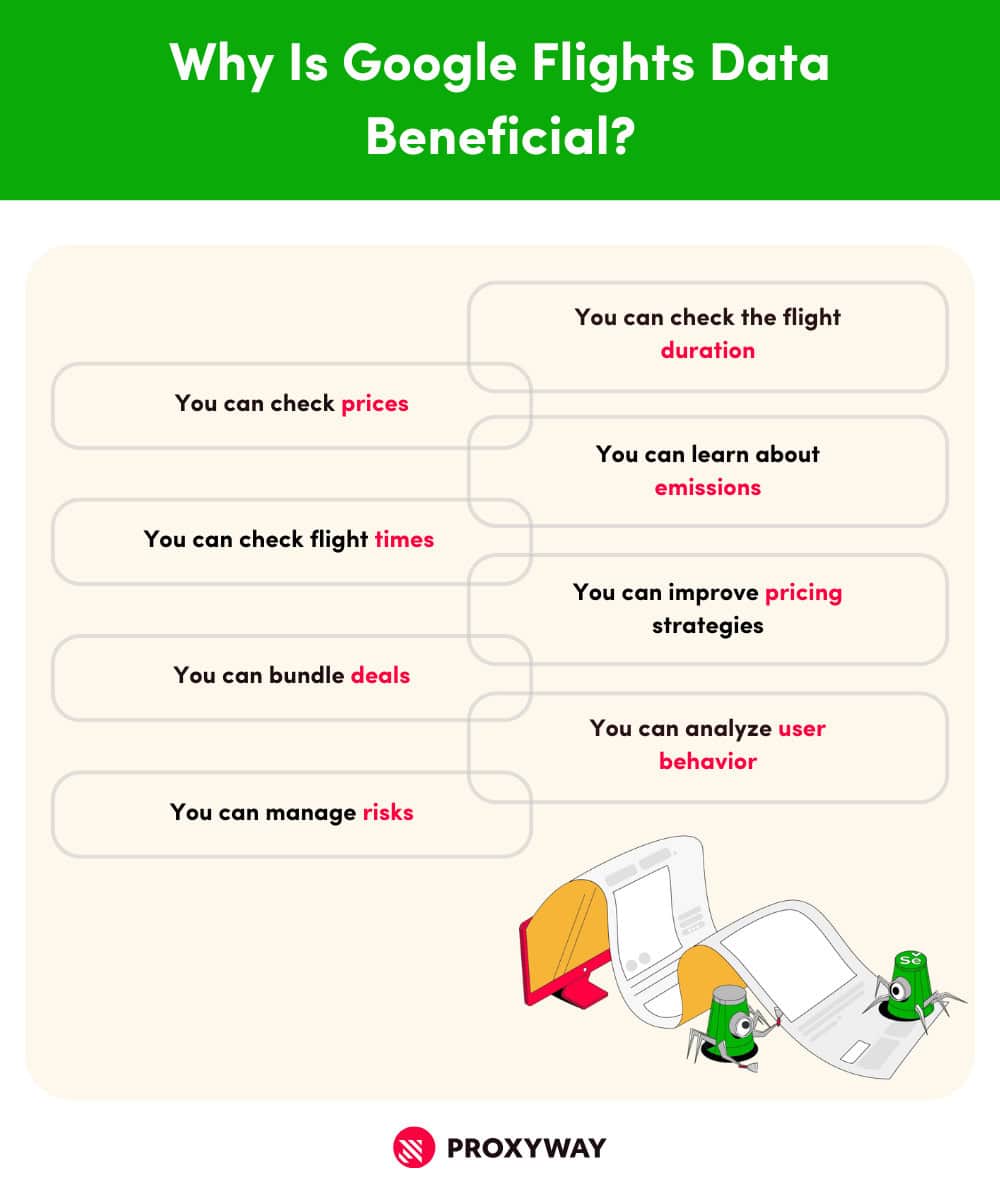

There are dozens of reasons to scrape Google Flights data. While the intention might vary based on what you’re trying to accomplish, both travelers and businesses can benefit from it.

If you’re simply planning a trip, scraping Google Flights data might help you to:

- Compare prices. Getting information about pricing is one of the key reasons why people choose to scrape Google Flights. Structured scraped results can help to evaluate ticket prices, and compare them across different airlines.

- Check flight times. Another major reason to extract Google Flights data is flight times. You can collect departure and arrival times and dates, compare them, and select the option that fits your itinerary best.

- Find out about stops. Most people prefer direct flights. Google Flights has data that allows you to check if there will be any layovers until you reach your destination.

- Review duration. Knowing how long the flight is going to take will help you plan the trip better, and see how the flight fits into your schedule. Such data can give you insights on the duration of your flights between specific locations.

- Learn about emissions. Scraped data from Google Flights can help you to evaluate carbon emissions of the flights, and make more sustainable choices.

If you’re looking to scrape Google Flights for business purposes, you can:

- Analyze user behavior patterns. There are specific times when people tend to travel to certain destinations, such as during winter holidays, summer vacations, and more. By reviewing these behavior patterns, companies can segment user bases and target advertisements better.

- Improve pricing strategies. Flight information is relevant for more businesses than just airports and airlines. Hotels, taxi services, car rental companies, travel insurance companies can review the increase or decrease of demand for specific locations, and adjust their pricing accordingly.

- Create bundle deals. Accurate flight data can help travel agencies create better travel deals by bundling tickets, hotels, transportation, and activities for customers.

- Improve risk management. Travel insurance companies can leverage flight data to identify popular destinations, and adjust policies and pricing to better align with customer demand.

Is Scraping Google Flights Legal?

Data on Google Flights is public, and there are no laws prohibiting the collection of publicly available information. However, there are several things to keep in mind to avoid legal implications.

Here are several tips on how to scrape Google Flights data ethically:

- Comply with Google’s terms of use. Take the time to go over Google’s terms of service to make sure you don’t violate any of their guidelines.

- Read the robots.txt file. The file gives instructions to robots (such as scrapers) about which areas they can and cannot access (e.g., admin panels, password-protected pages). Be respectful and follow the given commands.

How to Scrape Google Flights with Python: Step-by-Step Guide

If you’re looking to build your own Google Flights scraper, here’s a comprehensive guide on how to do so from scratch.

In this example, we’ll use Python with Selenium to build the scraper. Python is a great choice due to its straightforward syntax – it’s relatively easy to write, maintain, and understand. Additionally, since Google Flights is a highly dynamic website, we’ll use Selenium to handle dynamic content and interactive elements, such as buttons.

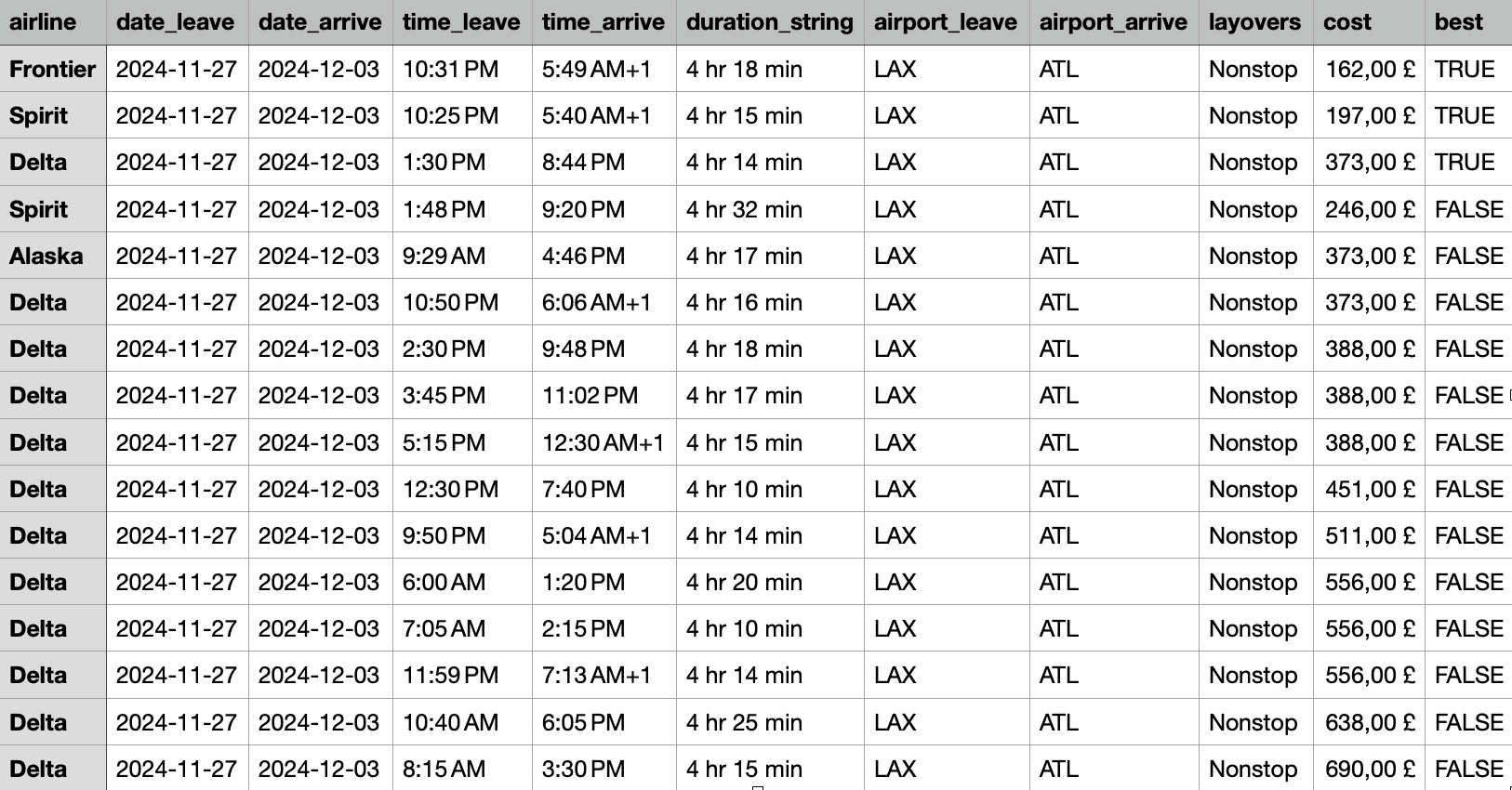

Below is a table containing all information about the scraper we’re going to build.

| Programming language | Python |

| Libraries | Selenium |

| Target URL | https://www.google.com/travel/flights/ |

| Data to scrape | 1. Departure date from the origin location 2. Return date from the destination 3. Operating airline 4. Departure time 5. Arrival time 6. Flight duration 7. Departure airport 8. Arrival airport 9. Layovers 10. Cost of the trip 11. Best offer |

| How to save data | CSV file |

Prerequisites

Before the actual scraping begins, you’ll need to install the prerequisites.

- Install Python. You can download the latest version from Python’s official website. If you’re not sure if you have Python installed on your computer, check it by running python –version in your terminal (Terminal on MacOS or Command Prompt on Windows).

- Install Selenium. To use Selenium with Python for this scraper, install it by running pip install selenium in the Terminal.

- Install Chrome WebDriver. Selenium helps to control headless browsers, such as Chromium (which powers Google Chrome). Download the Chrome WebDriver that corresponds to your Chrome browser.

- Get a text editor. You’ll need a text editor to write and execute your code. There’s one preinstalled on your computer (TextEditor on Mac or Notepad on Windows), but you can opt for a third-party editor, like Visual Studio Code, if you prefer.

Importing the Libraries

Once all your tools are installed, it’s time to import the necessary libraries. Since we’ll be using Python with Chrome, we need to import the WebDriver to the system Path for the browser to work with Selenium.

Step 1. Import WebDriver from Selenium module.

from selenium import webdriver

Step 2. Then, import the By selector module from Selenium to simplify element selection.

from selenium.webdriver.common.by import By

Step 3. Import all necessary Selenium modules before moving on to the next steps.

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions

from selenium.webdriver import Keys, ActionChains

Step 4. We want to save our results into a CSV file, so let’s import the CSV module, too.

import csv

Setting Up Global Variables and Parameters

After importing all the necessary libraries, we need to to set up global variables to store key values. These include the target URL, a timeout (to accommodate page loading time), and any specific parameters.

Step 5. So, let’s set up global variables.

start_url = "https://www.google.com/travel/flights"

timeout = 10 #seconds

Step 6. Next, set up the parameters for the scraper – specifically, the criteria you’re looking for in the flights. These include departure and arrival locations, as well as travel dates.

my_params = {

'from': 'LAX',

'to': 'Atlanta',

'departure': '2024-11-27',

'return': '2024-12-03'

}

Note: You can also define parameters for one-way flights, too.

my_params = {

'from': 'LAX',

'to': 'Atlanta',

'departure': '2024-11-27',

'return': None

}

When browsing Google Flights, you don’t need to specify the exact airport for departure or arrival – you can simply enter a city (or even a country) instead because we’re using the auto-complete feature. It simplifies location input by suggesting relevant options. For example, typing Los will display suggestions that match the input – LOS airport in Nigeria, Los Angeles in the U.S., or Los Cabos in Mexico.

You can edit these values as you see fit – your ‘from’ value can be set to ‘Los Angeles’, and the scraper will target any airport in Los Angeles for departure. You can also specify a different airport, like ‘JFK’ or change the dates completely. But, for the sake of this example, let’s use LAX for departure and any airport in Atlanta for arrival.

Setting Up the Browser

Step 7. Before we start scraping with Selenium, you need to prepare the browser. As mentioned earlier, we’ll be using Chrome in this example.

def prepare_browser() → webdriver:

chrome_options = webdriver.ChromeOptions()

chrome_options.add_argument(‘--disable-gpu’)

driver = webdriver.Chrome(options = chrome_options)

return driver

Note: This browser setup will allow you to see the scraping in action. However, you can add an additional chrome_options line to run Chrome in headless mode.

def prepare_browser() → webdriver:

chrome_options = webdriver.ChromeOptions()

chrome_options.add_argument(‘--disable-gpu’)

chrome_options.add_argument(“--headless=new”)

driver = webdriver.Chrome(options = chrome_options)

return driver

Step 8. It’s also important to set up the main() function. It calls the prepare_browser function, which returns a Chrome driver. Additionally, we need to instruct the driver to execute the scraping, and close when it’s finished.

def main() -> None:

driver = prepare_browser()

scrape(driver)

driver.quit()

if __name__ = ‘__main__’:

main()

Scraping Google Flights

When the browser is prepared, we can actually start scraping the results from the Google Flights page.

Handling Cookies on Google Flights with Python

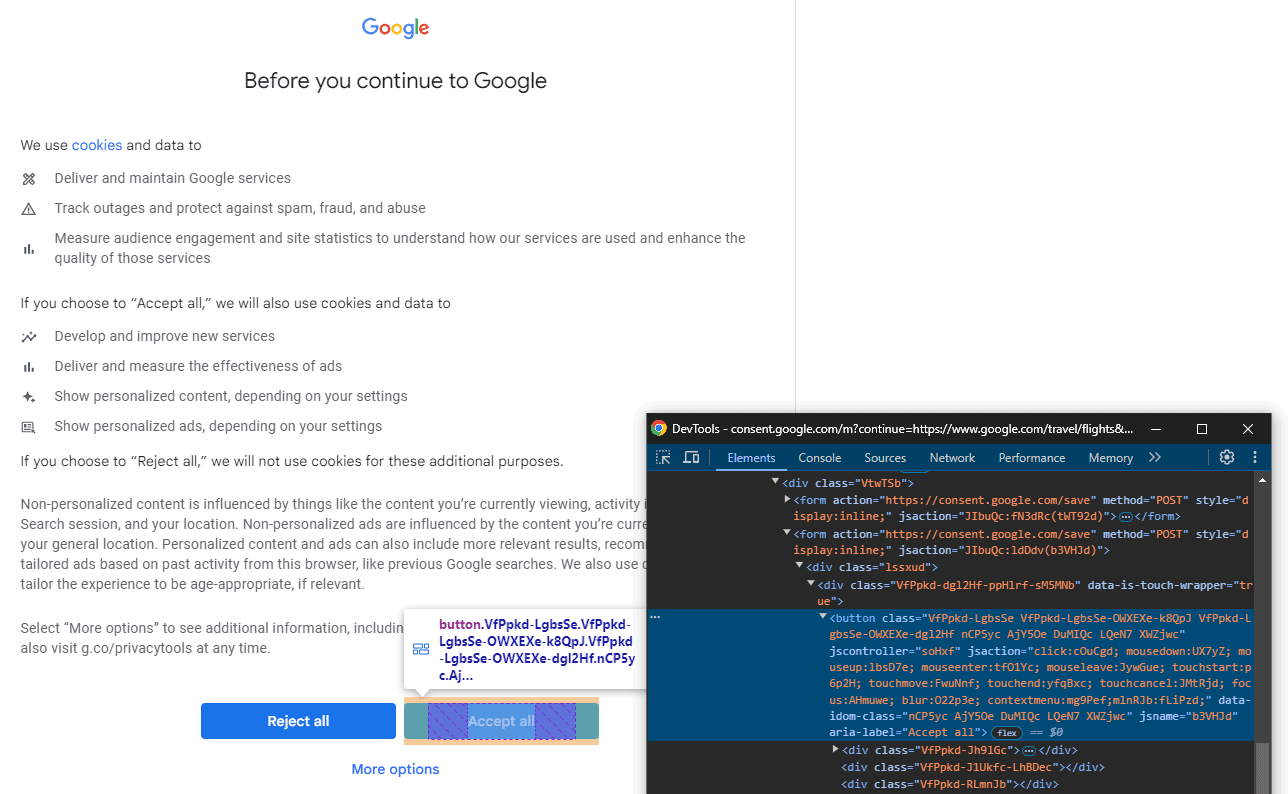

While the start_url is the Google Flights main page, the scraper might bump into a cookie consent page first. Hence, we need to instruct our scraper to handle it.

Step 9. Let’s provide the scraper with some extra information to handle the cookie consent page. Namely, find and copy the CSS selectors for the “Accept” button. We can do this by using Inspect Element.

If the scraper successfully clicks the “Accept” button on the cookie consent page, we’ll still need to wait until the actual Flights page loads. In this example, we’re using the “Search” button’s appearance as an indication that our target page has loaded.

Step 10. Using the search button’s CSS selector, instruct the scraper to wait for it to appear before moving on to the next step. So, let’s write a function that will print “Search button found, continuing.” if everything went well, and “Something went wrong.” if the scraper couldn’t locate said button.

Here’s how the function for accepting cookies and locating the “Search” button looks like:

def scrape(driver: webdriver) -> None:

driver.get(start_url)

if driver.current_url.startswith("https://consent.google.com"):

print ("Hit the consent page, handling it.")

btn_consent_allow = driver.find_element(

By.CSS_SELECTOR, 'button.VfPpkd-LgbsSe[jsname="b3VHJd')

btn_consent_allow.click()

try:

WebDriverWait(driver, timeout).until(

expected_conditions.presence_of_element_located((

By.CSS_SELECTOR, 'button[jsname="vLv7Lb"]'))

)

print ("Search button found, continuing.")

prepare_query(driver)

except Exception as e:

print("Something went wrong: {e}")

Continuing in the def scrape function, let’s add some code instructing the scraper to locate and click on the “Search” button, and print “Got the results back.” when the scraping is finished.

search_btn = driver.find_element(By.CSS_SELECTOR, 'button[jsname="vLv7Lb"]')

search_btn.click()

try:

WebDriverWait(driver, timeout).until(

expected_conditions.presence_of_element_located((

By.CSS_SELECTOR, 'ul.Rk10dc'))

)

print ("Got the results back.")

Scraping Google Flights

At the beginning of our script, we defined our parameters: origin location (‘from’), destination (‘to’), a date for departure (‘departure’), and a date for return (‘return’). These parameters will help the scraper fill in the query fields. To allow def scrape to function properly, we need to instruct the scraper about how it should prepare the search query.

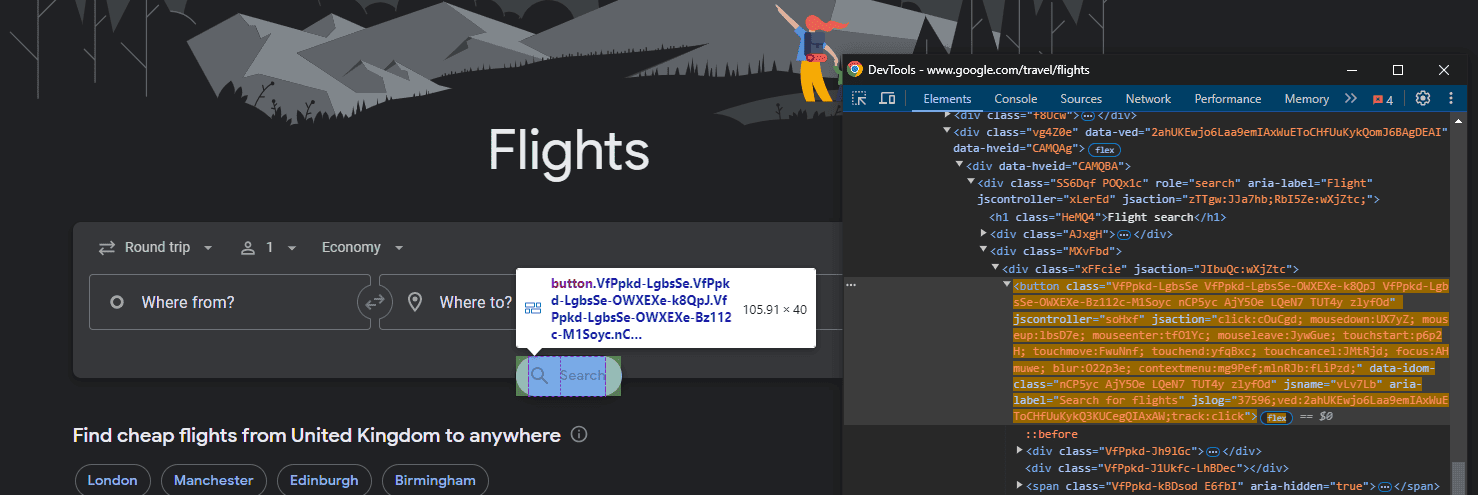

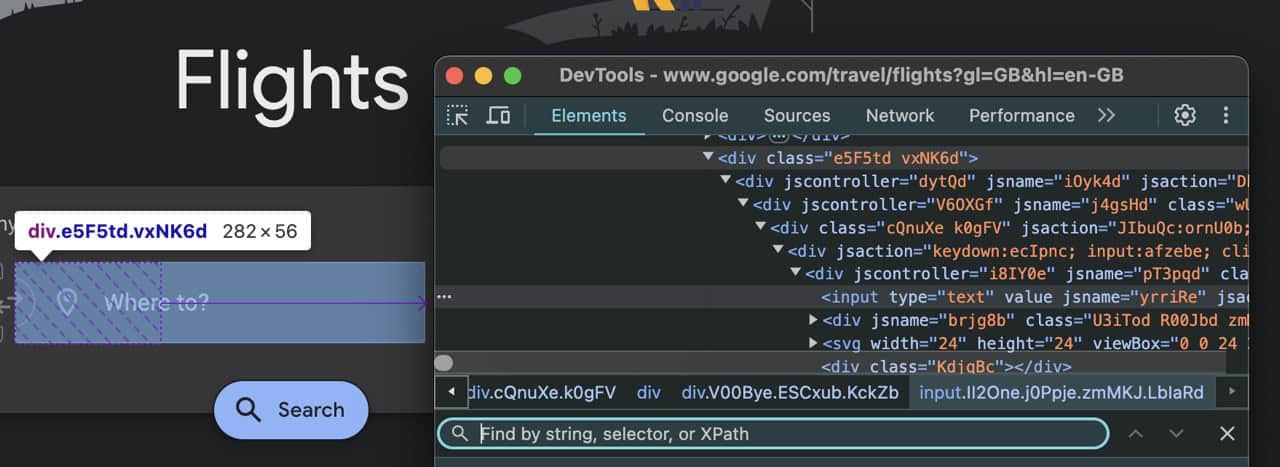

Step 11. While we have our values ready, the scraper needs to know where to use them. For that, we’ll need to find and copy another set of CSS selectors for “Where from?”, “Where to?”, and date fields.

However, we need to prepare our scraper for two potential date_to options – if the return date is defined in my_params, and if it’s not.

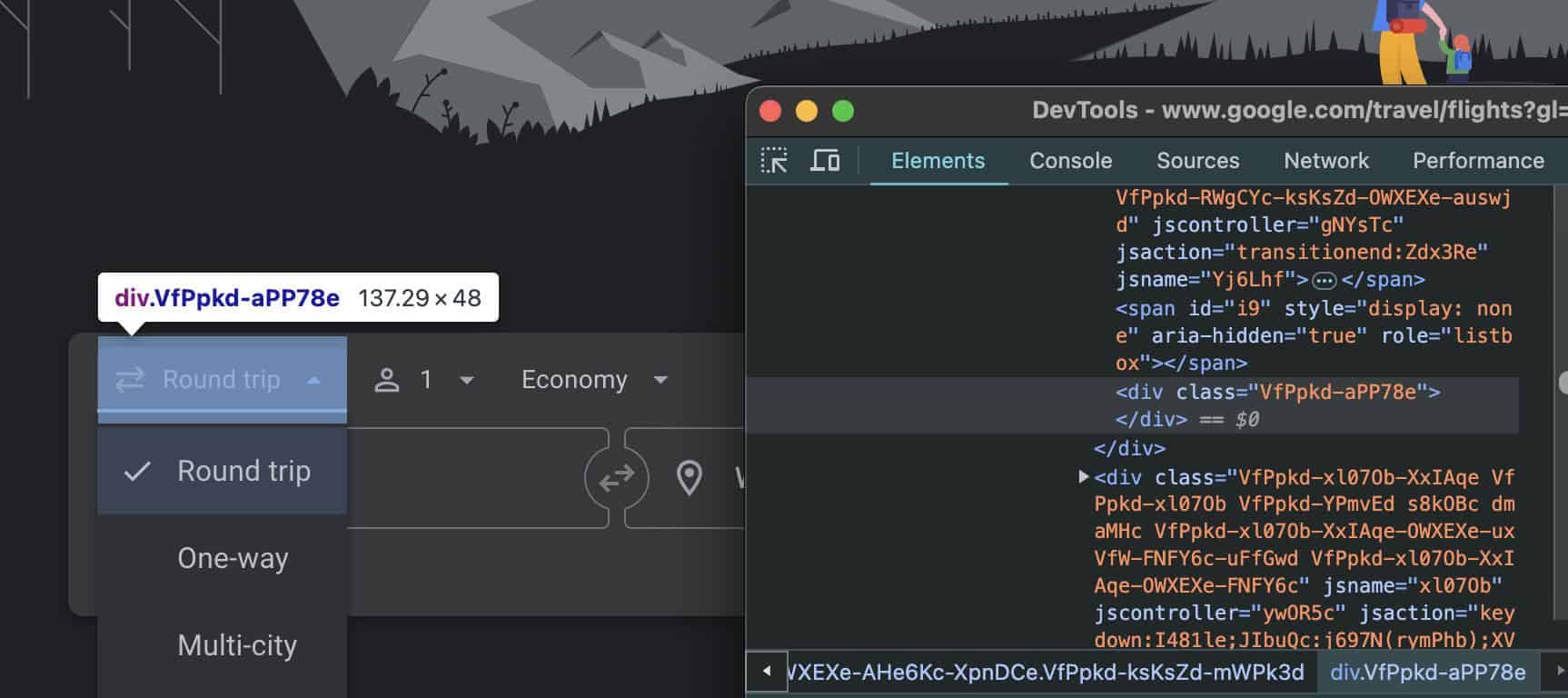

However, if the return date is set to None, we’ll also need to change the selection to One-way (instead of Round trip) in the dropdown menu. Thus, we’ll need a CSS selector for the menu as well.

Step 12. Instruct the scraper about how it should fill in the “Where from?”, “Where to?”, and date fields.

def prepare_query(driver) -> None:

field_from = driver.find_element(By.CSS_SELECTOR, 'div.BGeFcf div[jsname="pT3pqd"] input')

field_to = driver.find_element(By.CSS_SELECTOR, 'div.vxNK6d div[jsname="pT3pqd"] input')

date_from = driver.find_element(By.CSS_SELECTOR, 'div[jsname="I3Yihd"] input')

date_to = None

if my_params['return'] is None or my_params['return'] == '':

dropdown = driver.find_element(By.CSS_SELECTOR, 'div.VfPpkd-aPP78e')

dropdown.click()

ActionChains(driver)\

.move_to_element(dropdown)\

.send_keys(Keys.ARROW_DOWN)\

.pause(1)\

.send_keys(Keys.ENTER)\

.perform()

The if function might find a pre-defined return date in my_params. If that’s the case, we need to find a CSS selector for the return date field instead of changing the the value in the dropdown menu. The scraper will fill in the form using data from my_params.

else:

date_to = driver.find_element(By.CSS_SELECTOR,

'div.K2bCpe div[jsname="CpWD9d"] input')

field_input(driver, field_from, my_params['from'])

field_input(driver, field_to, my_params['to'])

field_input(driver, date_from, my_params['departure'])

if date_to is not None:

field_input(driver, date_to, my_params['return'])

ActionChains(driver)\

.send_keys(Keys.ENTER)\

.perform()

print("Done preparing the search query")

Step 13. Once all the fields we need to fill in are defined, instruct the scraper to enter the information into the selected fields.

We’ll use ActionChains to send the text that needs to be typed in. Additionally, let’s instruct the scraper to press Enter, so that the first suggested option for departure and arrival dates is selected from the dropdown menu.

def field_input(driver, element, text) -> None:

element.click()

ActionChains(driver)\

.move_to_element(element)\

.send_keys(text)\

.pause(1)\

.send_keys(Keys.ENTER)\

.perform()

Note: In Step 10, we instructed the scraper to click on the “Search” button to run this search query.

Returning the Results

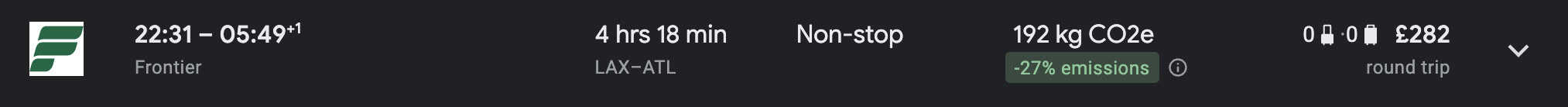

If you check the Google Flights page source, you’ll notice that the results come in an unordered list, where one list item contains all the information about a single trip – the dates, times, price, layovers, and more. When browsing the page, each list item should look something like this:

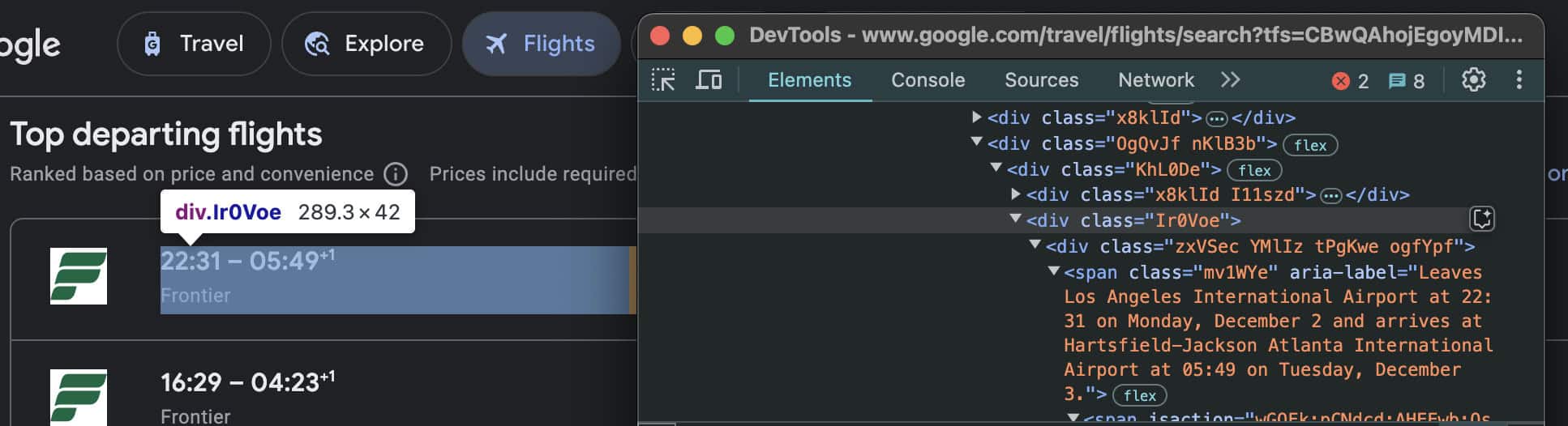

Step 14. If we want these results to sit neatly in a table when we save them, we need to store them into our “dictionary”. To do this, we need to collect the CSS selectors for each element in the result.

def get_flight_info(element, best) -> dict:

Let’s begin with flight times. The departure time time[0] will be time_leave, and arrival time – time[1] as time_arrive.

times = element.find_elements(By.CSS_SELECTOR,

'div.Ir0Voe span[role="text"]')

Let’s do the same thing with airports.

airports = element.find_elements(By.CSS_SELECTOR,

'div.QylvBf span span[jscontroller="cNtv4b"]')

And the rest of the provided information – airlines, layovers, cost, and suggested best result.

flight_info = {

'airline': element.find_element(By.CSS_SELECTOR,

'div.Ir0Voe > div.sSHqwe.tPgKwe.ogfYpf > span:last-child').text,

'date_leave': my_params['departure'], #This will be filled in from my_params

'date_arrive': my_params['return'], #This will also be filled from my_params

'time_leave': times[0].text,

'time_arrive': times[1].text,

'duration_string': element.find_element(By.CSS_SELECTOR,

'div.Ak5kof > div.gvkrdb.AdWm1c.tPgKwe.ogfYpf').text,

'airport_leave': airports[0].text,

'airport_arrive': airports[1].text,

'layovers': element.find_element(By.CSS_SELECTOR,

'div.BbR8Ec > div.EfT7Ae.AdWm1c.tPgKwe > span').text,

'cost': element.find_element(By.CSS_SELECTOR,

'div.U3gSDe div div.YMlIz.FpEdX span').text,

'best': best #True for flights from the suggested best list, or False for everything else

}

return flight_info

Extracting and Parsing the Page Data

Google Flights has a neat feature that provides you with the best results (the shortest flight duration, fewest layovers, the cheapest flight), as well as all available results based on your query. You may not like the suggested best results, so let’s save both best and all other remaining results in a list list_elems.

Step 15. Let’s adjoin these two lists, and return them as a single item under one name – list_of_flights.

def find_lists(driver):

list_elems = driver.find_elements(By.CSS_SELECTOR, 'ul.Rk10dc')

list_of_flights = parse(list_elems[0], True) + parse(list_elems[1], False)

return list_of_flights

It’s important to parse the downloaded page to collect only the necessary information – in this case, the flight lists. As mentioned before, we have two of them – the best results list and the rest. But we don’t want them to be separated in our final saved list of all flights.

Step 16. Let’s parse our page data. The list_of_flights will contain the final results.

def parse(list_elem: list, best: bool) -> list:

list_items = list_elem.find_elements(By.CSS_SELECTOR, 'li.pIav2d')

list_of_flights = []

for list_item in list_items:

list_of_flights.append(get_flight_info(list_item, best))

return list_of_flights

Saving the Output to CSV

At the very beginning, we imported the CSV library to save our data.

Step 17. Let’s add a few extra lines of code so that all flight information we previously defined in our dictionary and scraped results are saved.

def write_to_csv(flights):

field_names = ['airline','date_leave','date_arrive','time_leave',

'time_arrive','duration_string','airport_leave',

'airport_arrive','layovers','cost','best']

output_filename = 'flights.csv'

with open (output_filename, 'w', newline='', encoding='utf-8') as f_out:

writer = csv.DictWriter(f_out, fieldnames = field_names)

writer.writeheader()

writer.writerows(flights)

Here’s the entire script for this Google Flights scraper:

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions

from selenium.webdriver import Keys, ActionChains

import csv

start_url = "https://www.google.com/travel/flights"

timeout = 10

my_params = {

'from': 'LAX',

'to': 'Atlanta',

'departure': '2024-11-27',

'return': '2024-12-03'

}

my_params2 = {

'from': 'LAX',

'to': 'Atlanta',

'departure': '2024-11-27',

'return': None

}

def prepare_browser() -> webdriver:

chrome_options = webdriver.ChromeOptions()

chrome_options.add_argument('--disable-gpu')

driver = webdriver.Chrome(options=chrome_options)

return driver

def field_input(driver, element, text) -> None:

element.click()

ActionChains(driver)\

.move_to_element(element)\

.send_keys(text)\

.pause(1)\

.send_keys(Keys.ENTER)\

.perform()

def prepare_query(driver) -> None:

field_from = driver.find_element(By.CSS_SELECTOR, 'div.BGeFcf div[jsname="pT3pqd"] input')

field_to = driver.find_element(By.CSS_SELECTOR, 'div.vxNK6d div[jsname="pT3pqd"] input')

date_from = driver.find_element(By.CSS_SELECTOR, 'div[jsname="I3Yihd"] input')

date_to = None

if my_params['return'] is None or my_params['return'] == '':

dropdown = driver.find_element(By.CSS_SELECTOR, 'div.VfPpkd-aPP78e')

dropdown.click()

ActionChains(driver)\

.move_to_element(dropdown)\

.send_keys(Keys.ARROW_DOWN)\

.pause(1)\

.send_keys(Keys.ENTER)\

.perform()

else:

date_to = driver.find_element(By.CSS_SELECTOR, 'div.K2bCpe div[jsname="CpWD9d"] input')

field_input(driver, field_from, my_params['from'])

field_input(driver, field_to, my_params['to'])

field_input(driver, date_from, my_params['departure'])

if date_to is not None:

field_input(driver, date_to, my_params['return'])

ActionChains(driver)\

.send_keys(Keys.ENTER)\

.perform()

print("Done preparing the search query")

def get_flight_info(element, best) -> dict:

times = element.find_elements(By.CSS_SELECTOR, 'div.Ir0Voe span[role="text"]')

airports = element.find_elements(By.CSS_SELECTOR, 'div.QylvBf span span[jscontroller="cNtv4b"]')

flight_info = {

'airline': element.find_element(By.CSS_SELECTOR, 'div.Ir0Voe > div.sSHqwe.tPgKwe.ogfYpf > span:last-child').text,

'date_leave': my_params['departure'],

'date_arrive': my_params['return'],

'time_leave': times[0].text,

'time_arrive': times[1].text,

'duration_string': element.find_element(By.CSS_SELECTOR, 'div.Ak5kof > div.gvkrdb.AdWm1c.tPgKwe.ogfYpf').text,

'airport_leave': airports[0].text,

'airport_arrive': airports[1].text,

'layovers': element.find_element(By.CSS_SELECTOR, 'div.BbR8Ec > div.EfT7Ae.AdWm1c.tPgKwe > span').text,

'cost': element.find_element(By.CSS_SELECTOR, 'div.U3gSDe div div.YMlIz.FpEdX span').text,

'best': best

}

return flight_info

def parse(list_elem: list, best: bool) -> list:

list_items = list_elem.find_elements(By.CSS_SELECTOR, 'li.pIav2d')

list_of_flights = []

for list_item in list_items:

list_of_flights.append(get_flight_info(list_item, best))

return list_of_flights

def find_lists(driver):

list_elems = driver.find_elements(By.CSS_SELECTOR, 'ul.Rk10dc')

list_of_flights = parse(list_elems[0], True) + parse(list_elems[1], False)

return list_of_flights

def write_to_csv(flights):

field_names = ['airline', 'date_leave', 'date_arrive', 'time_leave',

'time_arrive', 'duration_string', 'airport_leave',

'airport_arrive', 'layovers', 'cost', 'best']

output_filename = 'flights.csv'

with open(output_filename, 'w', newline='', encoding='utf-8') as f_out:

writer = csv.DictWriter(f_out, fieldnames=field_names)

writer.writeheader()

writer.writerows(flights)

def scrape(driver: webdriver) -> None:

driver.get(start_url)

if driver.current_url.startswith("https://consent.google.com"):

print("Hit the consent page, dealing with it.")

btn_consent_allow = driver.find_element(By.CSS_SELECTOR, 'button.VfPpkd-LgbsSe[jsname="b3VHJd')

btn_consent_allow.click()

try:

WebDriverWait(driver, timeout).until(

expected_conditions.presence_of_element_located((

By.CSS_SELECTOR, 'button[jsname="vLv7Lb"]'))

)

print("Search button found, continuing.")

prepare_query(driver)

except Exception as e:

print("Something went wrong: {e}")

search_btn = driver.find_element(By.CSS_SELECTOR, 'button[jsname="vLv7Lb"]')

search_btn.click()

try:

WebDriverWait(driver, timeout).until(

expected_conditions.presence_of_element_located((

By.CSS_SELECTOR, 'ul.Rk10dc'))

)

print("Got the results back.")

flights = find_lists(driver)

write_to_csv(flights)

except Exception as e:

print(f"Something went wrong: {e}")

def main() -> None:

driver = prepare_browser()

scrape(driver)

driver.quit()

if __name__ == '__main__':

main()

Avoiding the Roadblocks When Scraping Google Flights

Building a Google Flights scraper can be a pretty daunting task, especially if you’re new to scraping but it can become even more difficult if you’re going to scrape it a lot. While we have solved issues like the cookie consent page already, other issues can arise if you’re scraping at scale.

Use Proxies to Mask Your IP

Websites don’t like bot traffic, so they try to prevent it by using tools like Cloudflare. While scraping the Google Flights page once or twice probably won’t get you rate-limited or banned, it can happen if you try to scale up.

You can use proxy services to prevent that. Proxies will mask your original IP by routing the requests through different IP addresses, making them blend in with regular human traffic. Typically, human traffic comes from residential IPs, so this type of proxy is the least likely to be detected and blocked.

This is a step-by-step guide on how to set up and authenticate a proxy with Selenium using Python.

Use the Headless Browser Mode

The Google Flights page is a dynamic website that heavily relies on JavaScript – not only for storing data, but also for anti-bot protection. Running your scraper in headless Chrome mode allows it to render JavaScript like a regular user would and even modify the browser fingerprint.

A browser fingerprint is a collection of unique parameters like screen resolution, timezone, IP address, JavaScript configuration, and more, that slightly vary among users but remain typical enough to avoid detection. Headless browsers can mimic these parameters to appear more human-like, reducing the risk of detection.

Step 7 in Setting Up the Browser gives two examples of how to set up Chrome for scraping, one of them containing this line of code: chrome_options.add_argument(“–headless=new”).

Adding this chrome_option will run the browser in headless mode. You may not want to use it now, but it’s good to know how to enable it if necessary.

Be Aware of Website’s Structural Changes

This Google Flights scraper relies heavily on CSS selectors – they help to find the specific input fields and fill them in. However, if Google makes adjustments to the Flights page, the scraper might break. That’s because the CSS selectors can change when a site developer modifies the HTML structure.

If you plan to use this Google Flights scraper regularly, keep in mind that selectors can change over time, and you’ll need to update them to keep the scraper functional.

Conclusion

Scraping Google Flights with Python is no easy feat, especially for beginners, but it offers a great deal of information useful for travelers and businesses alike. Despite the project’s difficulty, this data will be helpful when planning a trip, or gathering market intelligence, analyzing trends, and better understanding your customer needs.