How to Scrape Facebook in 2026

This is a step-by-step example of how to scrape publicly available Facebook data using Python.

Businesses gather Facebook data to perform sentiment and competitor analysis, protect their online reputation, or find influencers. However, the platform is hostile towards scrapers – from IP blocks to rate throttling, data gathering can become cumbersome without the right tools and knowledge.

In this guide, you’ll learn how to legally scrape Facebook, what tools are necessary for a high success rate, and how to avoid an IP address ban. Also, we’ll provide you with a real-life example of scraping Facebook pages using a Python-based scraper.

What is Facebook Scraping – the Definition

Facebook scraping is a way to collect data from the social media platform automatically. People usually scrape Facebook data using pre-made web scraping tools or custom-built scrapers. The collected data is then parsed (cleaned) and exported into an easy-to-analyze format like .json.
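As a rough illustration of the parse-and-export step, the sketch below cleans two made-up post records and writes them to a JSON file. The field names (`text`, `likes`), the `clean_post` helper, and the output path are assumptions for this example, not the output of any particular scraper.

```python
import json
import os
import tempfile


def clean_post(raw):
    """Normalize one raw record: collapse whitespace, convert the like count to int."""
    return {
        "text": " ".join(raw["text"].split()),
        "likes": int(str(raw["likes"]).replace(",", "")),
    }


# Made-up raw records standing in for scraped data
raw_posts = [
    {"text": "  New album   out now! ", "likes": "1,204"},
    {"text": "Tour dates\nannounced", "likes": 87},
]

cleaned = [clean_post(post) for post in raw_posts]

# Example output location in the system temp directory; use any path you like
out_path = os.path.join(tempfile.gettempdir(), "posts.json")
with open(out_path, "w", encoding="utf-8") as f:
    json.dump(cleaned, f, ensure_ascii=False, indent=2)
```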

By scraping data points such as posts, likes, or followers, businesses collect customer opinions, analyze market trends, monitor online branding efforts, and protect their reputation.

Is Scraping Facebook Data Legal?

So, What Facebook Data Can You Scrape?

First and foremost, if you want to scrape social media data, you need to make sure it’s 1) publicly available, and 2) not protected by copyright law. Here are the main publicly available categories on Facebook:

Profiles: latest posts, username, profile URL, profile photo URL, following and followers, likes and interests, and other public information included in profiles.

Posts: latest posts, date, location, likes, views, comments, text and media URLs.

Hashtags: post URL, media URL, post author ID.

Facebook business pages: URL, profile image, name, likes, story, followers, contact information, website, category, username, avatar, type, verified, related pages information.

If you’ll be collecting personal information (which is very likely), more rules apply, like the need to inform the person and give them the right to opt out. It’s always a good idea to consult a lawyer to make sure you’re in the right.

How to Choose a Facebook Scraper

One way to go about Facebook scraping is to build your own scraper using frameworks like Selenium and Playwright. Both are popular tools for controlling headless browsers that are necessary for scraping Facebook. However, the platform is scraper-hostile, so a self-built tool is best for intermediate to advanced users.

An easier solution is to use a pre-made scraper. Let’s take Facebook-page-scraper as an example. It’s a Python package created to scrape the front-end of Facebook pages. Such scrapers already include the logic for extracting and structuring relevant data. However, they won’t work without additional tools like proxies which help to mask the web scraper’s digital fingerprint.

The most straightforward option is to buy a commercial web scraper. There are several options to choose from based on your technical knowledge and needs:

- If you don’t want to meddle with the code, go with a no-code scraper. Services like Parsehub, PhantomBuster, or Octoparse offer scrapers that let you extract data by clicking on visual elements. They’re handy for small-scale data collection or when you don’t need to run a complex setup.

- Alternatively, you can get a web scraping API. They resemble pre-made web scrapers but are better maintained and have all the necessary elements built in. So all you need to do is send requests and store the output. Companies like Decodo (formerly Smartproxy) and Bright Data offer APIs that can scrape Facebook.

How to Scrape Facebook Posts: A Step-By-Step Example Using Python

Necessary Tools to Start Scraping Facebook

For the scraper to work, you’ll need to use a proxy server and a headless browser library.

Facebook takes various actions against scrapers, from request limiting to IP address blocks. A proxy can help you avoid these by masking your IP address and location. If you don’t know where to find quality IPs, we’ve made a list of best Facebook proxy providers.

We’ll need a headless browser for two reasons. First, it will help us load dynamic elements. And second, since Facebook uses anti-bot protection, it will allow us to mimic a realistic browser fingerprint.

Managing Expectations

Before going into the code, there are a few things you need to know.

The Facebook scraper is limited to publicly available data. We’re not encouraging you to scrape data behind a login, but some of you might find this a downside.

Recently, Facebook has made some updates that impacted the scraper we’ll be using. If you want to scrape multiple pages or avoid the cookie consent prompt, you’ll have to make some adjustments to the scraper files. But don’t worry – we’ll guide you every step of the way.

If you want to go into more detail about web scraping, have a look at the best web scraping practices in our guide.

Preliminaries

Before we start, you’ll need to have Python installed; the json module used later is part of Python’s standard library, so it needs no separate installation. Then, you’ll need to install Facebook-page-scraper. You can do this by typing a pip install command into the terminal:

pip install facebook-page-scraper

Alterations to the Code

Now, let’s make some changes to the scraper files.

To avoid the cookie consent prompt, you’ll first need to revise the driver_utilities.py file. Otherwise, the scraper will continue to scroll the prompt, and you won’t get any results.

1) Use the show command in your console to find the files. It will return the directory in which files are saved.

pip show facebook_page_scraper

2) Now, in driver_utilities.py, add the following code to the end of the __wait_for_element_to_appear definition.

allow_span = driver.find_element(
    By.XPATH, '//div[contains(@aria-label, "Allow")]/../following-sibling::div')
allow_span.click()

The whole function looks like this:

@staticmethod
def __wait_for_element_to_appear(driver, layout):
    """expects driver's instance, wait for posts to show.
    post's CSS class name is userContentWrapper
    """
    try:
        if layout == "old":
            # wait for page to load so posts are visible
            body = driver.find_element(By.CSS_SELECTOR, "body")
            for _ in range(randint(3, 5)):
                body.send_keys(Keys.PAGE_DOWN)
            WebDriverWait(driver, 30).until(EC.presence_of_element_located(
                (By.CSS_SELECTOR, '.userContentWrapper')))
        elif layout == "new":
            WebDriverWait(driver, 30).until(
                EC.presence_of_element_located((By.CSS_SELECTOR, "[aria-posinset]")))
    except WebDriverException:
        # if it was not found,it means either page is not loading or it does not exists
        print("No posts were found!")
        Utilities.__close_driver(driver)
        # exit the program, because if posts does not exists,we cannot go further
        sys.exit(1)
    except Exception as ex:
        print("error at wait_for_element_to_appear method : {}".format(ex))
        Utilities.__close_driver(driver)
    allow_span = driver.find_element(
        By.XPATH, '//div[contains(@aria-label, "Allow")]/../following-sibling::div')
    allow_span.click()

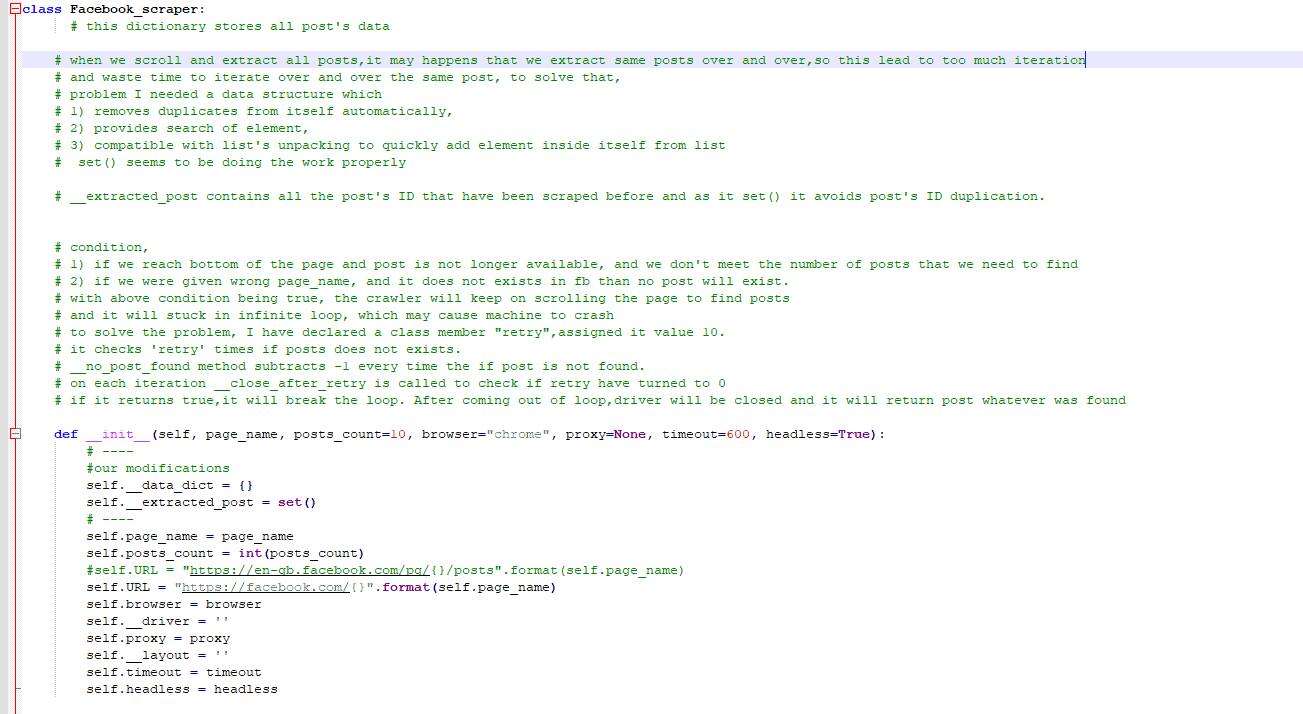

3) If you plan to scrape multiple pages in one run, you must modify the scraper.py file. This update will separate information from different scraping targets into different files.

Move the following lines into the __init__() method. Also, add the self. prefix to the beginning of those lines, so that the variables are created separately for each scraper instance.

__data_dict = {}
__extracted_post = set()

This is what the file should look like after making the change:
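As a simplified illustration of why this change matters, the sketch below uses two hypothetical stand-in classes rather than the library's actual code. It only shows the general Python behavior: a dictionary defined at class level is shared by every instance, while one created inside `__init__()` belongs to a single instance.

```python
class SharedStateScraper:
    # Class-level attribute: one dict shared by every instance
    data_dict = {}

    def __init__(self, page_name):
        self.page_name = page_name

    def add_post(self, post_id, text):
        self.data_dict[post_id] = text


class PerInstanceScraper:
    def __init__(self, page_name):
        self.page_name = page_name
        # Instance-level attributes: each scraper gets its own containers
        self.data_dict = {}
        self.extracted_post = set()

    def add_post(self, post_id, text):
        self.data_dict[post_id] = text
        self.extracted_post.add(post_id)


a, b = SharedStateScraper("page_a"), SharedStateScraper("page_b")
a.add_post("1", "post from page_a")
b.add_post("2", "post from page_b")
print(sorted(b.data_dict))  # ['1', '2'] - posts from both pages are mixed

c, d = PerInstanceScraper("page_a"), PerInstanceScraper("page_b")
c.add_post("1", "post from page_a")
d.add_post("2", "post from page_b")
print(sorted(d.data_dict))  # ['2'] - only page_b's post
```

With shared state, results from one page would leak into the output of the next, which is the mixing the per-instance version avoids.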

Save the updated code, and let’s move on to scraping.

How to Scrape Facebook Posts

This is a real-life example using residential proxies and Selenium. We’re using residential addresses because Facebook is smart enough to identify and block datacenter IPs.

Step 1. Create a new text file in a directory of your choice and rename it to facebook1.py. Then, open the document, and start writing the main code.

1) Import the scraper.

from facebook_page_scraper import Facebook_scraper

2) Then, let’s choose what pages you want to scrape. I picked several public profiles and entered them as string values. Or, you can always scrape one page at a time.

page_list = ['KimKardashian','arnold','joebiden','eminem','SmoshGames','Metallica','cnn']

Step 2. Now, let’s set up our proxies and headless browser.

1) Create a variable for a proxy port with a numeric value. You can use any rotating residential or mobile proxy provider, but today we’ll stick to Smartproxy’s IP pool.

proxy_port = 10001

2) Next, assign the number of posts you want to scrape to the posts_count variable.

posts_count = 100

3) After that, specify the browser. You can either use Google Chrome or Firefox – it’s a question of preference.

browser = "firefox"

4) The timeout variable will end scraping after a set period of inactivity. When assigning it, write time in seconds. 600 seconds is the standard, but you may adjust it to your needs.

timeout = 600

5) Next is the headless variable. Set it to False if you want to watch the scraper in action. Otherwise, set it to True to run the browser in the background.

headless = False

Step 3. Now, let’s run our scraper. If your proxy provider requires authentication, place your username and password in the proxy variable, separated by a colon.

for page in page_list:
    proxy = f'username:password@us.smartproxy.com:{proxy_port}'

After that, still inside the loop, initialize the scraper. Here, the page title, posts count, browser type, and other variables are passed as function arguments.

    scraper = Facebook_scraper(page, posts_count, browser, proxy=proxy, timeout=timeout, headless=headless)

Step 4. The output can be presented in two ways. So, pick one and type the code.

1) The first one is scraping and printing out the result into the console window. For this, you’ll need JSON. Write the following lines.

json_data = scraper.scrap_to_json()

print(json_data)
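If you want to work with the result rather than just print it, the standard json module can turn it into a dictionary. The sketch below assumes scrap_to_json() returns a JSON-formatted string keyed by post ID; the sample string, post IDs, and field names are invented for illustration and may not match the real output.

```python
import json

# Hypothetical stand-in for the string returned by scraper.scrap_to_json();
# the post IDs and field names here are invented for illustration.
json_data = """
{
  "101": {"content": "First post", "shares": 3},
  "102": {"content": "Second post", "shares": 0}
}
"""

posts = json.loads(json_data)  # JSON string -> Python dict

# Walk through the posts one by one
for post_id, post in posts.items():
    print(post_id, post["content"], post["shares"])

# Pretty-print the whole structure for easier reading
print(json.dumps(posts, indent=2, ensure_ascii=False))
```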

2) The second is exporting the results to a CSV file. Create a folder called facebook_scrape_results, or whatever works for you, and store its path in a directory variable.

directory = "C:\\facebook_scrape_results"

Then, with the following two lines, data from each Facebook page will be stored in a file named after that page.

filename = page

scraper.scrap_to_csv(filename, directory)
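Since the output folder should exist before the scraper writes to it, one option is to create it from the script instead of by hand. This is a small sketch using only the standard library; the path below points to the system temp directory purely as an example, so swap in your own location.

```python
import os
import tempfile

# Example location; replace with your own path, e.g. "C:\\facebook_scrape_results"
directory = os.path.join(tempfile.gettempdir(), "facebook_scrape_results")

# Create the folder if it is missing; do nothing if it already exists
os.makedirs(directory, exist_ok=True)

print(os.path.isdir(directory))
```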

Finally, for either method, add proxy rotation code that will rotate your IP after each session. This way, you’ll be safe from IP bans. In our case, this means iterating the port number; other providers may require you to change the session ID.

proxy_port += 1
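The port-based rotation can also be wrapped in a small helper. The sketch below cycles through a fixed range of ports so the counter never runs past the ones available to you. The `proxy_strings` function, the host name `proxy.example.com`, and the port range are placeholders for illustration, not values from any provider.

```python
from itertools import cycle


def proxy_strings(username, password, host, first_port, port_count):
    """Yield proxy strings endlessly, moving to the next port each time."""
    ports = range(first_port, first_port + port_count)
    for port in cycle(ports):
        yield f"{username}:{password}@{host}:{port}"


# Placeholder credentials and endpoint
proxies = proxy_strings("username", "password", "proxy.example.com", 10001, 3)

page_list = ["page_a", "page_b", "page_c", "page_d"]

# Each page gets the next proxy; after the last port, rotation wraps around
assigned = {page: next(proxies) for page in page_list}

for page, proxy in assigned.items():
    print(page, proxy)
```

In the main script, `next(proxies)` would take the place of building the proxy string and incrementing the port by hand.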

Save the code and run it in your terminal. I’ve picked the first output presentation method. So, the results will show up on screen in a few seconds.

Here’s the full script:

from facebook_page_scraper import Facebook_scraper

page_list = ['KimKardashian','arnold','joebiden','eminem','smosh','SmoshGames','ibis','Metallica','cnn']

proxy_port = 10001
posts_count = 100
browser = "firefox"
timeout = 600  # 600 seconds
headless = False

# Dir for output if we scrape directly to CSV
# Make sure to create this folder
directory = "C:\\facebook_scrape_results"

for page in page_list:
    # our proxy for this scrape
    proxy = f'username:password@us.smartproxy.com:{proxy_port}'

    # initializing a scraper
    scraper = Facebook_scraper(page, posts_count, browser, proxy=proxy, timeout=timeout, headless=headless)

    # Running the scraper in two ways:

    # 1
    # Scraping and printing out the result into the console window:
    # json_data = scraper.scrap_to_json()
    # print(json_data)

    # 2
    # Scraping and writing into output CSV file:
    filename = page
    scraper.scrap_to_csv(filename, directory)

    # Rotating our proxy to the next port so we could get a new IP and avoid blocks
    proxy_port += 1

Frequently Asked Questions About Facebook Web Scraping

Facebook scraping is the process of automatically gathering publicly available Facebook data.