In-Depth Look into Popular Proxy APIs (Web Unblockers)

This investigation takes a look at five popular proxy APIs like Bright Data’s Web Unlocker and Oxylabs’ Web Unblocker. We compare their features, pricing models, as well as ability to unblock websites behind major bot protection systems, such as DataDome or Shape.

Key Findings

- Proxy APIs integrate using the hostname:port format and remove the burden of managing proxy servers, running headless browsers, or dealing with blocks.

- All five participants managed to open protected websites over 90% of the time. Shape’s antibot gave them the most trouble, followed by the photo-focused social media platform.

- Proxy APIs allow modifying the request to an extent, such as sending custom headers. Several APIs offer structured data functionality, and proxy vendors like Bright Data and Oxylabs support precise filtering options. Their main drawback is limited interaction with dynamic pages.

- It makes more sense to pay for requests than traffic, unless you’re scraping small pages or API endpoints. Zyte’s dynamic pricing is very efficient for pages that don’t need JavaScript rendering.

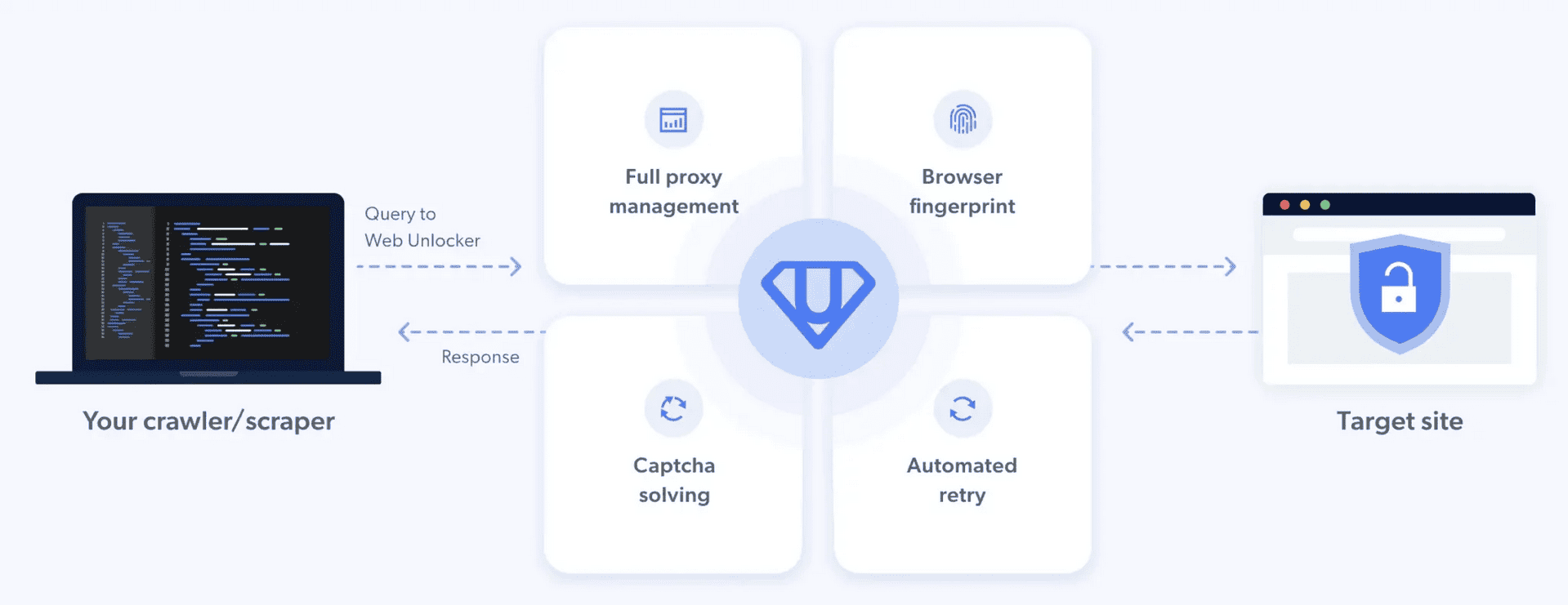

A Primer on Proxy APIs

So in addition to buying a bunch of IPs, you get a tool that takes care of CAPTCHAs, bot protection systems, and often even renders JavaScript on its own. This reduces web scraping’s elaborate cat-and-mouse game to sending requests and receiving the page’s HTML. Most vendors promise a nearly perfect success rate – even with something as simple as a cURL command.

That said, you shouldn’t treat a proxy API as just a CAPTCHA solver. Though they do remove the need to engage with streetlight marking and button holding, this is usually achieved by avoiding the challenge and not brute-forcing through it. Pages that have configured CAPTCHAs to pop-up every time may still need your attention.

How do you use a proxy API? The process differs little from any other rotating proxy server: there’s a hostname and port with authentication details. Then, you can append various parameters – such as location preferences – to the credentials or send them as a custom header. The proxy API intercepts the request and fetches the page based on your configuration.

In our previous research on web scraping APIs, we noticed that some had the ability to integrate as a proxy. But lately proxy APIs have been emerging as a separate category – whether for marketing purposes or based on real technical considerations. You’ll often find them called unblockers or smart proxy managers.

Participants & Methodology

We approached multiple companies with a proposal to participate in the research. Five providers agreed. For transparency, we disclosed which websites we’d test in advance, so it was possible to prepare accordingly.

These are the participants:

- Bright Data – one of the biggest companies in the field of data collection. We tested its Web Unlocker, together with SERP API for Google. While these are two separate tools, Bright Data has a unified system which lets customers use any product without getting a new subscription. Bright Data’s been offering Web Unlocker for at least several years, and it was the company’s main growth driver in 2022.

- Crawlbase – a well established provider of web scraping tools with over 45,000 paying customers. We tested Crawling API – primarily a web scraping API with a proxy mode. We chose it over Crawlbase’s designated proxy API, Smart Proxy, because Crawling API allows paying as you go and doesn’t lock features behind pricing tiers. Otherwise, there’s little difference between the two – they even share the same parameters.

- Oxylabs – Bright Data’s main competitor in the proxy server market with excellent infrastructure. We tried Web Unblocker – a not-exactly-new proxy API that looks new because it rebranded from Next-Gen Residential Proxies in early 2023.

- Smartproxy – another major proxy provider looking to branch out into web scraping tools. We tested Site Unblocker – a very recent proxy API launched in July 2023.

- Zyte API – a long-standing data collection company that focuses on e-commerce and maintains several popular open source tools like Scrapy. We wanted to use Smart Proxy Manager, which is one of the oldest proxy APIs. But it turns out Zyte is sunsetting the tool. So we ended up testing Zyte’s API after an unintentional bait-and-switch. As a silver lining, Zyte plans to add a proxy mode to Zyte API soon.

Testing Methodology

Unblocking Popular Websites & Anti-Bot Systems

We wanted to see how good the APIs are at their main job – unblocking challenging websites. We selected seven targets protected by various anti-bot systems:

| Anti-bot | Rendering required? | |

|---|---|---|

| Amazon | In-house CAPTCHA, returns empty 200-coded responses | ❌ |

| reCAPTCHA | ❌ | |

| Photo-focused social media network | In-house protection, asks for login if triggered | ✅ (We accessed the web interface and not the GraphQL endpoint) |

| Kohls | Akamai | ✅ |

| Nordstrom | F5/Shape | ✅ |

| Petco | DataDome, Cloudflare | ❌ |

| Walmart | Akamai, FingerprintJS, PerimeterX, ThreatMetrix | ❌ |

Which Target Was the Hardest to Unblock?

That’d be Nordstrom, which is behind Shape. In addition, it took the longest to return results, even though Kohls also required JavaScript and its pages weighed 3 MB – five times more. The social media network was another tough cookie for some, redirecting the APIs to its login page.

| Avg. success rate | Avg. response time | |

|---|---|---|

| Kohls | 99.01% | 28.97 s |

| Amazon | 98.65% | 5.73 s |

| Walmart | 96.38% | 12.68 s |

| 96.00% | 5.41 s | |

| Petco | 95.48% | 11.20 s |

| Photo-focused social media network | 92.19% | 19.74 s |

| Nordstrom | 80.16% | 33.1 s |

How Did the APIs Do?

All participants managed to succeed at least nine times out of ten, so the answer is – pretty well:

- Zyte had a particularly strong showing, both in terms of success rate and response time. However, the provider did have to fix its social media scraper during our tests to perform as well as it did.

- Bright Data was amazingly fast with targets that required JavaScript, but it also slowed down to a crawl with Petco, which others had no issues with.

- Oxylabs and Smartproxy prioritized success rate and would’ve aced the tests if not for Nordstrom. However, their headless implementations were among the slowest.

- Crawlbase was relatively fast, but it failed more requests across the board, even with non-problematic targets like Google.

| Oxylabs | Bright Data | Smartproxy | Zyte | Crawlbase | |

|---|---|---|---|---|---|

| Avg. success rate | 93.37% | 94.65% | 93.79% | 97.82% | 90.00% |

Hover on a label to highlight, click to hide.

| Oxylabs | Bright Data | Smartproxy | Zyte | Crawlbase | |

|---|---|---|---|---|---|

| Avg. response time | 20.43 s | 15.05 s | 20.05 s | 11.70 s | 16.21 s |

Hover on a label to highlight, click to hide.

Breakdown by Individual Target

Amazon is one of the biggest web scraping targets. However, it’s not that hard to unblock – companies are still able to scrape the platform at scale with datacenter addresses. This shows looking at the results – everyone except Zyte had nearly perfect success rates.

| Avg. success rate | Avg. response time | |

|---|---|---|

| Crawlbase | 100% | 11.05 s |

| Bright Data | 99.54% | 5.59 s |

| Oxylabs | 99.17% | 4.14 s |

| Smartproxy | 99.08% | 3.99 s |

| Zyte | 95.64% | 3.90 s |

Google is another major target that’s inspired a whole subset of web scrapers called SERP APIs. While more reliant on IP quality, its protection is also not that challenging to bypass. Only Crawlbase had some issues that manifested in 520 error codes.

| Avg. success rate | Avg. response time | |

|---|---|---|

| Zyte | 100% | 2.16 s |

| Bright Data | 100% | 4.63 s |

| Smartproxy | 99.94% | 6.91 s |

| Oxylabs | 99.91% | 6.66 s |

| Crawlbase | 78.61% | 6.71 s |

There are two ways to access the photo-focused social media platform: via a hidden API endpoint or the front-end. The former is actually preferred, as it doesn’t require JavaScript rendering. But we wanted to load the website fully.

This social media platform was among the hardest nuts to crack. Bright Data was extremely fast, but 15% of the requests were redirected to login. Oxylabs and Smartproxy loaded the pages nearly perfectly at the expense of speed. Crawlbase accomplished neither very well, and Zyte aced both.

| Avg. success rate | Avg. response time | |

|---|---|---|

| Zyte | 99.61% | 19.78 s |

| Smartproxy | 99.79% | 27.92 s |

| Oxylabs | 99.72% | 28.39 s |

| Bright Data | 85.84% | 5.16 s |

| Crawlbase | 76.01% | 17.45 s |

Kohls relies on JavaScript to load, which reflected in the response time. Other than that, Akamai failed to pose a challenge for any proxy API. Bright Data’s Web Unlocker used some voodoo magic (or caching) to outpace all competitors by five to eight times.

| Avg. success rate | Avg. response time | |

|---|---|---|

| Bright Data | 99.78% | 4.98 s |

| Smartproxy | 99.11% | 41.89 s |

| Zyte | 99.10% | 29.44 s |

| Crawlbase | 98.38% | 25.44 s |

| Oxylabs | 98.66% | 43.08 s |

Nordstrom is protected by Shape and requires JavaScript – a scary combination given that Shape’s one of the hardest anti-bot systems to overcome. Crawlbase allowed us to run only one request per minute, making the scraping process significantly more drawn out than we intended it to be.

Oxylabs and Smartproxy found Nordstrom especially challenging; we saw a large number of 520 codes and internal errors signaling too many retry attempts. Overall, Zyte did the best job in both performance metrics.

| Avg. success rate | Avg. response time | |

|---|---|---|

| Zyte | 99.38% | 20.42 s |

| Crawlbase | 89.16% | 40.58 s |

| Bright Data | 88.98% | 39.97 s |

| Smartproxy | 62.07% | 31.34 s |

| Oxylabs | 61.20% | 33.19 s |

DataDome was a surprisingly easy obstacle to overcome. Add in the fact that Petco doesn’t need JavaScript, and you can scrape it quickly and efficiently. At least that’s the case with Smartproxy and Oxylabs. For some reason, Bright Data struggled, especially looking at the response time. Maybe it had to spin up headless instances to unblock the website?

| Avg. success rate | Avg. response time | |

|---|---|---|

| Smartproxy | 99.88% | 2.79 s |

| Oxylabs | 99.56% | 2.91 s |

| Zyte | 94.68% | 3.49 s |

| Crawlbase | 91.75% | 5.96 s |

| Bright Data | 91.55% | 40.82 s |

Walmart actually uses four different anti-bot systems, which is insane. The company evidently doesn’t want to be scraped. That said, even these extreme measures failed to stop the proxy APIs. Some took time to retrieve responses, while others like Zyte were extremely fast. But in general, all tools sustained a success rate of over 95%.

| Avg. success rate | Avg. response time | |

|---|---|---|

| Bright Data | 96.83% | 4.23 s |

| Smartproxy | 96.64% | 25.50 s |

| Zyte | 96.53% | 2.69 s |

| Crawlbase | 96.50% | 6.31 s |

| Oxylabs | 95.38% | 24.66 s |

Features and Limitations of Proxy APIs

Proxy APIs are designed to be a drop-in replacement for proxy servers. Accordingly, their main expected features are location targeting and ability to establish sessions.

As we can see below, all participants support this. Oxylabs, Smartproxy, and Bright Data are foremost proxy server providers, so they can afford offering granular location settings that reach co-ordinate and ISP level.

| Oxylabs | Bright Data | Smartproxy | Zyte | Crawlbase | |

|---|---|---|---|---|---|

| Localization | Countries (all), states, cities, co-ordinates | Countries (all), states, cities, ASNs | Countries (all), states, cities, co-ordinates | Countries (50) | Countries (26) |

| Sessions | ✅ | ✅ | ✅ | ✅ | ✅ |

| Proxy selection | Automated | Automated | Automated | Automated | Automated, with an option to route via TOR |

But proxy APIs aren’t just regular proxies. They mediate all communication with the target, which means you’re inevitably giving away some level of control. Let’s see how you can interact with the APIs and what’s out of their reach. We’ll omit Zyte for now, as it’s unclear which features will carry over to the proxy format.

| Oxylabs | Bright Data | Smartproxy | Crawlbase | |

|---|---|---|---|---|

| JavaScript | ✅ (options to render JS, return page screenshot) | ✅ (handled automatically) | ✅ (options to render JS, return page screenshot) | ✅ (different API token, option to return page screenshot) |

| Request modifiers | Custom headers, cookies | Custom parameters for search engines | Custom headers, cookies | Custom headers, cookies |

| Page interactions | POST requests | ❌ | POST requests | POST requests, wait for load, scroll |

| Other | Asynchronous requests, parsers for search engines | CSS selectors, parsers for select websites |

Bright Data’s implementation is the purest – it doesn’t expose anything beyond expected proxy functionality, with the exception of an asynchronous API endpoint (no longer a proxy!). The tool even decides when to render JavaScript on its own, which makes it very simple to use.

Bright Data’s specialized SERP API is a different beast – it offers custom parameters for building the request, such as search query, pagination, and location. It can also parse various properties of Google and other search engines for structured data. Most of this is achieved by appending parameters to the URL.

Search engines aside, the proxy APIs of Oxylabs and Smartproxy are more versatile: they accept custom cookies and request headers. There’s also an option to send POST requests with form or other data. And, you can opt to receive a screenshot instead of the HTML source.

Crawlbase’s Smart Proxy inherits the parameters from the provider’s other APIs. So in addition to achieving everything mentioned above, you can also interact with the page by waiting for it to load or scrolling down. Like Bright Data, Crawlbase offers parsers for several search engines, social media, and e-commerce websites, with an option to extract particular CSS elements from any website.

Main Limitation of Proxy APIs

The main drawback of proxy APIs lies in their inflexibility with JavaScript-dependent content. Most can render JavaScript (which is great), but they don’t expose the parameters needed to interact with the page. So you may not be able to properly scrape some websites that need extra time to load or depend on scroll events to reveal all content.

You’d think this could be solved by simply plugging the proxy API into Puppeteer or another headless library. But with a few exceptions, the APIs are incompatible by design.

Navigating the Different Pricing Models

Though some providers categorize proxy APIs as proxy servers (and not web scrapers), it doesn’t mean they follow the same conventions. Let’s have a look at the table below:

| Oxylabs | Bright Data | Smartproxy | Crawlbase | Zyte | |

|---|---|---|---|---|---|

| Format | Traffic | Successful requests | Traffic | Successful requests | Successful requests |

| Modifiers | ❌ | Premium domains, city & ASN targeting | ❌ | JS rendering | Dinamically adjusted by target difficulty, JS rendering |

| Max price difference | x1 | x2 + $4/CPM | x1 | x2 | x30+ |

There are several things to take away. First, that there’s no one pricing format. Three providers charge for successful requests, which is the standard for web scraping tools. Oxylabs and Smartproxy, on the other hand, try to continue with the proxy paradigm where residential and mobile proxy networks usually monitor traffic use.

The second aspect to look at is price modifiers. The most frequent multiplier is JavaScript rendering, as a full browser is computationally much more taxing than an HTTP library. Once you enable it, Crawlbase doubles the rate, and Zyte becomes an order of magnitude more expensive.

Zyte’s pricing is interesting in general. The provider adjusts request cost dynamically, based on the website’s difficulty and optional parameters. This means your rates may become more or less expensive over time, even when accessing the same website. There’s a calculator on the dashboard where you can check how much a request will cost.

Bright Data also segments pricing based on targets, but the approach here is simpler – you can enable a list of premium domains like Zillow or Nordstrom that cost an additional $4 per 1,000 requests.

Which Approach Makes More Sense?

It depends on the provider’s rates. But given that proxy APIs are supposed to be an upsell to residential proxies, they tend to be pretty expensive when metered by traffic. In this case, we’re partial to request-based pricing, especially if the website has large page sizes.

Among the five participants, Zyte’s dynamic pricing proved to be the least expensive in scenarios that didn’t require JavaScript. Otherwise, the other request-based providers gave it a tough competition. The traffic-based tools were less cost-efficient, but we could see them shine with small pages or when scraping API endpoints.