Zyte’s 2022 Extract Summit: A Recap

Organizational Matters

We weren’t able to attend the event live, so we can only comment on its virtual aspect. We’d like to thank Zyte for graciously providing us with a free ticket.

Zyte ran ticket sales through Eventbrite, which offered a decently streamlined experience. The only confusing moment came after completing the purchase flow, when it wasn’t clear what would happen next. Thankfully, Zyte soon sent an email with a link to the streaming page.

The livestream itself was hosted on YouTube. Since the stream could easily be opened directly on the platform, we imagine those £25 tickets went a long way in some companies. There was also a Slido widget for asking questions.

The virtual event started with serious technical issues, making online viewers miss the first two presentations. But once the livestream was brought online, things went smoothly.

The Talks

Extract Summit had a whopping 12 talks in one day – the same number as OxyCon’s two days combined. They covered a variety of topics ranging from data collection trends and developments to the more practical questions of running and scaling web scraping infrastructure.

Here’s the full list:

- State of the Web Data Industry in 2022

- Practical machine learning to accelerate data intelligence

- How to ensure high quality data while scaling from 100 to 100M requests/day

- Sneak peek at the new innovations at Zyte

- How the data maturity model can help your business upscale

- Architecting a scalable web scraping project

- Ethics and compliance in web data extraction

- Crawling like a search engine

- Challenges in extracting web data for academic research

- Data mining from a bomb shelter in Ukraine

- How to source proxy IPs for data scraping

- The future of no-code web scraping

Talk 1: State of the Web Data Industry in 2022

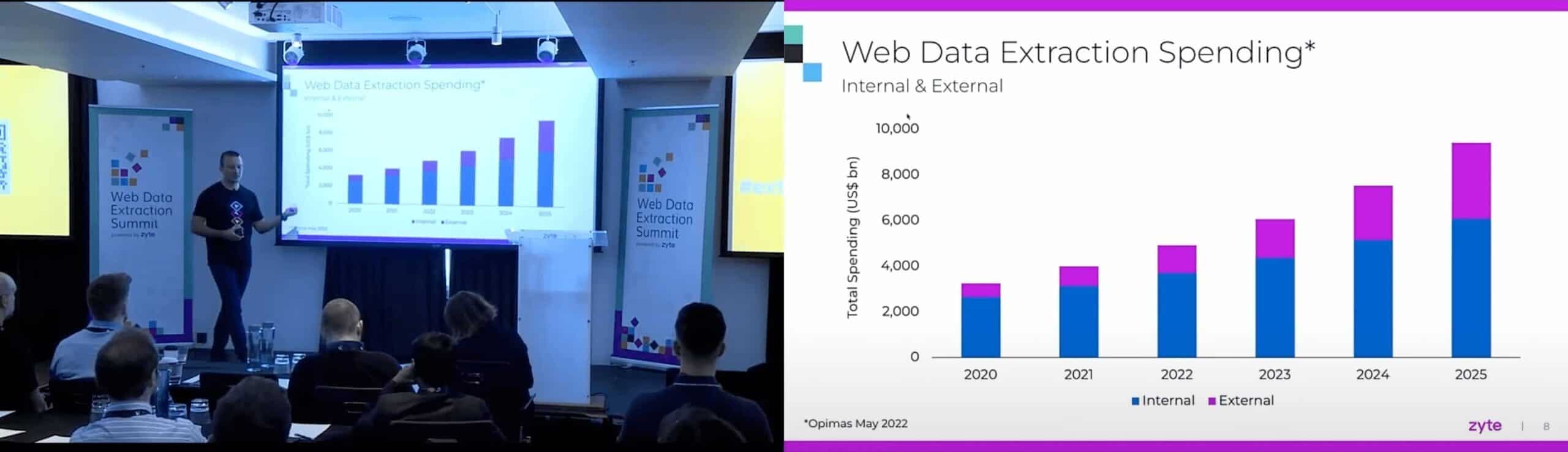

As has become tradition, the conference kicked off with a presentation by Shane Evans, Zyte’s CEO. Shane briefly ran through the data collection trends he had identified since the last Extract Summit. His 12-minute talk offered quite a few interesting (though sometimes predictable) insights.

To summarize very briefly: companies have started treating data as an increasingly strategic priority. Some are even implementing organizational changes to build dedicated data teams. Furthermore, the legal status of web scraping has become clearer, which helps with adoption. According to a study cited in the talk, spending on web data should grow at a mid-double-digit rate year over year.

The presentation featured a breakdown of Zyte’s clients by industry and use case. Unsurprisingly, e-commerce took first place, but Zyte also has quite a few customers in finance. Finally, Shane pondered the build-versus-buy dilemma, highlighting the challenges of modern data collection.

Talk 2: Practical machine learning to accelerate data intelligence

A talk by Peter Bray, CEO of Versionista. His service monitors website changes for clients in pharmaceuticals and other industries. Peter gave a utilitarian demonstration of how off-the-shelf machine learning tools can create value at scale and at limited cost.

As a change monitoring service, Versionista needed a way to categorize content so that it could provide insights into the changes. Peter showed how his team applied Google Vertex AI, Elasticsearch, and named entity recognition to create models for various page and content types. He also gave advice on simplifying data labeling and on handling cases where natural language processing lacks context.

We feel like this presentation goes very well with Allen O’Neill’s crash course on machine learning. Together, they introduce the machine learning techniques relevant for web scraping and give a concrete example of how companies apply them in real life. Consider watching both.

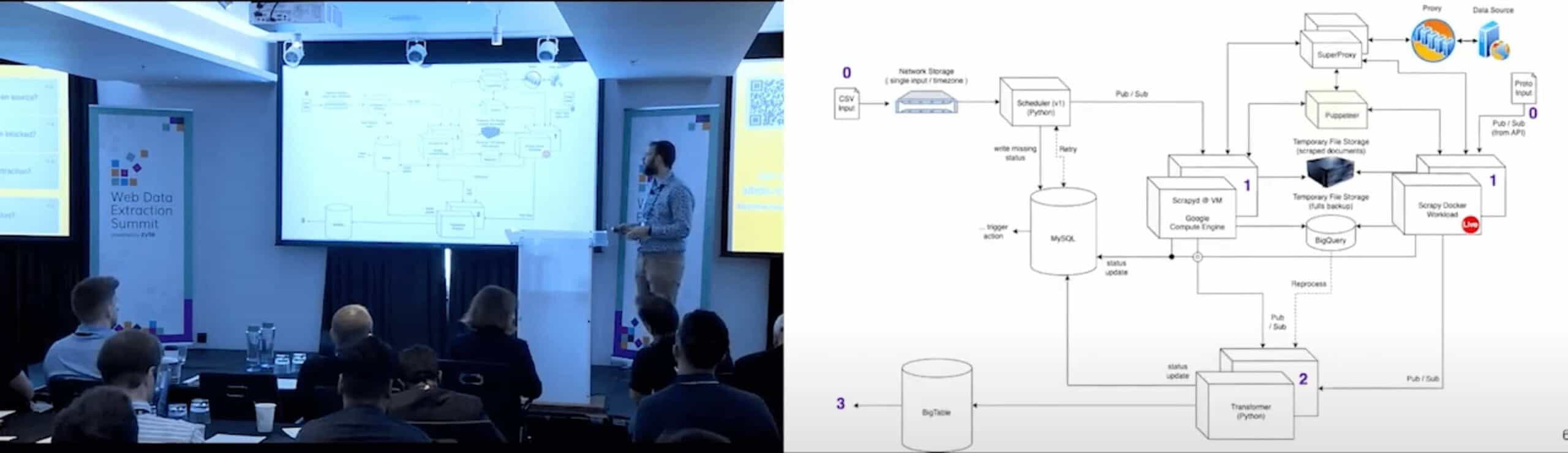

Talk 3: How to ensure high quality data while scaling from 100 to 100M requests/day

A walkthrough of how a company’s web scraping infrastructure evolved over 10 years in business. It was an interesting presentation that moved step by step, explaining the reasoning behind each step and showing increasingly complex diagrams of the company’s infrastructure. Worth a watch.

The speaker, Glenn de Cauwsemaecker from OTA Insight, gave the same talk at OxyCon, so you can find our more detailed impressions in our OxyCon recap.

Talk 4: Sneak peek at the new innovations at Zyte

The (understandably) longest talk, in which Zyte’s CPO Iain Lennon and Head of Development Akshay Philar introduced the company’s new developments.

In brief, Zyte spent the year working on three issues:

- Solving the site ban problem. According to Zyte, the tech is there, but the real pain is balancing effectiveness and cost.

- Creating a browser designed for web data extraction.

- Overcoming the scaling challenges that stand in the way of faster growth.

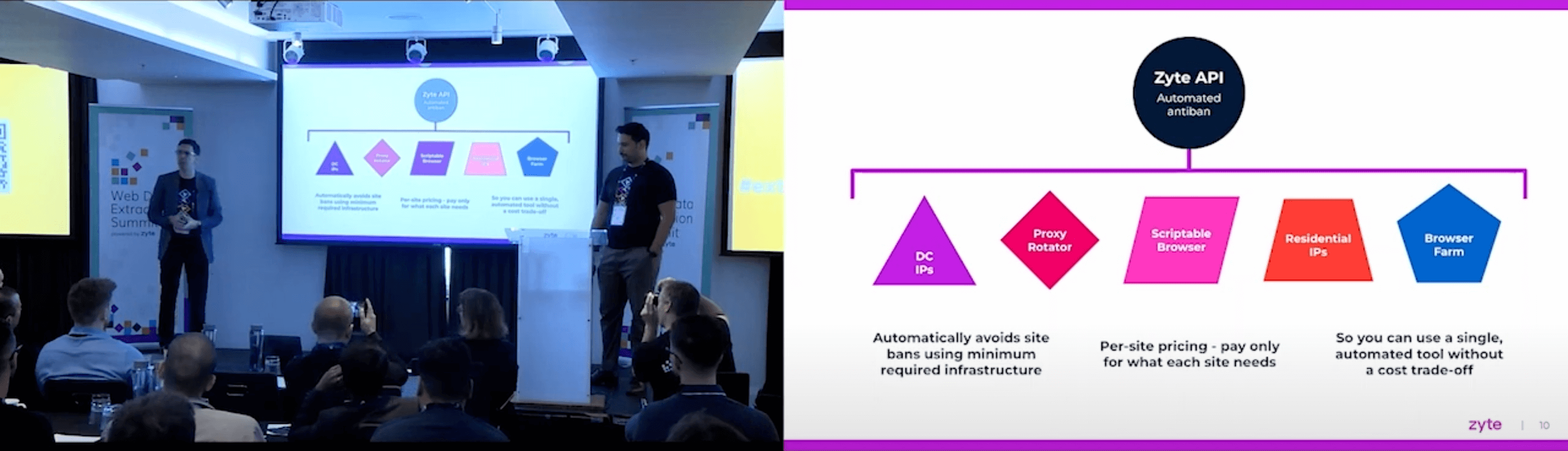

To address the first two, the company is releasing Zyte API. It automatically selects proxies, solves CAPTCHAs, and runs headless browsers if needed to ensure scraping success. In this, it strongly resembles Bright Data’s Web Unlocker or Oxylabs’ APIs. However, Zyte also brings some innovations to differentiate it from the competition:

- Different targets will have dynamic pricing based on how much it costs to extract data from them. You’ll be able to see this information in the dashboard and play around with various parameters to reduce the expenses.

- The API will let you perform various page actions on JavaScript-dependent pages, such as scrolling or clicking buttons.

- Zyte will provide a cloud-based IDE for scripting browser actions.

For the third issue, Zyte is introducing crawling functionality exposed via the Zyte API. The company will maintain custom spiders for high-volume sites and use machine learning for the long tail. This functionality will require no contracts or minimum commitment, which should make it great for quick prototyping.

Zyte API is set for release on October 27, with web crawlers coming in early 2023.

Talk 5: How the data maturity model can help your business upscale

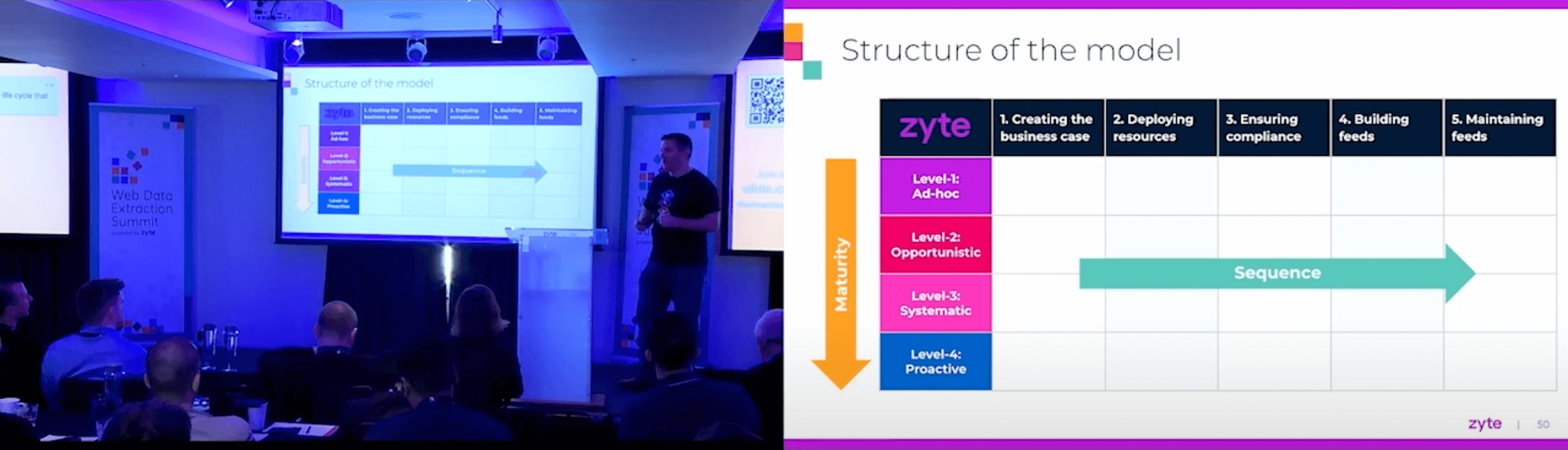

Another talk by Zyte. James Kehoe, a product manager, presented a data maturity model that the company created after interviewing 40 industry representatives. The model aims to help businesses identify where they stand in their data collection operation and what they can expect going forward. It was a business-oriented talk with very fast delivery.

James outlined a grid: its columns listed the five sequential steps of a data collection operation, and its rows the maturity levels. He gradually went through each step, explaining what it looks like at different maturity levels. He then showed where the interviewees placed themselves on the grid.

In a nutshell, the model looked pretty useful, even if a little theoretical. The talk should translate well into a blog post if Zyte ever decides to publish one.

Talk 6: Architecting a scalable web scraping project

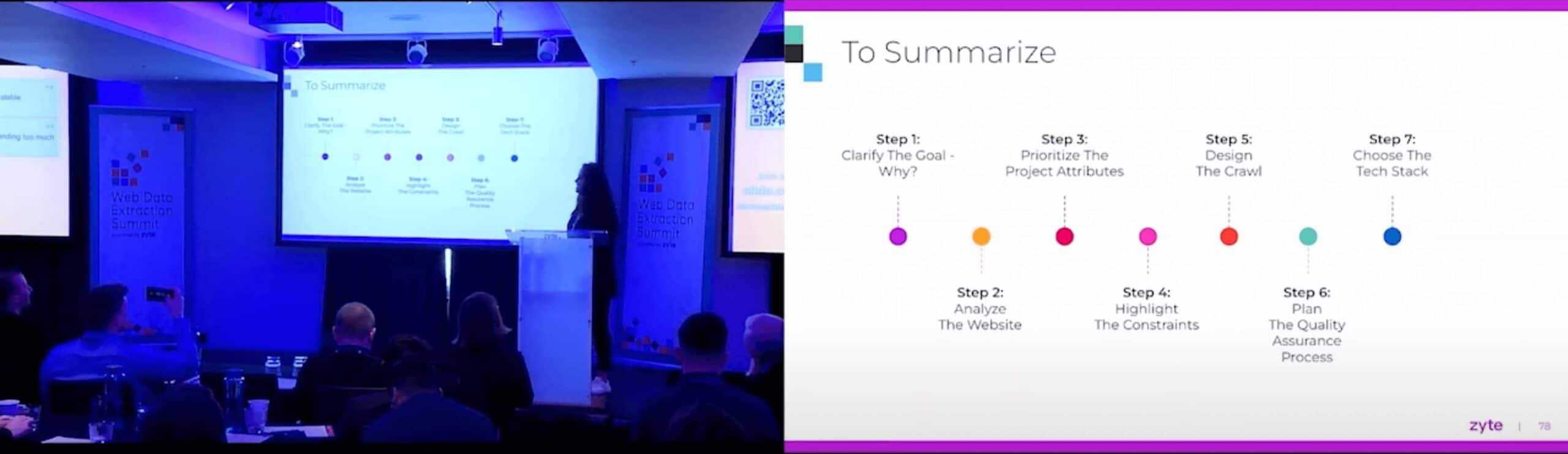

Another presentation from Zyte, this one given by developer advocate Neha Setia Nagpal. It resembled the previous talk in that the presenter introduced a framework. But where the data maturity model is meant for evaluating a data scraping operation, this one aims to help design one.

Neha outlined eight steps, along with best practices, that should help developers architect a scalable solution:

- Clarify the goal.

- Analyze the website.

- Prioritize the project attributes like scalability and extensibility.

- Highlight the constraints.

- Design the crawl.

- Ensure data quality.

- Choose the tech stack.

- Brace for impact.

Overall, it’s a handy list of things to consider, especially if you’re moving from ad-hoc scraping to a sustained operation or preparing to collect data in a company setting.

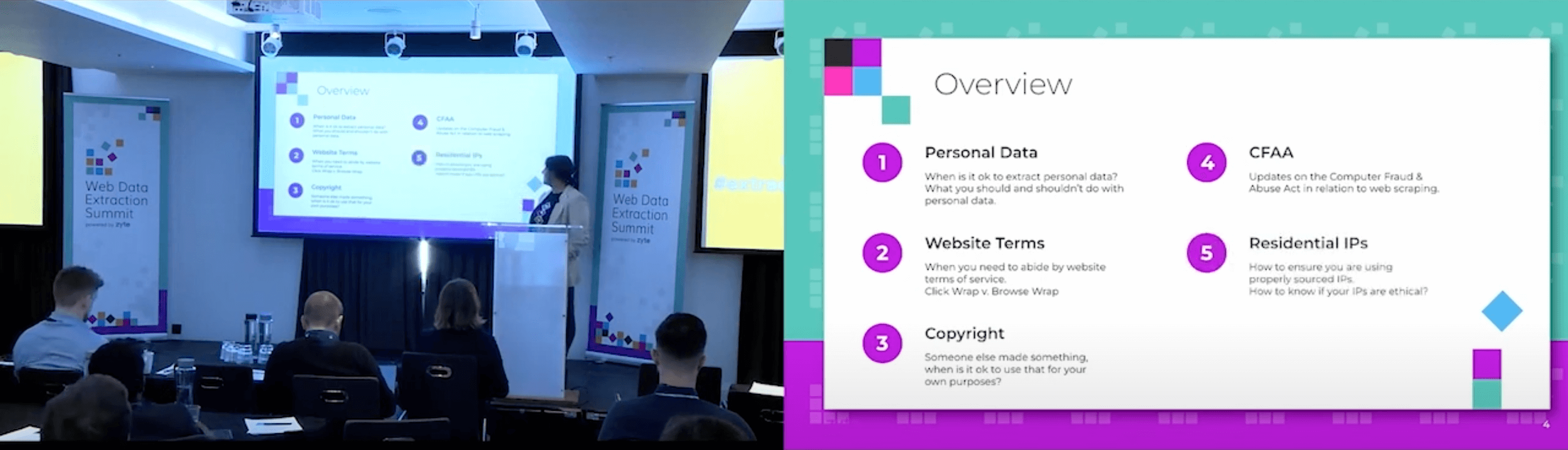

Talk 7: Ethics and compliance in web data extraction

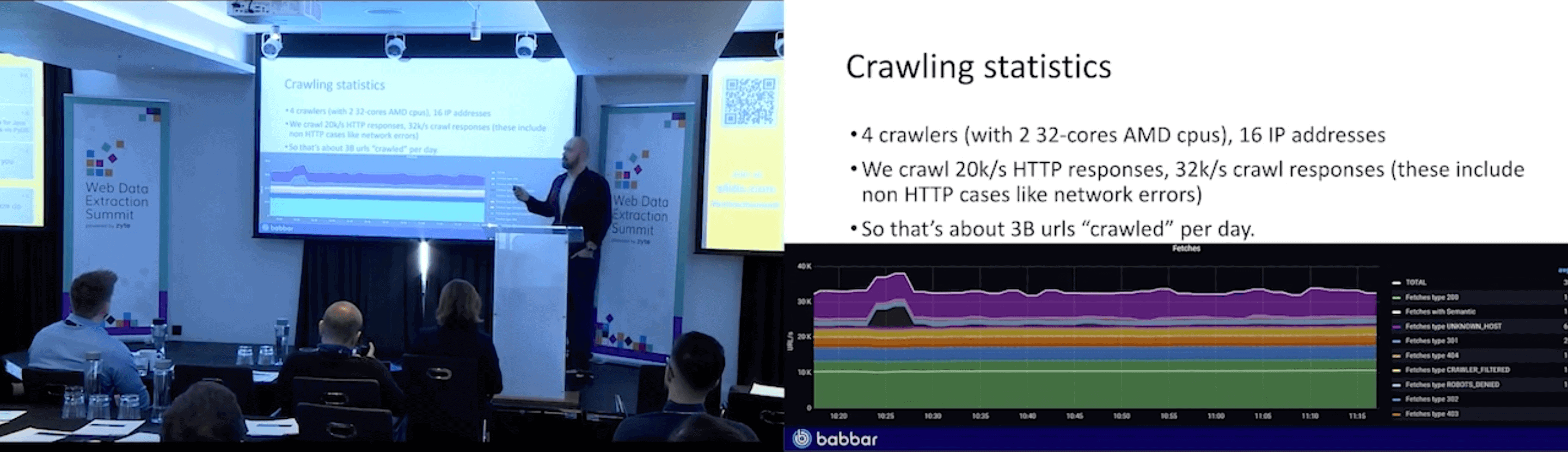

Talk 8: Crawling like a search engine

A talk by Guillaume Pitel, CTO of Babbar. His company crawls over 1 billion pages daily to help SEO marketers with their backlinking efforts. Like Glenn’s presentation, this one recounted the company’s journey to its current scale. It should interest companies doing heavy web crawling, though perhaps less so regular web scrapers.

According to Guillaume, much of the web is crap. So he and his team had to figure out how to continuously crawl only the most interesting parts of the web, compute PageRank-like metrics on the web graph, and then analyze the context to build a semantically oriented index.
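For readers unfamiliar with the idea, here is a minimal Python sketch of classic PageRank computed by power iteration, the textbook algorithm that "PageRank-like" metrics take their name from. It is not Babbar’s implementation, which we can’t speak to; the `pagerank` function, the damping factor of 0.85, and the toy graph format (a dict mapping each page to the pages it links to) are our own assumptions.

```python
from __future__ import annotations


def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 50) -> dict[str, float]:
    """Classic PageRank via power iteration on a small in-memory link graph.

    `links` maps each page to the pages it links to (toy format, assumed).
    """
    # Collect every page, including ones that only appear as link targets.
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Random-jump share that every page receives.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        # Pages without outgoing links spread their rank evenly over all pages.
        dangling = sum(rank[page] for page in pages if not links.get(page))
        for page in pages:
            new_rank[page] += damping * dangling / n
        # Each page splits the rest of its rank equally among its link targets.
        for source, targets in links.items():
            if targets:
                share = damping * rank[source] / len(targets)
                for target in targets:
                    new_rank[target] += share
        rank = new_rank
    return rank
```

For example, with the graph `{"a": ["b", "c"], "b": ["c"], "c": ["a"], "d": ["c"]}`, page `c` ends up with the highest score, since every other page links to it. An in-memory loop like this only suits small graphs; billions of URLs call for a very different architecture, which is what much of the talk was about.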

A good part of the talk covered the technical implementation: how Guillaume’s team adapted the BUbiNG crawler to their needs, managed the WWW graph, and architected the whole system to handle billions of URLs per day. Curiously, the infrastructure runs on Java, uses just 16 IPs, and doesn’t process dynamic content for now.

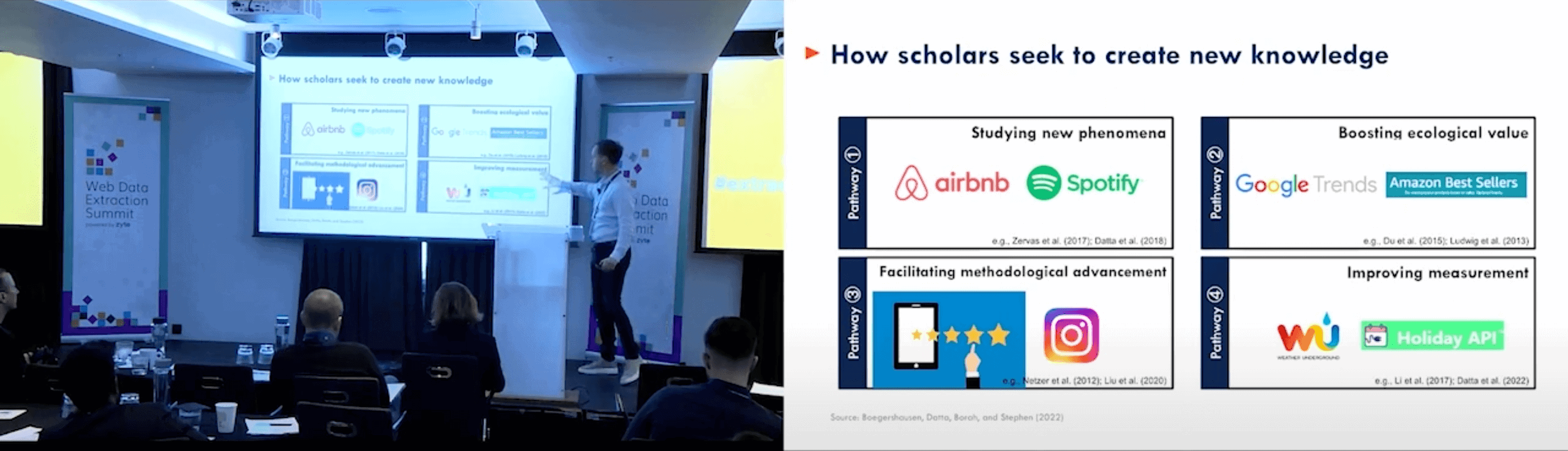

Talk 9: Challenges in extracting web data for academic research

A refreshing perspective that we rarely see at similar conferences. Dr. Hannes Datta from Tilburg University spoke about the whys and hows of data collection in a university setting. It’s worth watching to broaden your horizons and understand how different the constraints can be for others.

It turns out that web data is getting increasingly popular in fields like marketing research: in 2020, it played a part in 15% of all studies there. Scientists use this data to investigate new phenomena, improve methodology, and carry out various other tasks. Hannes himself studied the impact of Spotify’s playlist algorithms when the platform was still emerging.

Dr. Datta (a satisfyingly fitting name) brought forth some of the peculiar challenges that academics experience. For example, they care deeply about the validity of data, which can be impacted by website changes or even personalization algorithms when accessing content from a residential IP. Scientists also have to worry about legal and ethical questions. All in all, there are many considerations to take into account, and Hannes described a good deal of them.

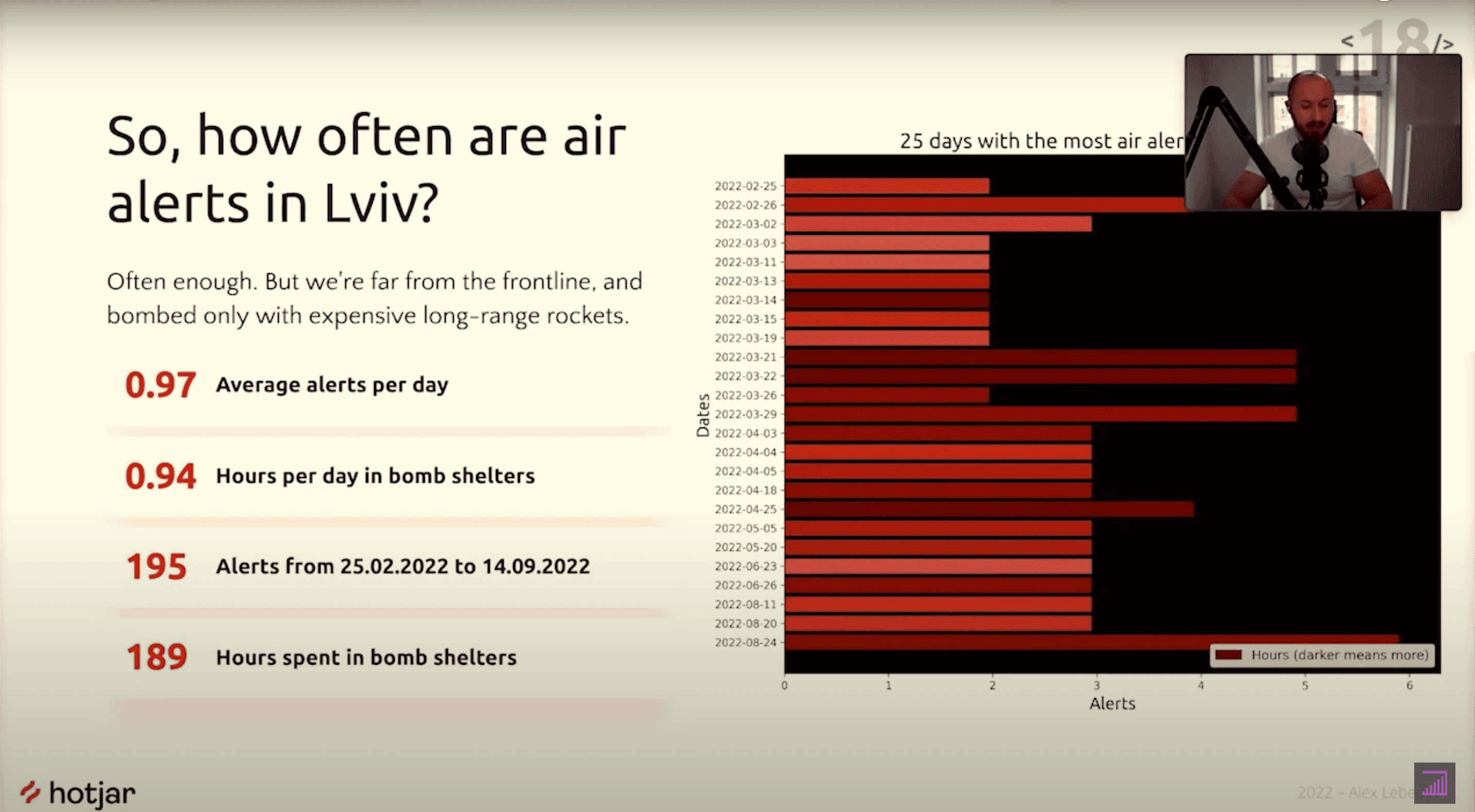

Talk 10: Data mining from a bomb shelter in Ukraine

A touching presentation by Zyte’s Ukrainian software engineer Alexander Lebedev, who got stranded in the country when the war started. Being the engineer he is, Alex decided to take a data-driven approach to organizing his sleep and other activities with the fewest interruptions.

In essence, Alex wrote a Telegram scraper that collected air alerts from two channels. He then plotted how often those alerts occurred at different times of day. Once a siren sounds, people rush to bomb shelters, so Alex reasoned that uncovering the patterns could help his family organize their life around them.
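We don’t know the details of Alex’s implementation, so the following is only a sketch of the analysis step under our own assumptions: the alert start times have already been scraped from the Telegram channels and parsed into Python `datetime` objects in local time. The hypothetical `alerts_by_hour` function buckets them by hour of day, and the hypothetical `print_histogram` draws a crude text chart of the result.

```python
from __future__ import annotations

from collections import Counter
from datetime import datetime


def alerts_by_hour(timestamps: list[datetime]) -> list[int]:
    """Count alerts per hour of day (list index 0-23).

    Assumes timestamps are already in local time; convert them first if the
    scraper stored UTC, or the peaks will be shifted.
    """
    counts = Counter(ts.hour for ts in timestamps)
    # Counter returns 0 for hours with no alerts.
    return [counts[hour] for hour in range(24)]


def print_histogram(per_hour: list[int]) -> None:
    """Print a crude text bar chart, one row per hour."""
    for hour, count in enumerate(per_hour):
        print(f"{hour:02d}:00 {'#' * count} ({count})")
```

Bucketing by hour of day deliberately discards the date, which is what you want for spotting daily rhythms; tracking changes over time, as Alex also did, would mean grouping by date or week as well.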

Alex was in a relatively calm region, so we’re not sure how useful this data actually was in his case. But the project definitely helped him keep himself occupied and gain control over the uncertainty. Alex also managed to extract some broader insights about the bombing frequency of different regions and their change over time.

It was definitely one of the more unique demonstrations of the uses of web scraping.

Talk 11: How to source proxy IPs for data scraping

A talk by Neil Emeigh, CEO of Rayobyte. Recently rebranded from Blazing SEO, Rayobyte controls hundreds of thousands of datacenter proxies. Neil shared insights into how his company sourced those IPs and what customers should look out for. The delivery was over the top at times (throwing money at the audience? Come on.), but it made the talk entertaining to watch.

Neil spoke about renting versus buying addresses and the importance of IP diversity and ASN quality. He gave some strategies for working with datacenter proxies (choosing between control and diversity), together with interesting tidbits of knowledge. Did you know that Google can ban a subnet after as few as 200 requests per hour? That you should never get AFRINIC IPs? Or that IPv6 proxies suck (for now)? Well, there are good reasons why.
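If you want to act on that subnet figure, one option is to throttle per subnet rather than per IP. Below is a minimal Python sketch of a client-side sliding-window limiter that groups IPv4 addresses into /24 networks. The class name, the /24 grouping, and the one-hour window are our own assumptions for illustration, not how Rayobyte or Google do things. Since 200 requests per hour was quoted as a level at which bans can already happen, the budget is left as a required parameter, and you would presumably pick a value comfortably below it.

```python
from __future__ import annotations

import ipaddress
import time
from collections import defaultdict, deque


class SubnetRateLimiter:
    """Caps requests per subnet (assumed /24) within a sliding time window."""

    def __init__(self, max_requests: int, window_seconds: float = 3600.0,
                 prefix_len: int = 24) -> None:
        # No default budget on purpose: safe limits depend on the target.
        self.max_requests = max_requests
        self.window = window_seconds
        self.prefix_len = prefix_len
        # Send times of recent requests, keyed by subnet.
        self._sent: dict[ipaddress.IPv4Network, deque[float]] = defaultdict(deque)

    def _subnet(self, ip: str) -> ipaddress.IPv4Network:
        # strict=False turns a host address into its enclosing network.
        return ipaddress.ip_network(f"{ip}/{self.prefix_len}", strict=False)

    def try_acquire(self, ip: str, now: float | None = None) -> bool:
        """Return True and record the request if the IP's subnet has budget left."""
        now = time.monotonic() if now is None else now
        sent = self._sent[self._subnet(ip)]
        # Forget requests that have slid out of the window.
        while sent and now - sent[0] >= self.window:
            sent.popleft()
        if len(sent) >= self.max_requests:
            return False
        sent.append(now)
        return True
```

A scraper would call `try_acquire(proxy_ip)` before each request and route the request through a proxy in a different subnet (or wait) when it returns `False`. Keying on the subnet rather than the individual IP is the point: spreading traffic across many addresses in the same /24 doesn’t help if the ban applies to the whole range.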

To top it all off, Neil told a story of how the FBI came to his house and started interrogating him about proxies. Turns out, the IP address industry is pretty controversial, especially if you’re from Africa. But you’ll learn more by watching the talk.

Talk 12: The future of no-code web scraping

The final speaker, Victor Bolu, runs a no-code data collection tool called Web Automation. He gave an overview of the types, potential, and limitations of no- and low-code web scraping tools, all the while trying to persuade an audience of web scraping professionals that they’re the future.

That didn’t exactly work out: in a poll, something like 85% voted that no-code tools won’t replace code-based web scrapers anytime soon. But then again, maybe it wasn’t the right question to ask. Victor himself spent a lot of time speaking about expanding the market, which no-code has a fair chance to achieve.

Whatever your stance is, the talk provided plenty of material for understanding the landscape and selling points of no-code data collection, especially if you’re thinking of introducing a similar tool of your own.

Conclusion

Despite the initial technical issues, we believe that Zyte held a successful conference that was well worth the entry fee. We’ll be waiting for the next Extract Summit with excitement – maybe we’ll even attend it live? See you there!