OxyCon Day 2 Recap

Talk 1: A Crash Course in Machine Learning for Text Using Web Scraping

A fascinating introduction to machine learning by Allen O’Neill, CTO at Dataworks. Fittingly for a PhD, Allen’s presentation felt almost like a university lecture. The talk wasn’t very heavy on the tech; it rather focused on the business end of things.

Allen’s talk had several main points. The first was that machine learning isn’t some kind of magic, but it can detect patterns that we might not see.

The second point was that data extraction isn’t the same as information extraction, and the latter is much more valuable. He described information extraction as adding context to data: for example, recognizing that in the string “Intel i9”, “Intel” refers to the brand and “i9” to a processor model. ML models can help transform data into valuable information.

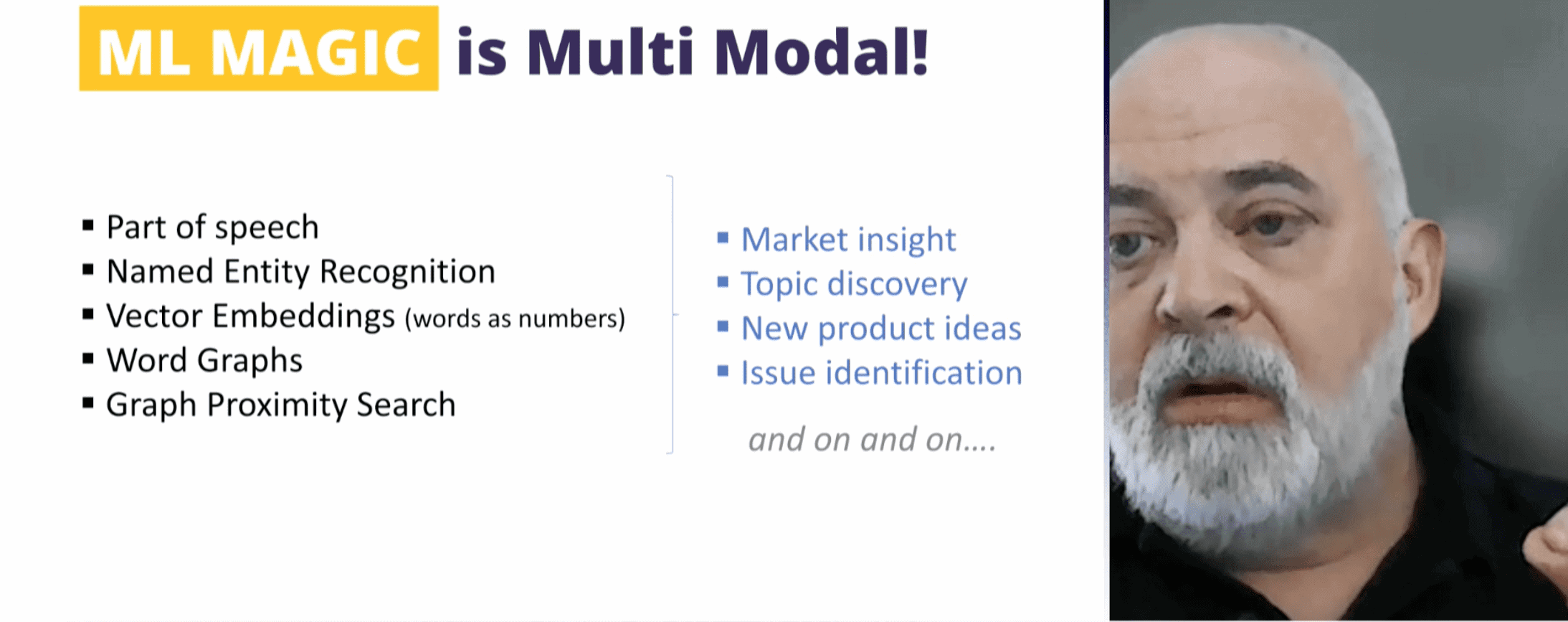

Allen went through a number of machine learning techniques: named entity recognition, assigning likelihood numbers to words (how likely it is that a dog is human) and then creating proximity graphs, and more. He stressed that these techniques don’t amount to much on their own, but you can combine them to achieve pretty cool things: remove ambiguity from a taxonomy (are 1080p and 1920×1080 the same?), enrich product data, mine reviews for issues, and more.
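To make the taxonomy example concrete, here is a minimal rule-based sketch in Python. It is not what Allen showed and it isn’t machine learning; the alias table and function names are our own assumptions, and a real pipeline would presumably learn such mappings rather than hard-code them.

```python
import re

# Hypothetical alias table: marketing labels mapped to (width, height).
RESOLUTION_ALIASES = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "full hd": (1920, 1080),
    "4k": (3840, 2160),
}

# Matches explicit dimensions such as "1920x1080" or "1920 × 1080".
DIMENSIONS = re.compile(r"(\d{3,5})\s*[x×]\s*(\d{3,5})")


def normalize_resolution(raw):
    """Return (width, height) for a resolution label, or None if unknown."""
    text = raw.strip().lower()
    if text in RESOLUTION_ALIASES:
        return RESOLUTION_ALIASES[text]
    match = DIMENSIONS.search(text)
    if match:
        return int(match.group(1)), int(match.group(2))
    return None


def same_resolution(a, b):
    """True when both labels resolve to the same known canonical value."""
    left, right = normalize_resolution(a), normalize_resolution(b)
    return left is not None and left == right
```

With this, `same_resolution("1080p", "1920×1080")` evaluates to `True`, while two labels that can’t be resolved are never treated as equal.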

The presentation likely won’t blow your mind if you’re doing machine learning already. But if you’re not, it gives a compelling overview of what you can achieve and which tools you can use for that.

Talk 2: How Data Scraping and Creative Algorithms Can Lead to Exciting Products

Another talk that was light on tech but interesting to listen to. The presenter, Karsten Madsen, runs a tool for search engine optimization called Morningscore. He gave a case study of how you can compete in a tough market by focusing on compelling ways to present data.

When Karsten entered the SEO niche, he found over 200 competitors. Some of them, like Ahrefs, had a boatload of servers and impressive in-house infrastructure. Instead of getting the heck away as most sane people do, Karsten did two things:

- He outsourced all data collection to multiple providers. Morningscore’s code looks at several sources, compares them, and refines the data. This makes it easy to replace providers as needed, and the setup costs around $20k per month to run.

- He focused on user experience. One way was to gamify the tool by suggesting optimization “missions” and awarding experience points. The second was to build a different and more transparent link scoring algorithm. And the third example he gave was a health score that can instantly respond to a website’s changes.
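Karsten didn’t show how Morningscore compares its sources, so the following is only our guess at what comparing and refining data from several providers could look like. The `reconcile` function, the provider names, and the tolerance value are all hypothetical.

```python
from statistics import median


def reconcile(estimates, tolerance=0.5):
    """Combine per-provider estimates of one metric into a single value.

    `estimates` maps a provider name to its number, or None when missing.
    Returns (consensus, outliers): the median of the available values and
    the providers whose value deviates from it by more than `tolerance`
    (as a fraction of the consensus).
    """
    available = {name: v for name, v in estimates.items() if v is not None}
    if not available:
        return None, []
    consensus = median(available.values())
    outliers = [
        name
        for name, value in available.items()
        if consensus and abs(value - consensus) / abs(consensus) > tolerance
    ]
    return consensus, sorted(outliers)
```

Because the function only sees a dictionary of numbers, swapping one provider for another doesn’t require touching it, which is the kind of flexibility the talk described.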

So, in short, Morningscore sacrificed data quantity and comprehensiveness for a more personalized approach. One that’s based on instant feedback (probably by scraping SERPs upon request) and a clear sense of direction through gamification and context. We’re not sure how well this will work in the long run, but Karsten’s talk showed that some creativity can carve out a niche even under tough conditions.

Talk 3: Observability and Web Scrapers: Filling the Unknown Void

Eccentric, over the top, and… fun? I’m not sure if anyone has ever uttered these words to describe a talk on logging. But then again, I’m also not sure how many of them start with aphorisms in Ancient Greek. So here we are.

The presenter, Martynas Saulius from Oxylabs, gave a comprehensive introduction to observing web scraping infrastructure. He distinguished the trifecta of observability – logs, traces, and metrics – describing each and their implementation.

The talk also had examples and tips for building your own logging infrastructure. At Oxylabs, Martynas uses the ELK stack to process and visualize data outputs. As for good indicators for alerting, he singled out the RED method, which stands for rate, errors, and duration.
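As a rough illustration of the RED method, the sketch below summarizes a window of scraping requests into the three numbers. It isn’t taken from the talk: the `RequestRecord` fields and the choice of a 95th percentile for duration are our assumptions.

```python
from dataclasses import dataclass


@dataclass
class RequestRecord:
    duration: float  # seconds the request took
    ok: bool         # True when the request succeeded


def red_metrics(records, window_seconds):
    """Summarize one time window as rate, error ratio, and p95 duration."""
    if not records or window_seconds <= 0:
        return {"rate": 0.0, "error_ratio": 0.0, "p95_duration": 0.0}
    total = len(records)
    errors = sum(1 for r in records if not r.ok)
    durations = sorted(r.duration for r in records)
    # Nearest-rank percentile: ceil(0.95 * total), in integer arithmetic.
    rank = (95 * total + 99) // 100
    return {
        "rate": total / window_seconds,     # requests per second
        "error_ratio": errors / total,      # share of failed requests
        "p95_duration": durations[rank - 1],
    }
```

In practice you would feed these numbers into whatever alerting you already have, for example by alerting when the error ratio stays above a threshold for several windows in a row.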

At the end of the talk, there was a good question about alert fatigue, which can become an issue as you scale up. The presenter’s advice was to start simple and alert only on important things.

Talk 4: Practical Applications of Common Web Scraping Techniques

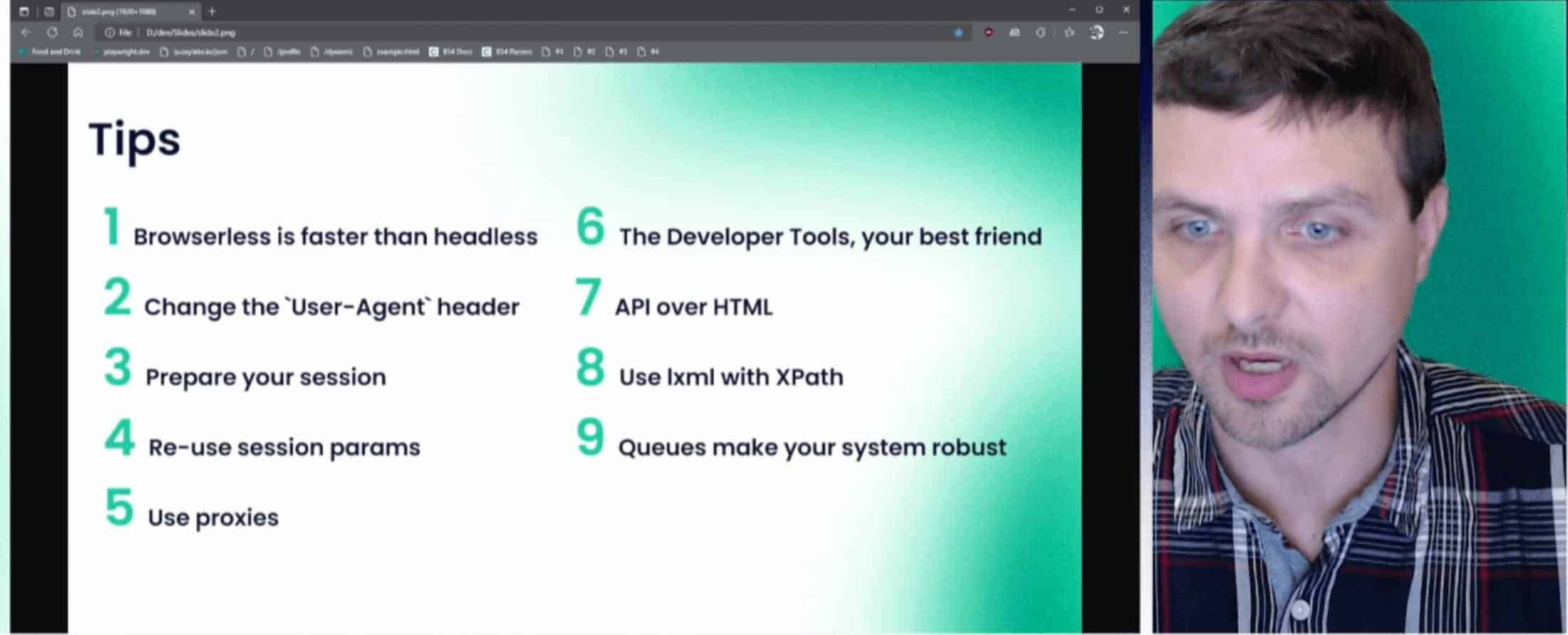

This one wasn’t a talk but rather a demonstration. Another presenter from Oxylabs, Eivydas Vilčinskas, gave nine tips for improving web scraping success. They might not impress seasoned professionals but could be helpful to many beginner and intermediate web scrapers.

Some of the tips are pretty basic: for example, to use proxies or change the User-Agent header. Others are less obvious, such as preparing a session in a headless browser and then reusing the parameters with an HTTP client. Or preferring APIs over HTML code. But taken together, they add up to a stellar collection of best practices for collecting data.

There were few slides – Eivydas demonstrated the tips using a browser and IDE. The code samples are available live, so if you’re new or less experienced with these techniques, it can be a great opportunity to try them out yourself.

Some of the more interesting tidbits:

- One headless request amounts to 800 cURL requests.

- The HTTP client you use matters – they work differently and have different TLS parameters.

- You can often reuse the same session parameters with different proxies if the website doesn’t link headers to an IP.
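The session-reuse idea can be sketched with Python’s standard library alone. This isn’t Eivydas’s demo code: the `session` dictionary stands in for headers and cookies you would export from a headless browser, and its shape, like the function names and proxy URLs, is our assumption.

```python
import urllib.request


def build_request(url, session):
    """Build a request that replays headers and cookies from a browser session."""
    headers = dict(session["headers"])
    headers["Cookie"] = "; ".join(
        f"{name}={value}" for name, value in session["cookies"].items()
    )
    return urllib.request.Request(url, headers=headers)


def build_opener(proxy_url):
    """Return an opener that routes HTTP and HTTPS traffic through a proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def fetch(url, session, proxy_url, timeout=30):
    """Fetch `url` with the captured session through the given proxy."""
    opener = build_opener(proxy_url)
    with opener.open(build_request(url, session), timeout=timeout) as response:
        return response.read()
```

Calling `fetch` with the same `session` but a different `proxy_url` each time is the reuse pattern from the last tip. Keep the second tip in mind too: a plain HTTP client won’t have the same TLS parameters as the browser that created the session, so this only works where the website doesn’t check for that.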

Talk 5: Data collection: Orchestration, Observability, and Introspection

A talk by Paul Morgan, technical team lead at Datasembly, a company that tracks local pricing and product data. Like Glenn from Day 1, Paul makes up to 100 million requests per day. This is a relatively high-level presentation on managing and monitoring web scrapers and adapting to changes that occur during scraping runs.

In terms of orchestration, the three challenges are managing resources, scheduling runs, and sizing jobs. According to Paul, it’s best to distribute workload among several proxy providers to handle failures. You should have gatekeepers to start jobs and reapers to kill slow jobs. There’s also the dilemma between running granular and bulk jobs: the former are easier to prioritize and restart but create more overhead.
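Here is a minimal sketch of what a gatekeeper and a reaper could look like. Paul didn’t share code, so the `Job` fields, the progress threshold, and the grace period are hypothetical choices of ours.

```python
from dataclasses import dataclass


@dataclass
class Job:
    job_id: str
    started_at: float  # seconds, on the same clock as `now`
    pages_done: int    # pages collected so far


def admit(pending, running_count, max_running):
    """Gatekeeper: return the pending jobs that are allowed to start now."""
    free_slots = max(0, max_running - running_count)
    return pending[:free_slots]


def find_jobs_to_reap(jobs, now, min_pages_per_minute, grace_seconds=60):
    """Reaper: return IDs of running jobs that are progressing too slowly."""
    to_reap = []
    for job in jobs:
        elapsed = now - job.started_at
        if elapsed <= 0 or elapsed < grace_seconds:
            continue  # too early to judge this job
        pages_per_minute = job.pages_done / (elapsed / 60)
        if pages_per_minute < min_pages_per_minute:
            to_reap.append(job.job_id)
    return to_reap
```

Both functions only decide; actually starting or killing a job is left to whatever scheduler you run. Granular jobs make these decisions cheap to act on, which matches the trade-off described above.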

The observability part includes logging and monitoring. Rejection rate is a good metric, but you can also track the number of redirects, speed compared to historical averages, and more. Paul recommended using Prometheus and Grafana for monitoring.
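Two of these metrics are simple enough to sketch in a few lines of Python. The status codes we count as rejections and the 50% slowdown threshold are assumptions for illustration, not values from the talk.

```python
# Assumption: these HTTP statuses are treated as rejections by the target site.
BLOCK_STATUSES = {403, 429}


def rejection_rate(status_codes):
    """Share of responses whose status code counts as a rejection."""
    if not status_codes:
        return 0.0
    rejected = sum(1 for code in status_codes if code in BLOCK_STATUSES)
    return rejected / len(status_codes)


def is_slow(current_rps, historical_rps, max_drop=0.5):
    """True when throughput fell more than `max_drop` below the historical mean."""
    if not historical_rps:
        return False  # no baseline to compare against yet
    baseline = sum(historical_rps) / len(historical_rps)
    return current_rps < baseline * (1 - max_drop)
```

Numbers like these could then be exported to a monitoring stack such as the Prometheus and Grafana setup Paul recommended.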

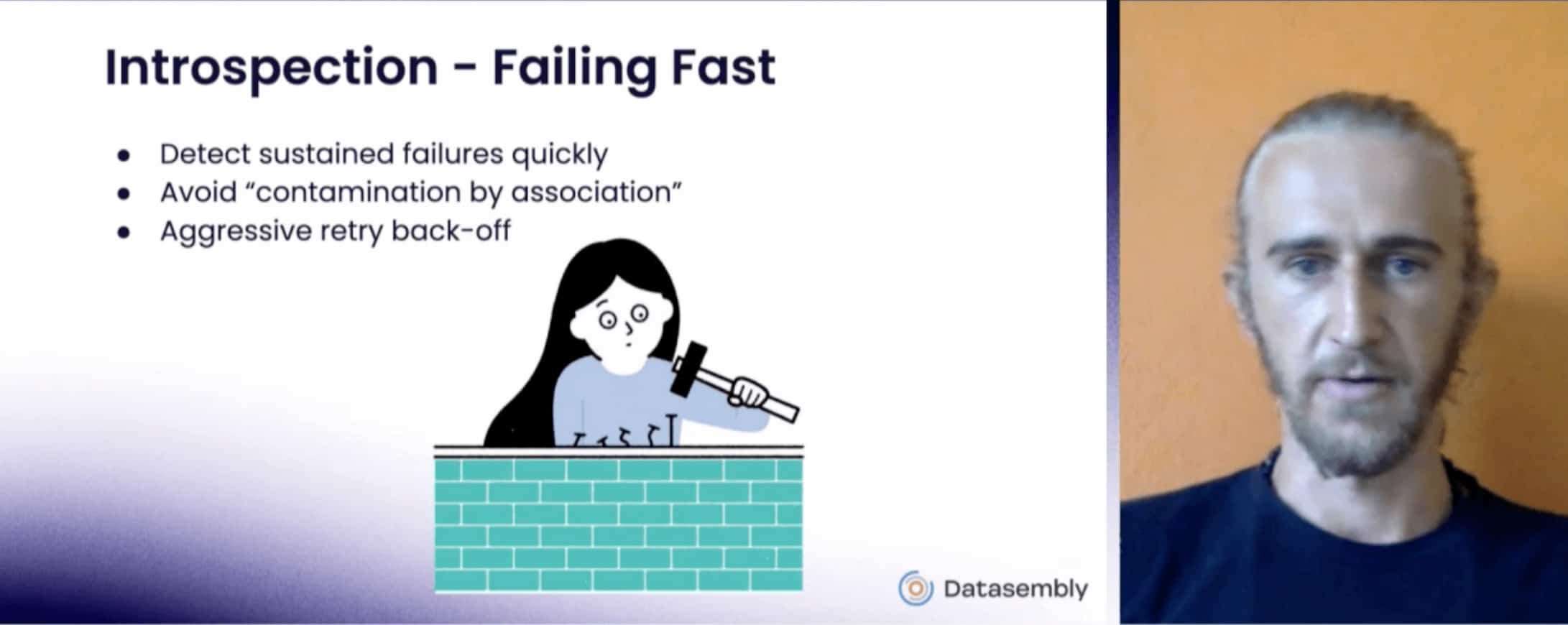

The part about introspection was particularly interesting. Paul stressed the importance of quickly detecting and killing failing jobs; otherwise, his team has noticed, a failing job can “contaminate” other runs. He’s also made a habit of evolving the setup mid-run (headers, fingerprints, etc.), so that the highest success rate is usually at the end of the run.

The audience asked some good questions about block avoidance, emulating the TLS fingerprint, and problematic anti-bot systems. The talk and the answers given are based on extensive experience, so we recommend watching it.

Talk 6: Data collection: Orchestration, Observability, and Introspection

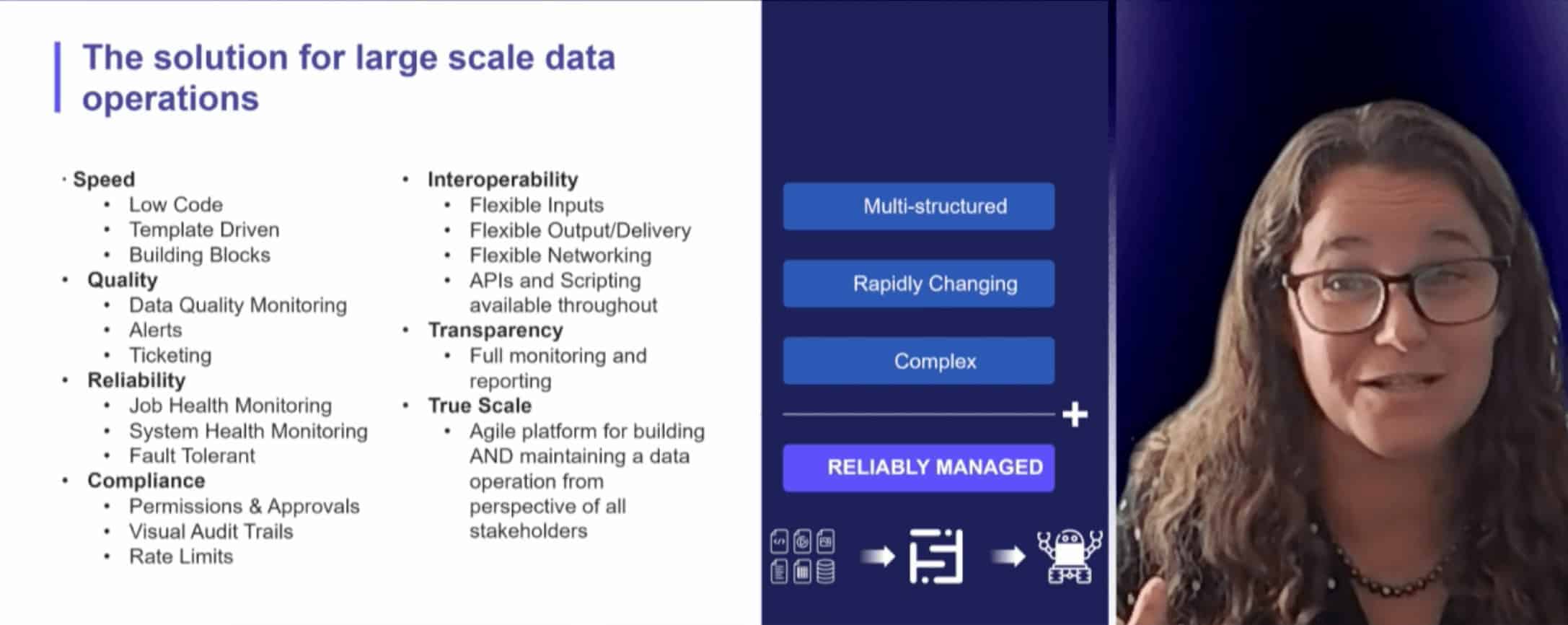

The final talk of the day was given by Sarah McKenna, CEO of Sequentum. The company runs an enterprise-grade platform for automating data pipelines. Sarah set out to cover all aspects necessary to build a robust and legal data collection infrastructure, often from the point of view of her own low-code tool.

Despite providing useful information about what it takes to run a large-scale operation, the talk wasn’t our favorite. First, it necessarily had to cover things at a very high level. Second, there was quite a bit of repetition. And third, despite taking 40 minutes, the presentation had only about eight slides, so at times it felt like a bit of a slog.

That said, you may find more value in it. We recommend skimming through the slides to gauge their relevance. They’re packed with info and change infrequently, so this shouldn’t be hard.

Conclusion

That’s it for this year’s OxyCon! It was a great – and intense! – event, and we’ll be looking forward to next year.