ScrapeCon 2024: A Recap

Our impressions from Bright Data’s first virtual conference on web scraping.

Organizational Matters

ScrapeCon was initially planned to take place on November 7, 2023. Unfortunately, it had to be delayed due to events in Israel. No news was given for a while, and then Bright Data announced a new date some time in March.

Registration was free, which is nice and, by now, an industry standard for online events. You had to fill out a form with basic details like name, surname, and email. The application also asked you to select which sessions you expected to attend. (Was it to gauge interest? Were you unable to watch the presentations you didn’t choose? I ticked all the boxes, so I couldn’t test what happened in that scenario.) Then, ScrapeCon’s team would verify your application and manually approve (or deny?) entry.

I believe Bright Data used a platform called Cvent to host the conference. The hub was well-made, with notifications and chat boxes for Q&A. Unlike Extract Summit or OxyCon, each session had its own page with a description and a Vimeo player. Some talks took around 15 minutes, so you had to jump in and out constantly.

Bright Data opted to host the conference between 3PM and 7PM CEST. For us – and quite a few businesses in Europe – the time was unusual, but it was likely chosen to include both European and American audiences. All sessions started on the dot. The schedule had no formal breaks, but you’d typically get between five and ten minutes before the next talk.

One feeling I couldn’t shake throughout the event was that I acted much more as a viewer than as an active participant. Sure, you were free to ask questions. But all answers were given in text, and speakers never actually interacted with the audience directly. Everything might as well have been pre-recorded, and you wouldn’t have missed much.

The Talks

The conference comprised six presentations, three panels, and a six-minute introduction by Bright Data’s CEO Or Lenchner. Let’s go through them one by one.

Here’s a quick navigation for the headline divers:

- Opening Speech: The State of Public Web Data

- Cloud-Native Scraping Made Simple

- Decoding Scraping Strategies: Build, Buy, or API?

- Panel 1: The Future of Data for AI: Balancing Legal and Operational Challenges

- From AI-Powered Insights to Training LLMs

- A Blueprint for Building a Reliable Dataset

- Panel 2: The Executive Playbook

- From Clicks to Captures: Mastering Browser Interactions for Scrapers

- Beyond IP Bans & CAPTCHAs

- Panel 3: From Initial Request to Final Analysis

Opening Speech: The State of Public Web Data

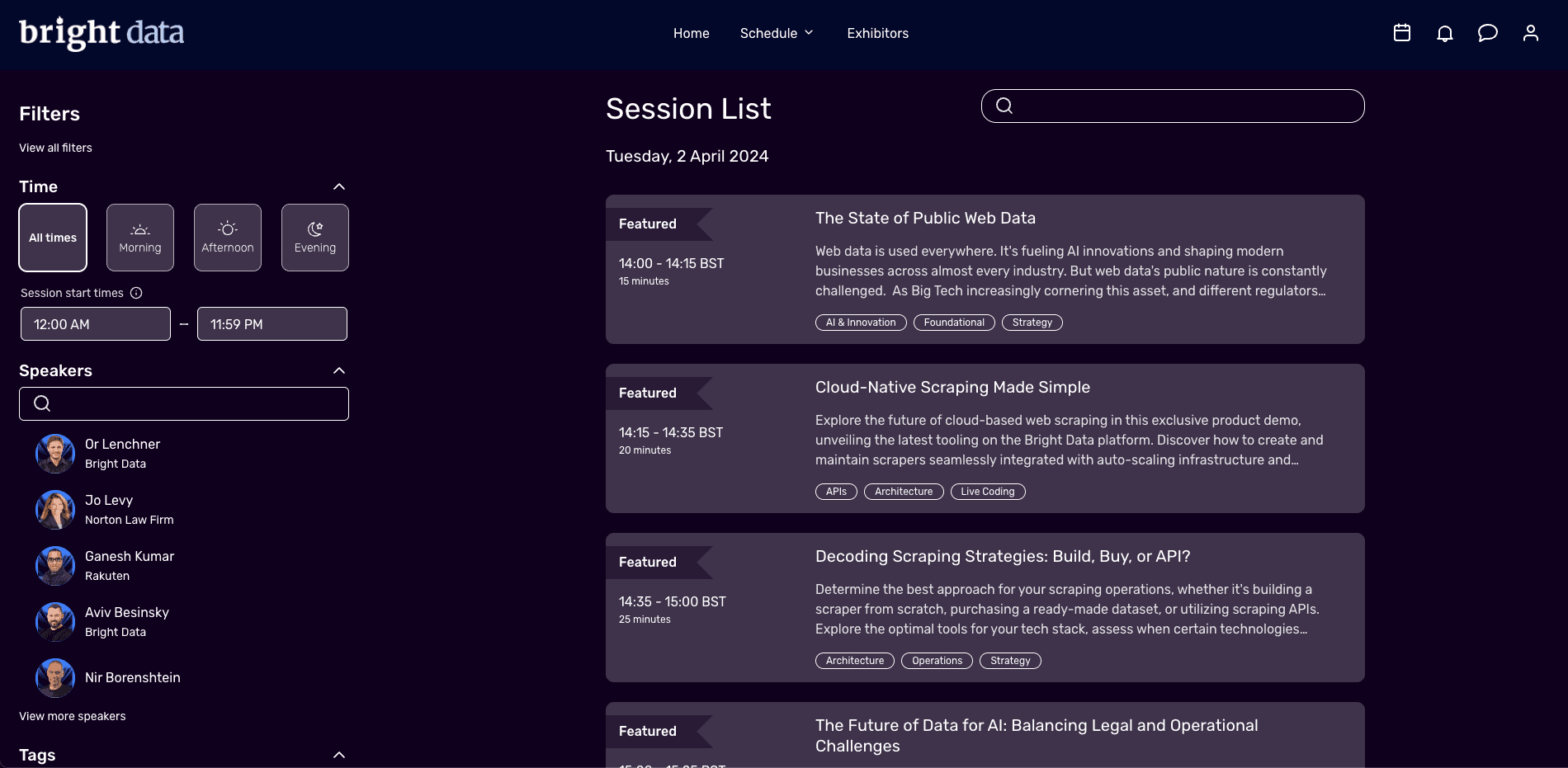

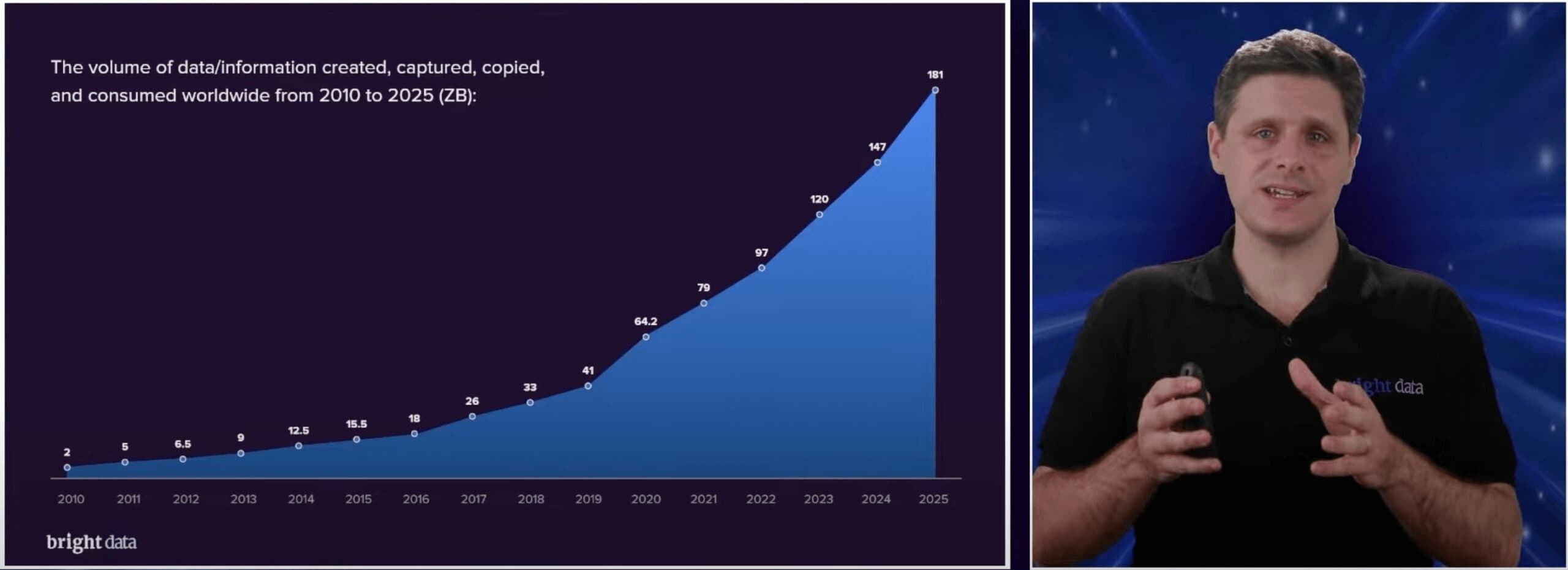

Or Lenchner kicked off the conference with Bright Data’s narrative of the status quo. It pepped up the event and gave a good idea of what to expect.

In brief, the demand for data is growing exponentially, and like drinking water, it’s poised to shape the future of humanity. AI is the next big revolution, and naturally, it requires data to train. Big corporations are gating off their data, but Bright Data secured some important victories (most notably against Meta), and regulation is the next frontier.

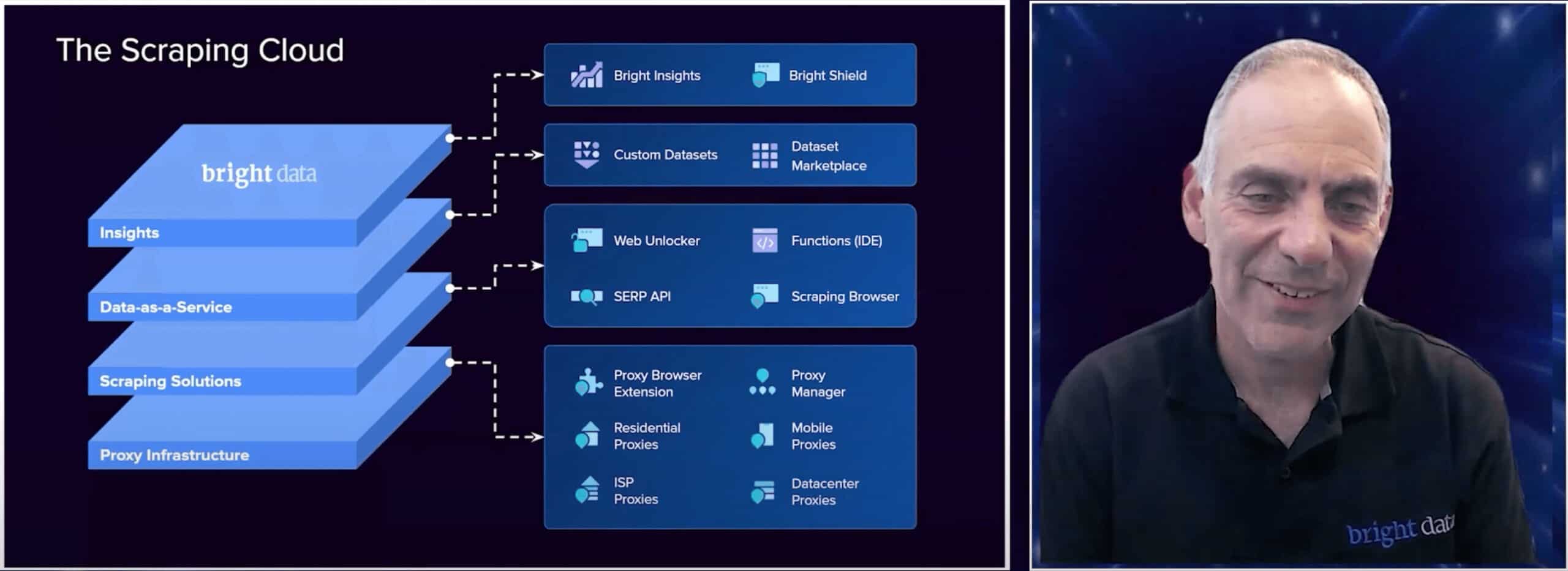

Or mentioned something called the scraping cloud – I assume this is how Bright Data will position itself from this point on. He also teased Bright Shield, a two-pronged product currently in beta:

- Bright Data’s clients can use it to track and enforce their web scraping policies, while

- Webmasters are able to see which parts of their properties are scraped and – to an extent – influence data collection activities on those domains.

Talk 1: Cloud-Native Scraping Made Simple

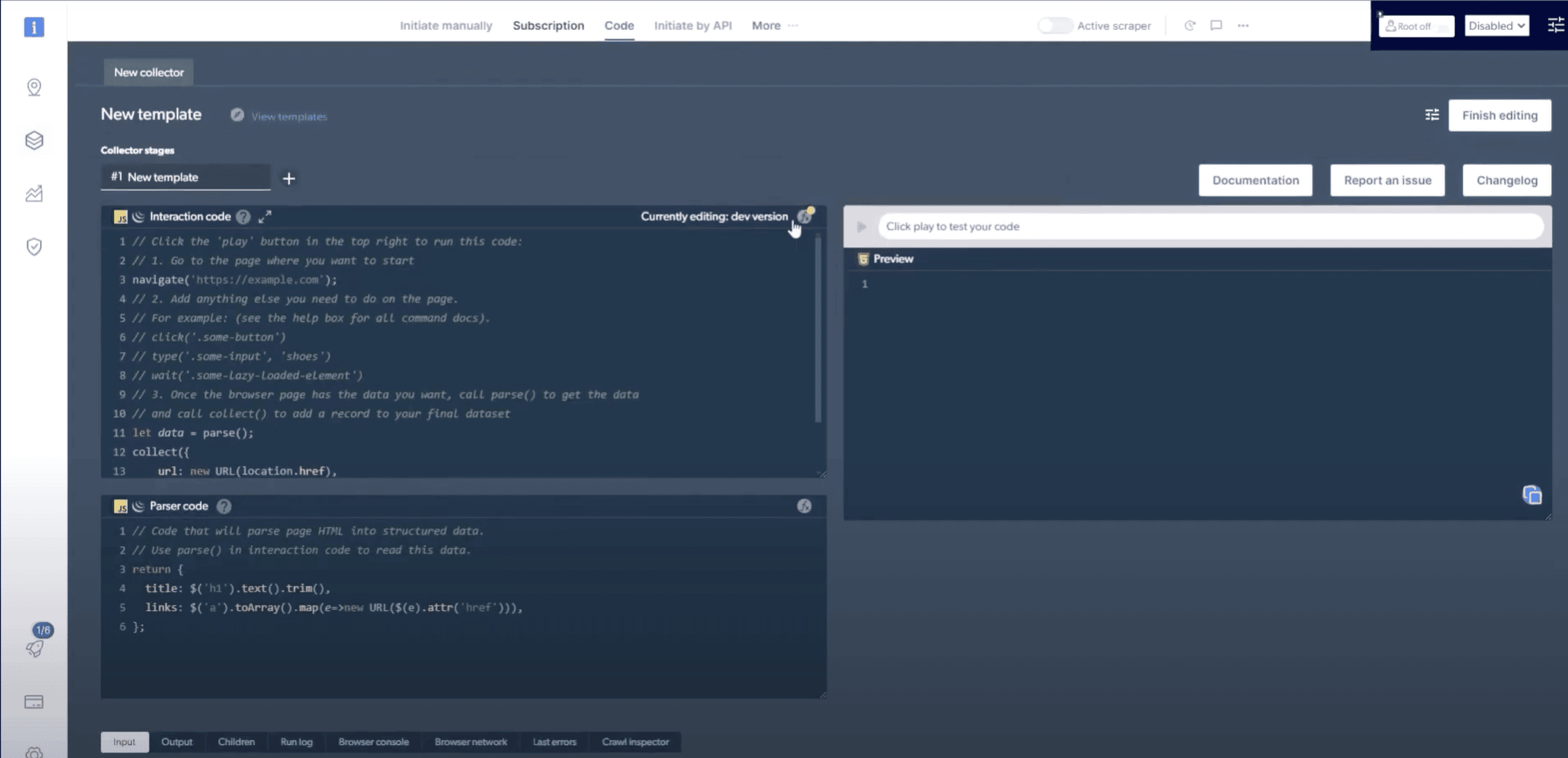

In the first presentation, Itzhak Yosef Friedman, Head of R&D at Bright Data, spoke about serverless web scraping infrastructure. More precisely, he introduced Bright Data Functions, previously called Web Scraper IDE, previously called Data Collector.

In brief, Functions is a cloud-based development environment accessible through Bright Data’s dashboard. It includes pre-built code functions, templates for popular websites, and uses the provider’s unblocking infrastructure in the backend. There are other neat features built in, such as tools for debugging and monitoring. If needed, Functions can run headless browsers, also in the cloud.

Itzhak then quickly built an interactive scraper to collect data from Lazada to show off the product. I found it nice that Functions separates the scraping and parsing stages and caches pages, so you can adjust the parser without reloading the URL. This was the first product advertisement of the day.
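To illustrate why separating the two stages is handy, here is a minimal Python sketch of the general idea. It is not Bright Data’s API: `CachedScraper`, `fake_fetch`, and the sample HTML are all hypothetical names made up for this example. The page is fetched once and cached, and different parsers can then be tried against the cached copy.

```python
import re
from typing import Callable, Dict, Optional


class CachedScraper:
    """Hypothetical helper: fetches each URL once, then reuses the cached HTML."""

    def __init__(self, fetch: Callable[[str], str]) -> None:
        self._fetch = fetch                # any function that returns HTML for a URL
        self._cache: Dict[str, str] = {}   # url -> raw HTML
        self.fetch_count = 0               # how many real fetches happened

    def get_page(self, url: str) -> str:
        # Scraping stage: only hit the target when the page is not cached yet.
        if url not in self._cache:
            self._cache[url] = self._fetch(url)
            self.fetch_count += 1
        return self._cache[url]

    def run(self, url: str, parse: Callable[[str], object]) -> object:
        # Parsing stage: works on the cached page, so it can be re-run freely.
        return parse(self.get_page(url))


def fake_fetch(url: str) -> str:
    # Stand-in for a real HTTP call, so the example runs offline.
    return "<html><h1>Laptop X</h1><span class='price'>$899</span></html>"


def parse_title(html: str) -> Optional[str]:
    match = re.search(r"<h1>(.*?)</h1>", html)
    return match.group(1) if match else None


def parse_price(html: str) -> Optional[int]:
    match = re.search(r"class='price'>\$(\d+)", html)
    return int(match.group(1)) if match else None


scraper = CachedScraper(fake_fetch)
title = scraper.run("https://example.com/item", parse_title)
price = scraper.run("https://example.com/item", parse_price)  # no second fetch
```

Swapping `parse_title` for `parse_price` here does not trigger another request, which is roughly the convenience the demo showed.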

A few sidenotes. It’s interesting to see how Functions evolved. The initial iteration, Data Collector, was actually marketed as a no-code tool: the templates had a UI layer, and there was an extension for visually building scrapers. Bright Data then pivoted toward developers, leaving the no-code part to datasets.

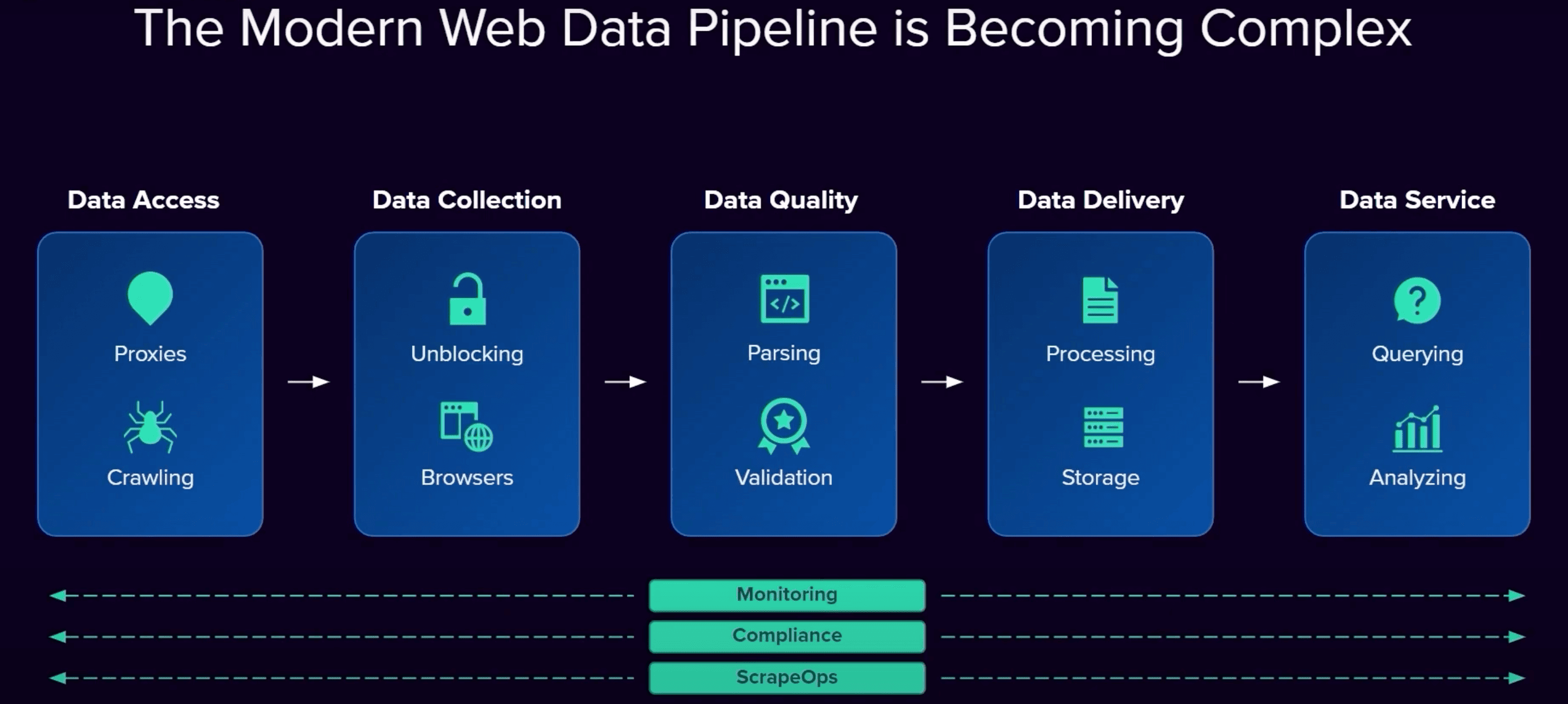

This was also the first presentation to feature Bright Data’s diagram of the modern web pipeline. It would appear time and time again throughout the conference.

Talk 2: Decoding Scraping Strategies: Build, Buy, or API?

Nir Borenshtein, Bright Data’s COO, spoke about the considerations of building a scraper in-house versus outsourcing parts of the process. The presentation was once again very much focused on Bright Data’s tooling, but in a more general, “here’s what our platform (scraping cloud) looks like” kind of way.

Nir began by elaborating on the web data pipeline we saw in the previous session, speaking about the challenges that arose throughout the years and how they led to the creation of Web Unlocker. He then characterized three models for data collection: in-house, hybrid, and data-as-a-service. Afterwards, Nir illustrated his points with several case studies and finally presented the layers of Bright Data’s platform.

Overall, it was a decent high-level overview, perhaps more from a business executive’s point of view. I’m not sure why this talk didn’t come right after the intro – maybe that was the original design, but it got rearranged somewhere in the process.

Panel 1: The Future of Data for AI: Balancing Legal and Operational Challenges

Bright Data’s CEO Or Lenchner, Jo Levy from Norton Law Firm, and Anthony Goldbloom, Co-founder of Kaggle, discussed the role of web data in AI, the challenges collecting this data raises, and how to navigate them in the day-to-day. Jennifer Burns from Bright Data moderated the discussion.

Being accustomed to specialist panels, I found this intersection interesting, and it turned out to provide useful insights. Anthony, whose company uses web data to fine-tune LLMs, outlined a framework for data collection. It includes knowing your sources, depth of coverage, and the possible legal implications.

Or raised thought-provoking points about the inherent bias in selecting datasets for LLMs and the increasing importance of data freshness. Jo’s most valuable argument, in my opinion, was that lenses like copyright or bias should be addressed in the curation rather than the selection stage. Other topics were touched upon as well, such as self-regulation.

All in all, I enjoyed the discussion and recommend watching it. I also found it amusing how self-effacing Bright Data appeared compared to the other sessions: Or suggested that their services may not be necessary at first, and Anthony admitted using multiple vendors to mitigate risk.

Talk 3: From AI-Powered Insights to Training LLMs

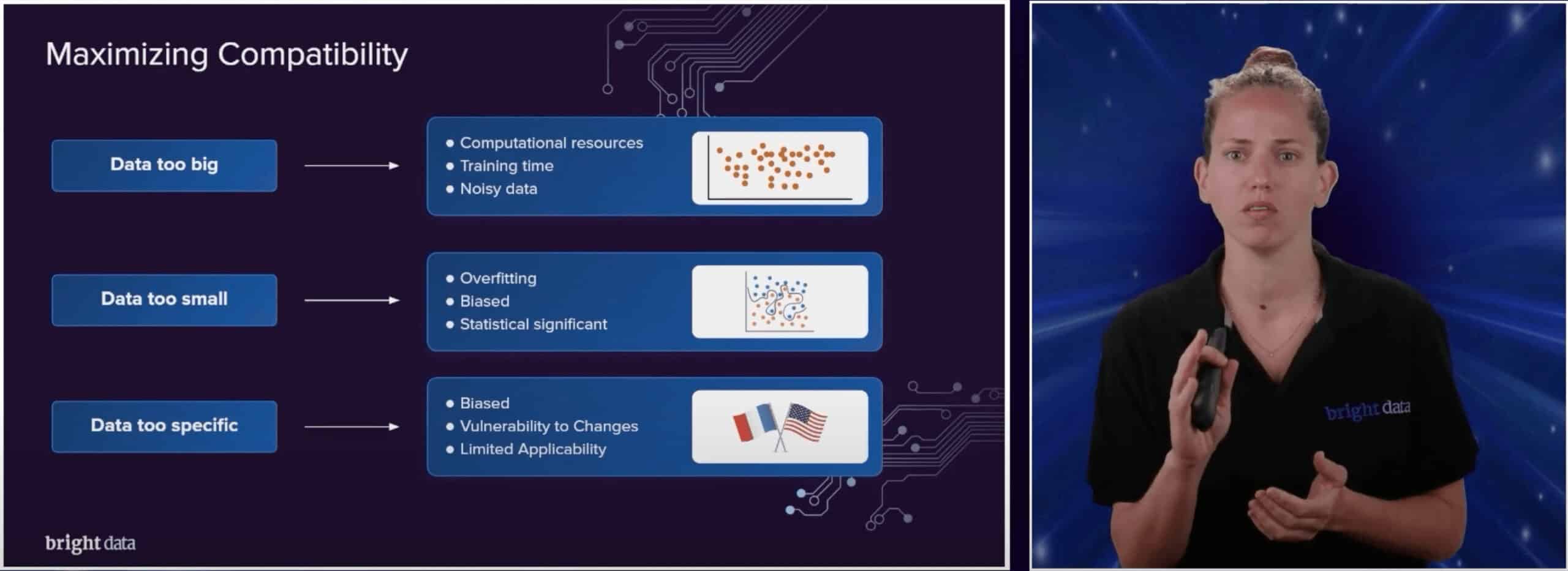

Lior Levhar from Bright Data ran through the best practices for creating datasets for large language models. The target audience was probably business types looking to get into LLM training, as most of the advice turned out to be pretty basic: tailor the dataset for your use case, don’t make it too big or small, remove duplicates, and validate unreliable sources like social media.

Throughout the session, Lior subtly referred to Bright Data’s datasets several times and whipped up a practical demonstration with Snowflake as the analytics tool. Although the session has limited value for data professionals, it’s a decent introduction for others.

Talk 4: A Blueprint for Building a Reliable Dataset

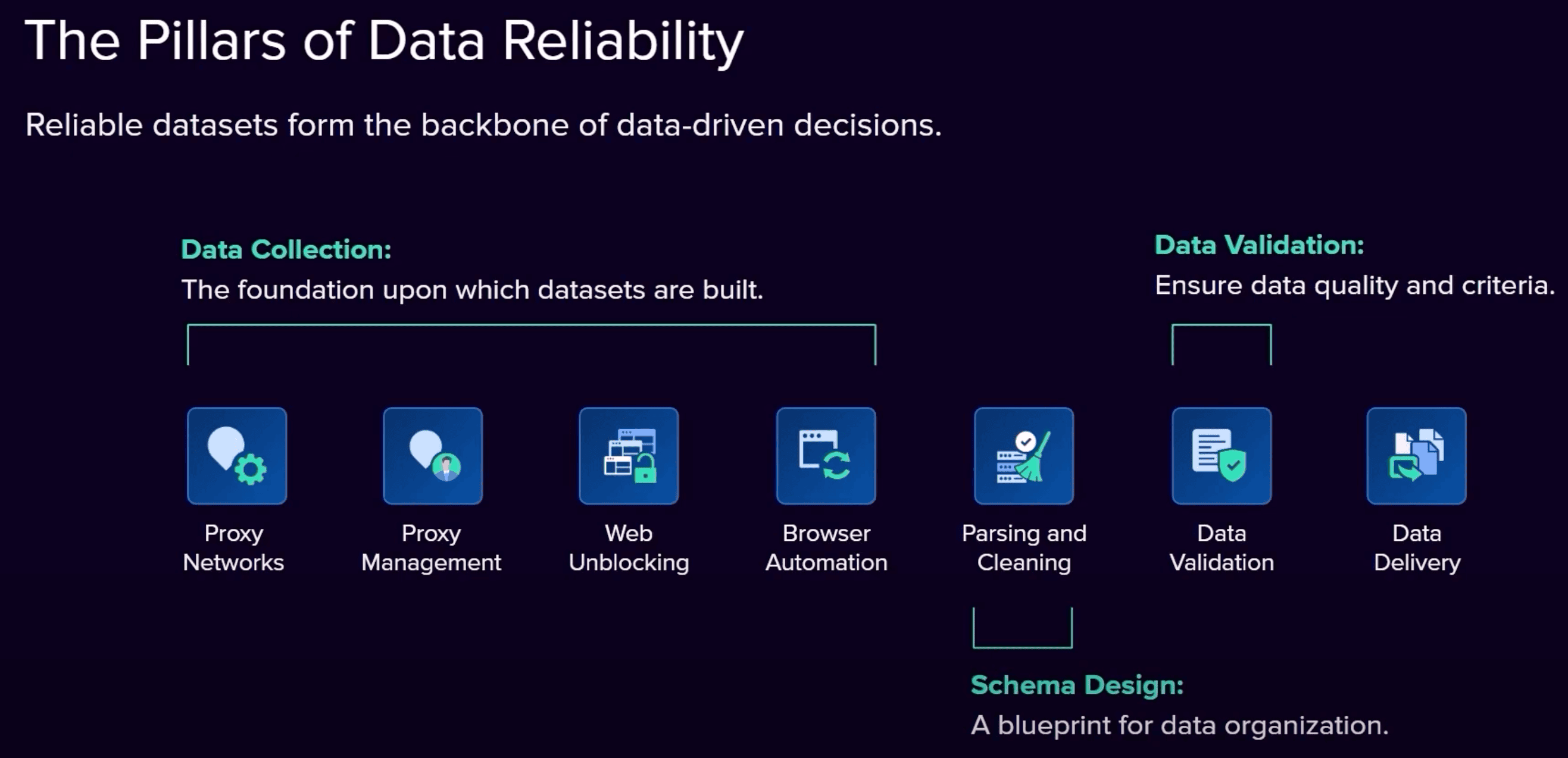

Itamar Amramovich from Bright Data continued the topic of datasets, diving deeper into how they’re crafted at his company. The presentation was heavily sales-driven, leaning on marketing lingo and rhetorical techniques.

In brief, Itamar went through what he called the pillars of data reliability. The first, data collection, relies on proxies and unblocking infrastructure and applies various strategies, such as discovering sources through search engines and scraping internal APIs. Schema design requires defining core elements and cleaning raw output, while data validation looks into fill rate, uniqueness, min-max thresholds, and other metrics.
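For readers unfamiliar with these validation metrics, here is a rough Python sketch of how fill rate, uniqueness, and min-max thresholds could be computed. It is only an illustration under my own assumptions: the function names, the sample records, and the price bounds are hypothetical and not taken from Bright Data’s tooling.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def is_filled(value: Any) -> bool:
    # Treat None and empty strings as missing; everything else counts as filled.
    return value is not None and value != ""


def fill_rate(rows: List[Row], field: str) -> float:
    """Share of rows where `field` is present and non-empty."""
    if not rows:
        return 0.0
    return sum(1 for r in rows if is_filled(r.get(field))) / len(rows)


def uniqueness(rows: List[Row], field: str) -> float:
    """Share of distinct values among the filled values of `field`."""
    values = [r[field] for r in rows if is_filled(r.get(field))]
    if not values:
        return 0.0
    return len(set(values)) / len(values)


def out_of_range(rows: List[Row], field: str, low: float, high: float) -> List[Row]:
    """Rows whose numeric `field` falls outside the [low, high] threshold."""
    return [
        r for r in rows
        if isinstance(r.get(field), (int, float)) and not low <= r[field] <= high
    ]


# Made-up sample records with a missing title, a duplicate id, and a bad price.
records = [
    {"id": "a1", "title": "Laptop", "price": 899.0},
    {"id": "a2", "title": "", "price": 1200.0},
    {"id": "a2", "title": "Mouse", "price": -5.0},
    {"id": "a4", "title": "Monitor", "price": None},
]

title_fill = fill_rate(records, "title")              # 3 of 4 titles are filled
id_uniqueness = uniqueness(records, "id")             # 3 distinct ids among 4
bad_prices = out_of_range(records, "price", 0.0, 10_000.0)
```

In practice, each metric would be compared against a threshold agreed on for the dataset, and records failing the checks would be flagged or sent back for re-collection.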

Marketing aside, the talk delivered on its promise of providing a blueprint, all the while giving us a glimpse into Bright Data’s internal tooling, such as its universal schema for datasets.

Panel 2: The Executive Playbook

The second panel featured Ernesto Cohnen from Ixigo, Michael Beygelman from Claro Analytics, and Ganesh Kumar from Rakuten. It was hosted by Bright Data’s CCO Omri Orgad. The panel’s professed aim was to cover the best practices for operational and financial aspects of real-time data collection.

In reality, it touched upon many topics, including but very much not limited to LLMs and their impact on business operations. The participants brought expertise from their respective fields, sharing individual challenges that sometimes intersected around the topics of multimodality of data, the growing importance of real-time decisions, and the need to discern data originators from regurgitators.

To give you some examples, Ernesto found that around 10% of new Google Images are now AI-generated, which is a problem for a travel portal. Ganesh has found it crucial to understand the references that customers use to shop for products, and sometimes, you need to search in unlikely places (such as ticketing websites). And Michael emphasized that we live in an era of just-in-time where businesses require prescriptive data.

Ernesto concluded with a beautiful thought that the value of data lies in the stories you build with it. All in all, I found the panel to be one of the highlights of the conference.

Talk 5: From Clicks to Captures: Mastering Browser Interactions for Scrapers

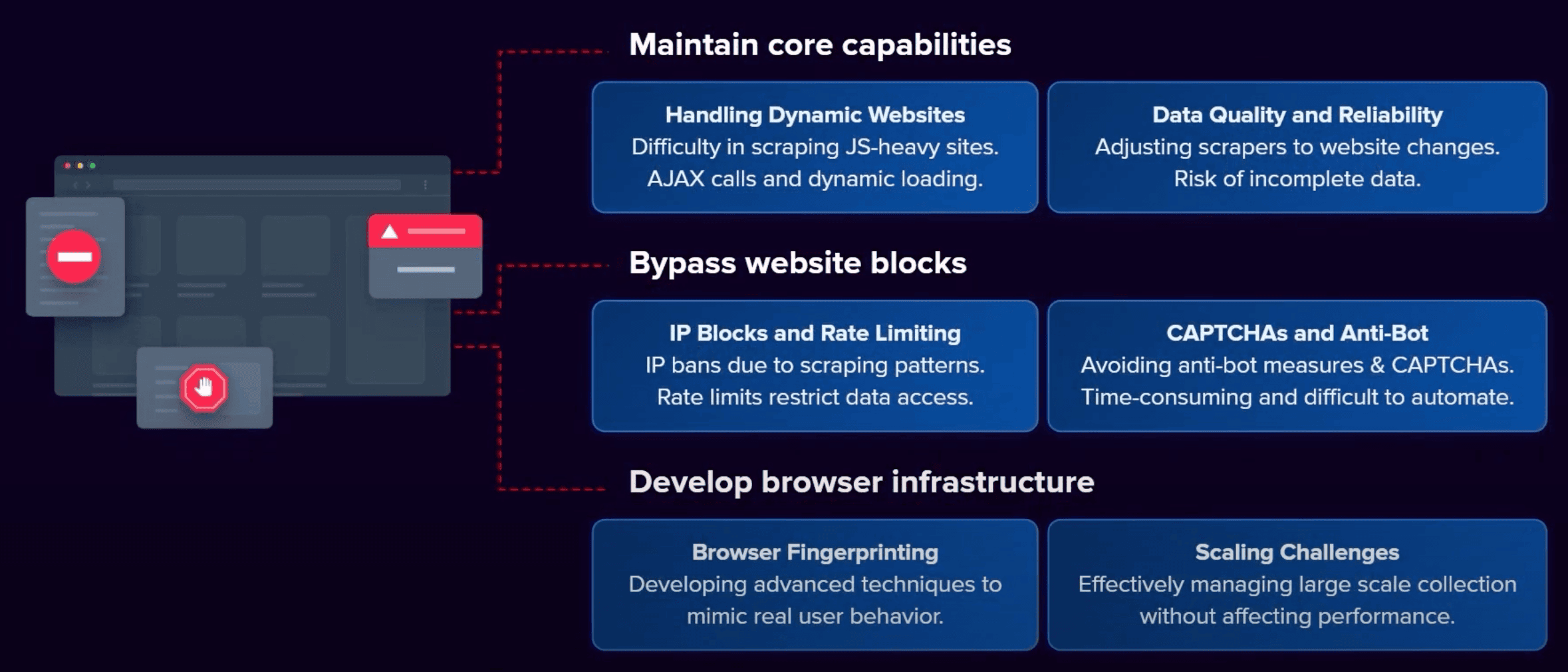

Aviv Besinsky and Ilya Kolker from Bright Data introduced the challenges of scraping dynamic websites and their approach to solving them. Some of the roadblocks include maintaining headless browser infrastructure, avoiding blocks, and parsing rendered content. Hard, right?

Well, you can always use Scraping Browser to overcome them. It scales automatically and has unblocking built in. Aviv provided a case study where a customer saved $5,000 by switching, and Ilya proceeded to demonstrate a scenario where he programmed a scraper to visit Amazon, type in “Laptop”, and download the page. All in all, it was a product pitch, though a relevant one if you’re in the market for such a tool.

Talk 6: Beyond IP Bans & CAPTCHAs

Another presentation by the Aviv Besinsky and Ilya Kolker duo. This time, they discussed the challenges stemming from website protection against web scraping. These range from simple IP blocks to sophisticated behavior tracking.

Aviv first showed a timeline with the evolution of anti-bot technologies. He then gave an overview of the main anti-scraping methods in order of difficulty and provided some ways to overcome them. The guidelines were on a pretty high level (e.g. emulate real user interactions), so they serve more as a starting point for further research than as directly applicable advice.

In the second part, Ilya demoed how datacenter and residential proxies fare in two scenarios: accessing Amazon and G2. He used a basic scraper without and then with headers. After both failed with G2, Ilya switched to Bright Data’s Web Unlocker (which runs headless browsers and solves CAPTCHAs if needed) and successfully scraped the page.
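As a rough idea of what “without and then with headers” means in practice, here is a small Python sketch using only the standard library. It is not Ilya’s code: the `build_request` helper, the header values, and the example URL are my own assumptions, and browser-like headers alone are often not enough against stricter protection, as the demo itself showed.

```python
import urllib.request

# Illustrative browser-like headers; the exact values are assumptions.
BROWSER_HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) Chrome/122.0 Safari/537.36"
    ),
    "Accept-Language": "en-US,en;q=0.9",
}


def build_request(url: str, with_headers: bool) -> urllib.request.Request:
    """Build a bare request, or one that carries browser-like headers."""
    headers = BROWSER_HEADERS if with_headers else {}
    return urllib.request.Request(url, headers=headers)


def fetch(request: urllib.request.Request, timeout: float = 10.0) -> str:
    """Send the request and return the body as text (performs a network call)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


bare = build_request("https://example.com/", with_headers=False)
dressed = build_request("https://example.com/", with_headers=True)

# urllib stores header names capitalized, e.g. "User-agent".
has_ua_bare = bare.has_header("User-agent")
has_ua_dressed = dressed.has_header("User-agent")
```

Calling `fetch(bare)` sends Python’s default user agent, which is trivial for a website to flag, while `fetch(dressed)` at least looks like a regular browser at the HTTP level.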

Panel 3: From Initial Request to Final Analysis

A panel with five tech influencers: Tech with Tim, Coding with Lewis, TiffinTech, Python Simplified, and Tech Bible. I expected a discussion format, but it looked like the participants were given a list of questions and recorded their answers individually.

In any case, they covered a variety of topics, ranging from the very basics like “what is web data?” to the challenges developers face while scraping and their favorite tech stacks. I wouldn’t consider this panel crucial to watch, but it was entertaining and ended the conference on a lighter note.

Conclusion

That was ScrapeCon. Despite being heavier on sales than we’re used to, I believe it was a success. Congratulations to Bright Data on their first online conference!

If you’ve read this far and would like to read up on other major industry events, here are our recaps of 2023’s Extract Summit and OxyCon.