Extract Summit 2023: A Recap


Organizational Matters

Same as last year, 2023’s event had a physical venue. This time it was hosted in Dublin, in Zyte’s home country of Ireland, but you could also join online. Aside from networking opportunities, live attendees had the chance to participate in four workshops that took place a day before the talks.

An early-bird ticket cost €159 (plus an extra €80 for access to the workshops). Virtual attendance was actually free this year, which marked a welcome change for those who couldn’t (or wouldn’t) make it live. Unfortunately, that included us. Zyte used Eventbrite for all tickets, so those who found out about the conference once it had started were out of luck, as registration had closed.

Zyte streamed the event over YouTube, used Slido for audience questions, and had a Slack channel for less immediate discussions.

Main Themes

We knew that the conference would spend time on AI, but it really took center stage this year. ChatGPT, other LLMs, and machine learning in general permeated nearly every talk, even the flavor presentations that usually introduce some niche use case. This is understandable given how hyped and compatible with our industry the new tech is.

Secondly, Zyte really made an effort to make the conference hands-on, or at least applicable in practice. And we’re not talking about the workshops. During the talks, participants were given access to Zyte’s new no-code interface, a POC tool that (of course) integrates ChatGPT, a form to calculate their web scraping expenses, and so on. Even the presentation on legal matters provided an actionable checklist for four relevant use cases (two of which, once again, involved AI).

Finally, Zyte’s web scraping API has matured a lot in a year. Knowing that, the host dedicated three presentations to promoting the tool. This was definitely noticeable, but it didn’t feel pushy or tacky when watching online.

The Talks

Let’s run through the conference’s 13 talks. They cover topics ranging from an exploration of ChatGPT for data collection to a deep dive into current bot protection systems.

Here’s the line-up:

- Introduction by Zyte’s CEO: Why I Replaced My Most Popular Product

- Innovate or Die: The State of the Proxy Industry in 2023

- Can ChatGPT Solve Web Data Extraction?

- Enterprise-Grade Scraping with AI

- Detect, Analyze & Respond. Harnessing Data to Combat Propaganda and Disinformation

- Spidermatch: Harnessing Machine Learning and OpenStreetMap to Validate and Enrich Scraped Location Data

- Anatomy of Anti-Bot Protection

- Taming the World Wide Web

- Soaring Highs and Deep Dives of Web Data Extraction in Finance

- A Step-by-Step Guide to Assessing Your Web Scraping Compliance

- Using Web Data to Visualise and Analyse EPC ratings

- Dynamic Crawling of Heavily Trafficked Complex Web Spaces at Scale

- The Future of Data: Web-Scraped Data Marketplaces and the Surge of Demand from the AI Revolution

Talk 1. Introduction by Zyte’s CEO: Why I Replaced My Most Popular Product

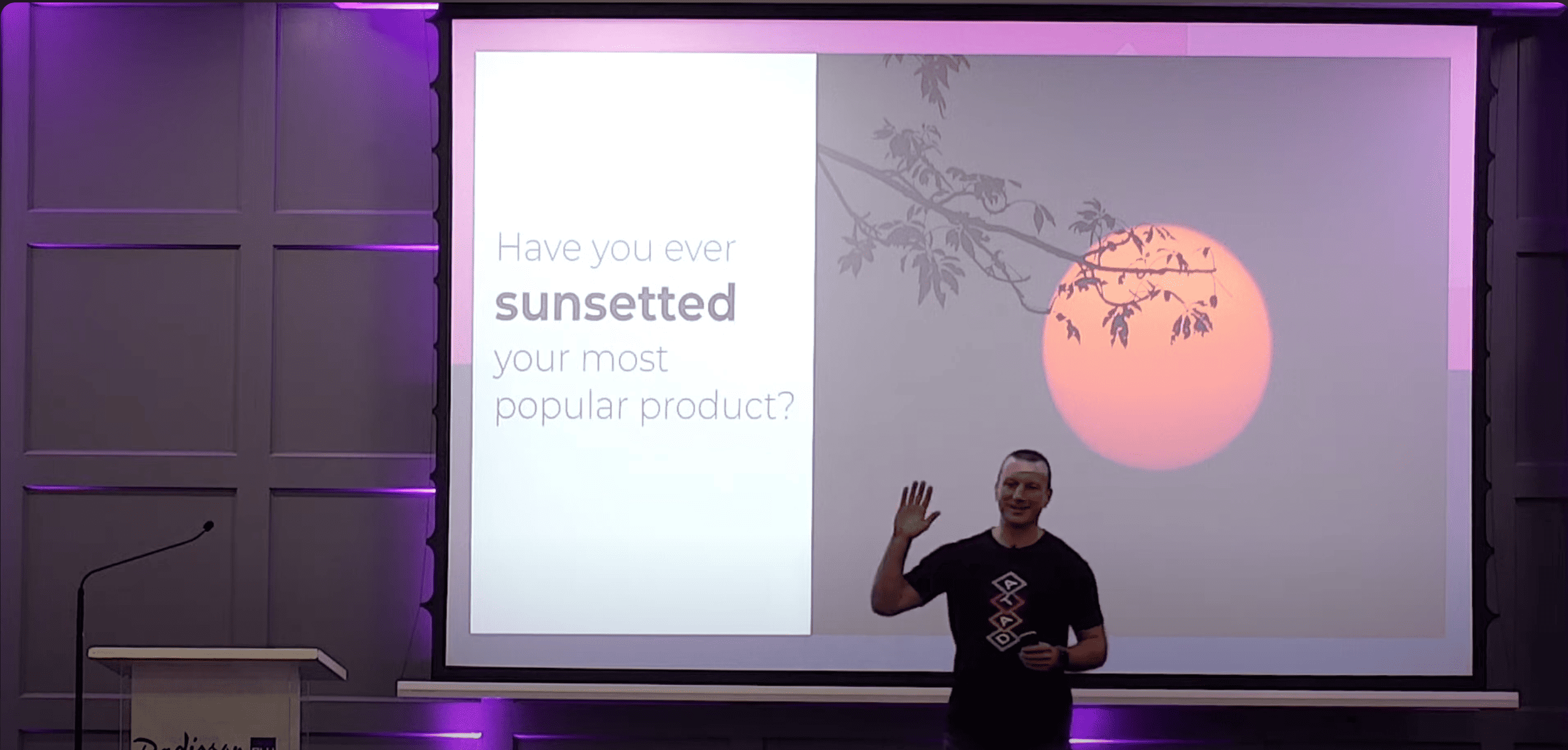

Intriguing title, right? Zyte’s CEO Shane Evans kicked off the presentations with a walk down memory lane. He recounted the story of how Crawlera (Zyte’s proxy management layer) came to be, how it evolved, and why it had to give way to Zyte API. It was both a feature run-through (look what we can do now!) and a deprecation notice for the old tool.

The talk was kind of promotional (it even included our benchmarks!) but still interesting, as it described the problem space. Overall, it was a good way to start the day.

Talk 2. Innovate or Die: The State of the Proxy Industry in 2023

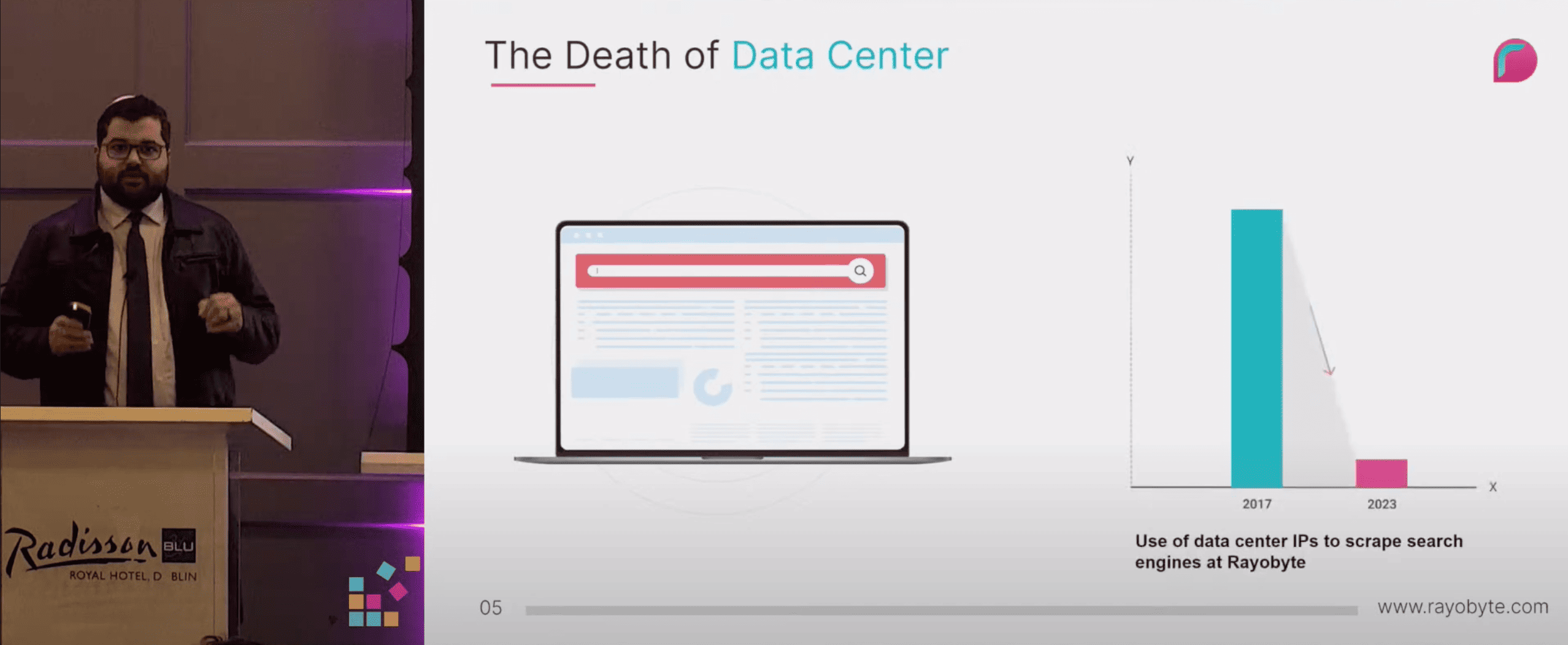

Isaac Coleman, VP of marketing at Rayobyte, gave an obituary for his company’s main product – datacenter proxies. I don’t know what public speaking classes they take, but Rayobyte’s speakers all have the mannerisms of American preachers. Truth be told, it was very fitting for the occasion.

Isaac spoke about the three shifts that decimated datacenter proxies for three major use cases, sometimes overnight. The market for this proxy type has reportedly shrunk and even the remaining major vertical is at risk. Scary, right? Isaac then broke down the cost of web scraping operations and provided a worksheet for calculating your costs. Handy? Yes. Worth watching? If you use proxies, then definitely yes.

Talk 3. Can ChatGPT Solve Web Data Extraction?

Konstantin Lopukhin, head of data science at Zyte, tried to answer what many of us have on our minds: can I use ChatGPT for scraping, to what extent, and is it worth it? Konstantin went over the price considerations of using OpenAI’s APIs, different scraping approaches (generating code vs extracting directly with an LLM), and compared commercial models with open source alternatives. Finally, he demoed an internal tool that is no longer available.

The presentation was born from experience, so it provided concrete numbers and reasoned arguments. It may not age very well due to how fast the tech evolves, but at this moment, I consider the talk super relevant. The audience’s questions were also interesting, as they touched upon the considerations many of us have with LLMs.

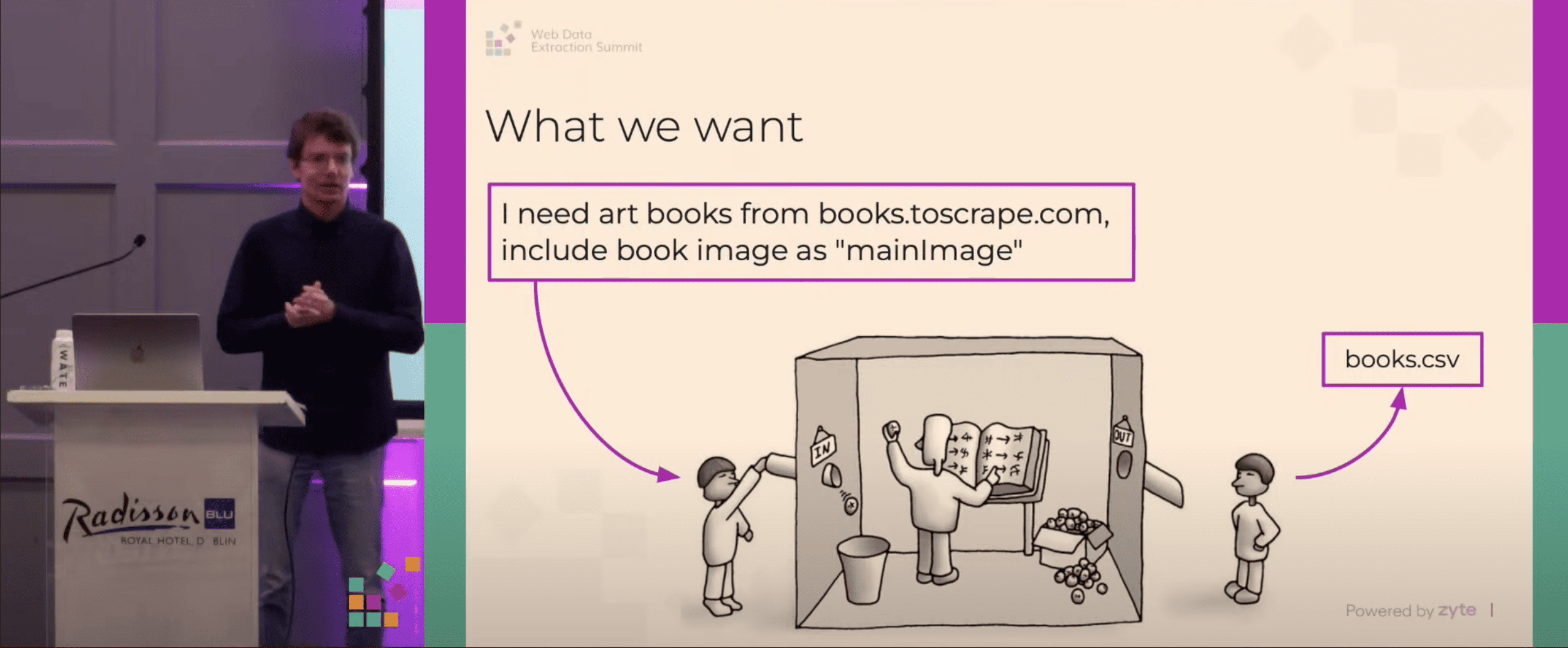

Talk 4. Enterprise-Grade Scraping with AI

Another talk by Zyte’s crew that builds upon the previous presentation, so the two may be worth watching back to back. In particular, Ian from Zyte talked about the problem of horizontal scaling (parsing many pages) and how AI can be used to address it.

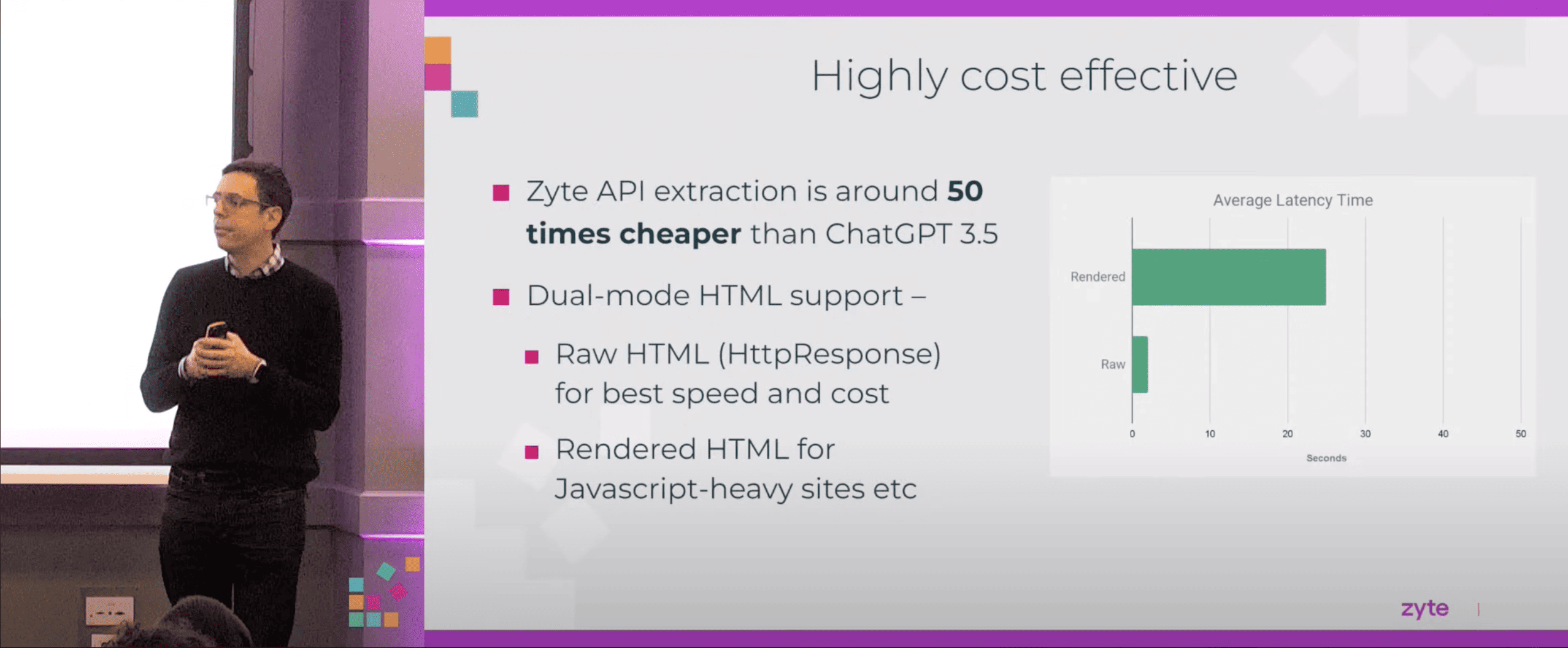

Curiously, the heroes of this story weren’t LLMs: they were briefly mentioned and dismissed as an immature tech. Rather, the hero was Zyte’s own supervised ML model, which the provider has been perfecting for over four years. It runs on every page and is reportedly more accurate and up to 50 times cheaper than GPT-3.5. Ian dove into the model’s innards, while his colleague Adrian demonstrated a no-code wrapper that crawled and parsed an e-commerce page.

Talk 5. Detect, Analyze & Respond. Harnessing Data to Combat Propaganda and Disinformation

Nesin Veli from Identrics gave a flavor presentation on the methods and prevention of cognitive warfare. Doesn’t ring a bell? The term refers to techniques used to manipulate public perception for various ends.

Nesin introduced his company’s web scraping stack and showed how they trained an ML model to recognize hate speech in a news site’s dataset. But to us, the fascinating part was how Identrics applies the tools to combat cognitive warfare. The range of activity is very broad and includes things like narrative tracking across media channels and outlet credibility checks. Considering how prevalent and insidious information warfare has become, this was definitely educational.

Talk 6. Spidermatch: Harnessing Machine Learning and OpenStreetMap to Validate and Enrich Scraped Location Data

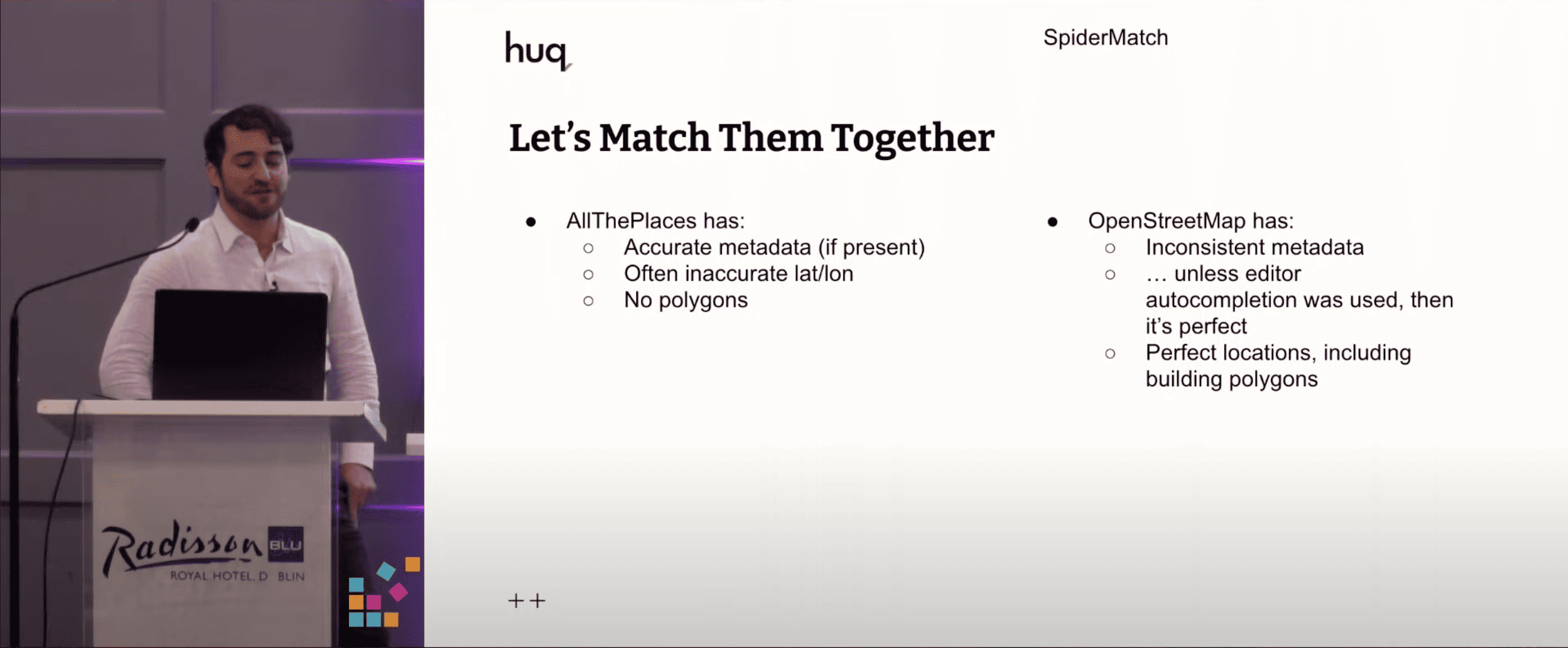

Jimbo Freedman’s company Huq Industries provides popularity, visiting time, and other data points related to geographic areas or objects. To do this, they first need to precisely map the points of interest. You wouldn’t think that’d be a challenge, but Jimbo proved us wrong. Fun fact: some of Huq’s competitors still mark objects by physically visiting most of them!

In brief, the company’s problem space involves scraping thousands of relevant stores (via forked AllThePlaces spiders) and cross-referencing the information with OpenStreetMap to validate accuracy. This raises multiple problems related to metadata and store co-ordinates. Jimbo described his four-step process and how the involvement of LLMs affects the output. To give you a hint: significantly, but hallucinations remain a problem.
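To make the co-ordinate problem more tangible, here’s a minimal Python sketch of one way a scraped store location could be checked against a reference point, such as a matching OpenStreetMap entry. This is our own illustration and not Huq’s actual pipeline: the function names, the dictionary layout, and the 100-metre tolerance are all assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def validate_location(scraped, reference, max_distance_m=100.0):
    """Return (is_valid, distance_m) for a scraped point vs. a reference point.

    Both arguments are dicts with "lat" and "lon" keys (a hypothetical layout).
    The default tolerance is an arbitrary value chosen for illustration.
    """
    distance = haversine_m(scraped["lat"], scraped["lon"],
                           reference["lat"], reference["lon"])
    return distance <= max_distance_m, distance
```

A scraped record that lands far from its reference point would then be flagged for review rather than silently accepted.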

Talk 7. Anatomy of Anti-Bot Protection

This one’s a treat. Fabien Vauchelles, the anti-detect expert at Wiremind, dissected the main bot protection methods. This would be interesting on its own, but Fabien’s French accent, enthusiastic delivery, and custom (presumably AI-made) illustrations really turned the talk into an experience.

Fabien went through the four web scraping layers – IP address, protocol, browser, and behavior – and how precisely anti-bot systems use them to identify bots. There are so many data points they can track… sometimes too many for their own good! The presenter pinpointed the main ones and listed eight steps for tackling increasingly difficult targets. Recommended.

Talk 8. Taming the World Wide Web

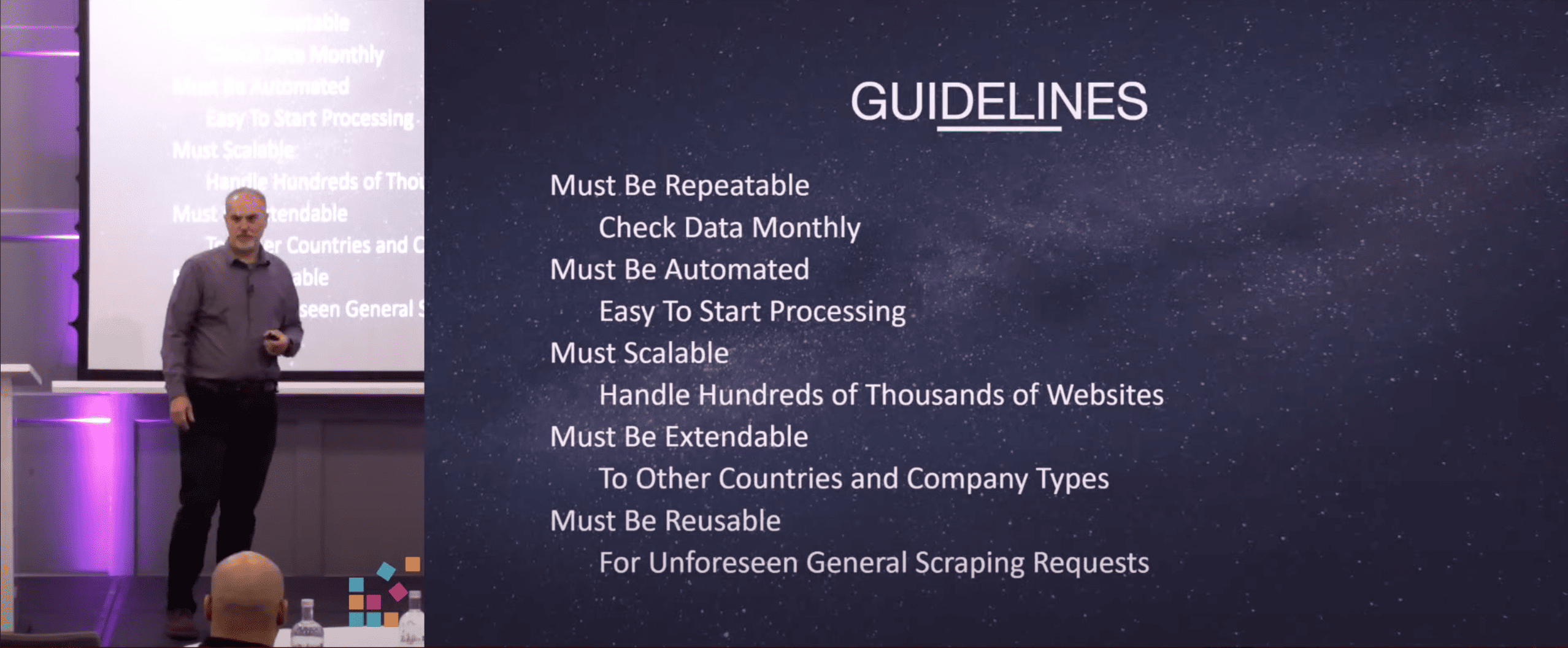

American accent, slides from the early ’00s, and a vague title promising the world… This is what Eric Platow from LexisNexis greets you with. But that’s just the first impression. In reality, Eric walked the audience through a project he had to complete: 1) a million biographical records to scrape each month from thousands of websites, 2) a deadline of six months, and 3) minimal human resources.

The websites were related to lawyers, so they posed some peculiar challenges: old (like really old) page structures, repurposed or squatted domains, and irrelevant pages. Another big challenge was extracting and normalizing the right data; this required fuzzy matching, NLP, and LLMs. In the end, Eric’s efforts saved $3.7M worth of manual labor by 400 people. Watch the talk to learn the specifics.
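If fuzzy matching sounds abstract, here’s a small Python sketch of the general idea: mapping messy scraped values onto a fixed list of canonical ones. It’s our own illustration using the standard library’s `difflib`, not the approach from the talk; the list of titles, the function name, and the 0.8 similarity threshold are all made up for the example.

```python
from difflib import SequenceMatcher

# Hypothetical canonical values; a real project would define its own.
CANONICAL_TITLES = ["Partner", "Associate", "Of Counsel", "Paralegal"]


def normalize_title(raw, choices=CANONICAL_TITLES, threshold=0.8):
    """Return the closest canonical title, or None if nothing is similar enough."""
    cleaned = " ".join(raw.lower().split())  # lowercase and collapse whitespace
    best, best_score = None, 0.0
    for choice in choices:
        # ratio() returns a similarity score between 0.0 and 1.0
        score = SequenceMatcher(None, cleaned, choice.lower()).ratio()
        if score > best_score:
            best, best_score = choice, score
    return best if best_score >= threshold else None
```

With these settings, a misspelled "Asociate" still resolves to "Associate", while an unrelated value such as "Software Engineer" returns `None` and can be routed to a slower method.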

Talk 9. Soaring Highs and Deep Dives of Web Data Extraction in Finance

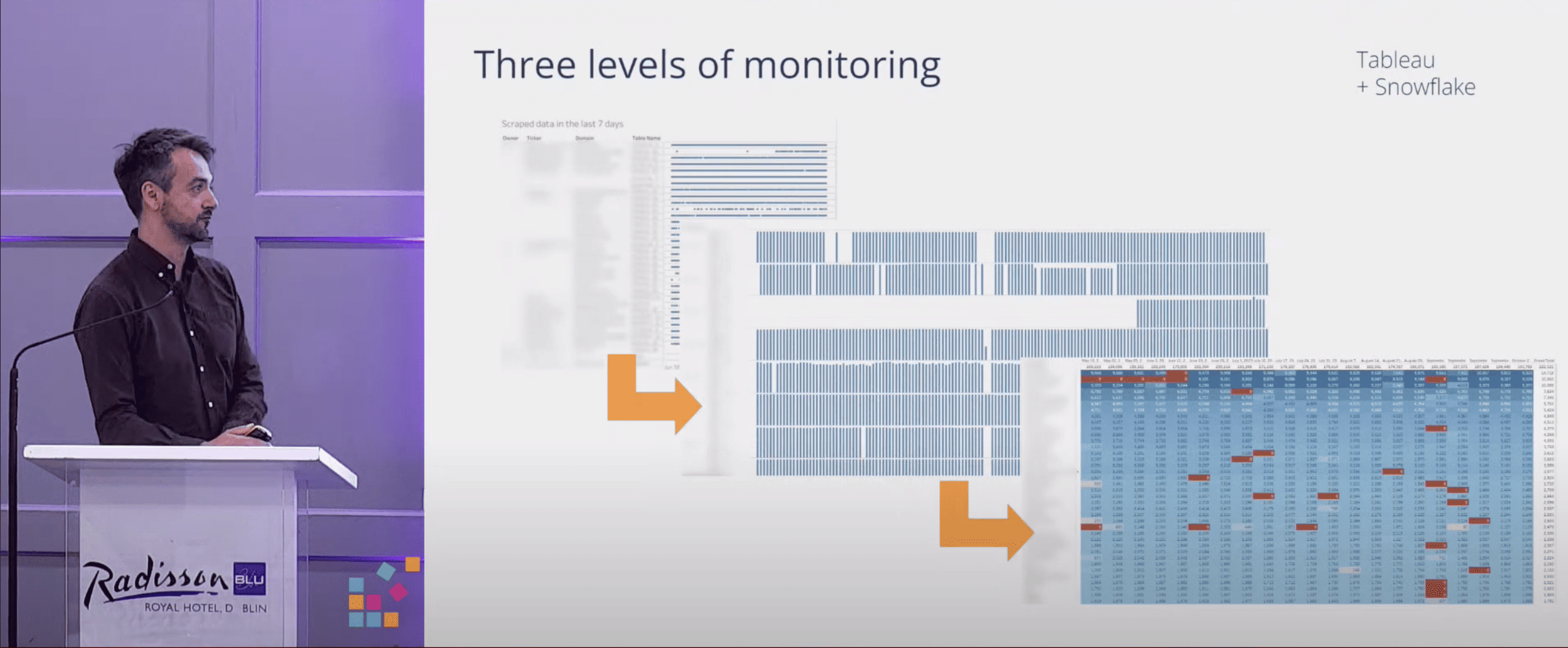

Alex Lokhov from Hatched Analytics delivered another flavor presentation on productizing alternative data for the finance vertical. It had two related but also somewhat separate parts.

The first part illustrated the relevance of alternative data and listed the requirements for productizing it. For example, we learned that financial services always require context and that datasets suffer from something called alpha decay. The second part was more technical, focusing on data storage and especially visual monitoring – the presenter’s strong suit. So, it’s possible to learn something even if you’re not particularly interested in this use case.

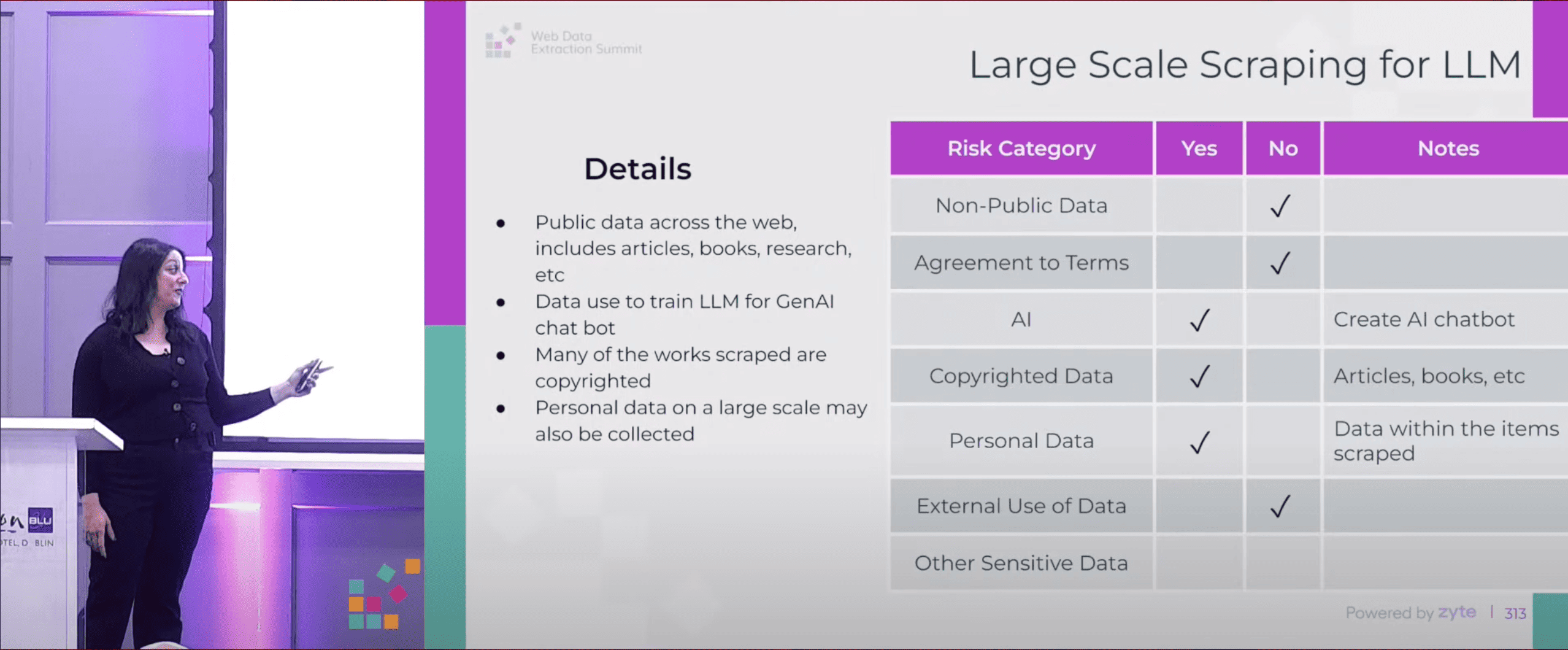

Talk 10. A Step-by-Step Guide to Assessing Your Web Scraping Compliance

Law time! The presentation was delivered by Sanaea Daruwalla, Chief Legal Officer at Zyte. We’ve seen Sanaea multiple times before; based on prior feedback, this year she chose a very specific theme. It covered four popular web scraping use cases, with a checklist of possible legal risks and their mitigation strategies.

To us, this combination really hit the mark – especially given that two of the situations dealt with AI models, a topic that’s extremely pertinent today. Sanaea gave actionable advice and outlined the upcoming regulations likely to affect web scraping operations, such as the EU’s AI Act. All in all, one of the must-watches of the conference.

Talk 11. Using Web Data to Visualise and Analyse EPC ratings

Another tech demo delivered by Neha Setia Nagpal and Daniel Cave. It demonstrated Zyte API’s no-code wrapper for e-commerce product pages, together with its flexible Scrapy Cloud underpinnings.

Basically, Daniel played a data scientist who had a quick project in mind. He used the wrapper to quickly collect the energy efficiency ratings of home appliances and visualize them in Tableau. Neha took the role of an engineer. The scraper’s stock functionality didn’t fully meet Daniel’s needs, so she opened the hood and fixed this by adding a few parameters. Overall, it was an interesting but completely optional presentation.

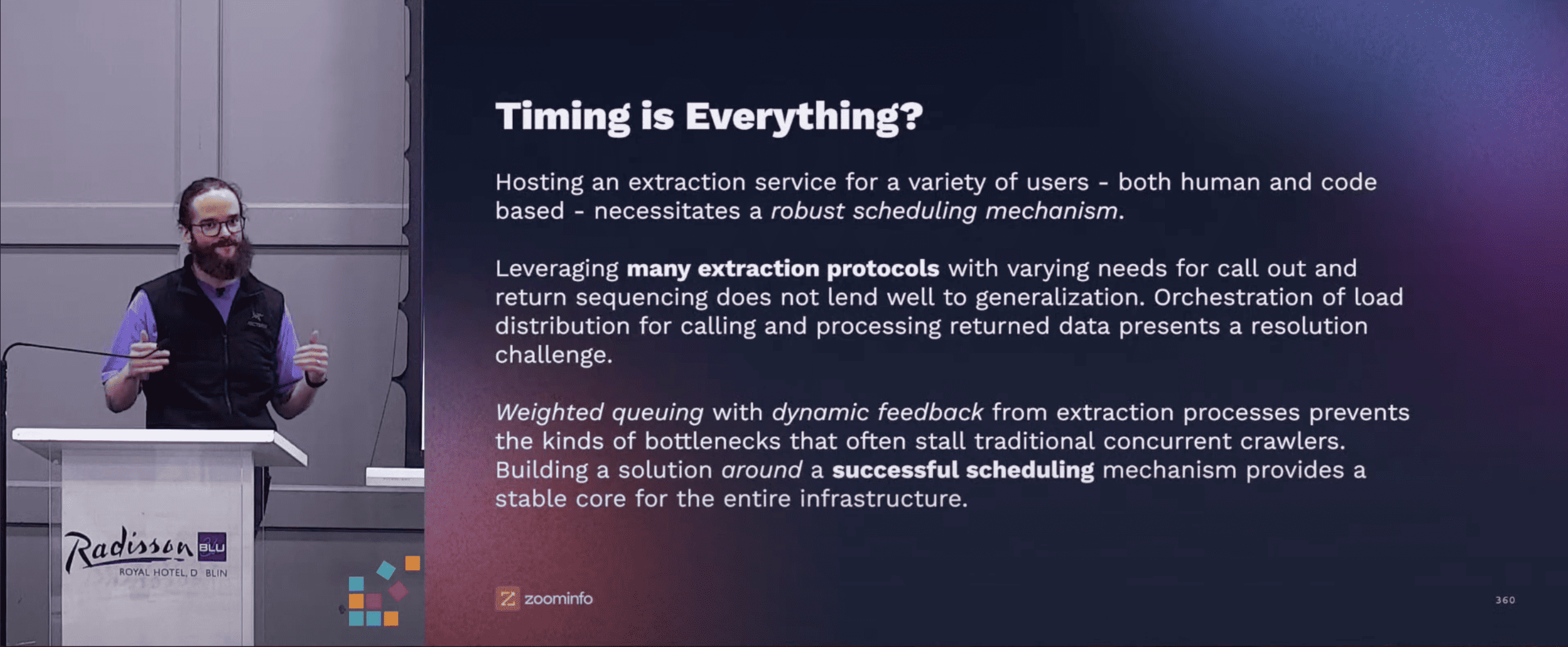

Talk 12. Dynamic Crawling of Heavily Trafficked Complex Web Spaces at Scale

A talk by Andrew Harris from ZoomInfo. Complex spaces are platforms that have many users interacting with them at once: social media, search engines, and similar. Andrew’s challenge was designing a low-code platform that users with complex needs could use simultaneously. The solution, and the main focus of this presentation, was a sophisticated scheduling system with weighted queueing and other elements.
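For readers unfamiliar with weighted queueing, here’s a toy Python sketch of the concept in its simplest form, weighted round-robin. It’s our own simplification and says nothing about how the system in the talk is actually built; the class, its methods, and the queue names are hypothetical.

```python
from collections import deque


class WeightedScheduler:
    """Toy weighted round-robin scheduler over named job queues."""

    def __init__(self):
        self._queues = {}   # queue name -> deque of pending jobs
        self._weights = {}  # queue name -> jobs served per round

    def add_queue(self, name, weight):
        if weight < 1:
            raise ValueError("weight must be a positive integer")
        self._queues[name] = deque()
        self._weights[name] = weight

    def submit(self, name, job):
        self._queues[name].append(job)

    def drain(self):
        """Yield jobs in weighted round-robin order until every queue is empty."""
        while any(self._queues.values()):
            for name, queue in self._queues.items():
                # Serve up to `weight` jobs from this queue, then move on.
                for _ in range(self._weights[name]):
                    if not queue:
                        break
                    yield queue.popleft()


scheduler = WeightedScheduler()
scheduler.add_queue("premium", weight=2)
scheduler.add_queue("free", weight=1)
for job in ("p1", "p2", "p3"):
    scheduler.submit("premium", job)
for job in ("f1", "f2"):
    scheduler.submit("free", job)

order = list(scheduler.drain())  # ["p1", "p2", "f1", "p3", "f2"]
```

The heavier queue gets served more often, but the lighter one is never starved, which is the basic trade-off any scheduler shared by many users has to make.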

Maybe because the talk came so late in the conference and we watched it in one sitting, it was a bit of a slog. The presenter used academic language, with slides that were full of information and didn’t necessarily match what was spoken. If you care about the topic and decide to watch the talk, be prepared to pause it multiple times.

Talk 13. The Future of Data: Web-Scraped Data Marketplaces and the Surge of Demand from the AI Revolution

The final talk of the day featured Andrea Squatrito from Data Boutique, a data marketplace. It consisted of two halves. The first tried to substantiate data marketplaces using arguments that apply to most platforms: mostly economies of scale and easier distribution. The second half was more interesting, as it addressed the challenges of trust and quality assurance. AI was only mentioned in passing.

Conclusion

That was 2023’s Extract Summit! If you found any of the talks interesting, you can watch them on the event’s website. And now, we’ll be waiting for the final conference of the year – Bright Data’s ScrapeCon (which unfortunately had to be delayed due to events in Israel).