How to Scrape Reddit with Python: an Easy Web Scraping Tutorial

This is a step-by-step tutorial on how to scrape Reddit data using Python.

Reddit is a popular social news aggregation platform that holds a lot of valuable information on different topics. You can scrape it to discover market trends, improve your marketing strategy or simply for fun.

The platform was one of the few to give leeway for scrapers. However, in 2023, Reddit significantly increased the price of access to its public API.

This guide will teach you all about Reddit scraping – from what tools you can use to tips on collecting data to avoid web scraping challenges. You’ll also find a step-by-step tutorial on how to scrape a subreddit using Python.

What’s Reddit Web Scraping – the Definition

Reddit scraping is a method of automatically gathering publicly available data from the platform. Before the latest changes, you could scrape Reddit without many restrictions and at little cost: its official API could be used for training AI models, research purposes, or simply for fun. However, the company decided to raise its API prices, and many popular third-party Reddit clients like Apollo had to shut down in response.

With the new pricing, the official Reddit API might be overkill for simple web scraping tasks, so you might want to look into alternatives. If you want to learn or improve your skills, you can build a web scraper yourself with a programming language like Python. It has many free, open-source libraries that are highly customizable. Another option is to buy a commercial scraper, but a word of warning: they’re pretty expensive as well.

Is Scraping Reddit Legal?

Yes. In 2022, the Ninth Circuit Court of Appeals ruled in hiQ Labs v. LinkedIn that scraping publicly available data doesn’t violate the Computer Fraud and Abuse Act. But web scraping isn’t just black or white when it comes to legality.

Even if the data isn’t behind a login, some content is still subject to intellectual property rights. If you’re working with personal information, there might also be other requirements.

But overall, Reddit is more open-minded towards web scraping. According to its privacy policy, the platform doesn’t even track your precise location or check whether your account information is legitimate.

What Data Can You Scrape from Reddit

If you want to scrape data that includes personal information, you need to make sure it’s 1) publicly available, and 2) not protected by copyright law. In essence, you can scrape:

- comments and their replies,

- post titles and their content,

- media files like images or videos that aren’t subject to intellectual property rights,

- whole subreddits,

- number of upvotes/downvotes.

How to Choose a Reddit Scraper

The safest option to get data from Reddit is to use the official API. There are many packages that you can use with it, for example, the Python Reddit API Wrapper (PRAW). Yet you’ll have to comply with all of the Reddit API’s limitations: the new terms state that, on the free tier, authenticated clients can make up to 100 queries per minute, and unauthenticated ones up to 10. What’s more, the API won’t come cheap.
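A per-minute limit translates into a minimum gap between requests: at 100 queries per minute, that’s 0.6 seconds. Below is a minimal sketch of a client-side throttle built on that arithmetic. The MinIntervalThrottle class and its parameters are hypothetical names made up for illustration; they aren’t part of PRAW or Reddit’s API.

```python
import time


class MinIntervalThrottle:
    """Spaces out calls so that at most `max_per_minute` happen per minute."""

    def __init__(self, max_per_minute, clock=time.monotonic, sleep=time.sleep):
        # Minimum number of seconds that must separate two calls.
        self.min_interval = 60.0 / max_per_minute
        self._clock = clock
        self._sleep = sleep
        self._last_call = None

    def wait(self):
        """Block until enough time has passed since the previous call."""
        now = self._clock()
        if self._last_call is not None:
            remaining = self.min_interval - (now - self._last_call)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_call = now


throttle = MinIntervalThrottle(max_per_minute=100)
print(f"Minimum gap between requests: {throttle.min_interval} s")
```

You would call throttle.wait() right before each request. The clock and sleep arguments exist only so the behavior can be checked without real waiting.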

If you want to learn or practice your Python skills, try to build a scraper yourself. Python has many great web scraping libraries and frameworks that are relatively easy to use and more extensible than Reddit’s API. For beginners, we recommend Requests and Beautiful Soup.

Last but not least, you can always go with commercial web scrapers like Apify’s Reddit templates or Decodo’s Social Media Scraping API. Such tools take care of proxy management and browser fingerprinting, and you won’t need to parse the data yourself. Pre-made scrapers are great for collecting large amounts of data but might be overkill for simple web scraping projects.

Tips for Web Scraping Reddit

- Like other websites, Reddit has its own Terms of Service that you have to follow. And Reddit’s pretty generous in this matter – you have the permission to crawl any subreddit in accordance with the robots.txt file, which gives you a lot of leeway.

- Intensive scraping tasks can disrupt Reddit’s functionality, so add delays between your requests. Otherwise, the platform can block you for several hours if you exceed its limits.

- Be aware that there are two Reddit versions: old and new. Even though the new Reddit is definitely more modern and comfortable to use, it includes dynamic content, which is a challenge to scrape. You’ll need to use a headless browser library like Selenium. If you don’t know how to work with such a tool, stick to the static Reddit interface – old.reddit.com.

- Reddit allows you to retrieve information in JSON format, which is easy to parse. You can do that by adding .json to your URL. For example, https://old.reddit.com/r/playstation.json
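As a rough illustration of the .json tip, the sketch below builds the JSON URL and pulls a few fields out of a listing. It assumes the response follows Reddit’s listing layout, with posts stored under data, then children, then data. The helper names and the sample data are made up for the example, and no request is actually sent.

```python
import json


def to_json_url(page_url):
    """Turn a subreddit page URL into its JSON counterpart by appending .json."""
    return page_url.rstrip("/") + ".json"


def extract_posts(listing):
    """Pull a few fields out of every post in a parsed listing."""
    posts = []
    for child in listing["data"]["children"]:
        post = child["data"]
        posts.append({
            "title": post.get("title"),
            "author": post.get("author"),
            "score": post.get("score"),
        })
    return posts


# A tiny hand-written sample in the shape of a listing response (not real data).
sample_response = """
{"kind": "Listing",
 "data": {"after": "t3_example",
          "children": [
            {"kind": "t3",
             "data": {"title": "Example post", "author": "example_user", "score": 42}}
          ]}}
"""

print(to_json_url("https://old.reddit.com/r/playstation/"))
print(extract_posts(json.loads(sample_response)))
```

In a real script, the text passed to json.loads() would be the body of the response you downloaded from the .json URL.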

How to Scrape a Subreddit with Python: A Step-By-Step Tutorial

In this tutorial, we’ll be scraping the old Reddit version, specifically the PlayStation subreddit.

Necessary Tools to Start Scraping Reddit

For this tutorial, we chose two popular yet easy-to-use Python web scraping libraries: Requests and Beautiful Soup.

Requests is an HTTP client that allows you to download pages. It lets you fetch data with a few lines of code, so you don’t need to write much yourself. You can customize the requests for more advanced Reddit web scraping by sending cookies, modifying request headers, and changing other parameters.

Beautiful Soup is a data parsing library that extracts data from the HTML you’ve downloaded into a readable format for further use. Similar to Requests, the parser is simple to pick up.

Even though Reddit has more leeway than other social media platforms, we still recommend using a proxy server and adding headers to the request.

A proxy changes your real IP address and location, which helps to avoid request limitations and IP address blocks. In this example we’ll be using residential proxies, but datacenter proxies will also do the job.
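Requests takes proxies as a dictionary that maps a URL scheme to a proxy URL. The sketch below shows one way to assemble that dictionary, including optional credentials. The build_proxies() helper, the host proxy.example.com, and the login details are placeholders made up for this example; your proxy provider’s format may differ.

```python
from urllib.parse import quote


def build_proxies(host, port, username=None, password=None):
    """Return a proxies mapping in the format Requests accepts."""
    if username and password:
        # Percent-encode credentials so characters like "@" don't break the URL.
        credentials = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    else:
        credentials = ""
    proxy_url = f"http://{credentials}{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


proxies = build_proxies("proxy.example.com", 8080, "user", "p@ss")
print(proxies["https"])  # http://user:p%40ss@proxy.example.com:8080
```

The resulting dictionary can be passed to requests.get() through its proxies argument.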

Prerequisites

Before we start, you’ll need to:

- have the latest Python version installed on your computer,

- install Requests by running pip install requests in your operating system’s terminal,

- install Beautiful Soup by running pip install beautifulsoup4, and

- choose a code editor like Visual Studio Code or even your operating system’s editor.

Preliminaries

Step 1. Import the Requests, Beautiful Soup, and datetime libraries. The latter is part of Python’s standard library and handles dates and times.

import requests

from bs4 import BeautifulSoup

from datetime import datetime

Step 2. Then, set the URL of the PlayStation subreddit’s main page.

start_url = "https://old.reddit.com/r/playstation/"

Note: If you want to scrape new submissions, add /new/ to the end of the URL.

Step 3. Set up your proxies and request headers. In this example we’ll provide headers, but keep in mind that after a while they might be outdated.

proxy = "server:port"

headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    "Accept-Encoding": "gzip, deflate, br",
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://old.reddit.com/",
    "Sec-Ch-Ua": "\"Chromium\";v=\"116\", \"Not)A;Brand\";v=\"24\", \"Google Chrome\";v=\"116\"",
    "Sec-Ch-Ua-Mobile": "?0",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "Sec-Fetch-Dest": "document",
    "Sec-Fetch-Mode": "navigate",
    "Sec-Fetch-Site": "cross-site",
    "Sec-Fetch-User": "?1",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36",
}

Step 4. Then, indicate the number of pages you want to scrape. Let’s set it to three.

num_pages = 3

Starting the Script

Set up the main() function, which starts the scrape and prints out the results.

def main():
    # Starting on page 1.
    page = 1
    # List for storing all of the scraped output.
    scraped_content = []
    # Calling the scrape_page() function to begin the work.
    scrape_page(start_url, page, scraped_content)
    # Printing out the output after scraping is finished.
    print(scraped_content)


if __name__ == "__main__":
    main()

Scraping the Subreddit

Step 1. Let’s scrape the page’s URL.

def scrape_page(url: str, page_num: int, scraped_content: list):

if page_num <= num_pages:

try:

resp = requests.get(

url,

proxies={'http':proxy, 'https':proxy},

headers=headers)

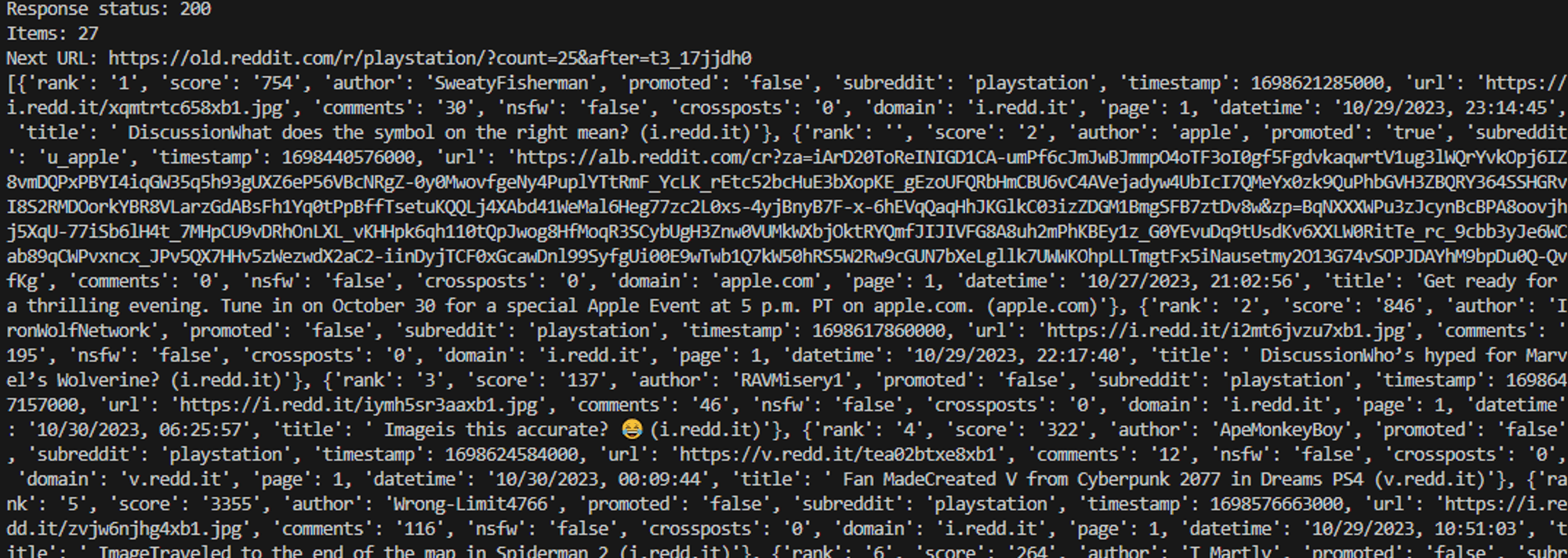

print (f"Response status: {resp.status_code}")

resp_content = resp.text

Step 2. Now, we’re going to send the response content to the parse_page() function.

scraped_items, next_url = parse_page(resp_content, page_num)

# Returned scraped items are added to the scraped_content list.

scraped_content.extend(scraped_items)

next_page_num = page_num + 1

Step 3. Then, call the scrape_page() function to scrape the next page.

scrape_page(next_url, next_page_num, scraped_content)

except Exception as exc:

Step 4. If the request fails, print the exception.

# Retry functionality can be implemented here.

print (exc)
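The comment in the except branch mentions that retry functionality can be added. One possible way to do it is sketched below: a small wrapper that retries a failed call and doubles the delay after each attempt. The fetch_with_retries() name, its parameters, and the backoff schedule are assumptions made for this example rather than part of the tutorial’s script.

```python
import time


def fetch_with_retries(fetch, url, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying with exponential backoff on any exception."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except Exception as exc:
            # Give up and re-raise once the last attempt has failed.
            if attempt == max_attempts:
                raise
            delay = base_delay * 2 ** (attempt - 1)
            print(f"Attempt {attempt} failed ({exc}); retrying in {delay} s")
            sleep(delay)
```

Inside scrape_page(), the fetch argument could be a small function that wraps the requests.get() call with the proxy and headers. Pausing between attempts also follows the earlier advice to leave delays between requests.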

Parsing the Page

Step 1. The following function parses all of the entries of the Reddit page. It returns a list of dictionary objects that contain the scraped information as well as the URL of the next page.

def parse_page(content: str, page: int):

1) Parse the page content with BeautifulSoup.

soup = BeautifulSoup(content, "html.parser")

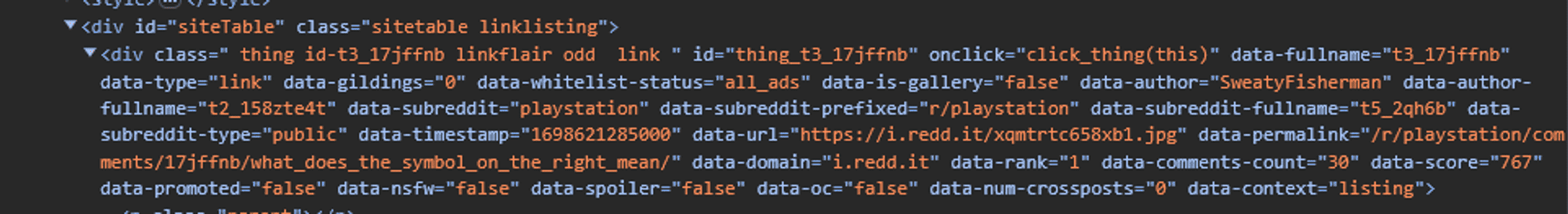

2) Find all divs that have a class thing.

items = soup.find_all("div", class_="thing")

3) Prepare a list that will hold a list of dictionaries.

scraped_items = []

4) Print out the amount found in the page.

print (f"Items: {len(items)}")

5) Iterate through each found item (div element).

for item in items:

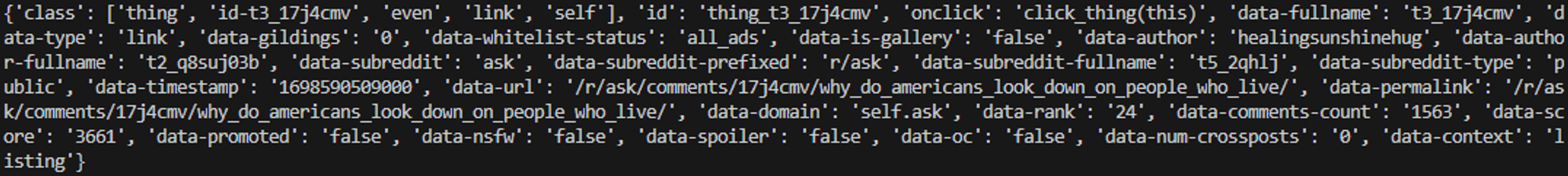

Step 2. A lot of information can be extracted from the div attributes. You can create a dictionary item_info and immediately add information that interests you.

        item_info = {
            "rank": item.attrs["data-rank"],
            "score": item.attrs["data-score"],
            "author": item.attrs["data-author"],
            "promoted": item.attrs["data-promoted"],
            "subreddit": item.attrs["data-subreddit"],
            "timestamp": int(item.attrs["data-timestamp"]),
            "url": item.attrs["data-url"],
            "comments": item.attrs["data-comments-count"],
            "nsfw": item.attrs["data-nsfw"],
            "crossposts": item.attrs["data-num-crossposts"],
            "domain": item.attrs["data-domain"],
            "page": page,
        }

Step 3. The timestamp is in milliseconds. Here you need to convert it to seconds and format it into a readable string.

        item_info["datetime"] = datetime.fromtimestamp(
            item_info["timestamp"] / 1000).strftime("%m/%d/%Y, %H:%M:%S")
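Note that datetime.fromtimestamp() without a time zone argument returns local time, so the same post can show different times on different machines. If you’d rather get a consistent result, one option is to convert to UTC explicitly, as in this small sketch. The format_timestamp_ms() helper is a hypothetical name and the input is an arbitrary example value.

```python
from datetime import datetime, timezone


def format_timestamp_ms(timestamp_ms):
    """Convert a millisecond Unix timestamp to a UTC date string."""
    # Divide by 1000 because fromtimestamp() expects seconds.
    moment = datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc)
    return moment.strftime("%m/%d/%Y, %H:%M:%S")


print(format_timestamp_ms(1700000000000))  # 11/14/2023, 22:13:20
```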

Step 4. The title is within a <p> tag with a title class. Use the BeautifulSoup .find() function to locate the element within the item div and extract its text.

        item_info["title"] = item.find("p", class_="title").get_text()

Step 5. Now, append the resulting dictionary to the scraped_items list.

        scraped_items.append(item_info)

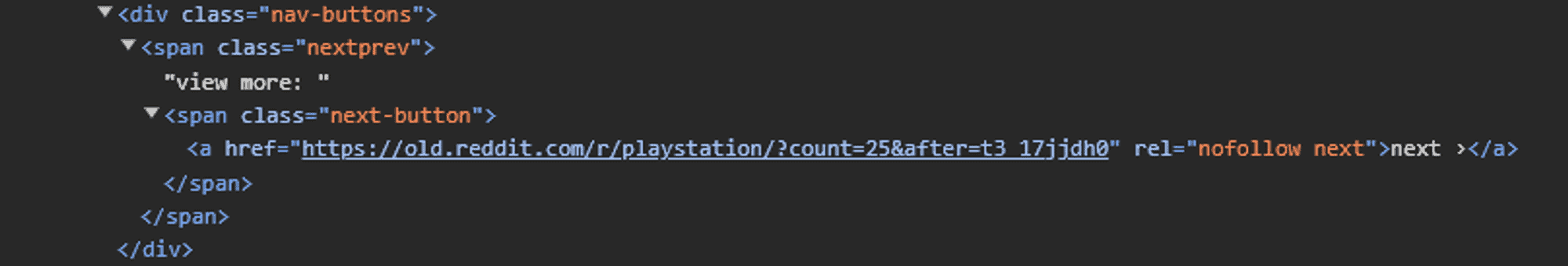

Step 6. After parsing all of the items, get the URL of the next page to scrape. You can find it by locating the <span> tag with the next-button class and then navigating down the parse tree to extract the href attribute from its <a> child element.

    next_url = soup.find("span", class_="next-button").a.attrs["href"]
    print(f"Next URL: {next_url}")
    # Finally, we return the list of scraped items as well as the URL of the
    # next page.
    return scraped_items, next_url
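The parsing code assumes that every attribute and the next button are always present. If one is missing, for example on a page that has no next button, the lookup raises an exception. Here’s a more defensive variant, offered as a sketch only: the parse_items() name and the sample HTML are made up to imitate the structure described in this section, and only a few fields are extracted to keep it short.

```python
from bs4 import BeautifulSoup

# Hand-written HTML that imitates the structure described above (not real data).
sample_html = """
<div class="thing" data-author="example_user" data-score="42">
  <p class="title"><a href="/r/playstation/comments/abc/">Example post</a></p>
</div>
<div class="thing" data-author="another_user">
  <p class="title"><a href="/r/playstation/comments/def/">Second post</a></p>
</div>
"""


def parse_items(content):
    """Return (items, next_url); next_url is None if there is no next button."""
    soup = BeautifulSoup(content, "html.parser")
    items = []
    for item in soup.find_all("div", class_="thing"):
        title_tag = item.find("p", class_="title")
        items.append({
            # .get() returns None instead of raising KeyError when missing.
            "author": item.attrs.get("data-author"),
            "score": item.attrs.get("data-score"),
            "title": title_tag.get_text(strip=True) if title_tag else None,
        })
    # Guard against a missing next button instead of failing on None.
    next_button = soup.find("span", class_="next-button")
    has_link = next_button is not None and next_button.a is not None
    next_url = next_button.a.attrs.get("href") if has_link else None
    return items, next_url


items, next_url = parse_items(sample_html)
print(items)
print(next_url)
```

With this approach, the calling code can stop paginating when next_url is None instead of relying on the exception handler.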

Here’s the full code:

import requests
from bs4 import BeautifulSoup
from datetime import datetime

start_url = "https://old.reddit.com/r/playstation/"
# To scrape new submissions: https://old.reddit.com/r/playstation/new/

proxy = "server:port"

headers = {
    "Accept": "text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,application/signed-exchange;v=b3;q=0.7",
    "Accept-Encoding": "gzip, deflate, br",
    "Accept-Language": "en-US,en;q=0.9",
    "Referer": "https://old.reddit.com/",
    "Sec-Ch-Ua": "\"Chromium\";v=\"116\", \"Not)A;Brand\";v=\"24\", \"Google Chrome\";v=\"116\"",
    "Sec-Ch-Ua-Mobile": "?0",
    "Sec-Ch-Ua-Platform": "\"Windows\"",
    "Sec-Fetch-Dest": "document",
    "Sec-Fetch-Mode": "navigate",
    "Sec-Fetch-Site": "cross-site",
    "Sec-Fetch-User": "?1",
    "Upgrade-Insecure-Requests": "1",
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/116.0.0.0 Safari/537.36",
}

# Number of pages to scrape.
num_pages = 3


def parse_page(content: str, page: int):
    # Parsing the page content with BeautifulSoup.
    soup = BeautifulSoup(content, "html.parser")
    # Finding all divs that have a class "thing".
    items = soup.find_all("div", class_="thing")
    # Preparing a list that will hold the scraped dictionaries.
    scraped_items = []
    # Printing out the number of items found on the page.
    print(f"Items: {len(items)}")
    # Iterating through each found item (div element).
    for item in items:
        # We can create a dictionary item_info and immediately add information.
        item_info = {
            "rank": item.attrs["data-rank"],
            "score": item.attrs["data-score"],
            "author": item.attrs["data-author"],
            "promoted": item.attrs["data-promoted"],
            "subreddit": item.attrs["data-subreddit"],
            "timestamp": int(item.attrs["data-timestamp"]),
            "url": item.attrs["data-url"],
            "comments": item.attrs["data-comments-count"],
            "nsfw": item.attrs["data-nsfw"],
            "crossposts": item.attrs["data-num-crossposts"],
            "domain": item.attrs["data-domain"],
            "page": page,
        }
        # The timestamp is in milliseconds. Here we convert it to seconds and
        # format it into a human-readable string.
        item_info["datetime"] = datetime.fromtimestamp(
            item_info["timestamp"] / 1000).strftime("%m/%d/%Y, %H:%M:%S")
        # The title is contained within a <p> tag with a "title" class.
        # Here we use the BeautifulSoup .find() function to locate the element
        # within the item div and extract its text.
        item_info["title"] = item.find("p", class_="title").get_text()
        # Now just append the resulting dictionary to the scraped_items list.
        scraped_items.append(item_info)
    # After parsing all of the items, we can get the URL of the next page to
    # scrape. We can find it by finding the span tag with the class of
    # "next-button" and navigating down the parse tree to the <a> child element
    # and extracting its "href" attribute.
    next_url = soup.find("span", class_="next-button").a.attrs["href"]
    print(f"Next URL: {next_url}")
    # Finally, we return the list of scraped items as well as the URL of the
    # next page.
    return scraped_items, next_url


def scrape_page(url: str, page_num: int, scraped_content: list):
    if page_num <= num_pages:
        try:
            resp = requests.get(
                url,
                proxies={"http": proxy, "https": proxy},
                headers=headers)
            print(f"Response status: {resp.status_code}")
            resp_content = resp.text
            # Calling the parse_page() function to return a list containing
            # dictionaries of parsed content and the URL of the next page to
            # scrape.
            scraped_items, next_url = parse_page(resp_content, page_num)
            # Returned scraped items are added to the scraped_content list.
            scraped_content.extend(scraped_items)
            next_page_num = page_num + 1
            # Calling the scrape_page() function again to scrape the next page
            # now that we have its URL.
            scrape_page(next_url, next_page_num, scraped_content)
        except Exception as exc:
            # In case something happens and the request fails, print the
            # exception.
            # Retry functionality can be implemented here.
            print(exc)


def main():
    # Starting on page 1.
    page = 1
    # List for storing all of the scraped output.
    scraped_content = []
    # Calling the scrape_page() function to begin work.
    scrape_page(start_url, page, scraped_content)
    # Printing out the output after scraping is finished.
    print(scraped_content)


if __name__ == "__main__":
    main()
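The script prints the scraped list of dictionaries to the terminal. If you’d like to keep the results, one simple option is to serialize them to CSV with Python’s standard csv module. The sketch below is an optional extra rather than part of the tutorial’s script; the items_to_csv() helper and the sample record are made up for illustration.

```python
import csv
import io


def items_to_csv(items, fieldnames):
    """Serialize a list of dictionaries to CSV text, keeping only fieldnames."""
    buffer = io.StringIO()
    # extrasaction="ignore" skips dictionary keys that aren't in fieldnames.
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(items)
    return buffer.getvalue()


# A made-up record in the shape produced by the parsing step.
sample_items = [{"title": "Example post", "score": "42", "page": 1}]
print(items_to_csv(sample_items, ["title", "score"]))
```

To save the output to disk, you could write the returned text to a file opened with newline="" and encoding="utf-8".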