Oxylabs Launches Its Fourth Scraping Tool: Real Estate Scraper API

The provider also adds two new features applicable to all scraper APIs.


Lithuanian proxy provider Oxylabs has introduced its new Real Estate Scraper API. The tool is optimized for gathering property data from popular real estate websites such as Zillow, Zoopla, and Redfin. It can collect information such as pricing, location, property type, and amenities.

Customers can now choose from four scraper APIs: SERP Scraper API, E-Commerce Scraper API, Real Estate Scraper API, and Web Scraper API.

New Real Estate Scraper API

Real Estate Scraper API runs on Oxylabs’ residential proxy network, with 195 locations worldwide. You can target any location from the pool.

The API can scrape multiple pages at once, with up to 1,000 URLs per batch. It includes JavaScript rendering, a built-in proxy rotator, automatic retries, and the ability to bypass anti-bot systems.
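To illustrate the 1,000-URL batch limit, here is a minimal Python sketch that splits a longer URL list into compliant batches and serializes each one as a JSON request body. The payload field names (`url`, `render_js`) and the example URLs are hypothetical placeholders, not Oxylabs’ documented request schema.

```python
import json

MAX_URLS_PER_BATCH = 1_000  # batch limit stated in the article


def chunk_urls(urls, size=MAX_URLS_PER_BATCH):
    """Split a list of URLs into consecutive batches of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_batch_payload(urls, render_js=False):
    """Serialize one batch as a JSON body.

    The field names are hypothetical placeholders, not Oxylabs' real schema.
    """
    payload = {"url": urls}
    if render_js:
        payload["render_js"] = True  # hypothetical flag for JavaScript rendering
    return json.dumps(payload)


if __name__ == "__main__":
    listing_urls = [f"https://example.com/listing/{n}" for n in range(2_500)]
    batches = chunk_urls(listing_urls)
    print([len(batch) for batch in batches])  # [1000, 1000, 500]
```

Each batch would then be sent as a separate request, so 2,500 URLs need three requests rather than one.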

The API can be integrated either as a proxy server or through API requests. The latter offers more features, such as sending batch requests and delivering results to cloud storage. However, the tool doesn’t parse data: you get results in raw HTML, or as a PNG if you enable JavaScript rendering.
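The difference between the two integration modes can be sketched with Python’s standard `urllib.request`. In proxy mode, ordinary HTTP requests are routed through a proxy address; in API mode, each job is described in a JSON body posted to an endpoint. The host, port, endpoint URL, and payload field below are hypothetical placeholders, and authentication for the API request is omitted; the real values would come from Oxylabs’ documentation.

```python
import json
import urllib.request

# Hypothetical placeholders, not real Oxylabs endpoints.
PROXY_HOST = "proxy.example.com"
PROXY_PORT = 60000
API_ENDPOINT = "https://api.example.com/v1/queries"


def proxy_opener(user, password):
    """Proxy-style integration: route ordinary HTTP(S) requests through the scraper."""
    proxy_url = f"http://{user}:{password}@{PROXY_HOST}:{PROXY_PORT}"
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


def api_request(target_url):
    """API-style integration: describe the job in a JSON body (auth omitted)."""
    body = json.dumps({"url": target_url}).encode("utf-8")  # hypothetical field name
    return urllib.request.Request(
        API_ENDPOINT,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Neither function sends any traffic by itself: calling `opener.open(url)` on the returned opener, or `urllib.request.urlopen(request)`, would perform the actual request.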

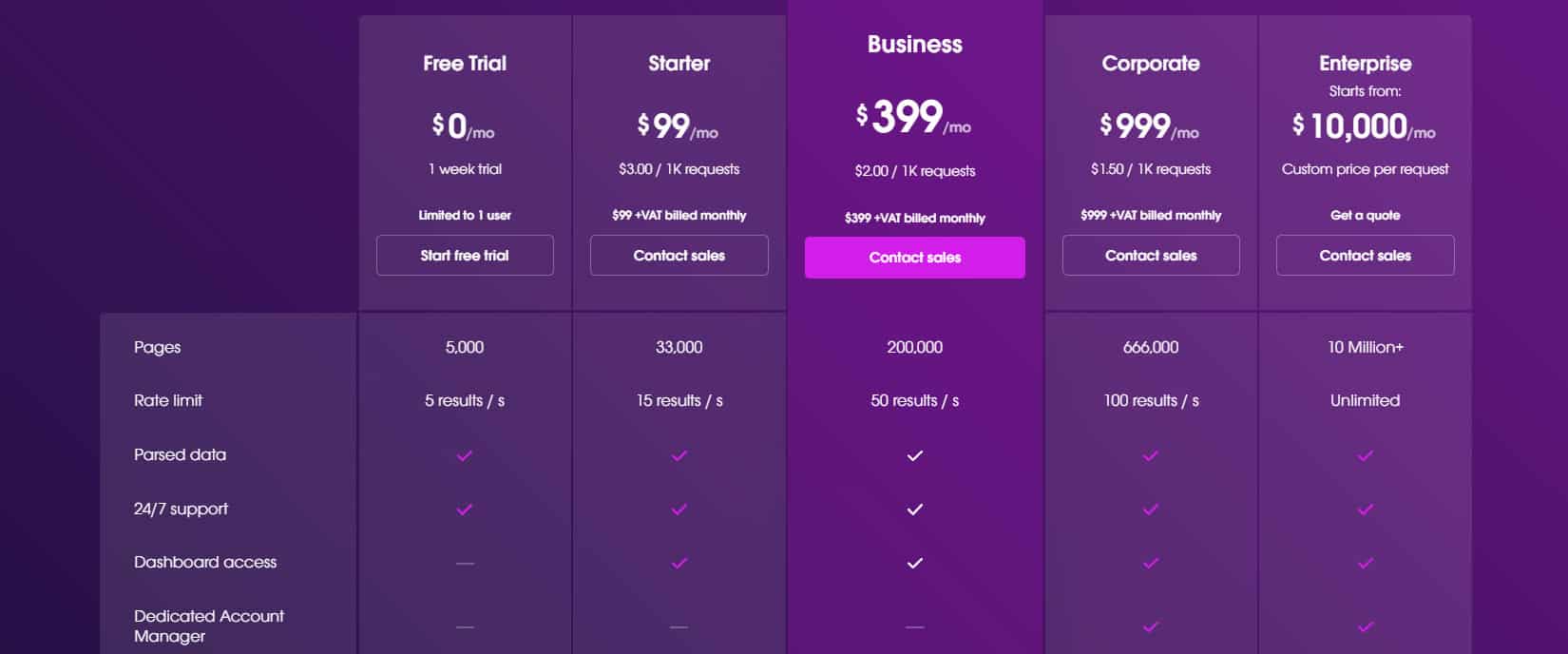

Pricing is based on successful requests, and a 7-day free trial is available. There are four pricing plans, the cheapest of which costs $99.

Compared to Oxylabs’ other products, Real Estate Scraper API is among the cheapest per 1,000 requests. It costs the same as Web Scraper API, which likewise offers no parsing functionality.

| Price per 1,000 requests | Starter | Business | Corporate |
| --- | --- | --- | --- |
| Real Estate Scraper API | $1.30 | $1.00 | $0.60 |
| SERP Scraper API | $3.40 | $2.50 | $1.90 |
| E-Commerce Scraper API | $3.00 | $2.00 | $1.50 |
| Web Scraper API | $1.30 | $1.00 | $0.60 |
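Because billing is per successful request and the table lists prices per 1,000 requests, a rough cost estimate is simple arithmetic. This sketch assumes only successful requests count and ignores each plan’s fixed price (such as the $99 cheapest plan); the product and tier names are taken from the pricing table, and the function name is hypothetical.

```python
from decimal import Decimal

# USD per 1,000 requests, copied from the pricing table.
PRICE_PER_1K = {
    "Real Estate Scraper API": {"Starter": "1.30", "Business": "1.00", "Corporate": "0.60"},
    "SERP Scraper API": {"Starter": "3.40", "Business": "2.50", "Corporate": "1.90"},
    "E-Commerce Scraper API": {"Starter": "3.00", "Business": "2.00", "Corporate": "1.50"},
    "Web Scraper API": {"Starter": "1.30", "Business": "1.00", "Corporate": "0.60"},
}


def estimate_cost(product, tier, successful_requests):
    """Estimated USD cost, billing only successful requests.

    Simplification: ignores the fixed price of each plan.
    """
    rate = Decimal(PRICE_PER_1K[product][tier])
    return (rate * successful_requests / 1000).quantize(Decimal("0.01"))


if __name__ == "__main__":
    for product in ("Real Estate Scraper API", "SERP Scraper API"):
        print(product, estimate_cost(product, "Starter", 100_000))
    # Real Estate Scraper API 130.00
    # SERP Scraper API 340.00
```

`Decimal` is used instead of `float` so that cent amounts such as $1.30 are represented exactly.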

New Crawling and Scheduling Features

Oxylabs has also added two new features to its scraper APIs: Crawler and Scheduler. Both come bundled with all four scrapers.

Crawler lets you crawl any website. It is flexible: you can traverse all pages on a site, set the crawling depth, and apply regex-based filters. URLs scraped with Crawler are billed at the same rate as requests to Oxylabs’ regular scraper APIs.
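The Crawler options (full-site traversal, a depth limit, and regex filters) correspond to a standard depth-limited breadth-first search. The sketch below is a generic illustration of that technique, not Oxylabs’ implementation: it walks a hypothetical in-memory link graph instead of fetching pages, and the `crawl` function and its parameters are invented for the example.

```python
import re
from collections import deque


def crawl(start, links, max_depth, pattern):
    """Depth-limited breadth-first crawl over an in-memory link graph.

    `links` maps each URL to the URLs it links to (a stand-in for fetching
    and parsing real pages). Only links matching the regex `pattern` are
    followed, and pages more than `max_depth` hops from `start` are skipped.
    """
    url_filter = re.compile(pattern)
    visited = {start}
    order = []
    queue = deque([(start, 0)])
    while queue:
        url, depth = queue.popleft()
        order.append(url)
        if depth == max_depth:
            continue  # depth limit reached: don't expand this page's links
        for nxt in links.get(url, []):
            if nxt not in visited and url_filter.search(nxt):
                visited.add(nxt)
                queue.append((nxt, depth + 1))
    return order


if __name__ == "__main__":
    site = {
        "/": ["/homes/1", "/homes/2", "/about"],
        "/homes/1": ["/homes/1/photos", "/homes/3"],
        "/homes/2": ["/"],
        "/homes/3": ["/homes/4"],
    }
    print(crawl("/", site, max_depth=2, pattern=r"^/homes/\d+$"))
    # ['/', '/homes/1', '/homes/2', '/homes/3']
```

Here the filter excludes `/about` and `/homes/1/photos`, and the depth limit stops the crawl before `/homes/4`.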

Scheduler, on the other hand, is a free feature that lets you automate scraping jobs using cron expressions. You can then fetch the results from Oxylabs’ servers or have them sent directly to cloud storage.
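Since Scheduler relies on cron expressions, it may help to see how such an expression decides when a job fires. Below is a minimal matcher for the standard five-field syntax (minute, hour, day of month, month, day of week) supporting `*`, lists, ranges, and steps. It is a generic sketch of cron semantics, not Oxylabs’ scheduler, and it omits month and weekday names as well as the `7` alias for Sunday.

```python
from datetime import datetime


def _field_matches(field, value, low, high):
    """Check one cron field such as "*", "5", "1,15", "9-17", or "*/10"."""
    for part in field.split(","):
        has_step = "/" in part
        step = 1
        if has_step:
            part, step_text = part.split("/", 1)
            step = int(step_text)
        if part == "*":
            start, end = low, high
        elif "-" in part:
            first, last = part.split("-", 1)
            start, end = int(first), int(last)
        else:
            start = int(part)
            end = high if has_step else start  # "5/10" means "from 5, every 10"
        if start <= value <= end and (value - start) % step == 0:
            return True
    return False


def cron_matches(expr, when):
    """Return True if the datetime `when` matches a 5-field cron expression."""
    minute, hour, dom, month, dow = expr.split()
    cron_dow = (when.weekday() + 1) % 7  # cron: Sunday = 0; Python: Monday = 0
    dom_ok = _field_matches(dom, when.day, 1, 31)
    dow_ok = _field_matches(dow, cron_dow, 0, 6)
    # Classic cron rule: if both day fields are restricted, either may match.
    if not dom.startswith("*") and not dow.startswith("*"):
        day_ok = dom_ok or dow_ok
    else:
        day_ok = dom_ok and dow_ok
    return (
        _field_matches(minute, when.minute, 0, 59)
        and _field_matches(hour, when.hour, 0, 23)
        and _field_matches(month, when.month, 1, 12)
        and day_ok
    )


if __name__ == "__main__":
    # "Every 15 minutes on weekdays"; 1 January 2024 was a Monday.
    print(cron_matches("*/15 * * * 1-5", datetime(2024, 1, 1, 9, 30)))  # True
    print(cron_matches("*/15 * * * 1-5", datetime(2024, 1, 6, 9, 30)))  # False
```

A scheduler built on this idea would check the expression once per minute and start the scraping job whenever it matches.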

The new features are already available to Oxylabs’ customers. However, both Crawler and Scheduler are still in beta, so they may not always work as expected.