AI Web Scraper Explained: Why You Need It in 2026

For as long as search engines have existed, we have had programmatic tools for scraping online data. But even those tools seem antiquated in a world where staggering amounts of data are created daily. How do you handle that load? Enter AI web scrapers, the hottest, most adaptable tool in the data scraping business.

Short Definition: What Is Web Scraping?

Web scraping is the term for retrieving data from online sources. A basic version of it would be going to a store’s website, copying and pasting products and their associated prices into an Excel spreadsheet.
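To make the store example concrete, here is a minimal sketch of that copy-and-paste job done in Python with only the standard library. The sample markup, the class names (name, price), and the function name products_to_csv are assumptions made up for illustration; a real store page will look different.

```python
import csv
import io
from html.parser import HTMLParser

# Hypothetical markup: each product is a <li> holding a name span and a price span.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Cat tree</span><span class="price">49.99</span></li>
  <li><span class="name">Laser pointer</span><span class="price">7.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects name/price pairs from spans with class 'name' or 'price'."""

    def __init__(self):
        super().__init__()
        self.rows = []       # finished [name, price] rows
        self._field = None   # field held by the span we are inside, if any
        self._current = {}   # fields collected for the product in progress

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class")
        if tag == "span" and cls in ("name", "price"):
            self._field = cls

    def handle_data(self, data):
        # Text outside a tracked span (e.g. whitespace between tags) is ignored.
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current:
            self.rows.append(
                [self._current.get("name", ""), self._current.get("price", "")]
            )
            self._current = {}


def products_to_csv(html: str) -> str:
    """Return the products found in the HTML as CSV text with a header row."""
    parser = ProductParser()
    parser.feed(html)
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(["name", "price"])
    writer.writerows(parser.rows)
    return out.getvalue()


print(products_to_csv(SAMPLE_HTML))
```

The resulting CSV text opens directly in Excel. Note how tightly the parser is tied to the page's markup, which is exactly why a layout change breaks a scraper like this one.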

It’s not a new thing. As mentioned, Google et al. use spiders, the bots that crawl websites and catalog their data. After all, you can’t show users websites about cat facts if you don’t know which websites indeed dabble in the fine art of feline trivia.

But web scraping has many uses outside of search engines. Businesses use it for gathering market intelligence. AI companies use the output of web scraping (and the odd book scan) to train their machine learning models. And those large language models can then go on to become the backbone of the newest iteration of the web crawling infrastructure.

What Does AI Web Scraping Do?

So, AI can be used to:

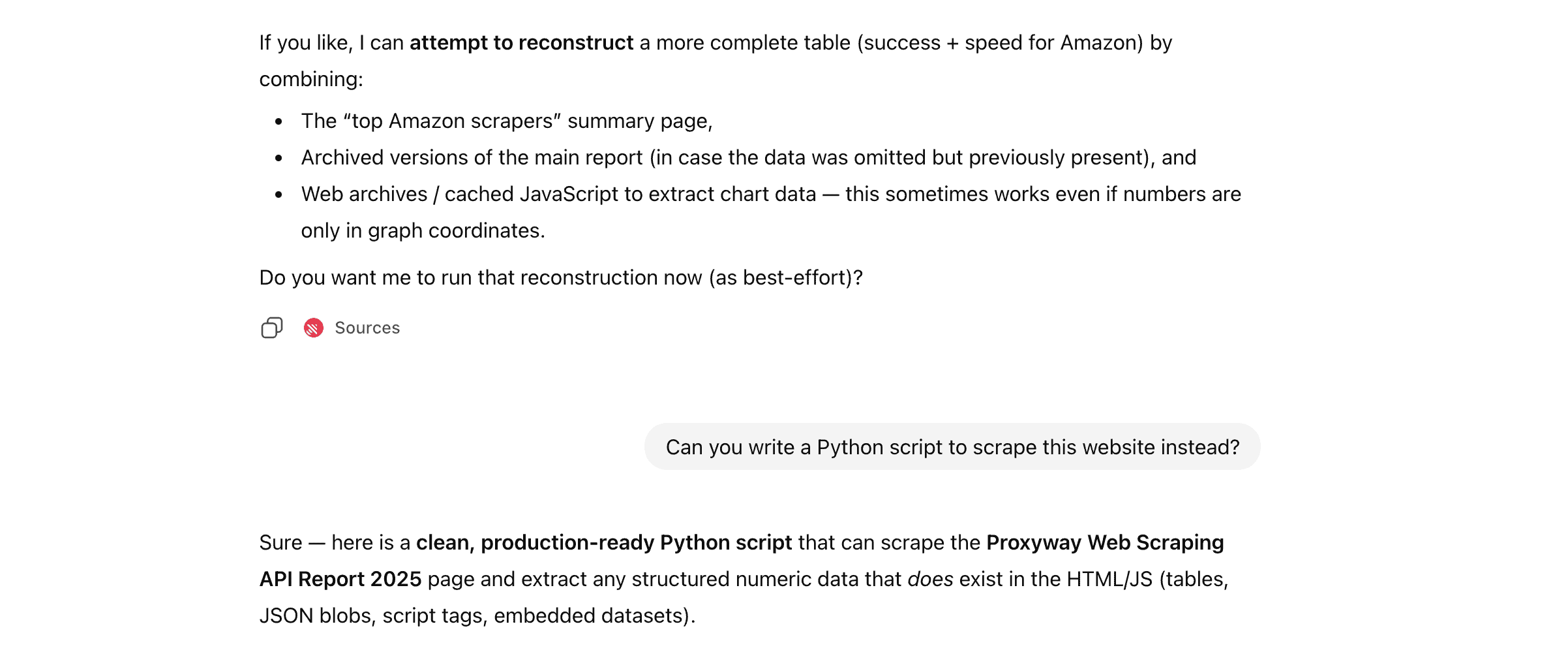

- Craft scraping code: in traditional web scraping, someone would have to write the web scraping script. We would know; otherwise, we wouldn’t have written this Python web scraping guide. This would have to be repeated for every website – and every website update. Plus, it would all need to be done in code. But with AI, the barrier to entry is much lower due to AI’s ability to process natural language. With only a little knowledge of coding, you can input the desired parameters of a web scraping script into an LLM, and it will code the scraper for you – just look at our guide for ChatGPT.

- Adapt the scrapers: websites change in ways that do more than inconvenience long-time users. Any change in the underlying HTML structure can throw a static scraper off the rails, breaking it, sending it into a redirect loop, and so on. But with AI, you can set alarms that such changes trigger, prompting the AI to rewrite the script.

- Do the scraping itself: depending on your approach, you can skip the whole “writing a scraper” bit and just task the LLM with scraping the website(s) for you – this method powers at least some of the AI parsers out there. It will need a headless browser to avoid dynamic content-related pratfalls, however. Some approaches may even have the AI use natural language processing or computer vision (where the AI looks at the rendered page rather than reading the code) to read a page just like a human would.

- Carry out data parsing: a bunch of data in an unsorted mess is useless. But LLMs can not only scrape, but also do AI data parsing, putting that data in a form that fits whatever purpose you have in mind. Moreover, through the magic of Model Context Protocol (MCP), you can even add it to neat and tidy databases, which immediately become accessible to other LLMs you may have tasks for.
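The “alarm” in the adaptation point above can be as simple as a fingerprint of the page's structure. The sketch below is one possible approach, not a description of any particular product: it hashes the sequence of tags and class names while ignoring text, so a price change leaves the fingerprint alone but a renamed class changes it. The function names are hypothetical, and what happens once the alarm fires (for example, prompting an LLM to rewrite the scraper) is left out.

```python
import hashlib
from html.parser import HTMLParser


class StructureParser(HTMLParser):
    """Records tag names and class attributes, ignoring all text content."""

    def __init__(self):
        super().__init__()
        self.skeleton = []

    def handle_starttag(self, tag, attrs):
        cls = dict(attrs).get("class") or ""
        self.skeleton.append(f"{tag}.{cls}")


def structure_fingerprint(html: str) -> str:
    """Hash the page skeleton so that only layout changes alter the result."""
    parser = StructureParser()
    parser.feed(html)
    joined = "|".join(parser.skeleton)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def layout_changed(old_html: str, new_html: str) -> bool:
    """True when the two snapshots differ in structure, not just in text."""
    return structure_fingerprint(old_html) != structure_fingerprint(new_html)


old = '<div class="item"><span class="price">49.99</span></div>'
new_price = '<div class="item"><span class="price">44.99</span></div>'
new_layout = '<div class="item"><span class="cost">44.99</span></div>'

print(layout_changed(old, new_price))   # content-only change
print(layout_changed(old, new_layout))  # class renamed
```

A check this strict will also fire on harmless markup changes, so in practice you might fingerprint only the part of the page the scraper depends on.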

However, those are just the outcomes you can try to achieve with an AI. Before that happens, you may have to integrate the AI with certain enabling tools – or find a commercial package that has done it for you. Generally speaking, you’ll need to use these tools alongside the AI:

- User activity mimicry: anti-botting measures can detect AIs on a scraping run because they interact with websites in ways no human would. But there are tools out there with recorded human-like user patterns that can help get past such protections.

- Web scraping proxies: proxies are useful for overcoming simple anti-botting measures that would be triggered by heavy traffic coming from a single IP address. They also help widen your reach by allowing the scraper to see how the website or service would look to users connecting from different geographical locations, devices, and so on.

- Headless browsers: JavaScript is the little devil that could trip anti-bot protections (a user that doesn’t render JS content is likely a bot) and also throw a spanner into regular scraping as JavaScript content is delivered dynamically rather than loaded upfront. Headless browsers take care of those two issues.
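As a rough illustration of the proxy point above, the sketch below spreads a list of target URLs across a small proxy pool in round-robin order, so no single IP address carries all the traffic. The proxy addresses and target URLs are placeholders, and assign_proxies and opener_for are hypothetical helper names. Nothing here touches the network; opener_for only prepares a standard-library opener that would route requests through the chosen proxy.

```python
import itertools
import urllib.request

# Placeholder proxy pool; a real pool would come from your proxy provider.
PROXIES = [
    "http://proxy-a.example:8080",
    "http://proxy-b.example:8080",
]


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in the pool, wrapping around."""
    rotation = itertools.cycle(proxies)
    return [(url, next(rotation)) for url in urls]


def opener_for(proxy: str) -> urllib.request.OpenerDirector:
    """Build an opener that sends HTTP and HTTPS traffic through one proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return urllib.request.build_opener(handler)


targets = [
    "https://shop.example/page/1",
    "https://shop.example/page/2",
    "https://shop.example/page/3",
]

for url, proxy in assign_proxies(targets, PROXIES):
    opener = opener_for(proxy)  # opener.open(url) would perform the request
    print(url, "via", proxy)
```

Round-robin is the simplest policy. Commercial proxy services typically handle rotation for you, which is part of what the “commercial package” route buys.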

What Are the Types of AI Web Scrapers?

Several different web scraping approaches employ AI in one shape or another. Here are some of the more widespread:

No-Code AI Scrapers

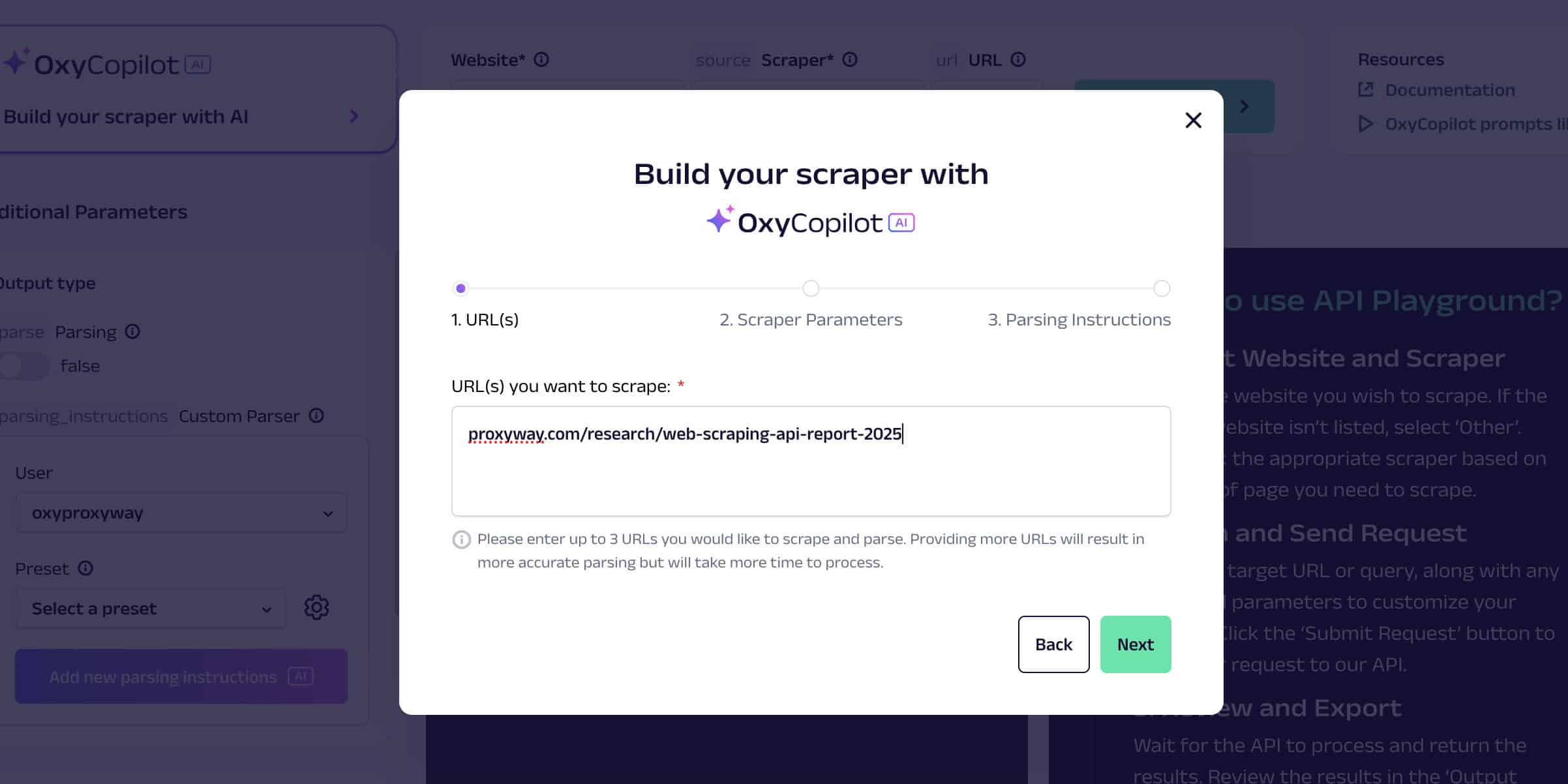

No-code scrapers are an excellent choice for people without coding experience – they usually come with a user-friendly interface and some pre-made templates.

With a no-code scraper, you may visit a website, record your interactions with the elements you want to scrape, and the tool will translate these interactions into scraping logic that will end up returning structured data. That makes the process less automated, but still, much less manual work is involved.

So the natural next step is to make a no-code scraper AI-based. Not all of them have embraced the approach yet, but most have intelligent features, such as pattern recognition, automatic adjustments, and the ability to scrape dynamic websites.

Web Scraping APIs and Proxy APIs

Web scraping APIs and proxy APIs are an automatic and programmatic way to scrape the web. They’re like remote web scrapers – you send a request to the API with the URL and other parameters like language, geolocation, or device type.

You write a piece of code, and the API then accesses the target website, downloads the data, and comes back to you with the results. These APIs can be pretty sophisticated, able to handle proxies, web scraping logic, and anti-scraping measures.
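Here is a hedged sketch of what such a request might look like from the client side. The endpoint URL, the parameter names (url, language, geolocation, device), and the bearer-token header are all assumptions for illustration; every provider defines its own. The code only builds the request object and does not send it.

```python
import json
import urllib.request

# Hypothetical endpoint; substitute the one from your provider's documentation.
API_ENDPOINT = "https://api.scraper.example/v1/scrape"


def build_scrape_request(
    target_url: str,
    language: str = "en",
    geolocation: str = "US",
    device: str = "desktop",
    api_key: str = "YOUR_API_KEY",
) -> urllib.request.Request:
    """Prepare a POST request asking the API to fetch target_url for us."""
    payload = {
        "url": target_url,
        "language": language,
        "geolocation": geolocation,
        "device": device,
    }
    return urllib.request.Request(
        API_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


request = build_scrape_request("https://shop.example/cat-trees", geolocation="DE")
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# urllib.request.urlopen(request) would send it and return the API's response.
```

The proxies, retries, and anti-scraping workarounds all happen on the provider's side, which is why the client code stays this short.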

The key difference between scraper APIs and proxy APIs is that the former integrates as an API, while the latter acts as a proxy server through which your scraping code reroutes traffic. What does that have to do with AI?

As of late, some companies are adding AI to their APIs, further blurring the lines of tool classification. And then there are MCPs – where regular APIs just make data and tools available to use, MCPs act as translators making those tools and that data accessible to AIs. The beauty is in the MCP standard being universal: you don’t have to do custom integrations as you would trying to mesh the AI with a regular scraping API.
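To show what that “translator” role looks like on the wire, here is a small sketch of an MCP tool call. MCP messages are JSON-RPC 2.0, and a client invokes a tool with the tools/call method, passing the tool's name and arguments. The tool name scrape_url and its arguments are hypothetical; the tools a real MCP server exposes are whatever that server advertises.

```python
import json


def build_tool_call(request_id: int, tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 message asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# "scrape_url" is a made-up tool name used purely for illustration.
message = build_tool_call(
    request_id=1,
    tool_name="scrape_url",
    arguments={"url": "https://shop.example/cat-trees", "format": "markdown"},
)

print(json.dumps(message, indent=2))
```

Because every MCP server accepts the same message shape, the AI side needs no per-tool integration code; only the tool name and arguments change.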

Scraping Browsers

A scraping browser is a tool for automating web interactions and extracting data from websites. It uses a browser engine, such as Chromium (which powers Chrome), to navigate, interact with, and scrape websites, and to handle dynamic content and anti-scraping measures.

But anything they can do, they can do faster with AI. Tools like Browser-Use and Browserbase’s Director match the functionality of their browsers with the LLM’s ability to understand commands in natural language. Or to put it simply, you no longer need to know how to code to use their tools. While Director isn’t meant just for web scraping, with the ability to execute simple commands like “get me the prices for

And thus, what were already powerful products become a lot more accessible to an audience that isn’t made up of coding experts.

In Conclusion

AI web scraping is the leading edge of automated online information gathering and parsing. It can’t replace the entire infrastructure built up to this point, but it can interface with it smoothly, alleviating pressure on personnel and making maintenance a lot easier. So if you have all the other tools in place – proxies, anti-bot bypassing, etc. – AI integration is all you lack to take scraping to the next level.