ParseHub Review

Our review of a popular web scraping tool.

Free Version vs. Paid Plans

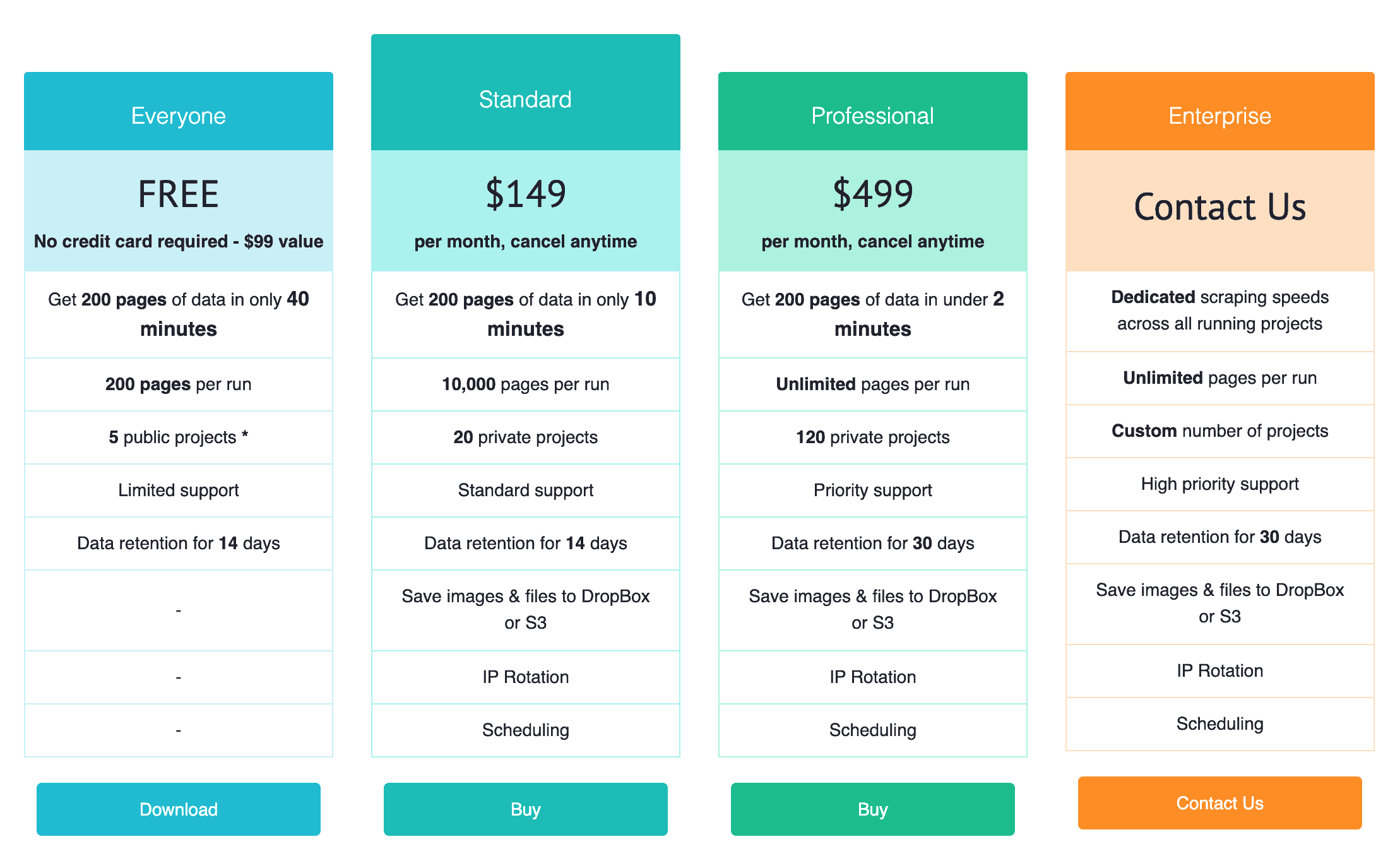

Before we get into the nitty-gritty, let’s look at the pricing. ParseHub is best known as a free web scraper, which is only half true. There is a free version with limited features, but there are also three paid plans: Standard ($149 per month), Professional ($499 per month) and Enterprise (custom pricing, available on request only).

Is the free version enough, then? If you’re getting ParseHub for personal use, I’d say definitely yes. ParseHub’s website claims that the free version is worth $99, and I’m inclined to believe that. It lets you scrape 200 pages of data per run in about 40 minutes. Those 40 minutes are nothing, considering that you’ll be able to launch your projects without spending any time writing scraping scripts yourself.

Of course, it’s a bit of a shame that you’re limited to 5 projects, all of them public, and that you need to get proxies separately. Starting with the first paid plan, projects become private and IP rotation is included.

The paid plans also sport other useful features, such as much faster scraping (e.g. the Standard plan can get 200 pages in 10 minutes, while the Professional plan handles the same number of pages in under 2 minutes).
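To put those figures side by side, here is a quick back-of-the-envelope conversion into pages per minute. It uses only the timings quoted above and treats the Professional plan’s “under 2 minutes” as exactly 2, so that rate is a lower bound; real speeds will depend on the site you’re scraping.

```python
# Timings quoted in this review: minutes needed to scrape 200 pages on each plan.
# The Professional figure is "under 2 minutes", so its rate below is a lower bound.
MINUTES_PER_200_PAGES = {"Free": 40, "Standard": 10, "Professional": 2}


def pages_per_minute(pages: int, minutes: float) -> float:
    """Average scraping rate for a run of `pages` pages that takes `minutes` minutes."""
    return pages / minutes


free_rate = pages_per_minute(200, MINUTES_PER_200_PAGES["Free"])
for plan, minutes in MINUTES_PER_200_PAGES.items():
    rate = pages_per_minute(200, minutes)
    # Speed-up relative to the free plan.
    print(f"{plan}: {rate:.0f} pages/min ({rate / free_rate:.0f}x free)")
```

By these numbers, the Standard plan is about four times faster than the free tier (20 vs. 5 pages per minute), and the Professional plan is at least twenty times faster (100+ pages per minute).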

When deciding whether to go for a paid plan or stick to the free version, I’d always advise you to consider the scale of your project. If you’re a one-man operation doing some web scraping on the side, the free version should do just fine. However, if you’re considering ParseHub for multiple recurring, large-scale tasks, investing in a paid plan could be the way to go.

Whichever plan you choose, the software itself is free to download. All you need to do is download ParseHub, install it, create an account, and you’re good to go!

Interface

While reading customer reviews of ParseHub online, I noticed that one pro a lot of people mention is how easy the interface is to use. After trying ParseHub myself, I have to agree.

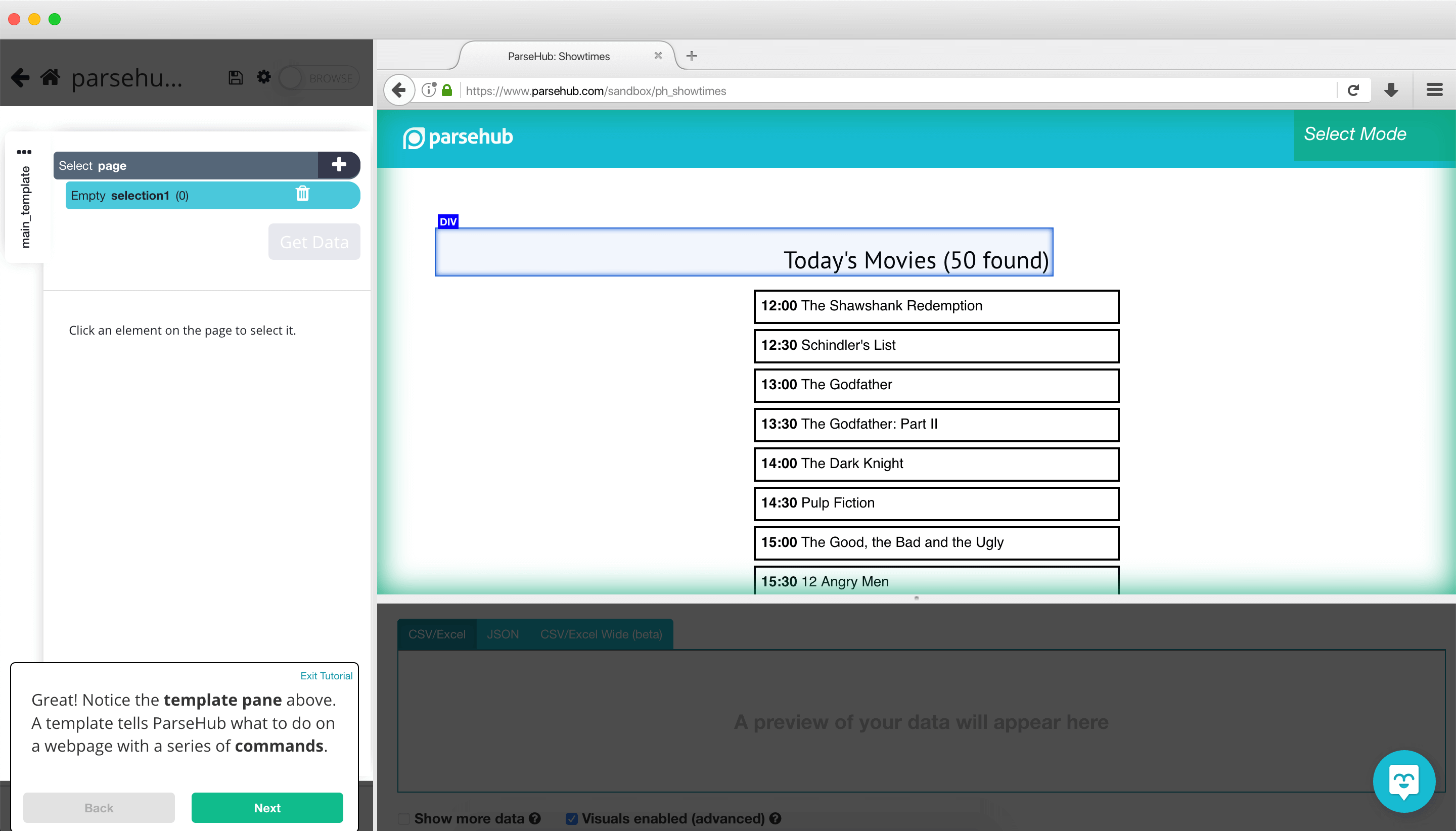

I believe this software benefits greatly from making its interface easy to understand, not only for first-time ParseHub users but also for people who have never scraped the web before. You have your commands on the left and the full website view in the window on the right.

Including a browser-like, real-time view of the target website is definitely a big plus, since you don’t have to juggle several open windows. The website view itself is also clickable and interacts directly with the command panel on the left.

For example, to select what you want to scrape, all you have to do is click on, let’s say, a flight price. ParseHub will then highlight your selection and ask you to click on the next price to confirm that you really want prices to be scraped. From there, ParseHub can collect all flight prices from your selected page.

More advanced commands, such as relative select and the command to scrape all pages, can be done on screen as well (though it’s hard to call them complicated). Relative select tells ParseHub to collect data that is related to your main selection. In the case of flight prices, the related data could be destinations or airlines.

At the bottom of the screen there’s a preview of the selected data. It can be viewed in CSV/Excel or JSON format before you download the full results.

If you get stuck at any point in your project, ParseHub offers built-in walk-through tutorials. At the bottom left you’ll find links to ParseHub’s API documentation and an extensive knowledge base, as well as a contact page (both open ParseHub’s website). And the smiley face at the bottom right? That’s the customer support chat.

Given that there’s help at every step of the process, it’s fair to say the story checks out: ParseHub’s interface is really easy to use, even for a first-timer.

Features

ParseHub lets you gather data from all kinds of nooks and crannies: drop-down menus, images, and multiple pages. It can also click into a product’s page, collect the information needed there, and then return to the original list. The scraper is smart enough not to rely solely on where the data physically sits on the website; it can find matching data anywhere on the page (that’s why ParseHub always asks you to select the information you need twice at the start of each selection).

Another useful feature is choosing whether or not URLs are extracted with each selection. For example, if you were collecting movie titles, you might want the URL of each movie, but not the links for the number of reviews or the director.

As for the results, they can be downloaded in CSV/Excel or JSON format, or accessed through ParseHub’s API.
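If you go the API route, pulling a project’s latest results into your own script might look roughly like the sketch below. It assumes ParseHub’s v2 REST endpoint `GET /api/v2/projects/{PROJECT_TOKEN}/last_ready_run/data` with `api_key` and `format` query parameters; `PROJECT_TOKEN` and `API_KEY` are placeholders, and it’s worth checking ParseHub’s current API documentation before relying on any of this.

```python
import gzip
import urllib.parse
import urllib.request

# Assumed base URL of ParseHub's v2 REST API; verify against the official docs.
BASE_URL = "https://www.parsehub.com/api/v2"


def last_run_data_url(project_token: str, api_key: str, fmt: str = "json") -> str:
    """Build the URL for the data of a project's most recent completed run."""
    path = f"/projects/{urllib.parse.quote(project_token, safe='')}/last_ready_run/data"
    query = urllib.parse.urlencode({"api_key": api_key, "format": fmt})
    return f"{BASE_URL}{path}?{query}"


def fetch_last_run(project_token: str, api_key: str, fmt: str = "json") -> str:
    """Download the results as text (JSON or CSV, depending on `fmt`)."""
    url = last_run_data_url(project_token, api_key, fmt)
    with urllib.request.urlopen(url) as resp:
        body = resp.read()
    # The payload may arrive gzip-compressed; decompress if the gzip magic bytes are present.
    if body[:2] == b"\x1f\x8b":
        body = gzip.decompress(body)
    return body.decode("utf-8")


if __name__ == "__main__":
    # Placeholders: substitute your own project token and API key before calling fetch_last_run.
    print(last_run_data_url("PROJECT_TOKEN", "API_KEY", fmt="csv"))
```

Building the URL separately from the download keeps the request easy to inspect and test without a network connection or a real API key.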

Customer Service

ParseHub seems to have this one down as well. As I mentioned before, there’s plenty of self-help in the software itself: API documentation, tutorials, and chat. If you go to ParseHub’s website, you’ll find a few more customer support options: the classic contact form, as well as the option to schedule a 30-minute demo call. I’m guessing the latter is aimed at those considering ParseHub for bigger projects that require large-scale (aka paid) solutions.

One con of the customer service is that it keeps regular 9-to-5 hours, so if you need urgent help outside those hours, you’ll have to figure it out yourself.

What about Proxies?

If you know anything about web scraping, you’ll agree when I say this: the proxies you choose are just as important as the web scraper itself. Even if you run the best web scraper in the history of web scrapers, unreliable and easily blocked proxies will mess up your project in no time.

As I mentioned before, ParseHub’s free plan doesn’t include an IP rotator, but the paid plans do. While this means spending a bit of extra money if you stay on the free plan, choosing your own proxies is a good thing, as it gives you the chance to maximize the potential of your project.

In general, when choosing proxies for a scraping project, you’ll want them to be rotating and residential. We’ve compiled a list of the best web scraping proxy providers to make your choice easier.
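“Rotating” simply means that successive requests go out through different IP addresses. Here’s a minimal round-robin sketch of the idea, independent of ParseHub (which handles rotation for you on paid plans). The proxy addresses and the `assign_proxies` helper are hypothetical placeholders; many residential providers rotate IPs on their side behind a single gateway address instead.

```python
from itertools import cycle

# Hypothetical proxy endpoints; a real provider would supply these.
PROXIES = [
    "http://proxy1.example.com:8000",
    "http://proxy2.example.com:8000",
    "http://proxy3.example.com:8000",
]


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in round-robin order (hypothetical helper)."""
    pool = cycle(proxies)
    return [(url, next(pool)) for url in urls]


if __name__ == "__main__":
    pages = [f"https://example.com/flights?page={n}" for n in range(1, 6)]
    for url, proxy in assign_proxies(pages, PROXIES):
        # A real scraper would route the request through `proxy`, e.g. with
        # urllib.request.build_opener(urllib.request.ProxyHandler({"https": proxy})).
        print(f"{url} -> {proxy}")
```

Round-robin is the simplest rotation policy: no single IP sends consecutive requests, which makes it harder for a site to spot and block the traffic.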

Conclusion

Overall, ParseHub is a reliable web scraper that’s perfect for beginners and efficiency-minded users alike. No need to know how to code, an easy-to-use interface, and a great knowledge base: what’s not to like? Of course, if we’re looking at the free version only, there are a few downsides: no private projects, slower data extraction, and no Dropbox integration. But given the efficiency that ParseHub offers, I strongly believe the pros outweigh the cons.

Frequently Asked Questions About ParseHub

What is ParseHub?

ParseHub is a visual web scraper. You can use it to collect information from websites without any prior programming experience.

Is ParseHub free?

ParseHub does have a free tier, but it’s pretty limited. For any larger scraping projects, you’ll have to pay for it.

What operating systems does ParseHub run on?

ParseHub runs on Windows, macOS, and Linux-based distributions.