What Is an MCP Server? Explaining The Important AI Enabler

MCP servers are a crucial tool for AI development and the future of the agentic internet. They’re an important enabler for providing AIs with tools that allow them to not just talk, but act. This is how large language models can easily access databases, interact with text-to-speech services and 3D modeling applications, and yes, scrape websites. But what exactly is an MCP server?

What Is MCP?

MCP (Model Context Protocol) is an open standard for connecting AI applications to the external tools and data sources they can use, and the MCP server is one of its major components. The protocol was launched by Anthropic, the company behind Claude, on November 25, 2024.

LLMs can talk all day long based on their training data, but that’s it. By default, the AI doesn’t have access to real-time data and can’t manipulate anything. You can give it such capabilities by connecting it to APIs, but that is time-consuming and labor-intensive: every time you want to add a capability like looking up the time or interfacing with Slack, you have to write custom integration code for that specific combination of AI application and service.

The MCP framework introduces a standard way of building a translator that sits between the LLM (or, to be precise, the AI application) and the tools you want it to use. Whatever weird “language” a tool speaks, its MCP server translates it into something any MCP-compatible AI application, whether Claude, ChatGPT, or another, can understand.

The MCP system contains these major components:

- The MCP host: that’s the AI application you’re working with.

- The MCP client: that’s the component inside the host that maintains a dedicated connection to one MCP server. A host that uses several servers runs one client per server.

- The MCP server: that’s the program that translates between what the AI wants and what the service in question provides.

What Is an MCP Server?

An MCP server is the translator that allows models to interact with systems and data. Without MCP, each combination of AI application and service needs its own custom integration. An MCP server, by contrast, only has to be written once per service, and any MCP-compatible application can then use it.

So, for example, the Oxylabs MCP server provides web scraping functionality to whatever MCP-compatible AI application you use.
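To put rough numbers on that difference, here is a simplified count. It assumes that every one of $M$ AI applications needs to talk to every one of $N$ services:

$$
\text{custom integrations} = M \times N, \qquad \text{MCP implementations} = M + N
$$

Under MCP, each application implements the client side once and each service ships one server. With, say, $M = 3$ applications and $N = 10$ services, that works out to $3 \times 10 = 30$ bespoke integrations versus $3 + 10 = 13$ MCP implementations.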

MCP servers can expose three types of primitives for AI to use:

- Resources: context in its most raw form, such as documents, files, and databases. Resources let the AI look up data in, say, an Apache Doris database, so it can access more than just the data it was trained on.

- Tools: where resources enable passive consumption, tools let the AI take actions. Tools are how AI adds new entries, deletes data, and otherwise manages databases, or creates memes on ImgFlip. This takes AI beyond a sophisticated chatbot and turns it into an agent.

- Prompts: probably the most AI-specific type of MCP server content, prompts are reusable instruction templates that let the AI execute a task in a preset, standardised manner. If you ask the model to “plan a holiday”, a prompt template may have the AI ask about your desired location, duration, budget, and interests.

As a concrete example, consider an MCP server that provides context about a database. It can expose tools for querying the database, a resource that contains the schema of the database, and a prompt that includes few-shot examples for interacting with the tools.

(Source: modelcontextprotocol.io)
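To make the three primitives concrete, here is a minimal sketch of that database example in Python. It leaves out the protocol and uses an in-memory SQLite database. The function names, the `schema://main` URI, and the `PRIMITIVES` registry are hypothetical illustrations and not part of any MCP SDK. A real server would publish these through the protocol’s `resources/read`, `tools/call`, and `prompts/get` methods.

```python
import sqlite3

# Hypothetical in-memory database standing in for the service behind the server.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
db.executemany("INSERT INTO users (name) VALUES (?)", [("Ada",), ("Linus",)])


def read_schema_resource() -> str:
    """Resource: passive context, here the database schema as text."""
    rows = db.execute("SELECT sql FROM sqlite_master WHERE type = 'table'").fetchall()
    return "\n".join(sql for (sql,) in rows)


def run_query_tool(sql: str) -> list:
    """Tool: an action the model can invoke, restricted to SELECT in this sketch."""
    if not sql.lstrip().upper().startswith("SELECT"):
        raise ValueError("this sketch only allows SELECT statements")
    return db.execute(sql).fetchall()


def query_examples_prompt(question: str) -> str:
    """Prompt: a reusable template with few-shot examples for the tool."""
    return (
        "You can call run_query with a SQL SELECT statement.\n"
        "Example: 'How many users?' -> SELECT COUNT(*) FROM users\n"
        "Example: 'List user names' -> SELECT name FROM users\n"
        f"Question: {question}"
    )


# The server advertises each primitive by name so a client can discover it.
PRIMITIVES = {
    "resources": {"schema://main": read_schema_resource},
    "tools": {"run_query": run_query_tool},
    "prompts": {"query_examples": query_examples_prompt},
}

print(PRIMITIVES["resources"]["schema://main"]())
print(PRIMITIVES["tools"]["run_query"]("SELECT COUNT(*) FROM users"))
```

Note the division of labour. The resource is read-only context, and the tool performs an action (limited here to `SELECT` statements as a simple safeguard). The prompt only shapes what the model is asked.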

The protocol is built around JSON-RPC 2.0 messages. The RPC part stands for “remote procedure call,” a concept that maps closely to how MCP clients call MCP servers. Those servers may run on the same device (typically communicating over standard input/output) or somewhere else online (over HTTP).
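As a rough illustration of the wire format, the sketch below uses only Python’s standard library to build a JSON-RPC 2.0 request for the MCP `tools/call` method, along with a matching response. The tool name `run_query` and its arguments are hypothetical, and the transport (stdio or HTTP) is left out. The `content`/`isError` result shape is modelled on the tool results described in the MCP specification.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC requests carry an id so responses can be matched to them


def make_request(method: str, params: dict) -> str:
    """Build a JSON-RPC 2.0 request, as a client would send it."""
    return json.dumps({"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params})


def make_result(request_json: str, result: dict) -> str:
    """Build the matching JSON-RPC 2.0 response, echoing the request's id."""
    request = json.loads(request_json)
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


# Client -> server: call a (hypothetical) tool with named arguments.
req = make_request(
    "tools/call",
    {"name": "run_query", "arguments": {"sql": "SELECT COUNT(*) FROM users"}},
)
# Server -> client: the tool's output, wrapped as text content.
resp = make_result(req, {"content": [{"type": "text", "text": "2"}], "isError": False})

print(req)
print(resp)
```

Because the response echoes the request’s `id`, a client can have several calls in flight over one connection and still pair each answer with its question.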

But that’s not all: MCP servers can also ask clients for things. In more technical parlance, clients can expose primitives of their own:

- Sampling: lets servers request language model completions from the client’s AI application, so they can use a language model without bundling their own language model SDK.

- Elicitation: lets servers ask the user for more information or for confirmation of an action.

- Logging: lets servers send log messages to the client for debugging and monitoring purposes.
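The sketch below shows what these server-to-client messages might look like as JSON-RPC 2.0 payloads. It assumes the method names from the 2025-06-18 revision of the MCP specification: `sampling/createMessage`, `elicitation/create`, and `notifications/message` for logging. The message contents are made up. A server should only send sampling or elicitation requests to clients that declared those capabilities when the connection was initialized.

```python
import json


def request(msg_id: int, method: str, params: dict) -> dict:
    """A JSON-RPC request expects a response, so it carries an id."""
    return {"jsonrpc": "2.0", "id": msg_id, "method": method, "params": params}


def notification(method: str, params: dict) -> dict:
    """A JSON-RPC notification is fire-and-forget, so it has no id."""
    return {"jsonrpc": "2.0", "method": method, "params": params}


# Sampling: ask the client's AI application for a model completion.
sampling = request(1, "sampling/createMessage", {
    "messages": [
        {"role": "user", "content": {"type": "text", "text": "Summarise this page in one sentence."}}
    ],
    "maxTokens": 100,
})

# Elicitation: ask the user, via the client, to confirm an action.
elicitation = request(2, "elicitation/create", {
    "message": "Delete 42 rows from the users table?",
    "requestedSchema": {
        "type": "object",
        "properties": {"confirm": {"type": "boolean"}},
        "required": ["confirm"],
    },
})

# Logging: send a log message to the client for debugging and monitoring.
log = notification("notifications/message", {"level": "info", "logger": "scraper", "data": "Fetched 3 pages"})

for message in (sampling, elicitation, log):
    print(json.dumps(message))
```

Sampling and elicitation are requests, so they carry an `id` and wait for a reply. The log message is a notification, so it has none.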

What's the Difference Between MCP Servers and APIs?

The key difference between MCP servers and APIs is that MCP servers are made to serve AI/LLMs. Sure, both of them allow software to interact with external services, but that’s where the similarities end:

- We already mentioned standardization. A classical API will output data in whatever format its developers felt was best. But since MCP is a standard, no matter what the service returns, the MCP server’s output will be something any MCP-compatible AI application can easily use.

- APIs are generally created by developers to let third-party software interact with their apps and services. For example, the Reddit API made various third-party Reddit clients possible without being built specifically for any one of them, and that same general-purpose API allows AIs to be trained on Reddit data, too. In contrast, an MCP server exists specifically to provide standardised data, tools, and prompts to AIs.

- APIs don’t tailor their inputs and outputs for models to easily understand and use. An MCP server handles exactly that hard task: calling the API, reading the response, and turning it into usable context. The AI itself doesn’t have to be programmed to “understand” any of the processes happening under the hood.

- APIs usually leave it to each integrator to handle security. MCP, however, builds security into the protocol itself, such as the OAuth-based authorization specified for its HTTP transport.
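Here is a minimal sketch of that translation step. A hypothetical weather API returns JSON in its own ad hoc shape, and a helper converts it into the text `content` list plus `isError` flag that MCP tool results use. The API payload and the `to_tool_result` helper are illustrative assumptions, not a real service or SDK function.

```python
import json

# Hypothetical raw response from a third-party weather API;
# every API picks its own shape and cryptic field names.
raw_api_response = '{"loc": "London", "t_c": 21.5, "cond": "cloudy"}'


def to_tool_result(raw: str) -> dict:
    """Translate a service-specific payload into an MCP-style tool result."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        # A malformed payload is reported inside the result so the model can see it.
        return {"content": [{"type": "text", "text": f"Invalid response: {exc}"}], "isError": True}
    # Rename the cryptic fields into a sentence the model can use directly.
    text = f"Weather in {data['loc']}: {data['t_c']} °C, {data['cond']}."
    return {"content": [{"type": "text", "text": text}], "isError": False}


print(json.dumps(to_tool_result(raw_api_response), ensure_ascii=False))
```

The model never sees `t_c` or `cond`. It only receives the readable text, which is the “turning it into usable context” part described above.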

What’s the Use of MCP Servers in Web Scraping?

Web scraping has already adopted related technologies: web scraping APIs and AI scraping. Web scraping APIs are services that access a website and carry out the scraping for you, doing the heavy lifting on the user’s behalf. AI web scraping goes further, using machine learning to adapt to complex website designs, anti-scraping technology, and similar obstacles.

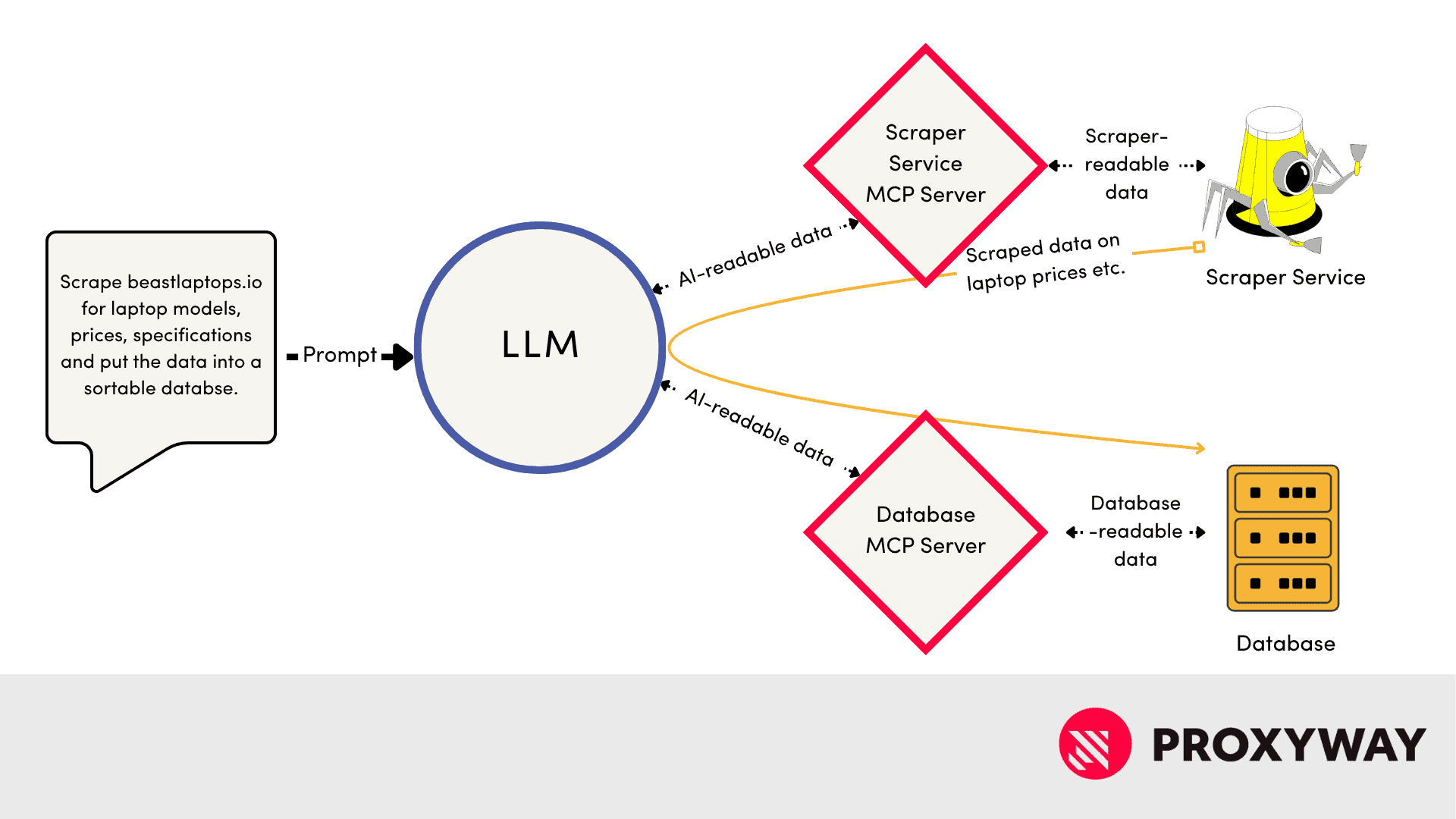

What MCP does is allow your AI/LLM to make use of those ready-made services, so you don’t even need to interact with them yourself. You tell the AI what needs to be done, it uses its MCP clients to reach out to the MCP servers, and they provide the tools (in the general sense, not the MCP-server-primitive sense) to do so.

At the same time, an AI application can run MCP clients for multiple services. It can access a web scraper MCP server, get the web scraping data you want, and then feed it into a database MCP server for storage, processing and retrieval. Et voilà.
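The flow above can be sketched from the host’s side. In this simplified Python example, two plain functions stand in for a scraper MCP server and a database MCP server, and the host routes the scraper’s output to the database. The tool names (`scrape`, `insert`) and the data are hypothetical. A real host would send `tools/call` requests through one MCP client per server and let the model decide which tools to call.

```python
from typing import Callable


# Hypothetical stand-in for a scraper MCP server's tool handler.
def scraper_server(tool: str, arguments: dict) -> dict:
    if tool == "scrape":
        return {"url": arguments["url"], "title": "Example Domain"}
    raise ValueError(f"unknown tool {tool}")


# Hypothetical stand-in for a database MCP server backed by a list.
storage: list = []


def database_server(tool: str, arguments: dict) -> dict:
    if tool == "insert":
        storage.append(arguments["row"])
        return {"inserted": len(storage)}
    raise ValueError(f"unknown tool {tool}")


# The host keeps one "client" entry per server it talks to.
clients: dict = {
    "scraper": scraper_server,
    "database": database_server,
}
call: Callable[[str, str, dict], dict] = lambda server, tool, args: clients[server](tool, args)

# A plan the model might produce: scrape a page, then store the result.
page = call("scraper", "scrape", {"url": "https://example.com"})
receipt = call("database", "insert", {"row": page})
print(receipt)  # {'inserted': 1}
```

Keeping each server behind its own entry in `clients` mirrors MCP’s one-client-per-server model. Adding a third service would mean adding one entry, not rewriting the other two.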

In Conclusion

MCP servers are a key part of the new MCP architecture powering AI agents. Without them, we’d be reduced to a bunch of patchwork solutions that have to be custom-fitted for every new circumstance. But now, MCP servers are what make AI and other services sing in harmony, or scrape the web efficiently.