The Best Job Posting Data Providers in 2026

Job posting data is a goldmine for everyone involved in the hiring process, from job seekers and employers to data analysts.

While you can always manually search job boards or company career pages, that’s a burdensome process. Instead, APIs and ready-made datasets allow you to access job postings and key insights in a structured, easy-to-use format. So, you can forget about building a custom scraper or skimming through endless pages of listings.

Let’s find the best job posting data providers for you.

The Top Job Posting Data Providers of 2026:

1. Coresignal – the largest job posting data provider.


2. Bright Data – provider with a robust job posting data infrastructure.

3. Oxylabs – job posting data from major online job advertisers.

4. Apify – the largest pre-made job data template provider.

5. ScraperAPI – job posting data from Google Jobs.

6. Zyte – job posting data API for simple tasks.

What Is Job Posting Data?

Job posting data is information gathered from online job advertisers. Job boards like Indeed, LinkedIn, and Glassdoor are the most common sources of job postings. But that’s not all – many companies list available vacancies on their own career pages. Additionally, there are government databases with public labor market information.

Typically, job posting data includes:

- Job titles: specific roles like software engineer or marketing specialist.

- Job descriptions: responsibilities and tasks associated with the position.

- Company details: employer’s overview including its name, industry, and size.

- Locations: where the job is based, for example, New York, London, or remote.

- Salary ranges: compensation, either as a fixed amount or a range.

- Employment type: details such as full-time, part-time, freelance, or contract.

Job posting data can include other data points as well, such as required skills, posting date, and more.
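To make the fields above concrete, here is a minimal sketch of one job posting record as a Python dataclass. The field names are hypothetical and chosen for illustration; every provider uses its own schema, so map them to the data dictionary of whichever source you pick.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class JobPosting:
    """One job posting record (hypothetical field names, not a provider schema)."""
    title: str                            # e.g. "Software Engineer"
    description: str                      # responsibilities and tasks
    company_name: str                     # employer overview starts with the name
    company_industry: Optional[str] = None
    company_size: Optional[str] = None    # often a band such as "51-200"
    location: str = "Remote"              # city, country, or "Remote"
    salary_min: Optional[float] = None    # a fixed salary sets min == max
    salary_max: Optional[float] = None
    employment_type: str = "full-time"    # full-time, part-time, freelance, contract
    skills: list[str] = field(default_factory=list)
    posted_date: Optional[str] = None     # ISO 8601 date string, e.g. "2026-01-15"

    def has_salary(self) -> bool:
        """True if the posting discloses any compensation figure."""
        return self.salary_min is not None or self.salary_max is not None


posting = JobPosting(
    title="Marketing Specialist",
    description="Plan and run campaigns.",
    company_name="Example Corp",
    location="London",
    salary_min=45000,
    salary_max=55000,
)
print(posting.has_salary())  # True
```

Keeping optional fields such as salary as `None` rather than zero makes it easy to tell "not disclosed" apart from a real value, which matters because many postings omit compensation.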

How to Get Job Posting Data

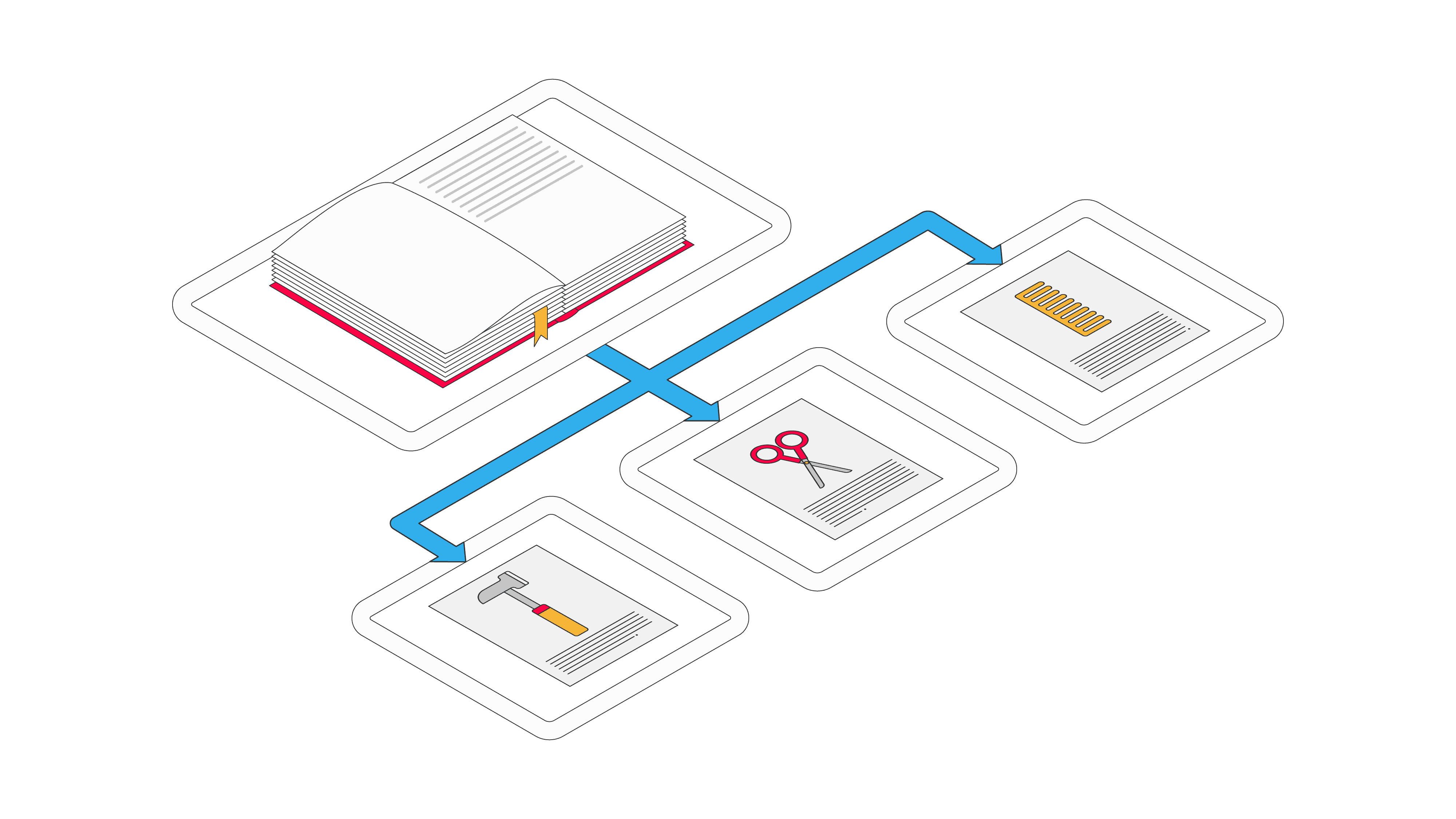

There are three main ways to go about getting job posting data: web scraping, APIs, and datasets.

- Web scraping requires the most effort on your side. To get job posting data, you need to extract it directly from the target job site using a self-built web scraper. You have to navigate the website’s structure and identify the relevant data points, such as job titles, descriptions, company names, locations, and application links. You are also responsible for handling web scraping challenges like pagination, CAPTCHAs, and dynamic content. Finally, you need to manage data storage and maintain the scraper over time.

- APIs allow you to access job posting data through a third-party provider’s interface. With an API, you send requests to a server and receive the job posting data in a structured format, such as JSON or XML. As such, you can retrieve only the necessary data points without having to manually scrape websites.

- Pre-collected datasets are the simplest way to get job information. These are collections of job posting data that have already been cleaned and organized by the provider. Once you buy a dataset, it’s ready for immediate use. You can usually download job posting datasets in CSV, JSON, or SQL formats, and integrate them with cloud storage platforms like AWS S3 or Google Cloud Storage. Some providers offer subscription-based services where you receive fresh datasets at regular intervals (e.g. monthly or quarterly).
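Once a dataset file is downloaded, using it is mostly a matter of parsing and filtering. Below is a minimal sketch with Python’s standard `csv` module; the inline sample and its column names are hypothetical stand-ins for a real provider file.

```python
import csv
import io

# Stand-in for a downloaded dataset file; column names are hypothetical.
SAMPLE_CSV = """job_title,company_name,location,employment_type,salary_min,salary_max
Software Engineer,Acme,New York,full-time,120000,150000
Marketing Specialist,Globex,London,part-time,,
Data Analyst,Initech,Remote,contract,60000,70000
"""


def load_postings(csv_text: str) -> list[dict]:
    """Parse CSV rows into dicts, turning empty salary cells into None."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        for key in ("salary_min", "salary_max"):
            row[key] = float(row[key]) if row[key] else None
        rows.append(row)
    return rows


def filter_postings(rows, location=None, employment_type=None):
    """Keep rows that match every filter that was provided."""
    return [
        r for r in rows
        if (location is None or r["location"] == location)
        and (employment_type is None or r["employment_type"] == employment_type)
    ]


postings = load_postings(SAMPLE_CSV)
remote = filter_postings(postings, location="Remote")
print([r["job_title"] for r in remote])  # ['Data Analyst']
```

For a real file, you would pass the contents of `open(path, newline="", encoding="utf-8")` instead of the inline string; JSON or Parquet deliveries need a different parser but the same filtering idea.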

Web Scraping vs API vs Dataset

| Method | Description | Required effort | Advantages | Disadvantages |
| --- | --- | --- | --- | --- |
| Web scraping | Gather job data directly from a job site using a self-built web scraper. | High: build and maintain the scraper, navigate website structures, handle challenges like CAPTCHAs and dynamic content. | Full control over data. | Time-consuming, requires technical skills and ongoing maintenance. |
| APIs | Access job data via a third-party provider’s interface by sending requests to a server and receiving data in structured formats like JSON or XML. | Moderate: learn the API documentation, set up request processes, and integrate results into your application. | Simplifies data retrieval, no manual scraping needed, structured data format. | Limited to the data provided by the API, might be costly. |
| Datasets | Use pre-collected and pre-cleaned job posting datasets available for purchase or subscription. | Low: buy, download, and use immediately without additional work. | Ready-to-use data, saves time, often available in multiple formats, easy to integrate with cloud storage platforms. | May not cover specific data, the costliest option, limited flexibility and customization. |

What to Look for in a Job Posting Dataset

When choosing a job posting dataset, there are several things to consider:

- Data volume: a larger dataset means more coverage of the job market. On the other hand, high volume can also mean more data to manage and analyze, so you should assess whether the scope aligns with your use case.

- Location coverage: some datasets focus on a specific region, such as a country or city, while others provide global coverage. If you’re looking for job postings in a specific location or industry, make sure the dataset covers them.

- Delivery frequency: datasets are typically available as a one-time download, but many providers also let you schedule refreshes monthly, quarterly, or at a custom frequency.

- Structure: most datasets come pre-structured, organized into consistent, easily digestible fields. Unstructured data, on the other hand, may require additional processing or cleaning before you can use it for analysis.

- Sources: job postings can come from a variety of platforms, including popular job boards like Indeed or LinkedIn, company websites, or recruitment agencies.

Note that some providers may not disclose these details upfront. In such cases, it can be hard to fully assess the dataset for your needs.

The Best Job Posting Data Providers

1. Coresignal

The largest job posting data provider.

- Available tools: Jobs data API, job posting datasets

- Refresh frequency (datasets): daily, weekly, monthly, or quarterly (depends on the dataset)

- Data formats: JSON, CSV, Parquet

- Pricing model:

– Datasets: one-year contract or one-time purchase

– Data API: subscription

- Pricing structure:

– Datasets: custom

– Data API: credit system (for search and data collection); one credit equals one full record with all available data fields

- Support: contact form, dedicated account manager (for subscribers and dataset users), tech support

- Free trial:

– Datasets: data samples

– Data API: 200 credits for 14 days

- Starting price:

– Datasets: $1,000

– Data API: $49/month

Coresignal is the largest job posting data provider on this list. It offers both an API and datasets.

The Jobs data API covers nearly 400 million public job posting records and is updated every 6 hours. You can get various data points, such as job title, description, seniority, salary, and more. There are two methods to access job posting data: search and collect. The search method lets you query and refine Coresignal’s database with filters. The collect method lets you retrieve records either individually or in bulk (up to 10,000 records in one batch) with just a few clicks.
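The search-then-collect flow maps naturally onto a two-step loop: search returns matching record IDs, and collect fetches full records in batches that respect the 10,000-record limit. The sketch below assumes that shape; `search` and `collect` are hypothetical callables standing in for whatever client you use, not Coresignal’s actual library. It also counts collection credits at one credit per full record, as described in the pricing notes; any credits spent on search calls are not counted here.

```python
from typing import Callable, Iterator

MAX_BATCH = 10_000  # bulk-collection limit quoted above


def chunked(ids: list[int], size: int = MAX_BATCH) -> Iterator[list[int]]:
    """Split record IDs into batches no larger than `size`."""
    for start in range(0, len(ids), size):
        yield ids[start:start + size]


def search_then_collect(
    search: Callable[[dict], list[int]],
    collect: Callable[[list[int]], list[dict]],
    filters: dict,
) -> tuple[list[dict], int]:
    """Run a filtered search, then collect full records batch by batch.

    Returns the records and the collection credits used, assuming one
    credit per full record (search credits are not included).
    """
    ids = search(filters)
    records: list[dict] = []
    for batch in chunked(ids):
        records.extend(collect(batch))
    return records, len(records)


# Fake stand-ins so the sketch runs offline.
def fake_search(filters: dict) -> list[int]:
    return list(range(25_000))


def fake_collect(batch: list[int]) -> list[dict]:
    return [{"id": i} for i in batch]


records, credits = search_then_collect(fake_search, fake_collect, {"title": "data engineer"})
print(len(records), credits)  # 25000 25000
```

Keeping the network calls behind two callables makes it easy to swap in a real HTTP client later and to estimate credit usage before spending any.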

Alternatively, you can get job posting datasets with over 600 million records from four sources: Professional Network, Indeed, Glassdoor, and Wellfound (AngelList) jobs. The datasets are delivered in multiple formats depending on the category you choose. You can select your preferred delivery frequency and get data via links, Amazon S3, Google Cloud, or Microsoft Azure.

Coresignal’s pricing is pretty straightforward: one credit gives access to one complete record, so there are no hidden fees or additional charges.

For more information and performance tests, read our Coresignal review.

2. Bright Data

Provider with a robust job posting data infrastructure.

- Available tools: various datasets and job data APIs, customizable datasets

- Refresh frequency (datasets): one-time, biannual, quarterly, monthly

- Data formats:

– Job data APIs: JSON & CSV

– Datasets: JSON, NDJSON, CSV & XLSX

- Pricing model:

– Web Scraper API: subscription or pay-as-you-go

– Datasets: one-time, biannual, quarterly, or monthly purchase

- Pricing structure: based on the number of records

- Support: 24/7 via live chat, dedicated account manager

- Free trial: 7-day trial for businesses, 3-day refund for individuals

- Starting price:

– Web Scraper API: $1/1K records, or $499/month ($0.85/1K records)

– Datasets: $500 for 200K records ($2.50/1K records)
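The per-1K rates quoted above come down to simple division, which is handy when comparing plans across providers. This sketch reproduces them; it treats each plan as a flat per-1K rate, which is a simplification, so actual billing terms such as minimums or overage are not modeled.

```python
def price_per_thousand(total_price: float, records: int) -> float:
    """Effective price per 1,000 records."""
    return total_price * 1000 / records


def records_for_budget(budget: float, rate_per_thousand: float) -> int:
    """Records a budget buys at a given per-1K rate, rounded down."""
    return int(budget * 1000 / rate_per_thousand)


# Dataset: $500 for 200K records
print(price_per_thousand(500, 200_000))  # 2.5
# $499/month at the quoted $0.85/1K vs. pay-as-you-go at $1/1K
print(records_for_budget(499, 0.85))     # 587058
print(records_for_budget(499, 1.0))      # 499000
```

Multiplying before dividing keeps the dataset figure exact (500,000 / 200,000 = 2.5) instead of passing through a rounded intermediate like 0.0025.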

Bright Data is a strong choice when it comes to getting reliable job posting data – you can choose between various datasets or scrape job postings via API or no-code scrapers.

Starting with datasets, you can choose between four options: LinkedIn job listings, LinkedIn profiles, Indeed job listings, and Glassdoor job listings. Data covers all 50 US states, and you can get updates to your jobs dataset on a daily, weekly, monthly, or custom basis.

Additionally, you can download a 30-record data sample in CSV or JSON format, while the full dataset contains 1,000 records. You can also customize the dataset to your liking by removing, renaming, or filtering fields.

Bright Data also lets you get job data via an API or a no-code interface (a plug-and-play plugin). Its scraper API comes with dedicated endpoints for major job sites, such as LinkedIn, Glassdoor, and Indeed. You can submit up to 20 URLs for real-time scraping, or significantly more when processing requests in batches, depending on the scraper type.

Bright Data also offers useful extras, such as an API playground and helpful documentation, and you get a dedicated account manager if you opt for a subscription.

For more information and performance tests, read our Bright Data review.

3. Oxylabs

Job posting data from major online job advertisers.

- Available tools: Web Scraper API with dedicated endpoints for company websites, various datasets, customizable datasets

- Refresh frequency (datasets): one-time, monthly, quarterly, or custom

- Data formats:

– Web Scraper API: HTML & JSON

– Datasets: XLSX, CSV & JSON

- Pricing model:

– Web Scraper API: based on successful requests

– Datasets: not disclosed

- Pricing structure: subscription

- Support: 24/7 via live chat, dedicated account manager

- Free trial:

– Web Scraper API: one-week trial with 5K results

– Datasets: contact sales

- Starting price:

– Web Scraper API: $49/month ($2/1K results)

– Datasets: from $1,000/month

Oxylabs is another solid choice if you want quality job posting data. Its datasets come from major online job advertisers, like Indeed, Glassdoor and StackShare. Additionally, you can go with the Web Scraper API and choose other popular targets, such as Google Jobs.

The datasets can be delivered to multiple storage options: AWS S3, Google Cloud Storage, SFTP, and others. You can also choose how often to receive refreshed datasets, whether monthly, quarterly, or on a custom schedule.

If you want to scrape job data yourself, use the Web Scraper API: simply send a request with the required parameters and the target URL. You’ll then receive the results in HTML or JSON format. Results can also be delivered via API or directly to your cloud storage bucket (AWS S3 or GCS). The scraper includes features like a custom parser, web crawler, and scheduler.
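Here is a minimal sketch of such a request using only Python’s standard library. The endpoint URL and the `"source": "universal"` payload value are assumptions based on Oxylabs’ public realtime integration docs, so verify both against the current documentation; the credentials and target URL are placeholders. The code only prepares the request and does not send it.

```python
import base64
import json
import urllib.request

# Assumed realtime endpoint; confirm in Oxylabs' documentation.
OXYLABS_ENDPOINT = "https://realtime.oxylabs.io/v1/queries"


def build_scrape_request(username: str, password: str, target_url: str) -> urllib.request.Request:
    """Prepare (but do not send) a Web Scraper API request for one job page."""
    payload = {
        "source": "universal",  # assumed generic source for arbitrary URLs
        "url": target_url,
    }
    # HTTP Basic auth with the API user's credentials.
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        OXYLABS_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


req = build_scrape_request("USERNAME", "PASSWORD", "https://www.example.com/jobs?q=analyst")
print(req.get_method(), req.full_url)  # POST https://realtime.oxylabs.io/v1/queries
# To send it: urllib.request.urlopen(req, timeout=180)
```

A generous timeout is worth setting because real-time scraping of a job page can take a while; the parsed result would come back in the JSON response body.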

The API includes the OxyCopilot assistant, which turns natural language instructions into API code for Python, Node.js, and more. As a premium provider, Oxylabs offers some of the best customer support on the market, along with a dedicated account manager and thorough documentation.

For more information and performance tests, read our Oxylabs review.

4. Apify

The largest pre-made job data template provider.

- Available tools: Actors (individual APIs), plus the ability to develop custom ones

- Refresh frequency: custom, via a monitoring Actor

- Data formats: JSON, CSV, XML, RSS, JSONL & HTML table

- Pricing model: based on usage

- Pricing structure: subscription

- Support: contact form

- Free trial: a free plan with $5 in platform credits is available

- Price: custom (rental, or pay per result, event, or usage), or $49/month

Apify offers hundreds of pre-made templates for job sites like LinkedIn, Workable, Indeed, and others. These templates work as APIs, which the provider calls Actors.

Actors are cloud-based, serverless programs that perform specific tasks based on predefined scripts. They come with an intuitive interface and flexible configuration options, and you can run them either locally or in the cloud.

Apify lets you handle incoming requests without complex setups or deep technical skills, much like a standard API server. When you run an Actor, its results are stored in a dataset, which you can export in multiple formats, such as JSON, CSV, and others.
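To run an Actor programmatically and export its results in one call, you can build a request URL like the one below. This is a sketch under assumptions: the `run-sync-get-dataset-items` path, the `token` and `format` query parameters, and the `~` separator in Actor IDs reflect our understanding of Apify’s API v2 and should be checked against its API reference. The Actor name `someuser/indeed-jobs-scraper` is hypothetical.

```python
from urllib.parse import quote, urlencode

# Assumed Apify API v2 base path for Actors.
APIFY_BASE = "https://api.apify.com/v2/acts"


def actor_run_url(actor_id: str, token: str, fmt: str = "json") -> str:
    """Build a URL that runs an Actor and returns its dataset items.

    Store listings write Actor IDs as "username/actor-name"; the API path
    is assumed to use "~" in place of "/".
    """
    path_id = quote(actor_id.replace("/", "~"), safe="~")
    query = urlencode({"token": token, "format": fmt})
    return f"{APIFY_BASE}/{path_id}/run-sync-get-dataset-items?{query}"


url = actor_run_url("someuser/indeed-jobs-scraper", "MY_TOKEN", fmt="csv")
print(url)
# https://api.apify.com/v2/acts/someuser~indeed-jobs-scraper/run-sync-get-dataset-items?token=MY_TOKEN&format=csv
```

The Actor’s own input (search terms, locations, and so on) would be sent as a JSON body in a POST to this URL; its fields depend on the specific Actor, so they are not shown here.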

As for pricing, you have the option to pay for individual Actors based on their specific costs, or you can subscribe to a monthly plan for more comprehensive access.

5. ScraperAPI

Job posting data from Google Jobs.

- Available tools: Google Jobs API

- Data formats: JSON and CSV

- Pricing model: based on credits

- Pricing structure: subscription

- Support: email

- Free trial: 1K free credits/month, 7-day trial with 5K API credits

- Price: custom

ScraperAPI offers an API with a dedicated endpoint for Google Jobs result pages.

The endpoint fetches job listings directly from Google’s search results and returns them as structured JSON. The API handles both single and multiple query requests, which makes it versatile for different use cases. For a single query, you send a POST request that includes parameters such as your API key, the search query, and other settings. For batch requests, the API can process multiple queries in one go.

Additionally, you can specify the Google domain to scrape (e.g., google.com, google.co.uk) and adjust settings for geo-targeting, query encoding, result ordering, and more. If you want to try the Google Jobs endpoint, you can create a free ScraperAPI account to get 5,000 API credits.
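The request bodies for single and batch queries can be sketched as plain dictionaries. The field names below (`api_key`, `query`, `queries`, `tld`, `country_code`) are hypothetical, modeled on the parameters described above; ScraperAPI’s Google Jobs documentation is the authority on the real names and endpoint. The helper that turns a domain like `google.co.uk` into `co.uk` is our own illustration.

```python
import json


def tld_from_domain(domain: str) -> str:
    """Turn a Google domain such as "google.co.uk" into its TLD part "co.uk"."""
    prefix = "google."
    if not domain.startswith(prefix):
        raise ValueError(f"not a Google domain: {domain!r}")
    return domain[len(prefix):]


def single_query_body(api_key: str, query: str,
                      domain: str = "google.com", country_code: str = "us") -> dict:
    """Body for one query (hypothetical field names)."""
    return {
        "api_key": api_key,
        "query": query,
        "tld": tld_from_domain(domain),   # which Google domain to scrape
        "country_code": country_code,     # geo-targeting
    }


def batch_query_body(api_key: str, queries: list[str],
                     domain: str = "google.com", country_code: str = "us") -> dict:
    """Body for several queries sent in one request (hypothetical shape)."""
    return {
        "api_key": api_key,
        "queries": list(queries),
        "tld": tld_from_domain(domain),
        "country_code": country_code,
    }


single = single_query_body("API_KEY", "data analyst london", domain="google.co.uk", country_code="gb")
batch = batch_query_body("API_KEY", ["data analyst", "ux designer"])
print(json.dumps(single))
```

Building the bodies separately from the HTTP call keeps the geo-targeting and domain logic testable without spending credits.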

ScraperAPI’s pricing looks competitive at first glance, but it uses a credit-based system: the number of credits each request consumes depends on the complexity of the target website, and Google is typically one of the harder targets.

6. Zyte

Job posting data API for simple tasks.

- Available tools: general-purpose API with universal job parameters

- Data formats: JSON and CSV

- Pricing model: based on optional features

- Pricing structure: pay-as-you-go, subscription

- Support: available via an asynchronous contact method

- Free trial: $5 credit

- Price: custom

Zyte API allows you to get job posting data from various websites. With fields like jobPosting (for the details of the job listings) and jobPostingNavigation (for navigating through multiple job postings), the provider allows you to extract structured data from job boards and company websites with minimal setup.

The API provides detailed job data, including titles, descriptions, salary, publication dates, and location, returned in a structured JSON format. Zyte’s jobPostingNavigation parameter helps you manage pagination: it returns the job posting links found on a listing page along with a link to the next page, so you can crawl listings across multiple pages. The API lets you either send an HTTP request or automate a browser to collect data.
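A listing-page crawl built on jobPostingNavigation can be sketched as a loop that collects posting URLs and follows next-page links. This is a sketch under assumptions: `fetch` is a hypothetical callable standing in for a Zyte API call made with `{"url": page_url, "jobPostingNavigation": true}`, and the response shape used here (`items` with `url` fields, `nextPage` with a `url`) is our reading of Zyte’s docs and should be verified. Fake pages let it run offline.

```python
from typing import Callable, Optional


def crawl_job_urls(fetch: Callable[[str], dict], start_url: str, max_pages: int = 10) -> list[str]:
    """Follow listing pages via nextPage links and collect job posting URLs.

    `fetch(url)` must return the parsed JSON response for one listing page.
    `max_pages` guards against endless pagination.
    """
    urls: list[str] = []
    page_url: Optional[str] = start_url
    pages = 0
    while page_url and pages < max_pages:
        nav = fetch(page_url).get("jobPostingNavigation", {})
        urls.extend(item["url"] for item in nav.get("items", []))
        page_url = (nav.get("nextPage") or {}).get("url")  # None on the last page
        pages += 1
    return urls


# Offline stand-in for two listing pages (hypothetical URLs).
FAKE_PAGES = {
    "https://jobs.example.com/?page=1": {"jobPostingNavigation": {
        "items": [{"url": "https://jobs.example.com/1"}, {"url": "https://jobs.example.com/2"}],
        "nextPage": {"url": "https://jobs.example.com/?page=2"},
    }},
    "https://jobs.example.com/?page=2": {"jobPostingNavigation": {
        "items": [{"url": "https://jobs.example.com/3"}],
    }},
}

print(crawl_job_urls(FAKE_PAGES.__getitem__, "https://jobs.example.com/?page=1"))
# ['https://jobs.example.com/1', 'https://jobs.example.com/2', 'https://jobs.example.com/3']
```

Each collected URL would then be requested with `jobPosting` enabled to get the full listing details; each listing page is still its own request.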

Zyte’s pricing is highly customizable, and the provider has a dashboard tool that helps estimate the cost per request. While it’s affordable for basic scraping configurations, the price can increase if you need features like JavaScript rendering.

For more information and performance tests, read our Zyte review.