10 Years of Scrapy: Interview with Shane Evans

Scrapy’s creator discusses the past, present, and future of the popular web scraping framework.

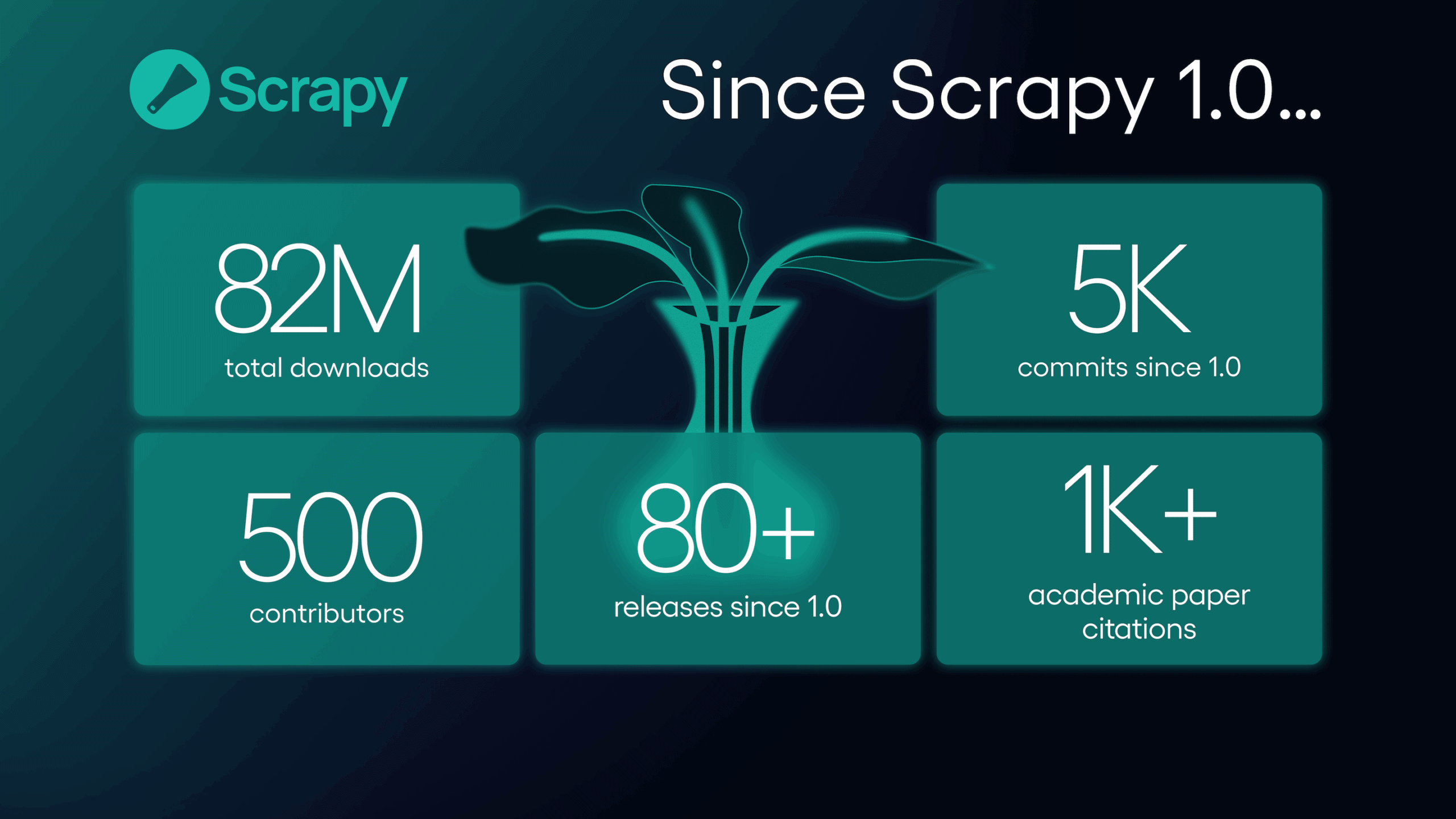

This June marks 10 years since Scrapy’s official 1.0 release. Born to address a company’s internal needs, this open-source framework has become one of the pillars of web scraping, supported by active maintainers and a lively community.

We sat down with Scrapy’s creator and the CEO of Zyte, Shane Evans, to talk about the framework’s roots, evolution over the years, and its role in the modern landscape of web data collection.

Today, Scrapy is considered one of the staples of web scraping. But it has come a long way to get here. How did the framework originate?

“Scrapy started as a practical solution to a real-world problem – not as a general-purpose framework, but as a tool to help a startup I was working with. Back in 2007, my team at Mydeco, a furniture comparison site in London, needed a better way to collect structured product data from across the web. Existing solutions didn’t cut it, so we built our own. That internal tool became the earliest version of Scrapy.

What made it stand out was its focus on being production-grade from the start – built for scale, performance, and developer productivity. It wasn’t just a hacky script; we designed it to run serious crawls reliably and efficiently. That DNA still shows today.”

What was the web scraping ecosystem like when Scrapy’s development began?

“In 2007–2008, scraping was still a niche activity. Most developers were rolling their own scripts with urllib or BeautifulSoup, or hacking together brittle solutions with regex. There were no purpose-built frameworks, and certainly nothing that supported asynchronous crawling, request scheduling, or middleware extensibility out of the box.

Scrapy changed that. It brought a structured, Pythonic approach to web scraping and introduced concepts like spiders, item pipelines, and a built-in scheduler – concepts that made large-scale, production-grade data collection far more manageable.”

What are the main underlying principles behind Scrapy? Have they changed over time?

“At its core, Scrapy has always been about flexibility, speed, and modularity. From day one, we built it to be extensible – developers could plug in custom logic at nearly every step of the data collection pipeline. That principle hasn’t changed.
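To make the extensibility point concrete, here is a minimal sketch of one such plug-in point, an item pipeline. In Scrapy, a pipeline is a plain Python class with a `process_item` method that receives each scraped item. The class name, the `price` field, and the cleaning rules below are illustrative assumptions, not something described in the interview.

```python
class PriceNormalizerPipeline:
    """Hypothetical item pipeline: turns a scraped price string into a float."""

    def process_item(self, item, spider=None):
        # "price" is an assumed field name; items without it get price=None.
        raw = str(item.get("price", "")).strip()
        # Strip a currency symbol and thousands separators before converting.
        cleaned = raw.replace("£", "").replace(",", "")
        item["price"] = float(cleaned) if cleaned else None
        # Returning the item hands it on to the next pipeline in the chain.
        return item


if __name__ == "__main__":
    pipeline = PriceNormalizerPipeline()
    print(pipeline.process_item({"name": "Oak table", "price": "£1,299.00"}))
```

In a real project, a class like this would be switched on through the `ITEM_PIPELINES` setting, for example `{"myproject.pipelines.PriceNormalizerPipeline": 300}`, where the module path is a placeholder.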

What has evolved is how Scrapy fits into the broader Python and data ecosystems. Over time, it’s gained better integration with tools for storage, observability, and even browser automation. But the original goal – enabling developers to build clear, maintainable, and scalable crawlers – still drives the project today.”

Building Scrapy for so long has been a cumulative process. Are there any particular milestones you’d like to highlight?

“Definitely. A few milestones stand out to me:

- 2008 – We open-sourced Scrapy, making it one of the first dedicated scraping frameworks available on GitHub.

- 2015 – Scrapy 1.0 was released, marking a major step in API stability and production readiness for a fast-growing user base.

- Python 3 support – This was a huge, community-driven effort that ensured Scrapy evolved alongside the Python language.

- Adoption by major research, journalism, and enterprise teams – While hard to quantify, seeing Scrapy power everything from investigative journalism to AI pipelines has been incredibly validating.”

You’re the creator of Scrapy and CEO of Zyte, but Scrapy is also an open-source project with a large community behind it. What is the community’s role and relationship with the framework?

“Scrapy wouldn’t be what it is without its community. While Zyte has been a major contributor and maintainer over the years, the broader open-source community has played a huge role in pushing features forward, flagging edge cases, improving documentation, and keeping the framework Pythonic and relevant.

It’s a true collaborative ecosystem. We guide the roadmap and review contributions, but many of the best ideas and improvements have come from contributors working on real-world scraping systems and bringing their learnings back into the project.”

Nowadays, web scraping professionals have a large variety of tools – and even categories of tools – at their disposal. Where do you think Scrapy stands in the current landscape?

“Scrapy continues to stand tall as the go-to framework for developers who want full control and customization within an open-source environment. It’s not trying to be a no-code solution or an all-in-one cloud service. It’s built for teams who care about performance, extensibility, and long-term maintainability – teams who often need to integrate custom logic at every layer of their crawlers.

That said, Scrapy integrates well with modern tools. It can be paired with headless browsers, containerized, or run in serverless workflows. In many serious scraping operations, Scrapy remains the reliable backbone.”

As we celebrate Scrapy’s impressive anniversary, could you share some future plans for the project?

“The focus ahead is on modernizing Scrapy while staying true to its original principles. That means enhancing support for modern web technologies (especially JavaScript-heavy sites), improving integrations with headless browsers like Playwright, and continuing to streamline the developer experience – particularly around configuration and observability.

We’re also thinking about how Scrapy can stay relevant in a world increasingly shaped by AI. Smarter retry logic, adaptive throttling, and LLM-assisted extraction are all possibilities we’re exploring. The goal is to ensure Scrapy remains sharp and capable for whatever the future of web scraping demands.”
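For context on what exists today, Scrapy already exposes basic retry and throttling controls through project settings. The fragment below is a sketch of a `settings.py` excerpt using the built-in retry middleware and AutoThrottle extension; the specific values are illustrative assumptions, and it does not represent the future features mentioned above.

```python
# Hypothetical settings.py excerpt; values are examples, not recommendations.

# Built-in retry middleware: retry failed requests a limited number of times.
RETRY_ENABLED = True
RETRY_TIMES = 3  # retries per request, in addition to the first attempt
RETRY_HTTP_CODES = [500, 502, 503, 504, 429]

# AutoThrottle extension: adjusts the download delay based on observed latency.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5.0          # initial delay in seconds
AUTOTHROTTLE_MAX_DELAY = 60.0           # upper bound on the delay
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0   # average parallel requests per remote server
```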