Bright Data’s Impact Report: Tech Highlights

Cache Proxies, Site Health Monitor, and BrightBot are some of the company’s new tools to scrape responsibly.


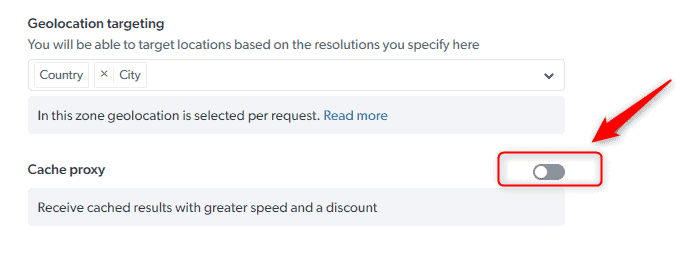

Cache Proxies

The first development is an optional feature that Bright Data offers for its proxy networks. Instead of fetching each page anew, the provider can return a cached copy of the page if one of its clients has accessed the URL recently. This should reduce the load on websites and make responses up to 20 times faster.

To avoid serving outdated information, Bright Data has set a cutoff period of two hours. It also incentivizes users with a 5% discount on all requests that go through this proxy network. In exchange, customers have to install the provider's certificate, which lets Bright Data capture passing traffic.
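The article doesn't describe how the cache is implemented, so the following is only a minimal sketch of the general idea, assuming a simple in-memory store keyed by URL: a page fetched for one client is reused for others until it is more than two hours old. `PageCache`, `fetch`, and `clock` are hypothetical names used for illustration.

```python
import time
from typing import Callable, Dict, Tuple

# Two-hour freshness window, taken from the cutoff described above.
MAX_AGE_SECONDS = 2 * 60 * 60


class PageCache:
    """Hypothetical shared page cache with a fixed freshness window (illustration only)."""

    def __init__(
        self,
        fetch: Callable[[str], str],
        clock: Callable[[], float] = time.monotonic,
        max_age: float = MAX_AGE_SECONDS,
    ) -> None:
        self._fetch = fetch      # performs the real request to the target website
        self._clock = clock      # injectable so the example can simulate elapsed time
        self._max_age = max_age
        self._entries: Dict[str, Tuple[float, str]] = {}  # url -> (fetched_at, body)
        self.origin_requests = 0  # how many requests actually reached the website

    def get(self, url: str) -> str:
        now = self._clock()
        cached = self._entries.get(url)
        if cached is not None and now - cached[0] < self._max_age:
            return cached[1]     # fresh copy: the website is not contacted
        body = self._fetch(url)  # missing or stale: fetch from the origin
        self.origin_requests += 1
        self._entries[url] = (now, body)
        return body


# Usage: simulate three requests for the same URL from different clients.
fake_now = [0.0]
cache = PageCache(fetch=lambda url: f"<html>{url}</html>", clock=lambda: fake_now[0])
cache.get("https://example.com/")   # first request reaches the site
fake_now[0] = 1 * 60 * 60           # one hour later: served from the cache
cache.get("https://example.com/")
fake_now[0] = 3 * 60 * 60           # three hours after the first fetch: stale, refetched
cache.get("https://example.com/")
print(cache.origin_requests)        # 2
```

Only repeat requests that arrive within the window spare the website a hit, which is why the benefit depends on many clients requesting the same pages.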

The feature has the potential to be very useful. However, it depends on a large volume of requests going to the same pages. We're curious how many websites will attract enough traffic to meet the two-hour recency threshold.

Site Health Monitor

The second development affects proxies as well as Bright Data’s web scrapers. As the name suggests, Site Health Monitor automatically tracks the uptime of websites that clients access through the provider’s infrastructure. Its functionality comprises three elements:

- Monitoring. The tool periodically pings websites from various locations and measures the round-trip time.

- Throttling. When the monitor detects deviations, it throttles requests to that website.

- Learning. The monitor gradually adapts throttling patterns based on the website’s usage limits.
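Bright Data hasn't published how the monitor decides when to throttle, so the following is a hypothetical sketch of the three steps above. It assumes an exponentially weighted moving average (EWMA) of round-trip times as the learned baseline and a delay that doubles on each slow sample. `SiteThrottle` and all of its parameters are illustrative names, not Bright Data's.

```python
from dataclasses import dataclass


@dataclass
class SiteThrottle:
    """Hypothetical per-site throttle; not Bright Data's actual algorithm."""

    alpha: float = 0.2            # weight of each healthy RTT sample in the baseline
    slowdown_factor: float = 1.5  # RTT above baseline * factor counts as a deviation
    min_delay: float = 0.1        # pause (seconds) between requests to a healthy site
    max_delay: float = 30.0       # upper bound on the pause (seconds)
    baseline_rtt: float = 0.0     # learned "normal" round-trip time (seconds)
    delay: float = 0.1            # current pause before the next request (seconds)

    def record_rtt(self, rtt: float) -> float:
        """Feed one round-trip-time sample and return the delay to apply next."""
        if self.baseline_rtt == 0.0:
            # Monitoring: the first sample seeds the baseline.
            self.baseline_rtt = rtt
        elif rtt > self.baseline_rtt * self.slowdown_factor:
            # Throttling: the site is slower than usual, so double the delay.
            self.delay = min(self.delay * 2, self.max_delay)
        else:
            # Learning: fold the healthy sample into the baseline, relax the delay.
            self.baseline_rtt = (1 - self.alpha) * self.baseline_rtt + self.alpha * rtt
            self.delay = max(self.delay - 0.1, self.min_delay)
        return self.delay


# Usage: RTTs (seconds) measured for one site; the spike triggers throttling.
throttle = SiteThrottle()
delays = [round(throttle.record_rtt(rtt), 2) for rtt in [0.20, 0.21, 0.19, 0.80, 0.85, 0.20]]
print(delays)  # [0.1, 0.1, 0.1, 0.2, 0.4, 0.3]
```

Doubling the delay on slowdowns and relaxing it in small steps backs off quickly when a site struggles and recovers cautiously. This sketch only updates the baseline on healthy samples, so a real system would also need a way to adapt when a site becomes permanently slower.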

Site Health Monitor should be a big quality-of-life improvement for webmasters. Zyte, another major data collection company, also uses request throttling to scrape politely.

BrightBot

The third and most interesting development is Bright Data's own web crawler, BrightBot. The provider designed it as a web scraping counterpart to search engine spiders: it accesses pages with the webmasters' knowledge.

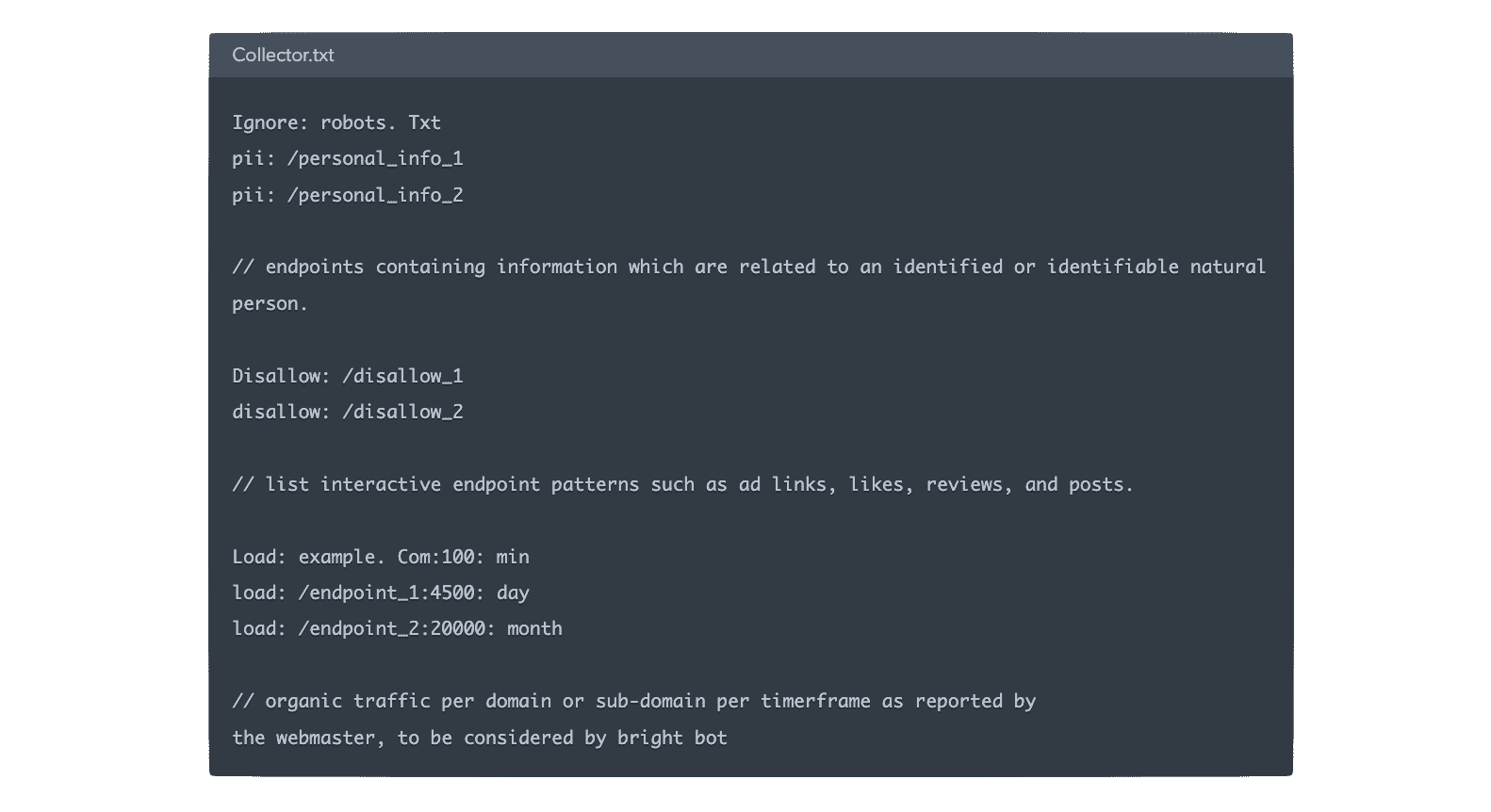

In addition to robots.txt, Bright Data wants websites to add a separate file called collectors.txt. It would tell web scrapers which pages and endpoints contain personal information, which pages shouldn't be scraped, and when the site experiences peak load.
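The article doesn't specify the collectors.txt syntax, so this sketch covers only the robots.txt side: how a well-behaved crawler can check existing rules with Python's standard `urllib.robotparser` module. The robots.txt content, the `ExampleBot` user agent, and the example.com URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for an example site (illustration only).
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from text instead of fetching a URL

# A polite crawler checks each URL before requesting it.
print(parser.can_fetch("ExampleBot", "https://example.com/account/settings"))  # False
print(parser.can_fetch("ExampleBot", "https://example.com/products"))          # True
# Crawl-delay tells the crawler how many seconds to wait between requests.
print(parser.crawl_delay("ExampleBot"))                                        # 5
```

In a real crawler, you would call `set_url()` and `read()` to download the site's live robots.txt instead of parsing a string.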

Bright Data has created a console where webmasters can submit their collectors.txt file and check round-trip time metrics for their website. This suggests that BrightBot may also power the Site Health Monitor. Website owners who opt to whitelist the provider's datacenter IPs can also receive an unspecified data collection credit.

The idea sounds nice, but on closer inspection it raises several concerns:

- Bright Data reserves the right to reject websites from the platform.

- Webmasters currently have to use Bright Data's proprietary dashboard, which prevents other web scraping companies from accessing the collectors.txt file.

- Bright Data doesn't make clear whether its customers will have to follow the instructions when using its data collection infrastructure.

- Given the anonymous nature of web scraping, it will be hard to police whether the provider itself adheres to the instructions.

Wrapping Up

It’s great to see a major web data platform taking measures to ensure website-friendly data collection. Some, like the Site Health Monitor, seem very useful. Others, namely BrightBot, have PR value but raise many questions about their implementation. In any case, more considerate web data extraction benefits all parties involved.